A technique for automatically scoring open

advertisement

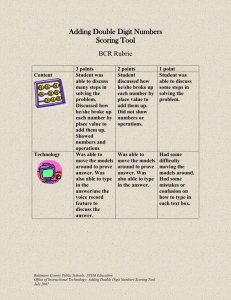

A technique for automatically scoring open-ended concept maps

by Ellen M. Taricani and Roy B. Clariana
The Pennsylvania State University
College of Education

Editorial contact: Dr. Roy Clariana, Penn State University, 1510 S. Quebec Way #42, Denver, CO 80231, RClariana@psu.edu, 303-369-7071

Educational Technology Research and Development, 54, 61-78.

Running Head: Scoring open-ended concept maps

Abstract

This descriptive investigation seeks to confirm and extend a technique for automatically scoring concept maps. Sixty unscored concept maps from a published dissertation were scored using a computer-based technique adapted from the Pathfinder network approach of Schvaneveldt (1990) and colleagues. The scores were based on link lines drawn between terms and on the geometric distances between terms. These concept map scores were compared to terminology and comprehension posttest scores. Concept map scores derived from link data were more related to terminology, whereas concept map scores derived from distance data were more related to comprehension. A step-by-step description of the scoring technique is presented and the next steps in the development process are discussed.

Keywords: computer-based assessment, Pathfinder networks, technology tools

Currently there is considerable interest in the use of concept maps both to promote and to measure meaningful learning (Shavelson, Lang, & Lewin, 1994). Concept maps are sketches or diagrams that show the relationships among a set of terms by the positions of the terms and by labeled lines and arrows connecting some of the terms.
Guided by Ausubel’s (1968) theory, teachers and researchers, mainly in science education, have considered concept hierarchy, proposition correctness, and cross-concept links as the most salient features of concept maps (Rye & Rubba, 2002). Concept maps are most often scored by raters using rubrics to quantify content, meaning, and visual arrangement. Extensive empirical research has shown that scoring approaches with the highest reliability and criterion-related validity compare specific features in student concept maps to those in expert referent maps (Ruiz-Primo & Shavelson, 1996). Because of the nature of the information in concept maps and the idiosyncrasies of individual maps, raters almost necessarily must be subject-matter experts in the content of the map. Also, scoring concept maps by hand takes time and experience. McClure, Sonak, and Suen (1999) reported that raters can be overwhelmed by complex scoring rubrics. After comparing six concept map scoring approaches, they concluded that the reliability of concept map scores decreases substantially as the cognitive complexity of the scoring task increases. Taken together, these issues militate against the casual use of concept maps for assessment in the classroom with all but the simplest scoring rubrics.

Several large-scale projects are underway that seek to automate concept map scoring (Cañas, Coffey, Carnot, Feltovich, Hoffman, Feltovich, & Novak, 2003; Herl, O'Neil, Chung, & Schacter, 1999; Luckie, 2001). This investigation considers a concept map scoring technique, based on Pathfinder associative networks (Schvaneveldt, Dearholt, & Durso, 1988), that does not require raters.

Pathfinder Associative Networks

Pathfinder networks (PFNets) are a well-established method for representing knowledge that has been applied in a number of domains of interest to instructional designers (Jonassen, Beissner, & Yacci, 1993).
PFNets are 2-dimensional graphic network representations of a matrix of relationship data in which concepts are represented as nodes and relationships as unlabeled links connecting the nodes. PFNets visually resemble concept maps, but without linking terms.

There are three steps in the Pathfinder approach. In Step 1, raw proximity data are collected, typically using a word-relatedness judgment task. Participants are shown a set of terms two at a time, and judge the relatedness of each pair of terms on a scale from one (low) to nine (high). The number of pair-wise comparisons that participants must make is (n² – n)/2, with n equal to the total number of terms in the list. In Step 2, a software tool called Knowledge Network and Orientation Tool for the Personal Computer (KNOT, 1998) is used to reduce the raw proximity data into a PFNet representation. Pathfinder uses an algorithm to determine a least-weighted path that links all of the terms. The rules for calculating the least-weighted path can be adapted by adjusting parameters that reduce or prune the number of links in the resulting PFNet (refer to Dearholt & Schvaneveldt, 1990). The resulting PFNet is based on a data reduction approach that is purported to represent the most salient relationships in the raw proximity data. In Step 3, the similarity of the participant’s PFNet to an expert referent PFNet is calculated, also using KNOT software (Goldsmith & Davenport, 1990). The total number of derived links shared by two PFNets is called links in common. Common is a non-negative integer that ranges from zero to the maximum number of links in the referent PFNet.

How can this Pathfinder approach be used to score concept maps? Several investigators have shown that concept map-like tasks capture some of the same types of relational information as word-relatedness judgment tasks (Jonassen, 1987; McClure et al., 1999; Rye & Rubba, 2002; Schau, Mattern, Zeilik, & Teague, 1999).
Compared to word-relatedness judgment tasks, concept maps are relatively fast to complete and perhaps more authentic (Shavelson et al., 1994). In the present investigation, concept maps provide an alternative to word-relatedness judgment tasks in Step 1 for obtaining raw proximity data, while Steps 2 and 3 are conducted in the conventional way. Thus, the main contribution of this investigation is in clearly describing how components of a concept map can be converted into raw proximity data in Step 1, and then describing the validity and reliability of the scores obtained.

An Automatic Technique for Scoring Concept Maps

What information components of concept maps can be collected automatically? Concepts, links, and linking terms can be counted in various ways. In addition, following Kinchin (2000), Yin, Vanides, Ruiz-Primo, Ayala, and Shavelson (2004) have proposed that map structure complexity, as determined by examining the overall visual layout of the map, should also be considered. Automatically measuring these components of concept maps is easier with closed-ended concept mapping tasks, where the student is provided with a predefined list of concepts and linking terms, as in the concept map scoring software used by Herl et al. (1999). However, many investigators refer to open-ended concept mapping, where participants may use any concepts and linking terms in their maps, as the gold standard for capturing students’ knowledge structures (McClure et al., 1999; Ruiz-Primo, Schultz, Li, & Shavelson, 1999; Yin et al., 2004, p. 24). But automatically scoring open-ended concept maps is considerably more difficult.

Clariana, Koul, and Salehi (in press) piloted a technique for scoring open-ended concept maps. Practicing teachers enrolled in graduate courses constructed concept maps on paper while researching the topic, “the structure and function of the human heart and circulatory system” online.
Participants were given the online addresses of five articles that ranged in length from 1,000 to 2,400 words but were encouraged to view additional resources. After completing their research, participants then used their concept map as an outline to write a 250-word text summary of this topic (see Clariana, 2003). Computer software tools (Clariana, 2002) were used to measure the geometric distances between terms in the concept maps, referred to as distance data, and to count the link lines that connected terms, referred to as link data (see Figure 1). Using Pathfinder KNOT software, the raw distance and link data were converted into PFNets and then were compared to an expert’s PFNets to obtain similarity scores. Five pairs of raters using rubrics also scored all of the concept maps and text summaries. The correlations (Pearson r) of the rater-scored concept maps were 0.36 with concept map link-based scores, 0.54 with concept map distance-based scores, and 0.49 with the rater-scored text summaries. The correlations of the rater-scored text summaries were 0.76 with concept map link-based scores and 0.71 with concept map distance-based scores. The authors concluded that these “automatically derived concept map scores can provide a relatively low-cost, easy to use, and easy to interpret measure of students’ science content knowledge.”

Figure 1. The link and distance data arrays of a simple map. (The figure shows a seven-term map with the terms left atrium, lungs, oxygenate, pulmonary artery, pulmonary vein, deoxygenate, and right ventricle, labeled a through g, beside its lower-triangle link array and distance array.)

It is important to note that “link line” does not correspond to the term “proposition” used in the concept map literature. A proposition is the combination of two concepts (e.g., subject–predicate) and a linking term (e.g., verb) that describes the relationship between the two concepts. For example, in the proposition, “the aorta is a blood vessel”, “aorta” and “blood vessel” are concepts and “is a” is the linking term. Typically, propositions are scored based on correctness, which includes deciding whether the linking term is valid and significant for that context. Recently, Harper, Hoeft, Evans, and Jentsch (2004) reported that the correlation between simply counting link lines and actually scoring correct and valid propositions in the same set of maps was r = 0.97, suggesting that the substantial extra time and effort required to specify and hand-score all possible linking terms adds little additional information over just counting link lines.

Thus, the approach used by Clariana et al. (in press) is a significant departure from most concept map scoring approaches because it considers link lines, not proposition correctness. In addition, their technique uses the relative spatial location of concepts to communicate hierarchical and coordinate concept relations (Robinson, Corliss, Bush, Bera, & Tomberlin, 2003, p. 26). This approach is founded on previous research on free association norms (Deese, 1965), on structural analyses of text propositions (Frase, 1969), on the Matrix Model of Memory (Humphreys, Bain, & Pike, 1989; Pike, 1984), and on current neural network models of cognition (McClelland, McNaughton, & O'Reilly, 1995). For example, in an untrained neural network, concept units (e.g., subjects, predicates, and linking words) are randomly associated, but as the network learns by experiencing many propositions, structural relationships emerge.
Elman (in press) has shown that verbs (linking words) and nouns (subjects–predicates) separate into two clusters, and then nouns sub-cluster based on their meaning (e.g., animate and inanimate). The present investigation assumes that the distances between concept terms in concept maps capture aspects of this underlying fundamental concept structure (e.g., subject–predicate associations). In addition, distance data may provide a direct measure of what Yin et al. (2004) call map structure complexity.

In a follow-up study, Clariana and Poindexter (2004) used the same Pathfinder scoring technique but asked participants to draw network maps rather than concept maps. Network maps are like concept maps, except that there are no linking terms. The mapping directions specifically directed the participants to use spatial closeness to show relationships and intentionally deemphasized the use of link lines. Participants completed one of three print-based text lessons on the heart and circulatory system. The three lesson treatments included adjunct constructed response questions, scrambled-sentences, and a reading only control. Participants completed three multiple-choice posttests that assessed identification, terminology, and comprehension and then were handed a list of 25 preselected terms and were asked to draw a network map. The adjunct question treatment was significantly more effective than the other lesson treatments for the comprehension outcome, and no other treatment comparisons were significant. Scores based on network map link data (relative to distance data) were more related to terminology, with Pearson’s r = .77 compared to r = .69, while scores based on network map distance data (relative to link data) were more related to comprehension, with Pearson’s r = .71 compared to r = .53.
Thus the geometric distances between terms related more to the broader processes and functions of the heart and circulatory system, while the links drawn to connect terms related more to verbatim knowledge from the lesson text covering facts, terminology, and definitions.

The purpose of the present descriptive investigation is to confirm and extend these two previous experimental studies involving this computer-based technique for scoring open-ended concept maps. The ultimate goal of this line of research is to develop a software tool that allows a learner to create and save a concept map and then automatically score the map relative to an archived expert referent map, receiving specific feedback on their maps. One necessary component of this software tool is the mathematical approach used to convert raw data into scores in Step 2. Therefore, this investigation compares multiple data reduction approaches for forming PFNets. The resulting scores are compared to traditional multiple-choice posttests that measure terminology and comprehension of the lesson content as a measure of the concurrent criterion-related validity of the various concept map scores.

Method

A recent dissertation that used concept maps as an instructional treatment provides an ideal and convenient existing data set for the purposes of the present investigation (Taricani, 2002). Though these are previously published data, the present investigation does not reexamine the original research questions. Further, this computer-based scoring method was not available at the time of the original publication, and the concept maps were not scored by raters in that study.
In Taricani’s dissertation, undergraduate students were randomly assigned to one of five treatment conditions including a learner-generated concept map treatment with feedback, a learner-generated concept map treatment without feedback, a partially completed fill-in-the-blank concept map treatment with feedback, a partially completed fill-in-the-blank concept map treatment without feedback, and finally a reading-only, no map or feedback control treatment. Of these five, only the first two treatments involved creating a concept map, so only these two treatments are included in the present investigation.

Participants

Participants were freshman students at a large northeastern university (n = 60) recruited as volunteers from both science and non-science courses. They were randomly assigned to either the learner-generated concept map treatment with feedback (Feedback) or the learner-generated concept map treatment without feedback (No Feedback). The No Feedback treatment group had 17 males and 13 females and the Feedback group, 12 males and 18 females. Participation was voluntary and participants were rewarded with either extra course credit or pizza and ice cream for their participation but not for performance.

Materials

The print-based instructional text was a 1,900-word passage on the human heart developed by Dwyer (1972) called “The human heart: Parts of the heart, circulation of blood, and cycle of blood pressure.” The text dealt with the parts of the heart and the internal processes that occur during the systolic and diastolic phases. The complexity of the information provided was suitable for these participants' general knowledge and comprehension levels.

Following the approach used by Novak and Gowin (1984), a two-page lesson on how to create a concept map was developed (available from Taricani, 2002, pp. 122-124). This two-page lesson included a description of concept mapping and an example of a hierarchical concept map.
A short paragraph was presented that contained a familiar scenario about a student who took a walk to the campus library. The walk scenario was selected as a metaphor of blood flow through the heart (e.g., the student moves from point-to-point going past various buildings along the way and blood flows from point-to-point passing through various components along the way). After reading this paragraph, participants were asked to draw a concept map of the library walk scenario on a blank sheet of paper as practice. To foreshadow the lesson treatment, feedback in the form of an instructor-prepared hierarchical concept map of the library walk was given to the Feedback group after they had completed the drawing portion of the 2-page concept map lesson. Feedback was not provided to the No Feedback group.

Posttests

The multiple-choice criterion posttest, originally developed and validated by Dwyer (1972), consisted of 20 questions that dealt with terminology and 20 questions that dealt with comprehension. The terminology test was designed to measure declarative knowledge of facts, terms, and definitions. The comprehension test was designed to measure a more thorough understanding of the processes of the human heart, with a specific focus on the functions of different parts of the heart. The KR-20 reliability for the posttest was 0.83.

Procedure

The procedure was similar for both the Feedback and No Feedback treatment groups. First, participants completed a demographic survey. Next they completed the 2-page training lesson on how to draw a concept map, with or without feedback. Then participants read the 3-page instructional text on the human heart and were asked to draw a concept map of that information on a blank piece of paper while reading the lesson text. Participants could use any terms and any number of terms in their maps.
After completing their concept maps, the Feedback group was given an instructor-generated hierarchical concept map as feedback. They were asked to compare this feedback map to their own map and were allowed to change their map using pens of a different color. Only three of the participants made changes to their concept maps after viewing the feedback map. Finally, the lesson materials and concept maps were collected and then all participants completed the multiple-choice posttests.

Establishing the Expert Concept Map Scoring Referent

An optimized expert referent concept map is required for comparison during the scoring process (i.e., in Step 3). With open-ended concept maps, participants may use any terms and any number of terms in their maps, but only those terms also included in an expert referent (including synonyms and metonyms) can count during scoring. In order to optimize the value of the information across the entire set of 60 concept maps, a frequency list containing all of the terms used by the participants was prepared. A common term, such as left ventricle, had a high frequency count, while a term such as white corpuscles had a low frequency count. Functionally equivalent terms, such as auricle and atrium, were listed together as acceptable synonyms. A biology content expert was provided with the instructional text and the frequency list of terms and was asked to draw a concept map of the structure and function of the human heart and circulatory system. The expert was not required to use terms from the list, though the list was intended to influence the expert’s choice of terms. The resulting optimized expert referent concept map contained 26 terms with 36 links between terms.

Recall that the instructor-generated concept map that served as feedback during the lesson was hierarchical, following the requirements of Novak and Gowin (1984).
However, the optimized expert referent concept map developed after-the-fact for scoring purposes was clearly not hierarchical; it had a network structure that consisted of a cluster of terms in the center, and two flow loops, one to the left and the other to the right of the central cluster. This nonhierarchical network structure for the expert’s map is supported by Ruiz-Primo and Shavelson (1996) and Safayeni, Derbentseva, and Cañas (2003), who have questioned the requirement of imposing hierarchical structure on concept maps.

Step 1 – Collecting Raw Concept Map Proximity Data

Link Data

Concept map link data were collected following Clariana et al. (in press). First, the links on each concept map were entered into an array that can be analyzed by KNOT software. Each array listed the 26 terms used by the expert down the left column and across the top row, so that every cell in the array represents the relationship between two of the 26 terms. Following convention, since the data above the diagonal are redundant with data below the diagonal, each link array consisted of just the 325 elements in the lower triangle of the array (i.e., (26² – 26)/2). A “1” entered in a link array cell indicates that a link was drawn between those two terms on the concept map while a “0” indicates that no link was drawn. Referring back to Figure 1, since a link is drawn between left atrium and pulmonary vein, a “1” is entered into the corresponding cell in the link array. Linking terms, such as “is a”, “has a” and “contains”, were not considered; only the presence or absence of each link line (ink on paper) was recorded. Link raw data are similarity data, where smaller values indicate a weaker relationship. When a student did not include one of the 26 terms, all possible links to that term were coded as “0” to indicate no relationship to the other terms.
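The link coding described above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure or software actually used in the study: the function name `link_array`, the seven-term list taken from Figure 1, and every link other than left atrium to pulmonary vein (the one named in the text) are assumptions made for the example.

```python
# Minimal sketch (assumed names and data): code a concept map's link lines
# as a lower-triangle 0/1 similarity array, one row per term after the first.

TERMS = ["left atrium", "lungs", "oxygenate", "pulmonary artery",
         "pulmonary vein", "deoxygenate", "right ventricle"]

# Only the first link is stated in the text; the others are illustrative.
LINKS = [("left atrium", "pulmonary vein"),
         ("lungs", "oxygenate"),
         ("pulmonary artery", "right ventricle")]


def n_pairs(n):
    """Number of cells in the lower triangle: (n^2 - n) / 2."""
    return (n * n - n) // 2


def link_array(terms, links):
    """Return rows of the lower triangle; row i-1 holds term i versus terms 0..i-1.

    A cell is 1 when a link line joins the two terms and 0 otherwise. A term
    that the student never used has no links, so all of its cells stay 0.
    """
    drawn = {frozenset(pair) for pair in links}
    rows = []
    for i in range(1, len(terms)):
        rows.append([1 if frozenset((terms[i], terms[j])) in drawn else 0
                     for j in range(i)])
    return rows


rows = link_array(TERMS, LINKS)
```

With the expert's 26 terms, `n_pairs(26)` gives the 325 cells mentioned in the text; the seven-term example yields 21 cells.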
The inter-rater reliability for transferring links from the paper-based maps to the link array was 0.98.

Distance Data

Concept map distance data were collected using ALA-Mapper software (Clariana, 2002). ALA-Mapper converts the spatial location of the terms in a concept map into a distance array containing all of the pair-wise distances between terms (refer back to Figure 1). Each paper-based concept map was recreated in ALA-Mapper, a manual process that can introduce error, though care was taken to maintain the original spatial proportional relationships between the terms in the map. The inter-rater reliability for this process was 0.81. Distance data are dissimilarity data, where larger values indicate a weaker relationship. When a student did not include one of the 26 terms, all distances to that term were coded as infinity to indicate no relationship to the other terms.

Step 2 – Reducing Raw Concept Map Data into PFNets

KNOT software was used to establish link and distance-based PFNets for each concept map by selecting the command “Analyze Proximity Data” found in the “Data” main menu. To establish PFNets, KNOT software requires information that describes the raw data and also two calculation parameters. The required descriptive information includes: (a) the data structure (e.g., lower triangle), (b) whether the data are dissimilarity or similarity data (e.g., the link data are similarity data and the distance data are dissimilarity data), (c) the number of decimal places in the data (e.g., zero for both data sets), (d) the maximum value (e.g., link data set to “1” and distance data set to “1000”), (e) the minimum value (e.g., “0.1” for both data sets). Note that the maximum of 1000 for distance data and the minimum of 0.1 for link data are necessary in order to exclude missing terms from the analysis.
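To make the distance coding concrete, the following Python sketch builds a lower-triangle distance array from term coordinates. It is an illustration under stated assumptions, not ALA-Mapper's implementation: the function name `distance_array`, the coordinate dictionary, and the choice to represent a missing term with `math.inf` are all hypothetical.

```python
import math


def distance_array(positions, terms, missing=math.inf):
    """Return lower-triangle rows of pair-wise distances between terms.

    positions maps a term to its (x, y) location on the map (assumed units).
    Distances are rounded to whole numbers, matching the zero decimal places
    described for the raw data. Any pair involving a term the student did not
    use is coded with `missing` (infinity here) to mark no relationship.
    """
    rows = []
    for i in range(1, len(terms)):
        row = []
        for j in range(i):
            a = positions.get(terms[i])
            b = positions.get(terms[j])
            if a is None or b is None:
                row.append(missing)
            else:
                row.append(round(math.dist(a, b)))
        rows.append(row)
    return rows


# Hypothetical coordinates for two of three referent terms; "oxygenate" is absent.
example_terms = ["lungs", "pulmonary vein", "oxygenate"]
example_positions = {"lungs": (0, 0), "pulmonary vein": (30, 40)}
example_rows = distance_array(example_positions, example_terms)
```

Larger values mean a weaker relationship, so the infinite entries for the absent term sit above any maximum value that the analysis is told to accept.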
In addition, two KNOT software parameters, q and Minkowski’s r, are used to define the rules for including and removing links; thus these two parameters set the amount of data reduction that is desired. Minkowski’s r can range from one to infinity and q can range from two to n - 1 (where n is the number of terms). The r parameter is used in the calculation of the weight between terms (Dearholt & Schvaneveldt, 1990, p. 3). The q parameter is used to set the depth of the path that is considered during link pruning. Changing the r and q parameters usually results in changes in the resulting PFNets. Using the largest meaningful values of r and q produces a PFNet with the fewest links (the most data reduction) while using the smallest values of r and q produces a PFNet with the most links (the least data reduction).

The link raw data in this investigation provide an interesting exception. Because the link raw data consist only of ones and zeros, identical PFNets will be produced no matter what values are set for the r and q parameters. Further, there is no data reduction when creating these link-based PFNets. Here, the expert’s link raw data contain 36 links and the expert’s link-based PFNet contains the same 36 links. For link data, the r and q parameters were set to the maximum values, with r equal to infinity and q equal to 25 (i.e., n – 1), because these are the recommended parameter values for ordinal level data.

What values of the r and q parameters are appropriate for raw concept map distance data? Few specific guidelines exist for which values of Minkowski’s r are best for what situation (Durso & Coggins, 1990). Most investigations use the maximum values of r (infinity) and q (n - 1), designated in the form PFNet (r, q), perhaps since this should reveal the most salient information in the raw data and also because most research involving Pathfinder uses ordinal-level data.
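The effect of Minkowski’s r on link pruning can be illustrated with a short Python sketch of the usual PFNet (r, n – 1) rule: a direct link survives only if no indirect path between the same two terms has a smaller weight, where a path's weight combines its link weights with the Minkowski r-metric (a plain sum for r = 1, the largest single link for r = infinity). This is not KNOT's implementation; the function names, the full symmetric matrix layout, and the three-term example are assumptions, and the input must be dissimilarity data such as distances.

```python
import math


def minkowski_join(a, b, r):
    """Weight of a path made of two pieces with weights a and b."""
    if math.isinf(r):
        return max(a, b)
    return (a ** r + b ** r) ** (1.0 / r)


def pfnet(weights, r=math.inf):
    """Return the links kept in PFNet(r, n - 1) as a set of (i, j) with j < i.

    weights is a full symmetric dissimilarity matrix with zeros on the
    diagonal and math.inf where two terms have no relationship.
    """
    n = len(weights)
    best = [row[:] for row in weights]  # minimum path weight found so far
    for k in range(n):
        for i in range(n):
            for j in range(n):
                if i == j or k == i or k == j:
                    continue
                via_k = minkowski_join(best[i][k], best[k][j], r)
                if via_k < best[i][j]:
                    best[i][j] = via_k
    # Keep a direct link only when no indirect path is lighter than it.
    return {(i, j) for i in range(n) for j in range(i)
            if math.isfinite(weights[i][j])
            and weights[i][j] <= best[i][j] + 1e-9}


# Hypothetical distances among three terms: 0-1 is 3, 1-2 is 4, 0-2 is 6.
W = [[0, 3, 6],
     [3, 0, 4],
     [6, 4, 0]]
links_r1 = pfnet(W, r=1)           # path 0-1-2 weighs 7, so link 0-2 stays
links_r2 = pfnet(W, r=2)           # path 0-1-2 weighs 5, so link 0-2 is pruned
links_inf = pfnet(W, r=math.inf)   # path 0-1-2 weighs 4, so link 0-2 is pruned
```

In this small example, raising r from 1 to 2 removes one link, which is consistent with the statement that larger values of r yield more data reduction.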
Rather than use one set of values, Dearholt and Schvaneveldt (1990) state, “Frequently, important information from a given set of proximity data can be obtained from different PFNets, constructed using different values of r and q.” (p. 5) Following this recommendation, four separate sets of PFNets were established from raw distance data across a range of Minkowski’s r values including 1, 2, 3, and infinity. Regarding the q parameter, because distance raw data are the actual distances between terms in 2-dimensional space, the value used for q is not as critical (regarding violations of the triangle inequality in Euclidean space, see Buzydlowski, 2002, p. 27). Thus, following convention, the q parameter was set to its maximum meaningful value of 25 (i.e., n – 1).

Step 3 – Comparing PFNets to the Optimized Expert Referent

KNOT software was used to compare participants’ PFNets to the optimized expert referent PFNet by selecting the command “Analyze Networks” found in the “Network” main menu. The software provides two highly correlated measures of similarity called common and configural similarity. Common is a global measure of similarity that is simply the total number of links shared by two PFNets. Common is reported in this investigation because it is easier to understand and also because it was a better predictor than configural similarity in the two previous studies.

Reliability of the Concept Map Scores

Because the expert’s link-based PFNet contains 36 links, participants’ maximum possible score is 36 (see the column labeled “Maximum Possible” in Table 1). These link-based scores may be viewed as a 36-item test. A test item response table with 37 columns by 60 rows was created. Column 1 listed the 60 participants’ names while columns 2 through 37 listed the performance of each student for each of the expert’s 36 links.
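The common score and the item response table can be sketched as follows, together with Cronbach's alpha, the reliability statistic reported in this section. This is a hedged illustration rather than KNOT's code: the helper names and the four-link toy data are assumptions, and the alpha function uses the standard formula k/(k – 1) × (1 – sum of item variances / variance of total scores).

```python
from statistics import variance


def norm(a, b):
    """Represent an undirected link as a sorted pair of term names."""
    return tuple(sorted((a, b)))


def common(student_links, expert_links):
    """Number of links shared by the student's and the expert's PFNets."""
    return len(set(student_links) & set(expert_links))


def item_table(all_student_links, expert_links):
    """One row per student, one 0/1 column per expert link."""
    return [[1 if link in set(student) else 0 for link in expert_links]
            for student in all_student_links]


def cronbach_alpha(table):
    """Cronbach's alpha for a students-by-items table of scores."""
    k = len(table[0])
    item_vars = [variance([row[i] for row in table]) for i in range(k)]
    total_var = variance([sum(row) for row in table])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical expert referent with three links and four student maps.
expert = [norm("a", "b"), norm("b", "c"), norm("c", "d")]
students = [
    [norm("b", "a"), norm("b", "c"), norm("d", "c")],
    [norm("a", "b"), norm("c", "b")],
    [norm("a", "b"), norm("a", "d")],   # a-d is not in the expert map
    [],
]
scores = [common(s, expert) for s in students]
table = item_table(students, expert)
alpha = cronbach_alpha(table)
```

Each row of the table sums to that student's common score, which is why the set of expert links can be treated as the items of a test.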
Students received a “1” in the appropriate cell when their link agreed with the expert and a “0” when it did not. For the link-based scores with 36 possible links, Cronbach alpha was 0.86 for the No Feedback group and 0.76 for the Feedback group. In the same way, a test item response table for the distance-based scores was created (34 maximum possible for PFNet (2, 25)). Cronbach alpha was 0.80 for the No Feedback group and 0.66 for the Feedback group. Thus, the link-based scores are a little more reliable than the distance-based scores, and both link-based and distance-based scores for the No Feedback group are more reliable than those of the Feedback group.

Table 1. Means and standard deviations for the multiple-choice posttests and the alternate concept map scores.

| Measure | Maximum Possible | Observed Range | No Feedback Group M (SD) | Feedback Group M (SD) |
| --- | --- | --- | --- | --- |
| Multiple-Choice Posttests | | | | |
| Terminology | 20 | 1 to 19 | 9.5 (4.6) | 8.3 (4.1) |
| Comprehension | 20 | 2 to 19 | 8.7 (4.1) | 7.0 (3.7) |
| Concept Map Scores, Link data | | | | |
| PFNet (∞, 25) | 36 | 1 to 27 | 11.5 (6.4) | 11.8 (5.1) |
| Concept Map Scores, Distance data | | | | |
| PFNet (1, 25) | 65 | 1 to 65 | 23.4 (13.4) | 27.3 (15.0) |
| PFNet (2, 25) | 34 | 1 to 21 | 10.3 (5.2) | 9.5 (4.0) |
| PFNet (3, 25) | 31 | 1 to 17 | 9.2 (4.5) | 8.4 (3.7) |
| PFNet (∞, 25) | 28 | 1 to 16 | 8.4 (4.2) | 7.7 (3.4) |

Results and Discussion

Though not of central interest in this present analysis, the seven posttest and concept map dependent variables listed in Table 1 were analyzed by MANOVA to determine whether the Feedback and No Feedback group means were different. Though the No Feedback group means for both the multiple-choice and the concept map scores tended to be a little larger than those of the Feedback group (see Table 1), none of the seven dependent variables were significantly different. For example, the greatest between-subjects effect was observed for comprehension posttest scores, with F (1,59) = 2.887, MSe = 0.038, p = .09.
The remainder of this section will describe the relative characteristics of the concept map scores.

Estimating the Concurrent Criterion-related Validity of the Concept Map Scores

Correlation analysis is a common approach used for estimating concurrent criterion-related validity (Crocker & Algina, 1986). Pearson’s correlation was used to compare the terminology and comprehension multiple-choice criterion posttests to each concept map score shown in Table 2.

Table 2. Correlation analyses of concept map scores with the multiple-choice posttests.

| Concept map score | No Feedback Term | No Feedback Comp | Feedback Term | Feedback Comp |
| --- | --- | --- | --- | --- |
| Link data | | | | |
| PFNet (∞, 25) | 0.78* | 0.54* | 0.46* | 0.30 |
| Distance data | | | | |
| PFNet (1, 25) | 0.32 | 0.45* | 0.02 | 0.05 |
| PFNet (2, 25) | 0.48* | 0.61* | 0.26 | 0.11 |
| PFNet (3, 25) | 0.40* | 0.53* | 0.36 | 0.18 |
| PFNet (∞, 25) | 0.38* | 0.49* | 0.38* | 0.22 |

(* p < .05; Term – terminology posttest; Comp – comprehension posttest)

First, the No Feedback and Feedback groups obtained very different values and patterns of correlations in Table 2 and few of the Feedback group correlations were significant. The low correlations observed for the Feedback group between multiple-choice posttest scores and concept map scores can be at least partially accounted for by attenuation due to the lower test reliability of the Feedback group’s concept map scores, especially for distance-based scores. Further, it is not obvious that concept map scores should strongly correlate with multiple-choice test scores, thus Pearson r values in the 0.30 to 0.40 range seem reasonable and even expected. Thus establishing both convergent and discriminant validity is an important step in understanding what concept maps measure.

However, low correlations for the Feedback group may also be partially accounted for by the negative effect of concept maps as feedback (Tan, 2000).
Recall that the main difference between the Feedback and No Feedback groups is the instructor-generated hierarchical concept map given as feedback to the Feedback group. Lee and Nelson (in press) suggest that presenting completed concept maps to learners as instructional materials places emphasis on “…unintentional imitation of its structure by the learners because the learners are given a concept map and cannot organize the concept map to make it fit with their internal knowledge structures.” Similarly, Lambiotte and Dansereau (1992) report that “students with more well established schemas for the circulatory system performed less well when structure was imposed by an outline or a knowledge map (sic concept map)” (p. 198). Safayeni et al. (2003) go so far as to suggest that hierarchical concept maps of the circulatory and other biological systems are incompatible with the actual understood nature of those systems (p. 12) and propose a new form of concept map called cyclic concept maps (Kinchin, 2000). Further, neither the participants nor the expert created hierarchical concept maps of this content, and so the instructor-generated hierarchical concept map given as feedback was likely difficult to reconcile since it was so different from the students’ nonhierarchical maps. In hindsight, providing this hierarchical concept map as feedback was ill-advised. Future research should consider the effects of using cyclical versus hierarchical concept maps as lesson feedback.

Second, scores derived from concept map link data were, in general, more related to multiple-choice posttest performance than were those derived from concept map distance data. Again, this can be at least partially accounted for by attenuation due to the lower reliability of the distance-based concept map scores.
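The attenuation argument can be made concrete with the classical test theory relation that an observed correlation cannot exceed the square root of the product of the two measures' reliabilities. The sketch below is illustrative only: it plugs in the Cronbach alpha values reported for the concept map scores, and it assumes a perfectly reliable posttest (`rel_y = 1.0`) because posttest reliability is not reported in this section. The function names are hypothetical.

```python
import math


def attenuation_ceiling(rel_x, rel_y=1.0):
    """Upper bound on an observed correlation given two reliabilities."""
    return math.sqrt(rel_x * rel_y)


def disattenuate(r_xy, rel_x, rel_y=1.0):
    """Spearman's correction for attenuation of an observed correlation."""
    return r_xy / math.sqrt(rel_x * rel_y)


# Cronbach alpha values reported for the concept map scores.
ALPHA = {
    ("No Feedback", "link"): 0.86,
    ("No Feedback", "distance"): 0.80,
    ("Feedback", "link"): 0.76,
    ("Feedback", "distance"): 0.66,
}

if __name__ == "__main__":
    for (group, score), alpha in ALPHA.items():
        # rel_y defaults to 1.0: a placeholder assumption, not a reported value.
        print(group, score, round(attenuation_ceiling(alpha), 2))
    # Observed r = .61 (No Feedback, distance data vs. comprehension posttest).
    print(round(disattenuate(0.61, ALPHA[("No Feedback", "distance")]), 2))
```

Under these assumptions the ceiling is lowest for the Feedback group's distance-based scores, which is in line with the direction of the attenuation argument. How much of the difference it explains would depend on the actual posttest reliability.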
On the other hand, because it takes extra work to draw the link lines, links drawn on paper are probably more salient to the individual creating the concept map than are the distances between terms.

Third, identical to the findings reported by Clariana and Poindexter (2004), for the No Feedback group, distance-based scores relative to link-based scores were a better predictor of comprehension, r = .61 compared to r = .54, and link-based scores relative to distance-based scores were a better predictor of terminology scores, r = .78 compared to r = .48 (see Table 2). This finding suggests that link and distance data in the same map are capturing different aspects of the participants’ knowledge.

General Discussion

This investigation confirms a technique for scoring open-ended concept maps using a Pathfinder network analysis approach. The concept map score reliability and the criterion-related correlation results indicate that the technique is capturing different aspects of participants’ knowledge of the lesson content. Most automatic concept map scoring systems focus on proposition correctness, which depends entirely on the correctness of the linking term connecting the two concepts (see Cañas et al., 2003; Herl et al., 1999; Luckie, 2001). Based on our connectionist view for representing knowledge, the technique applied in this investigation totally disregards linking terms yet manages to capture information. In addition, our technique is different from other current automatic scoring approaches in that (a) it represents concept map raw data in vector arrays that allow for easier manipulation, aggregation, reduction, comparison, and analysis; (b) it provides a measure of map structural complexity that likely reflects the structure of the content; (c) participants’ concept maps can easily be compared to multiple distinct forms of expert concept maps and to each other as required by the researcher; and (d) pragmatically, it can be applied to both closed and open-ended concept maps.

The instructional content and the centrality of the expert referent map in this investigation limit the generalizability of these findings. First, the lesson content involved blood flow through the circulatory system, which is essentially a cyclical path, and Pathfinder networks are path representations (Safayeni et al., 2003). This concept map scoring technique may not be adequate for content that is not path-like. Second, the quality of the expert referent map is critical to the quality of the analyses since every map is compared to the referent map. Future research should consider how consistently superior referent maps can be established, perhaps by using multiple expert maps.

The ultimate goal of this line of research is to develop a software tool that can be used to create, save, and automatically score concept maps, providing feedback on acceptable and less acceptable components of the map. Future research should consider using weighted and directional link lines. Students could select links that are dashed, thin, and thick to indicate degree of relatedness and single or double-headed arrows to show the directionality of the link relationship. This type of data can be easily represented in the data arrays used in this investigation and KNOT software can already accommodate this type of analysis. An important module of this software tool is the mathematical approach used to convert raw concept map data into scores. Besides PFNets, future research should consider alternate scoring approaches for directly analyzing raw link and distance data such as correlation, factor analysis, and multidimensional scaling, as well as approaches for comparing visual representations from the fields of biology (e.g., protein visualization tools) and engineering (e.g., data mining). Further refinement of this technique for scoring concept maps is warranted.

References

Ausubel, D.P. (1968). Educational psychology: a cognitive view. New York, NY: Holt, Rinehart, and Winston.
Buzydlowski, J. W. (2002). A comparison of self-organizing maps and Pathfinder networks for the mapping of co-cited authors. Doctoral dissertation completed at Drexel University. Retrieved October 3, 2004, from http://faculty.cis.drexel.edu/~jbuzydlo/papers/thesis.pdf
Cañas, A.J., Coffey, J.W., Carnot, M.J., Feltovich, P., Hoffman, R.R., Feltovich, J., & Novak, J.D. (2003). A Summary of Literature Pertaining to the Use of Concept Mapping Techniques and Technologies for Education and Performance Support. A report for The Chief of Naval Education and Training at Pensacola, Florida. Retrieved October 3, 2004, from http://www.ihmc.us/users/aCañas/Publications/ConceptMapLitReview/IHMC/Literature Review on Concept Mapping.pdf
Clariana, R.B. (2002). ALA-Mapper, version 1.0. Retrieved October 3, 2002, from http://www.personal.psu.edu/rbc4/ala.htm
Clariana, R.B. (2003). Latent semantic analysis writing prompt, used with the permission of Knowledge Analysis Technologies, LLC. Retrieved October 30, 2003 from http://www.personal.psu.edu/rbc4/frame.htm
Clariana, R.B., Koul, R., & Salehi, R. (in press). The criterion related validity of a computer-based approach for scoring concept maps. International Journal of Instructional Media, 33 (3), in press.
Clariana, R.B., & Poindexter, M. T. (2004). The influence of relational and proposition-specific processing on structural knowledge. Presented at the annual meeting of the American Educational Research Association, April 12-16, San Diego, CA. Retrieved April 15, 2004 from http://www.personal.psu.edu/rbc4/aera_2004.doc
Crocker, L.A., & Algina, J. (1986). Introduction to classical and modern test theory. New York, NY: Holt, Rinehart, and Winston.
Dearholt, D.W., & Schvaneveldt, R.W. (1990). Properties of Pathfinder Networks. In Schvaneveldt (Ed.), Pathfinder associative networks: studies in knowledge organization (pp. 1-30). Norwood, NJ: Ablex Publishing Corporation.
Deese, J. (1965). The structure of associations in language and thought. Baltimore, MD: The Johns Hopkins Press.
Durso, F. T., & Coggins, K. A. (1990). Graphs in the social and psychological sciences: empirical contributions of Pathfinder. In Schvaneveldt (Ed.), Pathfinder associative networks: studies in knowledge organization (pp. 31-51). Norwood, NJ: Ablex Publishing Corporation.
Dwyer, F. M. (1972). A guide for improving visualized instruction. State College, PA: Learning Services.
Elman, J.L. (in press). An alternative view of the mental lexicon. Trends in Cognitive Science, in press. Retrieved December 10, 2004 from http://www.crl.ucsd.edu/~elman/Papers/elman_tics_opinion_2004.pdf
Frase, L. T. (1969). Structural analysis of the knowledge that results from thinking about text. Journal of Educational Psychology, 60 (6, Part 2), 1-16.
Goldsmith, T.E., & Davenport, D.M. (1990). Assessing structural similarity of graphs. In Schvaneveldt (Ed.), Pathfinder associative networks: studies in knowledge organization (pp. 75-87). Norwood, NJ: Ablex Publishing Corporation.
Harper, M. E., Hoeft, R. M., Evans, A. W. III, & Jentsch, F. G. (2004). Scoring concepts maps: Can a practical method of scoring concept maps be used to assess trainee’s knowledge structures? Paper presented at the Human Factors and Ergonomics Society 48th Annual Meeting.
Herl, H.E., O'Neil, H.F. Jr., Chunga, G.K.W.K., & Schacter, J. (1999). Reliability and validity of a computer-based knowledge mapping system to measure content understanding. Computers in Human Behavior, 15, 315-333.
Humphreys, M.S., Bain, J.D., & Pike, R. (1989). Different ways to cue a coherent memory system: A theory for episodic, semantic and procedural tasks. Psychological Review, 96, 208-233.
Jonassen, D.H. (1987). Assessing cognitive structure: verifying a method using pattern notes. Journal of Research and Development in Education, 20 (3), 1-13.
Jonassen, D. H., Beissner, K., & Yacci, M. (1993). Structural knowledge: techniques for representing, conveying, and acquiring structural knowledge. Hillsdale, NJ: Lawrence Erlbaum Associates.
Kinchin, I. M. (2000). Using concept maps to reveal understanding: A two-tier analysis. School Science Review, 81, 41-46.
KNOT (1998). Knowledge Network and Orientation Tool for the Personal Computer, version 4.3. Retrieved October 3, 2003 from http://interlinkinc.net/
Lambiotte, J., & Dansereau, D. (1992). Effects of knowledge maps and prior knowledge on recall of science lecture content. Journal of Experimental Education, 60, 189-201.
Lee, Y., & Nelson, D. (in press). Viewing or visualizing – which concept map strategy works best on problem solving performance? British Journal of Educational Technology, in press.
Luckie, D. (2001). C-TOOLS: Concept-Connector Tools for online learning in science. A National Science Foundation grant. Retrieved October 3, 2003 from http://www.msu.edu/~luckie/CTOOLS.pdf
McClure, J., Sonak, B., & Suen, H. (1999). Concept map assessment of classroom learning: reliability, validity, and logistical practicality. Journal of Research in Science Teaching, 36, 475-492.
McClelland, J.L., McNaughton, B.L., & O'Reilly, R.C. (1995). Why there are complementary learning systems in the hippocampus and neocortex: insights from the successes and failures of connectionist models. Psychological Review, 102, 419-457.
Novak, J.D., & Gowin, D.B. (1984). Learning how to learn. Cambridge, UK: Cambridge University Press.
Pike, R. (1984). A comparison of convolution and matrix distributed memory systems. Psychological Review, 91, 281-294.
Robinson, D.H., Corliss, S.B., Bush, A.M., Bera, S.J., & Tomberlin, T. (2003). Optimal presentation of graphic organizers and text: A case for large bites? Educational Technology Research and Development, 51 (4), 25-41.
Ruiz-Primo, M. A., & Shavelson, R.J. (1996). Problems and issues in the use of concept maps in science assessment. Journal of Research in Science Teaching, 33, 569-600.
Ruiz-Primo, M. A., Schultz, S., Li, M., & Shavelson, R. J. (1999). On the cognitive validity of interpretations of scores from alternative concept mapping techniques. (CSE Tech. Rep. No. 503). Los Angeles: University of California, National Center for Research on Evaluation, Standards, and Student Testing (CRESST).
Rye, J. A., & Rubba, P. A. (2002). Scoring concept maps: an expert map-based scheme weighted for relationships. School Science and Mathematics, 102, 33-44.
Safayeni, F., Derbentseva, N., & Cañas, A.J. (2003). Concept maps: a theoretical note on concepts and the need for cyclic concept maps. Retrieved October 3, 2004 from http://cmap.ihmc.us/Publications/ResearchPapers/Cyclic%20Concept%20Maps.pdf
Schvaneveldt, R.W. (1990). Pathfinder associative networks: studies in knowledge organization. Norwood, NJ: Ablex Publishing Corporation.
Schvaneveldt, R.W., Dearholt, D.W., & Durso, F.T. (1988). Graph theoretic foundations of Pathfinder networks. Computers and Mathematics with Applications, 15, 337-345.
Schau, C., Mattern, N., Zeilik, M., & Teague, K.W. (1999). Select-and-fill-in concept map scores as a measure of students’ connected understanding of introductory astronomy. Paper presented at the Annual Meeting of the American Educational Research Association (Montreal, Canada, April). Available from ERIC ED434909.
Shavelson, R. J., Lang, H., & Lewin, B. (1994). On concept maps as potential authentic assessment in science. (CSE Tech. Rep. No. 388). Los Angeles: University of California, National Center for Research on Evaluation, Standards, and Student Testing (CRESST).
Tan, S.C. (2000). The effects of incorporating concept mapping into computer assisted instruction. Journal of Educational Computing Research, 23, 113-131.
Taricani, E.M. (2002). Effects of the level of generativity in concept mapping with knowledge of correct response feedback on learning. Dissertation Abstracts International, ISBN # 0-493-67101-3.
Yin, Y., Vanides, J., Ruiz-Primo, M.A., Ayala, C.C., & Shavelson, R.J. (2004). A comparison of two construct-a-concept-map science assessments: created linking phrases and selected linking phrases. (CSE Tech. Rep. No. 624). Los Angeles: University of California, National Center for Research on Evaluation, Standards, and Student Testing (CRESST).