Headers will be added later The rise of informatics as a research

advertisement

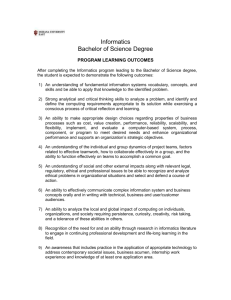

Headers will be added later The rise of informatics as a research domain Peter Fox1 1Tetherless World Constellation, Rensselaer Polytechnic Institute, 110 8th St., Troy, NY 12180 USA, pfox@cs.rpi.edu Abstract: Over the past five years, data science has emerged as a means of conducting science over many disciplines and domains. Accompanying this emergence is the realization that different informatics approaches, i.e. the science of data and information underlying the developments, have also emerged independently and empirically in many areas, e.g. astro, bio, geo, hydro, ocean and over different timescales, funding models and corresponding appreciation by their communities. To fully enable both interdisciplinary research and cope with increasingly complex data within domains, a move to a more repeatable and interworkable mode is required, i.e. adding a research component to the application component of informatics that enables data science as a means to address integrative science grand challenges areas such as water, environment and climate, ultimately resulting in the discovery of new knowledge. This contribution details some key elements of research informatics, the class of people who appear in this discipline, and the state of some current research challenges. Keywords: Informatics; Data Science; Provenance; Semantics. 1 EVOLVING CONDUCT OF SCIENCE Recent advances in data acquisition techniques quickly provide massive amounts of complex data characterized by source heterogeneity, multiple modalities, high volume, high dimensionality, and multiple scales (temporal, spatial, and function). In turn, science and engineering disciplines are rapidly becoming more and more data driven with goals of higher sample throughput, better understanding/modeling of complex systems and their dynamics, and ultimately engineering products for practical applications. However, analyzing libraries of complex data requires managing its complexity and integrating the information and knowledge across multiple scales over different disciplines. For example, the reductionist approach to biological research has provided an understanding of how the linear arrangement of nucleotides encode the linear arrangement of amino acids and how proteins interact to form functional groups governing signal transduction and metabolic pathways, etc. But at each level of biological organization, new forms of complexity are encountered such that we’ve taken our biological machines apart but can’t put them back together again (B. Yener, personal communication) - our ability to accumulate reductionist data has outstripped our ability to understand it. Thus, we encounter a gap in the structure/function relationship; having accumulated an extraordinary amount of detailed information about biological, material, and environmental structures, we cannot assemble it in a way that explains the correspondingly complex functions these structures perform. Educating and training data scientists with the necessary knowledge and skills to close the data - knowledge gap becomes a crucial requirement for successfully competing in science and engineering. Attention to data science has spread from being a discussion among researchers (Baker et al., 2008, Nativi and Fox, 2010), e.g. the Fourth Paradigm publication (Hey et al., 2009), into more general audiences, for example the recent Nature and Science special issues on “Data”. Not surprisingly there is explicit emphasis on data and information in professional societies and international scientific unions (SCCID, 2011), in national and international agency programs, foundations (for example the Keck Foundation and the Gordon and Betty Moore Foundation) and corporations (IBM, Slumberger, GE, Microsoft, etc.). Surrounding this attention is a Headers will be added later proliferation of studies, reports, conferences and workshops on Data, Data Science and workforce. Examples include: “Train a new generation of data scientists, and broaden public understanding” from an EU Expert Group (Riding the Wave, 2010), “…the nation faces a critical need for a competent and creative workforce in science, technology, engineering and mathematics (STEM)...” (NSF 2011a), "We note two possible approaches to addressing the challenge of this transformation: revolutionary (paradigmatic shifts and systemic structural reform) and evolutionary (such as adding data mining courses to computational science education or simply transferring textbook organized content into digital textbooks).” (NSF2011a), and “The training programs that NSF establishes around such a data infrastructure initiative will create a new generation of data scientists, data curators, and data archivists that is equipped to meet the challenges and jobs of the future." (NSF 2011b). Currently, there are very few graduate education and training programs in the world that can prepare students in a cohesive and interdisciplinary way to be productive data scientists. The emphasis on advanced degree programs thus required a rich research agenda, one that draws on many disciplines. 2 2.1 INFORMATICS – BALANCING RESEARCH AND APPLICATION Definition One of the more useful definitions is Informatics: “Information science includes the science of (data and) information, the practice of information processing, and the engineering of information systems. Informatics studies the structure, behavior, and interactions of natural and artificial systems that store, process and communicate (data and) information. It also develops its own conceptual and theoretical foundations. Since computers, individuals and organizations all process information, informatics has computational, cognitive and social aspects, including study of the social impact of information technologies.” (Wikipedia, italics added by author to note added content). Many disciplines, science, engineering, theory, all together. 2.2 Key elements of informatics Since science informatics efforts have emerged largely in isolation across a number of disciplines, it is only recently that certain core elements have been recognized as increasingly common. Since informatics bridges to end-use, the ‘use case’ (or user scenario) is one of the most important. Introduced by Jacobson (1987) and popularized by Cockburn (2000) for the purposes of software development, a use case ‘… is a prose description of a system’s behavior when interacting with the outside world.’ An excellent coverage of the topic is presented by Bittner and Spence (2002) who outline key factors in the development of use cases, especially the ‘why’ in addition to the ‘what’ and ‘how’. The second core element is the use of conceptual information models, especially working with domain science, application and data experts and matching and leveraging those models with others, especially ones that underlying key standards (see Research Challenges). There are other elements but these two are critical. Thus an overall skillset for an informaticist features two main elements: for information architecture - to sustainably generate information models, designs and architectures – and for technology development - to understand and support essential data and information needs of a wide variety of producers and consumers. In order to continue to meet evolving end-use needs at the current rate technical and technology change, informaticists must be grounded in the underpinnings of informatics, including information systems theoretical methods and best practices. This combination of knowledge and skills currently resides in ~ 1 in 100 information practitioners in the sciences (purely empirically and ethnographically determined by the author). The balance of research, application and a strong interdisciplinary educational curriculum is needed for informatics to address integrative science grand challenge problems that are the centre-piece of many international research organization missions, especially in Earth system sciences. Headers will be added later 3 EXAMPLAR APPLICATION IN A WIRADA CONTEXT Sensor Agency Activity Sensing/ Collecting Observations Deploy Sensor <<information>> Raw Observations from Agency Transfer Data <<information>> Sensor Information Data Transformation & Publishing <<information>> Published Observations in O&M Kepler – Generate Gridded Rainfall Data Figure 1: Map of Tasmania with place marks indicating stream gauge sensor placements. Different colours represent different operating agencies. The South Esk area is in the ENE. <<information>> Flow Forecast Output (in TSF) <<information>> Specified Rainfall Range <<information>> Gridded Rainfall Data Stream Forecast Model During 2010-2011 the CSIRO Tasmanian Information and Communication Technologies Centre (ICTC) undertook an excellent <<information>> Data application of many of the aforementioned Published Flow Forecast Transformation & Result (in O&M) Publishing informatics capabilities and in doing so encountered many of the research challenges discussed below. They developed many use cases, information models, evaluated language Figure 2: Information model for SEFF encodings for sensor networks, water domain vocabularies and provenance, and deployed the beginning with the sensor and ending with a stream flow forecast. Rounded rectangles software implementation in support of two represent processes and square rectangles hydrological sensor webs for the South Esk represent data and information products. (north-east Tasmania, see Fig. 1) Flow Forecast (SEFF; see Fig. 2) and the Water Information Research and Development Flood Early Warning System (WIRADA-FEWS). Detailed descriptions of these systems are available in other papers both at the conference and in the proceedings. 4 RESEARCH CHALLENGES AND PATHS FORWARD Seen collectively, prominent domains of informatics (DeRoure and Goble, 2006) have two key things in common: i) a distinct shift towards systematic methodologies and corresponding shift away from the tight dependence on technologies and ii) the importance of a multi-disciplinary and collaborative approaches. Together, these changes point to a maturing of the discipline itself, with the aim of providing reproducible results, i.e. ones based on a solid underlying set of theoretical concepts rather than trial and error with various technologies. The current evolution in what capabilities are developed today is that many of the information systems theories (Shannon, 1948), semiotics (Peirce 1898), and cognitive and architectural design principles (Dowell and Long, 1998) are all beginning to coalesce in the modern practice of informatics. The key new element is the fourth paradigm of science noted earlier. The problems to be solved are more about integration, crossing disciplines and responding to a much wider variety of Headers will be added later stakeholders (SCCID 2011); those that really need to use many forms of data and information. In practice this means that at the intersection of prior theoretical concepts (almost all of them developed in the analog world of data and information) and real applications is a research environment that is providing fertile for the new breed of informaticists and in turn, data scientists (Hey et al., 2009). Of the many current research challenges, several examples, which are applicable to water/hydro informatics, are discussed below. Where’s the data and what happened to it? One of the most important and increasingly evident challenges arises from the shift of where and how the data are being made available. More and more ‘distance’ in the form of data and information from different computers, undergoing network transmission and format translation means that without very careful curation of these processes, obfuscation results and the probability of information loss increases (Weaver and Shannon, 1963). The present worst-case scenario is that data may be used for a purpose that it is not fit for. While it is highly likely that this situation occurs frequently today, the consequences of incorrect conclusions or erroneous decisions are far reaching, especially when avoidable with a combination of methodological and technical means. The challenge then is to propagate relevant knowledge, information and/or metadata (or pointers to them) along with the data as it a) proceeds through a workflow, b) through an analysis pipeline, c) is extracted, transformed, and loaded into applications or transmitted over the Internet. The ultimate realization then is that an informatics task involves constructing when needed, a sense of ‘state’ from a highly distributed and non-uniform quality, knowledge base. As a whole, this important topic falls under the heading of provenance research, and has grown in significance and understanding over the last ~ 10 years (Buneman et al., 2001; Simmhan et al. 2005). Machine processable provenance also provides and opportunity to instrument the scientific data ‘enterprise’ enabling explanation and verification use cases. Allowing for unintended use? A closely related challenge to the previous one, also focused on legitimate end use, is how to effectively increase the return on scientific investment made in generating and processing data by enabling use in a discipline or application area different from the original purpose? This challenge adds a dimension beyond the view of provenance noted above to include additional information and documentation along the lines of what is intended for digital data preservation. One of the biggest examples has occurred with the proliferation of ‘geobrowser’ applications (e.g. Microsoft Visual Earth tm or Google Earthtm) making immense amounts of previous inaccessible geospatial data (often on hard copy maps or in proprietary Geographic Information Systems (GIS) available and useable for very different purposes (Hayes et al., 2005), e.g. navigation and feature identification or recreation in the case of the general public, all the way to post-natural disaster damage evaluation. Past approaches, which fall into the category of ‘save everything known about the data’ are increasingly untenable and are unlikely to scale at the present rate of increase of data volumes and diversity. While planning for the unknown is difficult, the small number of current strategies used (e.g. using common/ standard data formats and metadata representations) must be augmented. New tools or new again? As informatics approaches to data science start to make their way into the modern design and implementation of information systems, some new opportunities for further enhancing the conduct of science emerge. The most obvious target for change is the user’s (presumably) electronic device that they use to perform a task. Progress with relatively light-weight clients (hand held devices, Web browsers) has meant that people can change the tools they are using for certain tasks relatively frequently, e.g. communicating, seeking information, reviewing it, and perhaps retrieving a sample. This situation is desirable, as the rate of technical change means this market sees the greatest innovation and capability enhancement. However, as science or application research proceeds, different tools are used. Analysis tools such as electronic spread sheets (e.g. Microsoft Exceltm) or fourth generation language tools (e.g. Matlabtm) and some of the most common tools, are the least flexible and least integrated into newer information systems, and most importantly, able (or not) to handle increasing data volumes, complexity and heterogeneity (let alone semantics; see below). Continuing research is needed to understand how common modes of scientific investigation: Headers will be added later analysis, modelling, visualization can be brought closer to the end user and sooner in the process, especially for visualization (Fox and Hendler, 2011). A role for standards? Standards, both community and those certified by national and international bodies (e.g. the International Standards Organization; ISO), are one means for disparate information systems to reliably exchange data and information, over the Internet (Percival, 2010; Woolf, 2010). However, while the development of standards is largely a methodological and technical exercise (organizational and political factors aside), the adoption and/ or implementation of standards is very much a social (and organizational/ political) one. Further, the need to be able to consistently but rapidly evolve standard means of data and information exchange is often hard to achieve without paying attention to the socio-technical aspects of the systems, or systems-of-systems. Again, informatics approaches are a valuable path forward as the pragmatics of end-use and real implementations tends to drive different choices in deciding what needs to be agreed on and what does not (E. Christian, personal communication). Encoding meaning? As noted by Baker et al. (2008), the gap that informatics bridges is that between science and application areas and underlying, discipline-agnostic ICT infrastructure (such as databases, web servers, wikis, etc.). The informatics task then is to model and represent the semantics expressed in the use case, or at the very least in the questions that need answers (Fagin et al., 2005). Traditionally, this knowledge has been embedded in software implementations that operate on data and information, often with convoluted or complex logic and human reasoning. There are many consequences but two prominent ones for this discussion are the inability to present sufficient provenance and explanation as to what happened and why (see earlier discussion; Buneman et al. 2001) and the rigid nature of the encodings which make them harder to maintain, especially as technology changes and different people, with different understandings of the semantics become involved. The latter circumstance highlights the important distinction between closed world and open world semantics, especially for data exchange (Libkin and Sirangelo, 2011). In short, the closed world assumption (CWA) means all knowledge is known and encoded and that which is not encoded is assumed false. A primary example is a relational database schema. In contrast, the open world assumption (OWA) fosters incomplete and evolving knowledge encodings and what is not encoded is simply not known. As a result another shift is occurring toward more explicit (declarative) semantics in the form of ontologies (Gruber, 1993) and consequent use of semantic web technologies (Berners-Lee et al. 2001) with applications in diverse science areas (Fox et al. 2009). The challenges here are numerous (Fox and Hendler, 2009) and include forming and sustaining suitable collaborative teams that include domain and data experts, information and knowledge modellers, and software engineers, balancing what semantic expressivity to encode, with how they will be implemented and used, as well as how they are maintained and/or evolve (see standards discussion). ACKNOWLEDGMENTS The author acknowledges the invitation and support from the conference organizers (CSIRO) and a Research Collaboration agreement between CSIRO/TasICTC and RPI and stimulating research discussions with Stephen Giugni, Kerry Taylor and Andrew Terhorst. The hydroinformatics application contributions to the sensor webs mentioned in this paper are due to Quan Bai, Corne Kloppers, Brad Lee, Qing Liu, Chris Peters and Peter Taylor (and others); they are the informaticists of today. REFERENCES Baker D, Barton C, Peterson W and Fox P (2008) Informatics and the 2007–2008 Electronic Geophysical Year. Eos Trans. AGU 89(48), 485-486. Bittner K and Spence I (2002) Use Case Modeling, Addison-Wesley Professional. Headers will be added later Buneman P, Khanna S and Wang-Chiew T (2001) Why and Where: A Characterization of Data Provenance, Lecture Notes in Computer Science, 1973, 316-330, DOI: 10.1007/3-54044503-X_20 Cockburn A (2000) Writing Effective Use Cases, Addison-Wesley, Boston, MA. De Roure D and Goble C (2009) Software Design for Empowering Scientists, IEEE Software, 26, (1), pp. 88-95. Dowell J and Long J (1998) A conception of the cognitive engineering design problem, Ergonomics , 41 (2) 126 - 139. Fagin R, Kolaitis Ph, Miller R, Popa L (2005) Data exchange: semantics and query answering. Theor. Comput. Sci. 336(1): 89–124. Fox P, McGuinness DL, Cinquini L, West P, Garcia J, Benedict JL and Middleton D (2009) Ontology-supported scientific data frameworks: The Virtual Solar-Terrestrial Observatory experience, Computers & Geosciences, 35 (4), 724-73. Fox P and Hendler J (2009) Semantic eScience: Encoding Meaning in Next-Generation Digitally Enhanced Science, in The Fourth Paradigm: Data Intensive Scientific Discovery, Eds. T. Hey, S. Tansley and K. Tolle, Microsoft External Research, pp. 145-150. Fox P and Hendler (2011) Changing the Equation on Scientific Data Visualization, Science, 331 (6018) pp. 705-708, DOI: 10.1126/science.1197654 online at http://www.sciencemag.org/content/331/6018/705.full Gruber TR (1993) Toward Principles for the Design of Ontologies Used for Knowledge Sharing, Formal Ontology in Conceptual Analysis and Knowledge Representation, eds. N. Guarino and R. Poli, Kluwer Academic Publishers, pp. 1-23. Hayes, GS, Mayers SA and Pensack CE (2005) Using GIS to Find New Uses for Old Data, Government engineering, Sept.-Oct. 2005, pp. 16-19. Hey T, Tansley S and Tolle K, Eds. (2009) The Fourth Paradigm: Data Intensive Scientific Discovery, Microsoft External Research. Jacobson I (1987) Object oriented development in an industrial environment, OOPSLA ‘87: Object-Oriented Programming Systems, Languages and Applications. ACM SIGPLAN, pp. 183-191. Libkin L and Sirangelo C (2011) Data Exchange and Schema Mappings in Open and Closed Worlds, Journal of Computer and System Sciences, 77 (3), 542-571. Nativi S and Fox P (2010) Advocating for the Use of Informatics in the Earth and Space Sciences, Eos Trans. AGU 91(8), 75-76, doi: 10.1029/2010EO080004. Peirce CS (1898) Reasoning and the Logic of Things: The Cambridge Conference Series of 1898, Ed. K Ketner, Harvard University Press (January 1, 1992). Percival G (2010) The Application of Open Standards to Enhance the Interoperability of Geoscience Information, International Journal of Digital Earth, 3, Suppl. 1, 14-30, doi: 10.1080/17538941003792751 Report Of The National Science Foundation Advisory Committee For Cyberinfrastructure Task Force On Cyberlearning And Workforce Development (2011a) http://www.nsf.gov/od/oci/taskforces/ Report Of The National Science Foundation Advisory Committee For Cyberinfrastructure Task Force On Data and Visualization (2011b). Riding the wave (2010) How Europe can gain from the rising tide of scientific data http://www.grdi2020.eu/Pages/Unlock.aspx, Final report of the High Level Expert Group on Scientific Data A submission to the European Commission October 2010. SCCID Interim Report (2011) The International Council for Science’s Strategic Coordinating Committee on Information and Data Interim Report, ICSU, April 2011. On the web at http://www.icsu.org/what-we-do/committees/information-data-sccid/?icsudocid=interim-report Shannon, CE (1948) A Mathematical Theory of Communication, Bell System Technical Journal, 27, pp. 379–423 & 623–656, July & October, 1948. Simmhan YL, Plale B and Gannon D (2005) A survey of data provenance in e-science, ACM SIGMOD, 34 (3), 31-36, doi: 10.1145/1084805.1084812 Weaver W and Shannon CE (1963) The Mathematical Theory of Communication. Univ. of Illinois Press. ISBN 0252725484. Woolf A (2010) Powered by Standards – New Data Tools for the Climate Sciences, International Journal of Digital Earth, 3, Suppl. 1, 85-102, doi: 10.1080/17538941003672268.