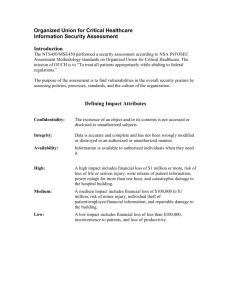

6 Software dependability and safety methods and techniques
