Lab Week 3

Mobile Robotics COM596

Laboratory Session: week 3

Neural Networks for Khepera Robot Control using Matlab

Neural Network Toolbox

The integrated MATLAB environment is shown in the diagram below. You can write your own tools to customize the toolbox or to use alongside the Neural Network Toolbox.

[Diagram labels: Neural Net Toolbox, Simulink, User-written M-files, MATLAB]

Figure 1: Neural Network Toolbox in Matlab environment

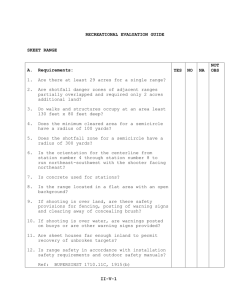

Defining network architecture and training algorithms

A single-layer network with m input elements and n neurons is shown in Figure 2. In this neural network (NN), each input element is connected to each neuron through the weight matrix W. The weight connecting the ith input element to the jth neuron is written as Wji. If an input vector x is applied to the NN, the output vector y will be

$$y = f(Wx + b)$$

where b is the bias vector and f is the activation function.
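The single-layer output y = f(Wx + b) can be checked by hand in plain MATLAB, without the toolbox. This is only a minimal sketch: the sizes (m = 3, n = 2), the weight and bias values, and the choice of tanh as f are illustrative assumptions, not values from this lab.

```matlab
% Forward pass of a single-layer network: y = f(W*x + b)
% All numbers below are made-up illustration values.
m = 3;                      % number of input elements
n = 2;                      % number of neurons
W = [0.2 -0.5  0.1;         % W(j,i): weight from input i to neuron j
     0.4  0.3 -0.2];
b = [0.1; -0.3];            % one bias per neuron (n-by-1)
x = [1; 0; 2];              % input vector (m-by-1)
f = @(a) tanh(a);           % assumed activation function

net_in = W*x + b;           % net input of each neuron (n-by-1)
y = f(net_in);              % output vector (n-by-1)
disp(y)
```

Because W has one row per neuron and one column per input, the product W*x yields one net input per neuron, which matches the Wji indexing used in the text.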

Using the MATLAB function newff(), an architecture can be created with the desired number of layers and neurons.

Different training algorithms are available as functions. An example is given in Exercise 1.

[Diagram: inputs x1 ... xm feed neurons N1 ... Nn through weights w1,1 ... wn,m; neuron j adds bias bj to form netj and produces output Yj = f(netj).]

Figure 2: Single layer feedforward network.

Exercise 1: Define a NN architecture and train with input-output data

Write the following MATLAB code, save it as an M-file, and run it from the command prompt.

%Training set

P = [0 1 2 3 4 5 6 7 8 9 10];

T = [0 1 2 3 4 3 2 1 2 3 4];

%Here a two-layer feed-forward network is created. The network's input ranges from

%[0 to 10]. The first layer has five TANSIG neurons, the second layer has one

%PURELIN neuron. The TRAINGD network training function is to be used.

net = newff([0 10],[5 1],{'tansig' 'purelin'},'traingd');

%Set network parameters as follows
net.trainParam.epochs = 50;       %Maximum number of epochs to train
net.trainParam.goal = 0.1;        %Performance goal
net.trainParam.lr = 0.01;         %Learning rate
net.trainParam.min_grad = 1e-10;  %Minimum performance gradient
net.trainParam.show = 25;         %Epochs between displays
net.trainParam.time = inf;        %Maximum time to train in seconds

%Here the network is trained, then simulated, and its output is plotted against the targets.
net = train(net,P,T);
Y = sim(net,P);
plot(P,T,P,Y,'o')

Exercise 2: Train NN with different training algorithms

To train the NN, different training algorithms can be used. Each has its own features and advantages, as stated below.

traingd – Basic gradient descent learning algorithm. Slow response, but can be used in incremental mode training.

traingdm – Gradient descent with momentum. Generally faster than traingd; can be used in incremental mode training.

traingdx – Adaptive learning rate. Faster training than traingd, but can only be used in batch mode training.

trainrp – Resilient backpropagation. Simple batch mode training algorithm with fast convergence and minimal storage requirements.

trainlm – Levenberg-Marquardt algorithm. Faster training algorithm for networks of moderate size; has a memory reduction feature for use when the training set is large.
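To get a feel for why momentum can speed up gradient descent, the two update rules can be compared on a toy problem in plain MATLAB. This is a rough sketch using the textbook form of the momentum update on a one-dimensional quadratic. It is not the toolbox's implementation of traingd or traingdm, and the error function, the momentum constant mc and the step count are assumptions chosen for illustration.

```matlab
% Minimise E(w) = (w - 3)^2 with two update rules (illustrative values only).
lr = 0.01;                 % learning rate, same value as in Exercise 1
mc = 0.9;                  % momentum constant (assumed)
nSteps = 100;
gradE = @(w) 2*(w - 3);    % dE/dw

w_gd = 0;                  % plain gradient descent
w_mom = 0;  dw = 0;        % gradient descent with momentum
for k = 1:nSteps
    w_gd = w_gd - lr*gradE(w_gd);    % step along the negative gradient
    dw = mc*dw - lr*gradE(w_mom);    % reuse part of the previous step
    w_mom = w_mom + dw;
end
fprintf('distance from minimum: gd = %.4f, momentum = %.4f\n', ...
        abs(w_gd - 3), abs(w_mom - 3));
```

With this small learning rate the momentum version ends closer to the minimum after the same number of steps. On other problems or with other settings the outcome can differ.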

Different activation functions, each with distinct features, can also be used. They are explained in detail in lecture 5. Some of them are

tansig

logsig

purelin
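The shapes of these three functions can be plotted without the toolbox. The sketch below uses their standard definitions. The names ending in "_f" are hypothetical, chosen only to avoid shadowing the toolbox functions of the same name.

```matlab
% Plain-MATLAB versions of the three transfer functions (no toolbox needed).
tansig_f  = @(n) 2./(1 + exp(-2*n)) - 1;   % range (-1, 1), same as tanh(n)
logsig_f  = @(n) 1./(1 + exp(-n));         % range (0, 1)
purelin_f = @(n) n;                        % linear, unbounded

n = -5:0.1:5;                              % net input values to evaluate
plot(n, tansig_f(n), n, logsig_f(n), n, purelin_f(n))
legend('tansig', 'logsig', 'purelin')
xlabel('net input n'); ylabel('output'); ylim([-2 2])
```

The two sigmoid functions saturate for large inputs, while purelin does not, which is one reason purelin is used for the output layer in Exercise 1, where the targets go up to 4.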

Observe how these algorithms and activation functions differ when training on the patterns given in Exercise 1.
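One possible way to organise the comparison is to loop over the training function names and record the final performance of each run. This is a sketch, not a prescribed solution. It assumes the Neural Network Toolbox is installed and that the legacy newff call from Exercise 1 is still accepted (newer releases may print an obsolescence warning). The variable names algs and finalPerf are made up for this example.

```matlab
% Compare training functions on the Exercise 1 data.
% Requires the Neural Network Toolbox (assumption: legacy newff syntax works).
P = [0 1 2 3 4 5 6 7 8 9 10];
T = [0 1 2 3 4 3 2 1 2 3 4];
algs = {'traingd','traingdm','traingdx','trainrp','trainlm'};
finalPerf = zeros(1, numel(algs));

for k = 1:numel(algs)
    net = newff([0 10],[5 1],{'tansig' 'purelin'},algs{k});
    net.trainParam.epochs = 50;     % same limits as Exercise 1
    net.trainParam.goal = 0.1;
    [net, tr] = train(net,P,T);     % tr is the training record
    finalPerf(k) = tr.perf(end);    % performance at the last recorded epoch
    fprintf('%-9s epochs = %3d  final performance = %.4f\n', ...
            algs{k}, tr.epoch(end), finalPerf(k));
end
```

Initial weights are random, so the numbers change from run to run. To compare activation functions instead, keep the training function fixed and replace 'tansig' with 'logsig' in the newff call.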

Exercise 3: Matlab code for obstacle avoidance of Khepera

%This program is running OK avoiding
%obstacles on left/right or in front of
%Date of update 4/7/2009
ref=kopen([0,9600,100]);
for i=1:100
    v=kProximity(ref);      %proximity sensor readings
    u=kAmbient(ref);        %ambient light sensor readings
    d5=v(5);d6=v(6);        %read but not used below
    lvL=u(7);lvR=u(8);
    lv=(lvL+lvR)/2;         %mean of the two ambient readings
    %direction is set by lv
    if(lv<1/15)
        kMoveTo(ref,5,-1)   %move right
        %position in pulses: 1 pulse = 1/12 mm = 0.083 mm
    end
    x=rand(1);
    %rand returns values in the open interval (0,1), so the first
    %branch below is not expected to run
    if(x==0)
        kSetSpeed(ref,5,-1) %move right
    elseif(lv>1/15)
        kSetSpeed(ref,-1,5) %move left
    else
        kSetSpeed(ref,4,-1) %move right
    end
end
kMoveTo(ref,0,0);
kclose(ref);