DDApplication

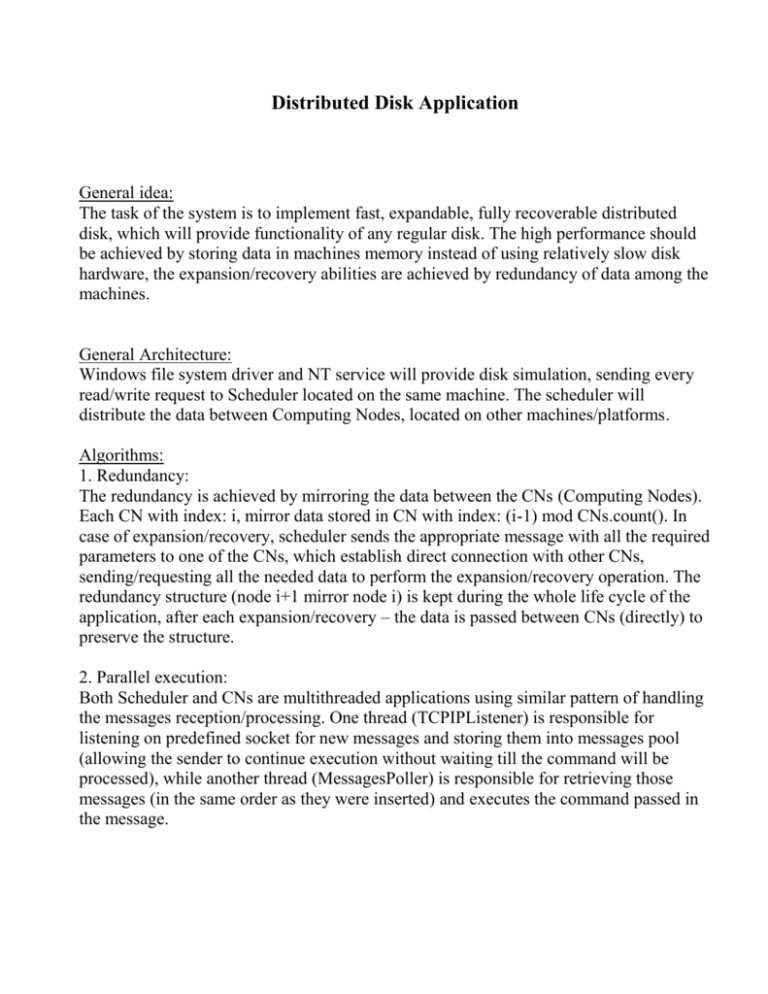

Distributed Disk Application

General idea: The task of the system is to implement a fast, expandable, fully recoverable distributed disk that provides the functionality of any regular disk. High performance is achieved by storing data in the machines' memory instead of on relatively slow disk hardware; expansion and recovery are achieved through redundancy of data among the machines.

General Architecture: A Windows file system driver and an NT service provide the disk simulation, sending every read/write request to a Scheduler located on the same machine. The Scheduler distributes the data among Computing Nodes (CNs) located on other machines/platforms.

Algorithms:

1. Redundancy: Redundancy is achieved by mirroring data between the CNs. Each CN with index i mirrors the data stored in the CN with index (i-1) mod CNs.count(). In case of expansion or recovery, the Scheduler sends the appropriate message, with all the required parameters, to one of the CNs. That CN establishes direct connections with other CNs and sends or requests all the data needed to perform the operation. The redundancy structure (node i+1 mirrors node i) is kept during the whole life cycle of the application: after each expansion or recovery, data is passed directly between CNs to preserve the structure.

2. Parallel execution: Both the Scheduler and the CNs are multithreaded applications that use a similar pattern for receiving and processing messages. One thread (TCPIPListener) listens on a predefined socket for new messages and stores them in a message pool, allowing the sender to continue execution without waiting for the command to be processed. Another thread (MessagesPoller) retrieves those messages in the same order as they were inserted and executes the command passed in each message.
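The listener/poller pattern described under "Parallel execution" can be illustrated with a minimal Python sketch. This is not the application's code: the socket is replaced by an in-memory iterable so the example stays self-contained, and the names `listener`, `poller`, `run`, and the `STOP` sentinel are hypothetical. Only the roles of TCPIPListener and MessagesPoller come from the description above.

```python
import queue
import threading

STOP = object()  # hypothetical sentinel that tells the poller to exit


def listener(incoming, pool):
    """Stand-in for TCPIPListener: store each received message and move on."""
    for message in incoming:
        pool.put(message)  # the sender is not blocked while the command runs
    pool.put(STOP)


def poller(pool, handle):
    """Stand-in for MessagesPoller: process messages in insertion (FIFO) order."""
    while True:
        message = pool.get()
        if message is STOP:
            break
        handle(message)


def run(incoming):
    """Run one listener and one poller over `incoming`; return the processing order."""
    pool = queue.Queue()  # thread-safe FIFO message pool
    processed = []
    threads = [
        threading.Thread(target=listener, args=(incoming, pool)),
        threading.Thread(target=poller, args=(pool, processed.append)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return processed
```

Calling `run(["write b0", "read b0", "write b1"])` returns the messages in the order they were received, because a single poller drains a FIFO queue. A real implementation would read from a TCP socket instead of an iterable.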
Class diagram - messages class hierarchy:

- CommonMessage (abstract class)
  - AckMessage (acknowledgement only)
  - Data Message (read/write data, 1 block)
  - RecoveryExpansionMessage (data needed for recovery/expansion)

Work flow of expansion process: Suppose n active Computing Nodes exist and node n+1 is being added.

[Diagram: Scheduler, CN1, CN2, …, CNn, CNn+1, with arrows numbered 1 to 6 matching the steps below]

1. The Scheduler initializes the new CN (sends the block size and checks the connection).
2. The Scheduler expects an Acknowledge Message in reply.
3. The Scheduler sends a Recovery Expansion Message to CN1, with the Expansion command and the parameters of the last CN.
4. CN1 sends all of its mirrored data (the data of CNn) to CNn+1, since according to the algorithm above node n+1 should mirror node n.
5. CNn+1 sends an acknowledge message to CN1.
6. CN1 sends an acknowledge message to the Scheduler.

Work flow of recovery process: Suppose n active Computing Nodes exist in the system and, while sending a message to node i, the Scheduler discovers that the node has failed.

[Diagram: Scheduler, CN1, …, CNi-1, CNi, CNi+1, CNi+2, …, CNn, with arrows numbered 1 to 6 matching the steps below]

1. The Scheduler sends a "Start Recovery" command to CNi+1 with the addresses of CNi+2 and CNi-1.
2. CNi+1 moves its mirrored data (the data of CNi) into its own data and sends a request to CNi+2 to mirror it.
3. CNi+2 inserts the received data into its mirror and sends an acknowledge message.
4. CNi+1 requests data from CNi-1 (because that data is no longer mirrored).
5. CNi+1 receives the data from CNi-1 and stores it as its mirror.
6. CNi+1 sends an acknowledge message to the Scheduler.

* Note: In the current implementation, the recovery and expansion processes are assumed to be atomic: any failure during either of them may leave the system in an unstable state with unpredictable further behavior.
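The mirroring rule and the two work flows can be checked with a small in-memory model. The Python sketch below is an illustration under stated assumptions, not the application's implementation. Nodes are plain objects in a list instead of networked processes, indices are 0-based, block ids are assumed unique across nodes, and `Node`, `build_ring`, `expand`, `recover`, and `is_consistent` are hypothetical names. The source does not say what CN1 mirrors after an expansion; the sketch assumes it mirrors the new last node, as the (i-1) mod count rule implies.

```python
class Node:
    def __init__(self, name):
        self.name = name
        self.data = {}    # blocks this node owns
        self.mirror = {}  # copy of the predecessor's blocks


def mirror_source(i, count):
    """Index of the node whose data node i mirrors: (i - 1) mod count."""
    return (i - 1) % count


def build_ring(blocks_per_node):
    """Create nodes holding the given blocks and fill in their mirrors."""
    ring = [Node(f"CN{i + 1}") for i in range(len(blocks_per_node))]
    for node, blocks in zip(ring, blocks_per_node):
        node.data = dict(blocks)
    for i, node in enumerate(ring):
        node.mirror = dict(ring[mirror_source(i, len(ring))].data)
    return ring


def is_consistent(ring):
    """True if every node's mirror equals its predecessor's data."""
    return all(
        node.mirror == ring[mirror_source(i, len(ring))].data
        for i, node in enumerate(ring)
    )


def expand(ring, new_node):
    """Append a node; the first node hands it the mirror of the old last node."""
    first = ring[0]
    new_node.mirror = first.mirror      # expansion step 4
    first.mirror = dict(new_node.data)  # assumption: CN1 now mirrors the new node
    ring.append(new_node)


def recover(ring, failed):
    """Drop the failed node at index `failed`; assumes at least 3 nodes."""
    count = len(ring)
    succ = ring[(failed + 1) % count]
    succ2 = ring[(failed + 2) % count]
    pred = ring[(failed - 1) % count]
    succ.data.update(succ.mirror)   # recovery step 2: promote the failed node's data
    succ2.mirror = dict(succ.data)  # steps 2-3: CNi+2 re-mirrors CNi+1
    succ.mirror = dict(pred.data)   # steps 4-5: CNi+1 now mirrors CNi-1
    del ring[failed]
```

For example, after `ring = build_ring([{0: "a"}, {1: "b"}, {2: "c"}])`, calling `expand(ring, Node("CN4"))` and then `recover(ring, 1)` leaves a three-node ring for which `is_consistent(ring)` still holds. The model performs each operation in one uninterrupted call, so it says nothing about failures in the middle of an expansion or recovery.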