Notes on Information Encoding - Systems and Computer Engineering


Course Notes

1.2

Fall 01/02

94.201

Page 1 of 122

Notes on Information Encoding

The values of the state variables in the components of a computer system are fixed-length strings of bits. Each bit is a binary-valued digit. Since the state variable values are binary-valued, all information relevant to a program must be described in a language based on binary values. Using binary values to represent application information is referred to as a binary encoding of the information. Both the data and the algorithm (instructions) associated with a program must be encoded. This section deals with data encoding; the discussion of instruction encoding is deferred until processor architecture and simple programming concepts have been explored.

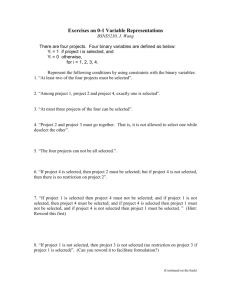

This discussion of information encoding is based on Figure 1. The figure shows two sets of information: application information, and fixed-length, binary-valued strings. The application information has meaning to people and applications. Unfortunately, a computer can only represent information using binary values. Programmers must therefore use a representation mapping to encode application information as binary values, and then write application programs that work with the information in its binary form.

Figure 1: Representing Application Information as Binary Strings (application information → representation mapping → fixed-length, binary-valued strings)

To construct an application, it is necessary to understand how information is encoded. For some types of information, the computer hardware may provide built-in support based on encodings that have become de facto standards (i.e. encodings that have proven over time to be appropriate for data types frequently used in applications). Most computers have built-in support for integers (both signed and unsigned), and provide instructions that manipulate binary strings under integer encoding assumptions.

Built-in support for data types simplifies the task of building applications; however, there are many data types for which the hardware does not have built-in support. In these cases, the programmer must select (or invent) an appropriate encoding and then use general bit manipulation instructions to work with the encoded values.

Copyright Trevor W. Pearce, September 12, 2000

For use in the 94.201 course only – not for distribution outside of the

Department of Systems and Computer Engineering, Carleton University, Ottawa, Canada


This section discusses the standard encodings used for unsigned integers (binary number system encoding), signed integers (2’s complement encoding), and characters (ASCII encoding).

In addition, the signed magnitude encoding of signed integers is discussed as one possible (but rarely used) alternative to 2’s complement encoding.

Binary Values as Strings of 0’s and 1’s

Each bit in a computer system state variable may have the value 0 or 1. If a state variable is viewed as a string of N bits, then there are $2^N$ different values for the string (there are 2 possible values for each digit, and N digits). If you are uncomfortable accepting this mathematical conclusion, convince yourself by considering several cases for increasing values of N. You will eventually conclude that adding a bit to the string doubles the number of possible values (half of the values have the new bit = 0, and half have the new bit = 1). If the number of possible values for N – 1 bits is $2^{N-1}$, then doubling the number by increasing to N bits gives $2 \times 2^{N-1} = 2^N$.
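A quick way to convince yourself of the doubling argument is to let a program do the enumeration. The Python sketch below (the function name `all_bit_strings` is illustrative, not part of these notes) lists every N-bit string for small N and checks that the count is $2^N$.

```python
from itertools import product

def all_bit_strings(n):
    """Return every string of n binary digits, e.g. n=2 -> ['00', '01', '10', '11']."""
    return ["".join(bits) for bits in product("01", repeat=n)]

for n in range(1, 6):
    count = len(all_bit_strings(n))
    # Each added bit doubles the count: 2 x 2^(n-1) = 2^n
    assert count == 2 ** n
    print(n, "bits:", count, "values")
```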

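By itself, a string of 0’s and 1’s has no meaning – it is just a pattern of symbols. Inside a computer system, a string of 0’s and 1’s can have many possible interpretations; for example, the 8-bit string 11111111 represents 255 when interpreted as an unsigned integer, but –1 under the 2’s complement encoding discussed later.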

Fixed-Length Limitation: There is a significant limitation in using fixed-length binary strings to represent information (as shown in Figure 1). The number of bits used in the string limits the number of possible unique patterns of 0’s and 1’s, and therefore limits the number of application values that can be represented by the strings. The fixed-length limitation often constrains encodings to a finite subset, or range, of the application information.

Number Systems

Applications often deal with numbers, so it is useful to consider number systems. The decimal number system is used extensively in everyday life, but it is just one example of a number system. In general, a number system consists of a base and an interpretation rule. The base determines the set of symbols (the digits) that can appear in numbers, and the value of each symbol. The interpretation rule specifies how to interpret a string of digits as a numeric value. The rule usually specifies how to convert the string to a base 10 (decimal) number, since these are the numbers people use most frequently to represent numeric concepts.

Counting Number Example: The number system we use to count things is also referred to as the base 10 system. In this system, there are 10 digits (0, 1, 2, 3, 4, 5, 6, 7, 8, 9) and each symbol has an associated value. For example, the digit 0 represents the value zero. Some might find this overly trivial, but do not confuse the syntactic symbol “0” with the counting concept of zero – the concept is the meaning (the semantics), while the symbol is a language construct (the syntax) used to represent the concept in written communication. Different number systems might use a different symbol to represent the concept zero. A string of base 10 digits can be used to represent a counting number, and the interpretation of the string is based on the positions of the digits in the string. The right-most (least significant) digit is in position 0, and in a string of I digits, the left-most (most significant) digit is in position I–1. The string represents a summation of terms in which the coefficient of each term is the value of the corresponding digit, and the coefficient is multiplied by a power of 10. The exponent of each term is determined by the position of the digit in the string. The 4-digit string $D_3 D_2 D_1 D_0$ represents the counting number obtained from the sum:

$$D_3 \times 10^3 + D_2 \times 10^2 + D_1 \times 10^1 + D_0 \times 10^0$$

For example, the string of base 10 digits 2458 represents the number obtained from the summation:

$$2 \times 10^3 + 4 \times 10^2 + 5 \times 10^1 + 8 \times 10^0$$

= two thousand, four hundred and fifty-eight

The example may seem trivial, since it involves base 10 representation – perhaps the examples for base 2 and base 16 will be more instructive.

General Base-N Number System

The base 10 number system discussed above can be generalized to an arbitrary base N. All that is required is a mapping from the base symbols to counting values, and a generalization of the interpretation rule. In the generalized interpretation rule, the ith term of the summation is:

$$(\text{counting value of the } i\text{th digit}) \times (\text{base 10 value of the base})^{i}$$

where i starts at 0 for the right-most digit.
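The generalized rule translates directly into a short loop. The Python sketch below is illustrative only: `DIGIT_VALUES` plays the role of the mapping from base symbols to counting values, and `digits_to_value` is a hypothetical name. It accepts the digits 0 through 9 and A through F, so it covers any base up to 16, including the binary and hexadecimal examples in this section.

```python
# Mapping from base symbols to counting values: '0' -> 0, ..., 'F' -> 15
DIGIT_VALUES = {sym: val for val, sym in enumerate("0123456789ABCDEF")}

def digits_to_value(digits, base):
    """Interpret a digit string in the given base using the positional rule."""
    total = 0
    # Walk from the right-most digit (position i = 0) to the left-most.
    for i, sym in enumerate(reversed(digits.upper())):
        value = DIGIT_VALUES[sym]
        if value >= base:
            raise ValueError(f"digit {sym!r} is not valid in base {base}")
        total += value * base ** i   # counting value x base^i
    return total

print(digits_to_value("2458", 10))   # 2458
print(digits_to_value("10011", 2))   # 19
print(digits_to_value("A0D3", 16))   # 41171
```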

Notation: Where there is possible confusion about the base that pertains to a string of digits, the string will be followed by a subscript indicating the appropriate base. For example, $2458_{10}$ indicates base 10 representation.

Binary Number System

The binary number system is an instance of the general base N system in which N = 2. In this number system there are only two symbols: 0 and 1. The symbols have their usual decimal interpretations (i.e. zero and one, respectively).

Example: $10011_2$

Applying the interpretation rule (to obtain a base 10 value) gives:

$$1 \times 2^4 + 0 \times 2^3 + 0 \times 2^2 + 1 \times 2^1 + 1 \times 2^0 = 16 + 0 + 0 + 2 + 1 = 19$$

Therefore, $10011_2 = 19_{10}$.


Hexadecimal Number System

The hexadecimal number system is an instance of the general base N system where N = 16. In this number system the symbols are 0 through 9 (with the usual counting value interpretations), and A through F with the following counting value interpretations:

A = 10, B = 11, C = 12, D = 13, E = 14, F = 15

Example: $A0D3_{16}$

Applying the interpretation rule gives:

$$A \times 16^3 + 0 \times 16^2 + D \times 16^1 + 3 \times 16^0$$

Substituting counting values gives:

$$10 \times 16^3 + 0 \times 16^2 + 13 \times 16^1 + 3 \times 16^0 = 10 \times 4096 + 0 + 13 \times 16 + 3 = 41{,}171_{10}$$

Using Hexadecimal as a Shorthand for Binary

As mentioned previously, computer system state variables are binary valued. Machine-level programming requires references to state variable values, and this leads to the need to refer to binary values in discussions. The length of binary numbers makes them awkward to say and write, and error prone to use. It might be tempting to use decimal numbers as a shorthand representation for binary numbers. In the above example, the 5-digit binary value $10011_2$ corresponds to the 2-digit value $19_{10}$, and it is much easier to talk about the number “nineteen base ten” than the number “ten thousand and eleven base two”. Unfortunately, the conversion between binary and decimal representation is not intuitively obvious, and requires calculations. As a result, decimal numbers are awkward to use for this purpose.

Fortunately, the conversion between binary and hexadecimal representation is intuitively simple and does not require calculation. Therefore, it is convenient to use hexadecimal numbers as a shorthand representation for binary numbers.

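Consider the following 12-digit binary value:

$$b_{11}\, b_{10}\, b_9\, b_8\, b_7\, b_6\, b_5\, b_4\, b_3\, b_2\, b_1\, b_0$$

This is interpreted as:

$$b_{11} \times 2^{11} + b_{10} \times 2^{10} + b_9 \times 2^9 + b_8 \times 2^8 + b_7 \times 2^7 + b_6 \times 2^6 + b_5 \times 2^5 + b_4 \times 2^4 + b_3 \times 2^3 + b_2 \times 2^2 + b_1 \times 2^1 + b_0 \times 2^0$$

which can be rewritten as:

$$(b_{11} \times 2^3 + b_{10} \times 2^2 + b_9 \times 2^1 + b_8 \times 2^0) \times 2^8 + (b_7 \times 2^3 + b_6 \times 2^2 + b_5 \times 2^1 + b_4 \times 2^0) \times 2^4 + (b_3 \times 2^3 + b_2 \times 2^2 + b_1 \times 2^1 + b_0 \times 2^0) \times 2^0$$

But $2^4 = 16$, so $2^8 = 16^2$, $2^4 = 16^1$ and $2^0 = 16^0$, and therefore the expression can be rewritten as:

$$(b_{11} \times 2^3 + b_{10} \times 2^2 + b_9 \times 2^1 + b_8 \times 2^0) \times 16^2 + (b_7 \times 2^3 + b_6 \times 2^2 + b_5 \times 2^1 + b_4 \times 2^0) \times 16^1 + (b_3 \times 2^3 + b_2 \times 2^2 + b_1 \times 2^1 + b_0 \times 2^0) \times 16^0$$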


These are the first three terms of the interpretation rule for base 16 values: each parenthesized group of 4 bits supplies the counting value of one hexadecimal digit. Therefore, if there is an easy way to convert between 4-bit binary values and hexadecimal digits, then there is an easy way to convert between strings of binary digits and strings of hexadecimal digits. It turns out that the conversion is simple:

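| Bin | Hex | Bin | Hex | Bin | Hex | Bin | Hex |
|------|-----|------|-----|------|-----|------|-----|
| 0000 | 0 | 0100 | 4 | 1000 | 8 | 1100 | C |
| 0001 | 1 | 0101 | 5 | 1001 | 9 | 1101 | D |
| 0010 | 2 | 0110 | 6 | 1010 | A | 1110 | E |
| 0011 | 3 | 0111 | 7 | 1011 | B | 1111 | F |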

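The conversion from binary to hex can be accomplished by starting at the right end of the binary string and replacing successive groups of 4 binary digits with their corresponding hex digits (if the number of bits is not a multiple of 4, the left-most group is padded with leading 0’s). For example:

$$1001\ 1111\ 0011\ 0110_2 = 9F36_{16}$$

where 1001 → 9, 1111 → F, 0011 → 3, and 0110 → 6.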

The conversion from hex to binary can be accomplished by replacing each hex digit with its corresponding 4-bit binary value. For example:

$$80C5_{16} = 1000\ 0000\ 1100\ 0101_2$$
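Because each hex digit stands for exactly 4 bits, both directions reduce to a table lookup per group. The Python sketch below builds the lookup table from the 16-row Bin/Hex table; the function names are hypothetical, and `bin_to_hex` pads the left end with 0’s so that grouping from the right always yields complete 4-bit groups.

```python
# The Bin/Hex table as a dictionary: '0000' -> '0', ..., '1111' -> 'F'
NIBBLE_TO_HEX = {format(v, "04b"): d for v, d in enumerate("0123456789ABCDEF")}
HEX_TO_NIBBLE = {d: nibble for nibble, d in NIBBLE_TO_HEX.items()}

def bin_to_hex(bits):
    """Group bits in 4's from the right and replace each group with a hex digit."""
    bits = bits.replace(" ", "")
    # Pad on the left so the left-most group also has 4 bits.
    bits = bits.zfill((len(bits) + 3) // 4 * 4)
    groups = [bits[i:i + 4] for i in range(0, len(bits), 4)]
    return "".join(NIBBLE_TO_HEX[g] for g in groups)

def hex_to_bin(hex_digits):
    """Replace each hex digit with its 4-bit binary value."""
    return " ".join(HEX_TO_NIBBLE[d] for d in hex_digits.upper())

print(bin_to_hex("1001 1111 0011 0110"))  # 9F36
print(hex_to_bin("80C5"))                 # 1000 0000 1100 0101
```

No arithmetic is needed in either direction, which is exactly why hexadecimal works well as a shorthand for binary.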

Hexadecimal values are used frequently in programs, and the 16 subscript is awkward in simple text editors. To solve this problem, hexadecimal values are often denoted by appending the character 'H' (or 'h') to the digits. For example, $80C5_{16}$ might be written as 80C5H (or 80C5h).

Binary Encoding of Unsigned Integers

The set of unsigned integers is the set of counting numbers: {0, 1, 2, 3, 4, 5, … }

The unsigned integers can also be visualized on a number line:

0 1 2 3 4 5 …


Computer applications often involve unsigned integer values, and an encoding of the values is needed. (Recall from Figure 1 that a binary encoding is a representation mapping between application information and fixed-length binary strings.) Most computers have built-in support for unsigned integers under the binary number system encoding, i.e. binary strings are interpreted as unsigned integers using the base 2 number system interpretation rule described earlier.

The fixed-length limitation (recall previous discussion) constrains the range of unsigned integers that can be represented. If N-bit binary strings are used, then there are $2^N$ unique binary values that can be used in the encoding. It might be tempting to conclude that $2^N$ is the largest unsigned integer value that could be encoded; however, this would not account for the encoding of the value 0. Since one of the binary values (the string consisting of all 0’s) is used to represent 0, there are $2^N - 1$ values left to encode values greater than 0. Therefore, under the binary number system interpretation, N-bit strings can encode unsigned integer values in the range:

$$0 \ldots 2^N - 1$$

For example, 8-bit strings can encode the unsigned integers 0 to 255.

In terms of the number line visualization, the fixed-length limitation truncates the number line to a finite range:

0 1 2 3 … $2^N - 1$

Representing only a finite range of unsigned integer values creates the potential for problems when performing arithmetic operations on the binary encoded values. For example, what happens if adding two values together results in an answer that is outside of the finite range (e.g. adding 1 to $2^N - 1$)? This problem is referred to as overflow and is a major concern when performing computer arithmetic.

The conversion from binary values to unsigned integer values is reasonably straightforward: it follows the binary number system interpretation rule described previously. There are several possible algorithms for converting unsigned values to binary representations. The algorithm shown below generates the bits one at a time, beginning with the least significant bit and ending with the most significant bit. In the algorithm, assume that div is an integer division operation (i.e. it ignores any remainder, for example: 5 div 2 = 2), and mod is a modulus operation that results in only the remainder of a division (for example: 5 mod 2 = 1).

An algorithm for converting an unsigned integer to an N-bit representation (following the previous convention of labeling the least significant bit as $b_0$):

    value = value to be converted
    for ( i = 0; i < N; i++ )
    {
        b[i] = value mod 2;     // bit i, starting with the least significant bit
        value = value div 2;    // value remaining to be converted
    }


In the algorithm, each iteration through the loop generates the value of one bit and reduces the value remaining to be converted. Try a few values to convince yourself that the algorithm works!
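To try values quickly, here is a Python rendering of the algorithm, assuming Python’s `//` (floor division) and `%` operators play the roles of div and mod; for non-negative values they behave exactly as defined above. The function name and the range check are additions for illustration.

```python
def to_unsigned_bits(value, n):
    """Return the n-bit binary string for an unsigned value, most significant bit first."""
    if not 0 <= value <= 2 ** n - 1:
        raise ValueError(f"{value} does not fit in {n} unsigned bits")
    b = [0] * n
    for i in range(n):           # i = 0 generates the least significant bit b0
        b[i] = value % 2         # bi = value mod 2
        value = value // 2       # value = value div 2
    # b[0] is the least significant bit, so reverse for the usual left-to-right order.
    return "".join(str(bit) for bit in reversed(b))

print(to_unsigned_bits(19, 5))    # 10011
print(to_unsigned_bits(255, 8))   # 11111111
```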

Binary Encoding of Signed Integers

The set of signed integers is: { … , –3, –2, –1, 0, 1, 2, 3, … }

The signed integers can also be visualized on a number line:

… –3 –2 –1 0 1 2 3 …

Notes: The unsigned integers are a subset of the signed integers. Numbers to the left of zero are negative, while numbers to the right are positive. 0 is neither positive nor negative.

Now consider the problem of encoding signed integers as binary values. The binary number system interpretation will not (by itself) be sufficient, since it does not account for the possibility of negative numbers. Two encoding schemes will be presented; however, it is the 2’s complement method that is most frequently used in typical computer systems (and by most high-level language compilers).

Method 1: Signed Magnitude Encoding

A simple solution to encoding signed integer values in a fixed-length sequence of bits would be to use one bit to encode the sign, and the remaining bits to encode the unsigned magnitude using the binary number system interpretation (as discussed previously).

To illustrate the signed magnitude approach, consider 8-bit values, and assume that the most significant bit ($b_7$) is used to encode the sign. Let $b_7$ = 1 denote negative values, and $b_7$ = 0 denote positive values. Using this scheme, the following values would result:

| Signed Integer Value (decimal) | 8-Bit Signed Magnitude Encoding |
|---|---|
| 1 | 0 0000001 |
| 0 | 0 0000000 |
| –1 | 1 0000001 |
| 127 | 0 1111111 |
| –20 | 1 0010100 |

The primary advantage of the scheme is that the encoded binary values can easily be interpreted as signed values. There are, unfortunately, several disadvantages, including:


Two representations for zero. Under the scheme, there are two encodings for zero:

| Encoding | Interpretation |
|---|---|
| 0 0000000 | positive zero ($b_7$ = 0) |
| 1 0000000 | negative zero ($b_7$ = 1) |

The negative encoding for zero is not ideal, since zero is not usually thought of as being negative (zero is not really positive either – positive numbers are greater than zero). Furthermore, having two encodings for the same signed integer value means that one of the encoded values is redundant (i.e. it could have been used to encode some other value).
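The signed magnitude scheme, including its two zeros, can be sketched in Python as follows. The helper names are hypothetical; as in the 8-bit table above, the most significant bit is the sign and the remaining N - 1 bits hold the magnitude in binary.

```python
def sm_encode(value, n=8):
    """Signed magnitude: sign bit (1 = negative) followed by an (n-1)-bit magnitude."""
    magnitude = abs(value)
    if magnitude > 2 ** (n - 1) - 1:
        raise ValueError(f"{value} does not fit in {n}-bit signed magnitude")
    sign = "1" if value < 0 else "0"
    return sign + format(magnitude, f"0{n - 1}b")

def sm_decode(bits):
    """Interpret a signed magnitude bit string as an integer."""
    magnitude = int(bits[1:], 2)
    return -magnitude if bits[0] == "1" else magnitude

print(sm_encode(-20))          # 10010100
print(sm_decode("00000000"))   # 0  (positive zero)
print(sm_decode("10000000"))   # 0  (negative zero decodes to the same value)
```

Note that `sm_encode(0)` only ever produces positive zero, yet the pattern 10000000 still exists and decodes to 0, so one of the 256 patterns is wasted.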

Method 2: 2’s Complement Encoding

The method used in most computers to represent signed integers is the 2’s complement encoding. The method relies on the ability to perform the 2’s complement operation on binary values. The operation first requires forming the complement of each bit, and then adding 1 to the result. The complement of a bit is obtained by inverting its value (i.e. the complement of 0 is 1, and the complement of 1 is 0). Binary addition is carried out in a bitwise fashion, with the following rules:

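| Addition | Sum bit | Carry |
|---|---|---|
| 0 + 0 | 0 | 0 |
| 0 + 1 | 1 | 0 |
| 1 + 0 | 1 | 0 |
| 1 + 1 | 0 | 1 (i.e. the result is 10) |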

For the last case, the carry is propagated to the next most significant bit.
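Applying these rules from right to left, with the carry from each position fed in as a third input to the next, gives ripple-carry addition. The Python sketch below (the function name is illustrative) works on equal-length bit strings, keeps a result of the same length, and reports the final carry out of the most significant bit separately.

```python
def add_bits(x, y):
    """Add two equal-length bit strings; return (sum truncated to that length, final carry)."""
    if len(x) != len(y):
        raise ValueError("operands must have the same number of bits")
    result = []
    carry = 0
    # Start at the least significant (right-most) bit.
    for a, b in zip(reversed(x), reversed(y)):
        column = int(a) + int(b) + carry   # 0, 1, 2 or 3
        result.append(str(column % 2))     # sum bit for this position
        carry = column // 2                # carry into the next position
    return "".join(reversed(result)), carry

print(add_bits("0011", "0001"))   # ('0100', 0)
print(add_bits("1111", "0001"))   # ('0000', 1)
```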

For example, consider the 2’s complement operation on the 8-bit value 01101011:

| Step | Value |
|---|---|
| original value | 01101011 |
| each bit complemented | 10010100 |
| add 1 | + 1 |
| 2’s complement of 01101011 | 10010101 |
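A direct Python transcription of the operation follows: complement each bit, then add 1, keeping only the original number of bits. The name `twos_complement` is a hypothetical helper, and the add-1 step uses integer arithmetic as a shortcut for bitwise addition.

```python
def twos_complement(bits):
    """Complement every bit, add 1, and keep only len(bits) least significant bits."""
    n = len(bits)
    complemented = "".join("1" if b == "0" else "0" for b in bits)
    # Adding 1 may carry out of the left-most bit; the modulo discards that carry.
    value = (int(complemented, 2) + 1) % (2 ** n)
    return format(value, f"0{n}b")

print(twos_complement("01101011"))   # 10010101
```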

The 2’s complement operation by itself does not define the mapping of binary strings to signed integers. Since the unsigned integers are a subset of the signed integers, it would be convenient to use the same binary values to represent the same integer values under both interpretations. Therefore, zero and all positive integers should be represented by the same binary values under both the unsigned and signed integer interpretations. What remains is the definition of the mapping of binary values to negative integers.

Under the 2’s complement interpretation, the binary value representing the negation of a value can be obtained by applying the 2’s complement operation to the original value. For example, the 8-bit representation of –1 can be obtained by applying the 2’s complement operation to the 8-bit representation of +1:

| Step | Value |
|---|---|
| +1 | 00000001 |
| each bit complemented | 11111110 |
| add 1 | + 1 |
| 2’s complement representation of –1 | 11111111 |


In the 2’s complement encoding, it turns out that the representations of all non-negative numbers (i.e. zero and all positive numbers) have the most significant bit = 0, while the representations of all negative numbers have the most significant bit = 1. It might be tempting to think that this is the same as the sign bit used in the signed magnitude encoding described earlier; however, this is not the case! In 2’s complement encoding, all bits, including the most significant bit, are relevant to the magnitude of the value being represented. For example, 11111111 represents –1 under 2’s complement encoding, whereas under signed magnitude encoding the same string would represent –127.

The fixed-length limitation imposes a range on the signed integer values that can be represented using 2’s complement encoding. For N-bit binary strings, half of the $2^N$ binary values have the most significant bit = 1, and therefore represent negative integers:

$$\tfrac{1}{2} \times 2^N = 2^{N-1} \text{ binary values represent negative integers}$$

Therefore, the smallest negative integer that can be represented (i.e. the negative integer with the largest magnitude) is $-2^{N-1}$, and there are $2^{N-1}$ binary values left to represent non-negative integers. Since one binary value represents zero, the largest positive integer that can be represented is $2^{N-1} - 1$. For example, with N = 8 the range is –128 to +127.
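The range can be checked by brute force. The Python sketch below interprets each N-bit pattern using a weighting that these notes do not state but that is a standard, equivalent property of 2’s complement: the most significant bit carries weight $-2^{N-1}$, and every other bit carries its usual positive weight. This also illustrates the earlier point that all bits, including the most significant one, contribute to the value. The function names are hypothetical.

```python
from itertools import product

def twos_value(bits):
    """Value of a 2's complement bit string: the MSB has weight -2^(N-1)."""
    n = len(bits)
    value = -int(bits[0]) * 2 ** (n - 1)
    for i, b in enumerate(bits[1:], start=1):
        value += int(b) * 2 ** (n - 1 - i)
    return value

def twos_range(n):
    """Enumerate all n-bit patterns; return (min, max, count of negative values)."""
    values = [twos_value("".join(p)) for p in product("01", repeat=n)]
    return min(values), max(values), sum(v < 0 for v in values)

print(twos_range(8))   # (-128, 127, 128)
```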

In terms of the number line visualization, the fixed-length limitation truncates the number line to a finite range:

$-2^{N-1}$ … –2 –1 0 1 2 … $2^{N-1} - 1$

For non-negative integers, the conversion between binary values and signed integers follows the binary number system interpretation (as for unsigned integers). The conversion is not as obvious for negative integers. To form the binary value that represents a negative integer, first form the unsigned representation of the magnitude (as done for unsigned integers), and then perform the 2’s complement operation to obtain the representation of the negation of the value. This process was illustrated above to obtain the representation of –1. To obtain the negative integer represented by a binary value, first perform the 2’s complement operation to obtain the representation of the magnitude, and then calculate the magnitude (as done for non-negative values) – but remember that the result is a negative value!

For example, consider the integer value represented by the 8-bit binary value 11111111. Since the most significant bit = 1, the integer value is negative. To find the magnitude, the 2’s complement of the value must be taken:

| Step | Value |
|---|---|
| original value | 11111111 |
| each bit complemented | 00000000 |
| add 1 | + 1 |
| 2’s complement of 11111111 | 00000001 |

The 2’s complement result is easily identified as the magnitude 1; therefore, the original binary value represents the signed integer –1.
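The two conversion procedures just described can be sketched in Python as follows. The names are hypothetical; `negate` is the complement-and-add-1 operation truncated to N bits, and values outside the range $-2^{N-1} \ldots 2^{N-1} - 1$ are rejected.

```python
def negate(bits):
    """2's complement operation: complement each bit, add 1, keep len(bits) bits."""
    n = len(bits)
    flipped = "".join("1" if b == "0" else "0" for b in bits)
    return format((int(flipped, 2) + 1) % 2 ** n, f"0{n}b")

def encode_signed(value, n=8):
    """Signed integer -> n-bit 2's complement string."""
    if not -2 ** (n - 1) <= value <= 2 ** (n - 1) - 1:
        raise ValueError(f"{value} is outside the {n}-bit 2's complement range")
    magnitude_bits = format(abs(value), f"0{n}b")   # unsigned representation of |value|
    return negate(magnitude_bits) if value < 0 else magnitude_bits

def decode_signed(bits):
    """n-bit 2's complement string -> signed integer."""
    if bits[0] == "0":
        return int(bits, 2)                # non-negative: plain binary interpretation
    return -int(negate(bits), 2)           # negative: magnitude is the 2's complement

print(encode_signed(-1))          # 11111111
print(decode_signed("11111111"))  # -1
```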


It is interesting to compare the two examples given above. The first example obtained the encoding for –1 by taking the 2’s complement of +1 (i.e. by negating the representation of +1). The second example negated the resulting representation of –1, and arrived back at the original representation of +1 (as introduced in the first example). The two examples show the consistency of the negation operation:

$$\text{negation}(\text{negation}(+1)) = +1$$

Consider several more interesting cases:

Recall that one of the problems with the signed magnitude encoding was that it resulted in two representations of the value zero. Consider the 2’s complement of the 8-bit value 00000000:

| Step | Value |
|---|---|
| original value | 00000000 |
| each bit complemented | 11111111 |
| add 1 | + 1 |
| result | 1 00000000 |

The addition of 1 to the complemented value has propagated a carry through all the bits to create a 9-bit value! The use of fixed-length binary strings creates problems here, since the 9-bit result cannot be represented by an 8-bit value. As a rule, when performing arithmetic on fixed-length binary strings of length N, the result is truncated to keep only the N least significant bits of the answer. (The carry out of the most significant bit is sometimes relevant and will be considered further in the discussion of overflow.)

Truncating the 9-bit answer obtained above to keep the least significant 8 bits gives 00000000, which is the representation of 0. Therefore, the negation of 0 is 0 (which is mathematically correct), and there is only one representation for the value zero.

Now consider the largest positive value that can be represented by an 8-bit value under 2’s complement encoding. From the previous discussion of the signed integer range, the largest integer is $2^{8-1} - 1 = 127_{10} = 01111111_2$. Negating this value gives:

| Step | Value |
|---|---|
| original value | 01111111 |
| each bit complemented | 10000000 |
| add 1 | + 1 |
| result | 10000001 = $-127_{10}$ |

Subtracting 1 from this value gives:

| Step | Value |
|---|---|
| original value | 10000001 |
| subtract 1 | – 1 |
| result | 10000000 = $-128_{10}$ (i.e. the value $-2^{8-1}$) |

Now what should happen if we negate this value? Should we get the representation of +128? (We should be surprised if we do, since +127 is the largest value that can be represented!) Try it and see what happens!
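The special cases above (negating 0, +127, –127 and –128) can be replayed with fixed-length truncation. This Python sketch (the helper name `negate8` is hypothetical) keeps only the 8 least significant bits, as the truncation rule requires. Running it answers the question just posed, so you may want to work it by hand first.

```python
def negate8(bits):
    """8-bit 2's complement negation; the carry out of bit 7 is discarded."""
    if len(bits) != 8:
        raise ValueError("expected an 8-bit string")
    flipped = "".join("1" if b == "0" else "0" for b in bits)
    full = int(flipped, 2) + 1          # may need 9 bits, e.g. 11111111 + 1
    return format(full % 256, "08b")    # truncate to the 8 least significant bits

for bits in ["00000000", "01111111", "10000001", "10000000"]:
    print(bits, "->", negate8(bits))
# 00000000 -> 00000000
# 01111111 -> 10000001
# 10000001 -> 01111111
# 10000000 -> 10000000
```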


Binary Encoding of Characters

Applications often deal with information in the form of textual characters (e.g. output to the display or a printer). Since the state variables of the computer system only hold binary values, the characters must also have binary encodings. The 7-bit ASCII encoding scheme is widely used in practice (ASCII = American Standard Code for Information Interchange). The encoding is shown in the attached ASCII table. In the encoding, each character is represented by a 7-bit binary value. This encoding is often extended to form an 8-bit value by setting $b_7$ = 0. The table lists hexadecimal values instead of binary values (recall: hexadecimal notation is used as shorthand for binary notation).

Several points are worth noting: the encoding for the character '0' (character zero) is 30H (not 00H); a blank space has a representation in the encoding (20H); and upper and lower case letters have different encodings (e.g. 'A' is encoded as 41H, while 'a' is encoded as 61H).

The table shows the encodings for displayable characters, but there are several non-displayable encodings worth noting:

| Character | Encoding |
|---|---|
| carriage return | 0DH |
| line feed | 0AH |

These codes are often used to control the cursor position on the display, and the print head on a printer.
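Python’s built-in `ord` and `chr` map between characters and their code values, so the points above can be checked directly. The helper names below are hypothetical. The sketch also shows two properties that follow from the table layout: a decimal digit character minus 30H gives its counting value, and for letters, upper and lower case differ only in the bit with value 20H.

```python
# Code values from the notes, written as Python hex literals (30H -> 0x30).
assert ord("0") == 0x30 and ord(" ") == 0x20
assert ord("A") == 0x41 and ord("a") == 0x61
assert ord("\r") == 0x0D and ord("\n") == 0x0A   # carriage return, line feed

def digit_value(ch):
    """Counting value of a decimal digit character, e.g. '7' -> 7."""
    if not "0" <= ch <= "9":
        raise ValueError(f"{ch!r} is not a decimal digit")
    return ord(ch) - 0x30

def to_8bit(ch):
    """7-bit ASCII code extended to 8 bits with b7 = 0, as a binary string."""
    code = ord(ch)
    if code > 0x7F:
        raise ValueError(f"{ch!r} is not a 7-bit ASCII character")
    return format(code, "08b")

print(digit_value("7"))        # 7
print(to_8bit("A"))            # 01000001
print(chr(ord("a") ^ 0x20))    # A  (flipping the 20H bit changes the case of a letter)
```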


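ASCII Table (for displayable 7-bit ASCII character set)

| hex | char | hex | char | hex | char |
|---|---|---|---|---|---|
| 20 | blank space | 40 | @ | 60 | ` |
| 21 | ! | 41 | A | 61 | a |
| 22 | " | 42 | B | 62 | b |
| 23 | # | 43 | C | 63 | c |
| 24 | $ | 44 | D | 64 | d |
| 25 | % | 45 | E | 65 | e |
| 26 | & | 46 | F | 66 | f |
| 27 | ' | 47 | G | 67 | g |
| 28 | ( | 48 | H | 68 | h |
| 29 | ) | 49 | I | 69 | i |
| 2A | * | 4A | J | 6A | j |
| 2B | + | 4B | K | 6B | k |
| 2C | , | 4C | L | 6C | l |
| 2D | - | 4D | M | 6D | m |
| 2E | . | 4E | N | 6E | n |
| 2F | / | 4F | O | 6F | o |
| 30 | 0 | 50 | P | 70 | p |
| 31 | 1 | 51 | Q | 71 | q |
| 32 | 2 | 52 | R | 72 | r |
| 33 | 3 | 53 | S | 73 | s |
| 34 | 4 | 54 | T | 74 | t |
| 35 | 5 | 55 | U | 75 | u |
| 36 | 6 | 56 | V | 76 | v |
| 37 | 7 | 57 | W | 77 | w |
| 38 | 8 | 58 | X | 78 | x |
| 39 | 9 | 59 | Y | 79 | y |
| 3A | : | 5A | Z | 7A | z |
| 3B | ; | 5B | [ | 7B | { |
| 3C | < | 5C | \ | 7C | \| |
| 3D | = | 5D | ] | 7D | } |
| 3E | > | 5E | ^ | 7E | ~ |
| 3F | ? | 5F | _ | 7F | non-displayable |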
