Ecology Laboratory

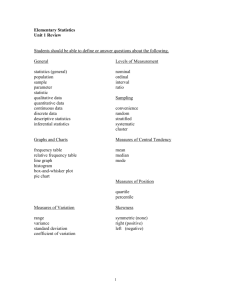

Statistics - A Survival Manual

Part 1 - Measures Of Central Tendency

"What's the price of bacon?" "How is the weather in Southern California this time of year?" "How tall does the Douglas fir grow?" These are examples of questions that are really looking for a figure that is representative of the total population and gives a fairly good general impression: a measure of central tendency. Some form of "average" is generally used to provide the answer. The mean and median are the most common measures of central tendency.

Mean

The mean, occasionally called the arithmetic mean, is the most common measure of central tendency. It allows the value (magnitude) of each score in a sample to influence the final measure. To obtain a mean, all of the observations (X) in a sample are added together, then that sum is divided by the total number (n) of observations in the sample.

$$\text{Mean} = \frac{\sum X}{n}$$

Median

The median is a second measure of central tendency. Unless you have a particularly large group of numbers, this is a rather simple measure. To determine the median, sort your data so the observations either increase or decrease in value. The median is then simply the middle observation in your data set.

If the number of observations in your data set is odd, the middle observation can be found by dividing the total number of observations by two, then rounding the resulting number up to the nearest whole number. For example, if you have 25 observations in your data set, the median would be obtained by dividing 25 by 2, giving 12.5. Rounding up to the nearest whole number gives 13, making observation 13 in your ordered data set the median value for your sample.

If the number of observations in the data set is even, the median is obtained by averaging the values for the middle two observations after the data set has been ordered. For example, in a data set with 24 values, the middle two observations would be observations 12 and 13.
If the value for observation 12 was "100" and the value for observation 13 was "102", the median for the data set would be "101".

Part 2 - Measures Of Variability Or Dispersion

Means and medians are expressions of central tendency. They provide a general picture of what a group of numbers is like; however, measures of central tendency can be misleading. For example, let us say the average height of two basketball teams is 180 cm. On team A, all of the players are exactly 180 cm, but on team B, one is 180 cm, two are 160 cm and two are 200 cm. Knowledge of this variation, or dispersion from the mean, would be meaningful to either coach.

A number of measures of dispersion are in common use. The most common are the range and the interquartile range (used in conjunction with the median) and the variance, standard deviation and coefficient of variation (used in conjunction with the mean).

The Range

When the nature of the distribution is not known, or when the distribution is known to be other than normal (e.g., skewed), the range will give a rough idea of the dispersion. The range is the difference between the high and low scores. The range does not tell us anything about the nature of the distribution, only its extent. If our sample observations were 18, 17, 17, 12, 11, 11, the range would be 7 (18 - 11 = 7).

The Interquartile Range

Quartiles, like the median, represent points in an ordered set of observations. The first quartile (Q1) is a point below which 25% of the observations fall; 50% of the cases fall below Q2 and 75% below Q3. The interquartile range includes those 50% of the scores that fall between Q1 and Q3. When the distribution of numbers is not normal because of extremes on either or both ends, an inspection of the middle 50% may prove to be most revealing in describing a group of numbers. Inspect the following set of ordered data.

| Obs. # | Value | Obs. # | Value |
|---|---|---|---|
| 1 | 10 | 11 | 35 |
| 2 | 10 | 12 | 39 |
| 3 | 11 | 13 | 42 |
| 4 | 13 | 14 | 50 |
| 5 | 15 | 15 | 61 |
| 6 | 17 | 16 | 73 |
| 7 | 21 | 17 | 95 |
| 8 | 23 | 18 | 102 |
| 9 | 26 | 19 | 113 |
| 10 | 30 | 20 | 140 |

In this data set, the first quartile includes observations 1 through 5, the second quartile includes observations 6 through 10, the third quartile includes observations 11 through 15, and the interquartile range extends from 17 to 61.

The Variance

Another group of measures of dispersion is based on the distance of each measurement from the center of the distribution (i.e., the mean or median). The first of these is the variance. To obtain the variance of a set of numbers, follow these steps:

1. Subtract the measure of central tendency (usually the mean) from each observation to obtain the "deviation" of each observation.
2. Square each of these deviations.
3. Add together all of your squared deviations.
4. Subtract 1 from the total number of observations in your sample (n - 1).
5. Divide your "sum of squared deviations" by this number.

Bingo! You've got the variance of your data set. Just as a side note, dividing by "n - 1" gives what is technically known as the sample variance.

The Standard Deviation

With a normal distribution, the mean is the most accurate and descriptive measure of central tendency because it considers the magnitude (or value) of each score. In like manner, the standard deviation considers the magnitude of each score and therefore is the preferred measure of dispersion if the distribution is normal. Although the variance is a useful starting point in describing the variability of a normal data set, the standard deviation is more commonly used (for reasons we will not get into now). Once you have the variance of a set of scores, getting the standard deviation is simple. All you have to do is take the square root of the variance.

Coefficient of Variation

The final measure of dispersion is the coefficient of variation (CV for short).
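
As a rough illustration, the measures described in this part can be computed in a few lines of Python. The sketch below uses the 20 ordered observations from the interquartile range example. The function names `sample_variance` and `describe` are made up for this illustration, and the CV line assumes the usual definition (the standard deviation expressed as a percentage of the mean).

```python
import math
import statistics

# Ordered data set from the interquartile range example (20 observations).
values = [10, 10, 11, 13, 15, 17, 21, 23, 26, 30,
          35, 39, 42, 50, 61, 73, 95, 102, 113, 140]


def sample_variance(data):
    """Sample variance, following the five steps listed in the text."""
    mean = sum(data) / len(data)
    deviations = [x - mean for x in data]        # step 1: deviations from the mean
    squared = [d ** 2 for d in deviations]       # step 2: square each deviation
    sum_of_squares = sum(squared)                # step 3: add them together
    degrees_of_freedom = len(data) - 1           # step 4: n - 1
    return sum_of_squares / degrees_of_freedom   # step 5: divide


def describe(data):
    """Collect the measures of central tendency and dispersion in one dict."""
    mean = sum(data) / len(data)
    variance = sample_variance(data)
    std_dev = math.sqrt(variance)
    return {
        "mean": mean,
        "median": statistics.median(data),
        "range": max(data) - min(data),
        "variance": variance,
        "std_dev": std_dev,
        # Assumed definition: standard deviation as a percentage of the mean.
        "cv_percent": std_dev / mean * 100,
    }


summary = describe(values)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

The hand-written `sample_variance` is only there to mirror the steps; in practice `statistics.variance` and `statistics.stdev` from the standard library give the same n - 1 results.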
The CV is a useful measure of dispersion because it allows us to compare the magnitude of variation between two sets of data when the means (and the ranges) differ significantly. To obtain the CV, simply divide the standard deviation by the mean, and multiply the result by 100.

Part 3 - A Test Of Location

Perhaps one of the most basic questions we ask in science is "Do these two [fill in the blank with your favorite research topic] differ?" In most cases, we begin answering that question by using a statistical test that looks for differences in the location of the measure of central tendency for the two "things". The most commonly used test for differences in central tendency between two samples is the t-test.

The t-test should only be applied to independent samples. This means the selection of a member of one group has no influence on the selection of any member of the second group. If you do not understand what this means, you need to ask me about it, because this concept of independence between or among samples is a very basic assumption in many of the statistics commonly used in the biological sciences.

The t-test incorporates not only a measure of central tendency (the mean) for the samples, but also measures of dispersion (the standard deviation) and the total number of observations in each sample (the sample size). Our ability to detect a true difference between two samples depends not just on the absolute difference between the means, but on the amount of dispersion and the size of our sample. In general, for the same difference between means, as variability decreases, our ability to detect a statistically significant difference increases. Likewise, as sample size increases, our ability to detect a difference increases.

Once our arithmetic manipulations are completed, the result is compared against a table of values (often called a "values of t" table, see the attached sheet). The table has several columns of values or levels of significance.
The researcher must decide how much flexibility can be allowed. This will depend on the degree of accuracy of the measuring instrument. Most scientific research sets the significance level at .05. This means there is only a 5 percent chance of concluding that the means of the two groups differ when they are in fact the same.

The t-test

The formula for the t-test was developed by W. S. Gosset in the early 1900s. As a brewery employee, he developed a system of sampling the brew as a periodic check on quality. He published his work under the name Student, which explains why you hear this formula called the Student t from time to time.

In order to proceed with a t-test, the "standard error of the difference" between the means of the two groups must be determined. Just as the name implies, this is a measure of variability in our estimate of the difference between the two means. To illustrate the t-test, look at the following table. The numbers represent average fish lengths from an urban and a rural stream.

| | Urban Stream | Rural Stream |
|---|---|---|
| Mean Length (mm) | 80 | 84 |
| Standard Deviation | 9.16 | 7.83 |
| Sample Size | 30 | 30 |

At first glance this would seem to suggest fish in the rural stream are larger. But we must ask the question: is the 4 mm difference a chance difference, or does it represent a true difference? If we were to take another sample from each stream and do it again, would we get the same results?

To answer this question, you must first compute the standard error of the difference (sdiff). The following formula is appropriate for independent samples, if the distributions are normal. Once you compute the standard error of the difference, you can determine if a true (statistically significant) difference exists with the aid of the t-test and tables or an appropriate computer program. If we plug in our numbers from the data table, we find that the "Standard Error of the Difference" for our 4 mm length difference is 2.29 mm.
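
A minimal Python sketch of this calculation follows. The formula image did not survive in this copy, so the sketch assumes the common unpooled form, sdiff = sqrt(s1²/n1 + s2²/n2), and takes the t-score as the difference between the two means divided by sdiff. The function names are illustrative only. With the rounded values from the table, this form gives a standard error of about 2.20 mm rather than 2.29 mm, so the original formula or underlying data may differ slightly from what is assumed here.

```python
import math


def standard_error_of_difference(sd1, n1, sd2, n2):
    """Assumed unpooled form: sqrt(sd1^2 / n1 + sd2^2 / n2)."""
    return math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)


def t_score(mean1, mean2, s_diff):
    """Difference between the means, scaled by its standard error."""
    return (mean1 - mean2) / s_diff


# Summary values from the fish length table (lengths in mm).
urban = {"mean": 80.0, "sd": 9.16, "n": 30}
rural = {"mean": 84.0, "sd": 7.83, "n": 30}

s_diff = standard_error_of_difference(urban["sd"], urban["n"],
                                      rural["sd"], rural["n"])
t = t_score(rural["mean"], urban["mean"], s_diff)
df = urban["n"] + rural["n"] - 2  # degrees of freedom: n1 + n2 - 2

print(f"s_diff = {s_diff:.2f} mm, t = {t:.2f}, df = {df}")
```

The calculated t is then compared with the tabled value for the chosen significance level and degrees of freedom.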
The next step in the process is to use our value for sdiff to calculate a t-score. The formula for the t-score is given at the end of this part. As mentioned above, in order to determine if there is a true difference between the means, you need a table of values of t. You also need to know the degrees of freedom used in the problem. Degrees of freedom are determined by adding the number of scores in each group and subtracting 2 (n1 + n2 - 2).

Looking at the attached sheet, the left hand column is labeled df (for degrees of freedom). Look down that column until you find the appropriate df for your problem and read across to the column labeled .05 for two-tailed tests. If the figure you found in the t-test is larger than the .05 figure, there is a statistically significant difference between the means.

For the example above, looking down the column for degrees of freedom, we pick row 50. The degrees of freedom for this problem would be 58 (n1 + n2 - 2), but because the table has no row 58 we drop to row 50, the more conservative alternative. The two-tailed values in this row are .05 = 2.009, .01 = 2.678 and .001 = 3.496. Our t value was 1.746, which is smaller than 2.009, so we can say there is not a significant difference between the means at the .05 (5%) level. It should be noted that this only tells us if there is a significant difference, not the direction of any difference; determining the latter is beyond the scope of this survival manual.

[Formula to Calculate the Standard Error of the Difference]

[Formula to Calculate t-scores]

Part 4 - Correlation

Even though we often think of statistical techniques as a way of telling if two things, groups or sets of data are different, we can also use statistical analysis to ask if two things are related. For example, we might want to know whether the rate of growth in a bacterial colony is related to temperature.
Alternatively, we might be interested in the relationship between the density of two types of plants that use similar types of resources. These and many other questions can be tested using a statistical process called correlation.

There are several different formulas to determine correlation. The most frequently used is the Pearson product moment correlation, named after Karl Pearson. This procedure requires that the numbers be at least interval in nature. It also requires that the data be paired. We can use the bacterial colony question as an example of what is meant by paired data. Each item of the pair must have a common denominator. In this instance, the number of colonies counted and the temperature both come from the same petri dish. The temperature in a given dish is one of the paired numbers and the number of bacterial colonies is the other member of the pair. The pair of scores for dish #1 (see the next table) is 100 (number of colonies) and 42 (temperature).

| Dish Number | Number of Colonies | Temperature |
|---|---|---|
| 1 | 100 | 42 |
| 2 | 70 | 34 |
| 3 | 85 | 34 |
| 4 | 75 | 32 |
| 5 | 65 | 30 |
| 6 | 60 | 29 |

From the data above it can easily be seen that the warmer the dish, the greater the number of colonies. Knowing this may be adequate for your purposes, or you may wish to have a more precise measure of the degree of relationship. You may even be interested in graphically showing this phenomenon.

Scattergram

The graphical procedure to show correlation is called a scattergram. To develop a scattergram, you first place one set of the numbers in a column in descending order and label it X. In our example of temperature and bacterial growth, we can label the number of colonies as the X column. The column of the paired figures (temperature) is listed next to the X column and labeled the Y column. Do not break up the pair. Except in the instance of a perfect positive correlation, the Y column will not be in perfect descending order.
On graph paper, a horizontal scale is marked off for the X column near the bottom of the page. The values increase from left to right. A vertical scale is drawn to intersect one line to the left of the lowest X value. The vertical scale represents the temperature in the Y column. The horizontal scale is the X axis and the vertical scale the Y axis. The length of the scale on the X axis should be about equal to the length of the scale on the Y axis. There is no rule that requires this; however, the procedure helps space the scattergram, which makes it easier to interpret.

Each pair of figures is plotted on the graph. A dot is placed where the X and Y values intersect for each pair of figures. The dots are not connected. Graph the data presented above on a sheet of graph paper. If you have done this correctly, the dots should form nearly a straight line from lower left to upper right. This configuration would be considered a positive correlation, and the more nearly the dots form a straight line, the stronger the relationship between the two variables.

If the dots had formed a pattern from upper left to lower right, the correlation would have been regarded as negative; that is to say, there was indeed a relationship, but the conclusion would be that the variables plotted had an inverse effect on each other. If we had continued to increase the temperature above 50, we might have seen a decline in the number of colonies as we exceeded the temperature tolerance of the bacterial species we were testing. If we had only graphed the response to temperatures above 50, we might have observed a negative relationship. If the dots appeared randomly over the scattergram, you would interpret this as no relationship between the X and Y column measurements.

Computation

As mentioned above, there are several formulas for determining correlation. At this point we shall only be concerned with the raw score method.
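
As a rough sketch of the raw score computation, the Python below works directly from the sums of X, Y, X², Y² and XY. It assumes the standard raw score form of the Pearson coefficient and assumes the dish table reads as colony counts 100, 70, 85, 75, 65, 60 paired with temperatures 42, 34, 34, 32, 30, 29 for dishes 1 through 6. The function name `pearson_r` is illustrative.

```python
import math

# Paired data, one pair per petri dish (assumed reading of the dish table).
colonies = [100, 70, 85, 75, 65, 60]      # X
temperature = [42, 34, 34, 32, 30, 29]    # Y


def pearson_r(x, y):
    """Pearson correlation from raw sums (assumed standard raw score form)."""
    if len(x) != len(y):
        raise ValueError("x and y must contain the same number of paired values")
    n = len(x)
    sum_x, sum_y = sum(x), sum(y)
    sum_x2 = sum(v ** 2 for v in x)
    sum_y2 = sum(v ** 2 for v in y)
    sum_xy = sum(a * b for a, b in zip(x, y))

    numerator = n * sum_xy - sum_x * sum_y
    denominator = math.sqrt((n * sum_x2 - sum_x ** 2) *
                            (n * sum_y2 - sum_y ** 2))
    return numerator / denominator


r = pearson_r(colonies, temperature)
print(f"r = {r:.3f}")
```

Keeping the two lists in dish order is what preserves the pairing; sorting one list without the other would break it.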
The formula is:

$$r = \frac{n\sum XY - \sum X \sum Y}{\sqrt{\left[n\sum X^2 - \left(\sum X\right)^2\right]\left[n\sum Y^2 - \left(\sum Y\right)^2\right]}}$$

A correlation coefficient always falls between +1.0 and -1.0; it cannot be anything else. The closer to +1.0, the more perfect the positive relationship between the two sets of numbers; that is, when one figure goes up, the corresponding paired number also goes up, and in like manner when one of the paired numbers goes down, so will the other. A negative correlation supports the opposite conclusion: when the first number goes up, its pair goes down, or vice versa. The closer to zero, the less likelihood of any relationship.

Part 5 - Contingency Table Analysis

Introduction

The types of statistics you have seen so far allow you to describe measures of central tendency and dispersion (means, medians, ranges, standard deviations, etc.), test for differences among populations (the t-test), or look for relationships between two measures (correlation). One additional group of statistical techniques that is often useful in ecological studies consists of tests that allow us to compare the frequency of observations in various categories between two different sets of observations. Our comparisons may be between a set of real world observations and some expected distribution of observations, or we may want to compare frequency of counts between two sets of real world observations drawn from different areas or populations.

You have already encountered the first type of comparison in your introductory biology courses when you compared observed phenotypes in a population to those that would be expected if the population were in Hardy-Weinberg equilibrium. This general class of tests is known as a "goodness-of-fit" test, since we are attempting to determine if our observations "fit" our expectations. The most common goodness-of-fit test is the chi-square goodness-of-fit test.

The second type of comparison is more common in ecological field studies. In this case, we usually have two variables that we have used to classify our observations.
Each variable has two or more categories. Based on these categories we can set up an n x p table, with n columns (where n = the number of categories for the first variable) and p rows (where p = the number of categories for the second variable). Each observation can then be assigned to one of the cells in our table. The question we hope to answer is whether the value for the column variable has any influence on the values of the row variable. Statistically, we are asking the following question: "Is the value of our second variable independent of the value for our first variable?" This type of test is known as a chi-square contingency table analysis. The null hypothesis for contingency table tests is "The effect of our first variable is not influenced by our second variable." If this seems a little fuzzy, hang on while we run through an example. If it still seems fuzzy after that, come and talk to me about it!

Frequency Distributions

Before we get too far along on the contingency table test, we should take a moment to understand the idea of frequency distributions. Let's start with a simple idea that everybody can grasp, gender. Assume we have a class that has 50 students in it. We can classify each person in the class as male or female. If the class is split evenly according to gender, then the frequency of males in the class is 25 and the frequency of females is 25. Gender is easy because it is what is commonly called a discrete or "nominal scale" variable. There is little doubt how many categories we have.

But what if we wanted to categorize the class not by gender, but by height? Height is a "continuous" variable, meaning that if we had a measuring device that was accurate enough, there would be an infinite number of categories into which we could place the members of our class. What we normally do with continuous data when we want to determine frequencies is establish intervals of equal "width" or magnitude, and assign our observations to those categories.
In fact, we do this as a matter of course every day. For example, we might hear a person claim that they are 175 centimeters tall (or we would if we used the metric system like the rest of the world), but if we were to measure their true height, we would find that they were actually 174.67 cm in height. The "width" of our categories depends both on the number of observations we have and the range of our data.

Contingency Table Analysis

To illustrate the idea of contingency table analysis, let's continue with the same example. We can ask the question, does the gender of an individual have an effect on their height? As a null hypothesis, we could state this question as "The gender of an individual has no effect on their height". Assume that we measure the height and record the gender of all the members of our hypothetical 50 member class. The raw data are given in the table below.

| Height | Gender | Height | Gender | Height | Gender | Height | Gender | Height | Gender |
|---|---|---|---|---|---|---|---|---|---|
| 150 | F | 158 | F | 165 | M | 173 | F | 180 | M |
| 151 | M | 158 | F | 166 | M | 173 | M | 181 | M |
| 152 | M | 159 | F | 167 | F | 174 | F | 182 | M |
| 152 | M | 160 | M | 167 | M | 175 | M | 182 | M |
| 153 | F | 161 | M | 168 | F | 176 | M | 183 | M |
| 154 | F | 161 | M | 169 | F | 176 | F | 184 | F |
| 155 | F | 162 | F | 170 | F | 177 | F | 185 | F |
| 155 | M | 163 | F | 170 | F | 178 | M | 185 | F |
| 156 | M | 164 | F | 171 | F | 179 | M | 186 | M |
| 157 | M | 164 | F | 172 | M | 179 | F | 187 | M |

When setting up a contingency table, we want to have at least 5 observations in 80% of our cells (one of those statistical rules that you should just take as a given), so when we are deciding about the "width" of our intervals, we should keep this in mind. For the data above, an interval width of 10 cm works pretty well. That gives us four classes: individuals less than or equal to 160 cm, individuals from 161-170 cm, from 171-180 cm, and over 180 cm. We can then assign all of our observations to one of the cells of a 2 x 4 contingency table, where the columns represent gender and the rows represent the height interval.

| Height Interval (cm) | Male | Female |
|---|---|---|
| <= 160 | 7 | 7 |
| 161 - 170 | 5 | 9 |
| 171 - 180 | 7 | 6 |
| > 180 | 6 | 3 |

This table represents our observed frequencies. What do we compare it to? We need to create a second table that represents what our data would look like if gender and height were totally independent of one another. To do this, we use our observations to estimate what our cell frequencies would be if this were true. The first step in this process is to get total counts for each row, each column, and the overall table, as illustrated below.

| Height Interval (cm) | Male | Female | Row Total |
|---|---|---|---|
| <= 160 | 7 | 7 | 14 |
| 161 - 170 | 5 | 9 | 14 |
| 171 - 180 | 7 | 6 | 13 |
| > 180 | 6 | 3 | 9 |
| Column Total | 25 | 25 | Grand Total 50 |

The next step is to use the row total, the column total, and the grand total to determine the expected value for each cell in the table. To do this, you multiply the column total by the row total for each cell, then divide that number by the grand total. The table below illustrates the process.

| Height Interval (cm) | Male | Female | Row Total |
|---|---|---|---|
| <= 160 | (14*25)/50 = 7 | (14*25)/50 = 7 | 14 |
| 161 - 170 | (14*25)/50 = 7 | (14*25)/50 = 7 | 14 |
| 171 - 180 | (13*25)/50 = 6.5 | (13*25)/50 = 6.5 | 13 |
| > 180 | (9*25)/50 = 4.5 | (9*25)/50 = 4.5 | 9 |
| Column Total | 25 | 25 | Grand Total 50 |

Finally, now that we have our expected distribution, we can compare that to our observations to determine if there is a difference. To accomplish this, we begin by subtracting our expected values from our observed values for each cell in our table (Step 1). We square that number, then divide by our expected value (Step 2).

| Height Interval (cm) | Step 1: Male | Step 1: Female | Step 2: Male | Step 2: Female |
|---|---|---|---|---|
| <= 160 | 7-7 = 0 | 7-7 = 0 | (0)^2/7 = 0.00 | (0)^2/7 = 0.00 |
| 161 - 170 | 5-7 = -2 | 9-7 = 2 | (-2)^2/7 = 0.57 | (2)^2/7 = 0.57 |
| 171 - 180 | 7-6.5 = 0.5 | 6-6.5 = -0.5 | (0.5)^2/6.5 = 0.04 | (-0.5)^2/6.5 = 0.04 |
| > 180 | 6-4.5 = 1.5 | 3-4.5 = -1.5 | (1.5)^2/4.5 = 0.50 | (-1.5)^2/4.5 = 0.50 |

Once we have the values from Step 2, we sum these values to obtain our chi-square value. In this case, our calculated value is 2.22.
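
The same arithmetic can be sketched in Python as a check on the hand calculation. This is a minimal illustration, not a full statistics routine: the function name `chi_square` is made up here, and the observed counts are typed in from the gender-by-height table.

```python
# Observed counts: one row per height interval, columns are (male, female).
observed = [
    [7, 7],   # <= 160 cm
    [5, 9],   # 161 - 170 cm
    [7, 6],   # 171 - 180 cm
    [6, 3],   # > 180 cm
]


def chi_square(table):
    """Return (chi-square value, degrees of freedom) for a table of counts."""
    row_totals = [sum(row) for row in table]
    column_totals = [sum(column) for column in zip(*table)]
    grand_total = sum(row_totals)

    chi2 = 0.0
    for i, row in enumerate(table):
        for j, count in enumerate(row):
            # Expected count if the row and column variables are independent.
            expected = row_totals[i] * column_totals[j] / grand_total
            chi2 += (count - expected) ** 2 / expected

    degrees_of_freedom = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, degrees_of_freedom


chi2, df = chi_square(observed)
print(f"chi-square = {chi2:.2f}, df = {df}")
```

Because the function takes any rectangular table of counts, it can be reused for other contingency tables by swapping in a different list of rows.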
We then take this value to our handy chi-square table (attached to this handout) to determine if we should reject or fail to reject our null hypothesis. To get your p-value, you need to know (as always) the degrees of freedom you have. In contingency tables, your degrees of freedom are equal to (number of rows - 1) x (number of columns - 1) = (4 - 1) x (2 - 1) = 3. Looking at our table, we find that with 3 degrees of freedom, the chi-square value at the 0.05 level of significance is 7.815. To reject our null hypothesis, our calculated chi-square value must be greater than 7.815. Since our calculated value is less than this, we cannot reject our null hypothesis. Based on these data, we have no evidence that the height of an individual depends on his or her gender. This does not surprise me, since I used a random number generator to assign heights to males and females!

Practice, practice, practice!

To be sure that you can do a contingency table analysis, play with the data set below on your own time. The data represent the color patterns of a species of tiger beetle during different times of the year. Your null hypothesis is that season does not affect color. If you carry out the test correctly, you should obtain a chi-square value near (given rounding errors) 27.7.

| Season | Bright Red | Pale Red |
|---|---|---|
| Early Spring | 29 | 11 |
| Late Spring | 273 | 191 |
| Early Summer | 8 | 31 |
| Late Summer | 64 | 64 |

The information given in this survival manual is drawn from the following three sources:

Ott, L., R. F. Larson, and W. Mendenhall. 1983. Statistics: A tool for the social sciences.
Roys, K. B. 1989. Research and Statistics: An introduction.
Sokal, R. R. and F. J. Rohlf. 1981. Biometry.

Additional references that are useful for sampling and statistical information are listed below.

Cochran, W. G. 1977. Sampling techniques.
Green, R. H. 1979. Sampling design and statistical methods for environmental biologists.
Krebs, C. J. 1989. Ecological Methodology.
Southwood, T. R. E. 1979. Ecological methods.