Some Challenges of Statistical Capacity Building


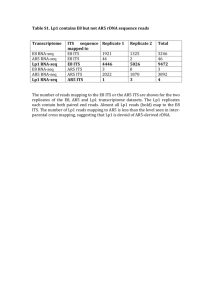

Carol S. Carson (ccarson@IMF.org) with Lucie Laliberté and Sarmad Khawaja
International Monetary Fund, Statistics Department
53rd Session of ISI, Seoul, August 22-23, 2001
IPM 43: Enhancing Statistical Capabilities

1. We may be at a critical juncture as we examine statistical capacity, statistical capacity building, and international efforts to support statistical capacity building. The information society, globalization, demands for transparency, and increased use of quantified expressions of national and global goals have given new prominence to official statistics. The last decade has witnessed the emergence, in a number of countries, of the capacity to produce the statistics needed for a market-oriented economy, and we may now be seeing the emphasis shift to strengthening statistical capacity in countries where alleviating poverty is the overarching policy concern. As the emphasis shifts, there is an opportunity to examine whether international efforts at technical cooperation or assistance in statistics (hereafter TA) have been optimal and what changes might be made if they have not. There is also strong interest in getting more results from TA and other assistance provided by donors to countries attempting to strengthen their statistical capacity, for reasons that may range from general "aid fatigue" to more specific calls by donors for accountability in the use of scarce resources.

2. Whether all these and other factors come together as a single challenge representing a critical conjuncture for statistics could be explored as a contribution to this panel. Each of the developments could probably also be explored on its own as a challenge. However, rather than pursuing either of these topics, I would like to pursue the idea that these and other factors have a common implication.
That implication is that the time has come for us to firm up what we mean by statistical capacity and then identify how we might track changes in statistical capacity. I will present some ideas on how this might be done, as a springboard to encourage comments and suggestions. These ideas draw on the IMF's experience in statistics as embodied in the Data Quality Assessment Framework. In addition to discussion here, I expect that the Paris21 Consortium's Task Team on Indicators of Statistical Capacity Building will be a forum where this discussion will be carried forward.

3. Section A sets out why I think the time has come for us to take up these topics. The Data Quality Assessment Framework (DQAF) and IMF experience are briefly described in Section B. Section C suggests a way forward toward a methodology for tracking statistical capacity, and Section D asks “Where do we go from here?”

A. The Time Has Come

4. Many of the interrelated factors listed above push us to firm up what we mean by statistical capacity and to identify whether, and how much, progress is being made in developing it.

5. We noted the new prominence of official statistics. How do we respond to the calls for more and better statistics? Can we just say that resources are tight and we cannot do more? We may need to show that priorities must be established and sequenced across a range of statistics, and/or that progress is being made even if it is slower than some might expect. We noted that more attention is turning to strengthening statistical capacity in areas where poverty alleviation is the overarching concern. But the record of past TA may not be heartening. The recipient countries are as disillusioned as the donors, if not more so. Donors, however, are better positioned to ask for comprehensive planning of statistical capacity building and for documentation of results. How do we respond?
With the danger that resources may be curtailed, the need for an appropriate response is fast emerging.

6. To date, we have not been adequately equipped to address statistical capacity building in its full complexity. Our approach, to varying degrees, has been mainly intuitive, spontaneous, and ad hoc. We, the international community, tend to focus our efforts on our own area of expertise and, to some degree, without knowledge of aid provided by other agencies. This paper suggests a methodology that, I believe, could help us change this situation.

B. The DQAF and IMF Experience

7. In a nutshell, the DQAF identifies the most important characteristics of the statistical system and organizes them into a framework that can be used around the world. It encompasses the organizational aspects of a statistical unit, the policies and processes of a statistical unit, and the characteristics of the statistical output. It is organized in a cascading structure that progresses from the general level to the specific. The first level defines the institutional preconditions required for quality and five dimensions of data quality: integrity, methodological soundness, accuracy and reliability, serviceability, and accessibility. Each component of this level is in turn characterized by elements, which are further elaborated by indicators. This main framework, which is referred to as the generic framework (presented in Annex 1), constitutes the common basis from which the more concrete and detailed frameworks specific to datasets are elaborated (e.g., national accounts, balance of payments).

8. We, in the IMF Statistics Department, have used the DQAF in three ways: to assess data quality, as a compass for TA, and as a device to monitor such assistance.
The DQAF is a framework that permits the full capture of a statistical system.¹

¹ For further information, see the following articles on the Fund’s Data Quality Reference Site (http://dsbb.imf.org/dqrsindex.htm): "Toward a Framework for Assessing Data Quality" (October 2000) and "Further Steps Toward a Framework for Assessing Data Quality" (May 2001).

9. The Data Module of the so-called Reports on the Observance of Standards and Codes (ROSCs)² appraises current data dissemination practices against the Special Data Dissemination Standard (SDDS) or General Data Dissemination System (GDDS) and assesses data quality. Data quality is assessed by applying the DQAF. The summary assessment of quality provides a snapshot of the current status of the statistics using a 4-point scale describing the observance of the good practices identified in the DQAF. IMF staff also enter into an exchange with the authorities about how to effect the desired improvements. The DQAF is helpful in identifying precisely the areas for improvement.

² ROSCs are reports that are produced for assessing the adherence of countries to standards and codes. They are part of an international effort, undertaken in 1999, to strengthen the architecture of the international financial system. For statistics, the relevant standards are the SDDS and GDDS, depending on the country circumstances.

10. IMF technical assistance missions have increasingly used the DQAF to structure both their evaluation of the situation and their recommendations. As an evenhanded tool of assessment, the DQAF is applied easily across the very diverse range of countries that make up the IMF’s membership. Also, the DQAF is applied flexibly, with the objective of pointing to areas that may need attention so that an action plan, and the resources to carry it out, can be identified.

11. We are exploring the use of the DQAF as a tool for planning and monitoring the department's TA activities. TA is organized as projects with clearly identified objectives, with each project involving one or more missions. The department currently uses a “logical framework” (matrix approach), where current activities of the recipient country are defined and, based on certain assumptions, clearly linked to expected outcomes with a timetable. At any stage during the project, one can track what has been achieved against the expected outcomes, including the reasons for the results, and the need, if any, to review the basic assumptions. Consideration is being given to using the DQAF as the main structure of the system with a view to harmonizing its various functions of describing, planning, and monitoring. The main features of what would constitute the new system are presented in the next section.

C. Toward a Methodology for Tracking Statistical Capacity

12. The IMF experience suggests that the DQAF, or an adaptation of it, could be effectively used as the underlying methodology for tracking statistical capacity. The strength of the DQAF for this purpose lies in its comprehensiveness: it addresses all aspects of a dataset, covering for that specific dataset the statistical unit, the processes, and the output. It is applicable across a range of datasets. It is adaptable to different country situations. By bringing together internationally accepted standards and codes of good practices so that a country’s current practices can be compared with them, it helps to highlight the vulnerabilities of the system and facilitates the identification of the TA interventions required to strengthen it. It gives guidance on sequencing the tasks required by taking into account the actual circumstances of the system and the interdependencies of the statistical operations.

13.
The application of the DQAF to tracking statistical capacity involves two broad steps: selecting statistics that can be taken as representative of the statistical system, and setting up tools to perform three functions. These functions are (i) providing a snapshot reading of the current status of the statistics (describing); (ii) providing a framework for planning statistical development (planning); and (iii) providing a framework for monitoring statistical capacity building and evaluation (monitoring).

Selection of datasets

14. It is widely recognized that users’ statistical needs evolve over time, reflecting the changing reality under measurement as well as newly recognized concerns. The statistics to be selected should be from among those that help in developing, conducting, and monitoring public policy. However, selection needs to be brought to workable proportions through the use of criteria that would be adapted to countries’ circumstances. For developing countries, the series contained in the GDDS constitute a good starting point since they were identified as relevant for economic analysis and the monitoring of social and demographic progress. Among these statistics, the focus could be, for example, on those for which methodological manuals exist in the field. Alternatively, the focus could be on those used to track global goals. A further selection could be to concentrate on statistics, such as national accounts and population, that are recognized as providing a comprehensive reading of certain aspects of society. The advantage of comprehensive statistics is that they provide a broad framework to harmonize the definitions and classification of other statistics on which they are based, and constitute the denominators for a number of important economic and social indicators.

Functions of the framework

15.
Once the datasets have been selected as representative of the statistical system, the DQAF can be applied to perform the three functions of describing, planning, and monitoring. The description provides a snapshot reading of the current status of the statistics and, as such, constitutes a measure of statistical capacity for these datasets. The DQAF can also be used as a mechanism for planning statistical development; a common platform for planning will be especially useful when some of the milestones are to be reached with the assistance of various external donors. Finally, the use of a common format for describing and planning should greatly facilitate the monitoring of statistical capacity building and the evaluation of TA.

16. The combination and interrelationship of these three functions are presented in summary form in the Statistical Capacity Matrix (Matrix) shown in Table 1. While the Matrix can be applied to any dataset, it is applied here, for illustrative purposes, to national accounts. The first column lists areas of activity that have a role in statistical production. The subsequent columns deal with the three functions of describing, planning, and monitoring, as described below.

Snapshot reading of current status of the statistics

17. The observations concerning each of the activities listed in column 1 are recorded in summary form in column 2. The current conditions are described by comparing them to internationally accepted standards, guidelines, or practices. The Matrix, to illustrate, uses a 4-level scale: practice not observed (NO), largely not observed (LNO), largely observed (LO), and observed (O). Provision is also made for work in progress (U) and for non-applicability or non-availability of the information (NA). Such a description can be carried out by a technical assistance mission, by the country authorities, or by independent assessors.

Planning statistical development

18.
With this reading of the current situation on national accounts, it is much easier to identify the measures required to improve the situation. It also suggests some form of sequencing (prioritization) in applying these measures. For instance, there would be little point in trying to improve the accessibility of statistics until the situation improves with regard to the availability of resources to process the statistics. The third and fourth columns provide a synopsis of milestones along with target dates to reach these milestones. Underpinning these milestones would be an action plan (e.g., medium term) that could be developed with the authorities when external assistance is involved.

Monitoring statistical capacity building and evaluation

19. Because the plans were developed with the same framework as that used for describing the statistical system, it is much easier to assess the outcomes achieved; a second reading of the situation effectively constitutes monitoring of how the plans proceeded. For the example used here, a new reading of the situation shows that by 1999 there were some improvements, although they were not numerous or, on the face of it, striking (column 5). The reading shows marked improvement in two areas, serviceability and methodological soundness, where the country was by then “largely observing” good practices. Some improvements were noted in some aspects of accuracy and reliability. Where targets were set, they were met. However, there was also some deterioration in one aspect of accuracy and reliability. A snapshot reading in 2002 (column 6) will show to what extent the country is still lagging not only in the areas where technical assistance was provided, but also elsewhere in its statistical system.

Table 1.
Statistical Capacity Matrix

Country: XXX
Statistics: National Accounts

Columns: Activity (1) | Status as of Dec 1998 (2) | Milestone by Dec 1999 (3) | Milestone by Aug 2002 (4) | Achievement as of Dec 1999 (5) | Achievement as of Aug 2002 (6)

Prerequisites of quality
- Environment is supportive of statistics
- Resources are commensurate with statistical program
- Quality awareness is cornerstone of work

Integrity
- Policies are guided by professional principles
- Policies and practices are transparent
- Practices are guided by ethical standards

Methodological soundness
- Concepts and definitions follow international frameworks
- Scope is in accord with international standards
- Classification systems are in accord with international standards
- Flows/stocks are valued according to international standards

Accuracy and reliability
- Source data are adequate
- Statistical techniques conform with sound procedures
- Source data are assessed and validated
- Intermediate results are assessed and validated
- Revisions are tracked

NO LO O NO NO LO O NO

O O O O O O O O NO U LO NO NO U O NO NO LO O LO NO LNO LO NO NO LNO LO NO NO LNO LO NO NO LNO LO LNO NO LNO LO LNO NO LO O LO NO LO O LO NO LNO LO NO NO LNO LO NO LO O

Serviceability
- Statistics cover relevant information on the subject
- Timeliness, periodicity follow dissemination standards
- Statistics are consistent
- Data revisions follow regular, publicized procedures

Accessibility
- Presentation is clear and data are available
- Up-to-date pertinent metadata are available
- Prompt, knowledgeable support is available

O O O O O O

Note: Assessment uses the following scale: O – Practice observed; LO – Practice largely observed; LNO – Practice largely not observed; NO – Practice not observed; U – Work in progress; NA – Information not available.

D. Where Do We Go From Here?

20. The experience of using the DQAF as the methodological basis for my department’s work has been very positive. While the Matrix set out here is a starting point, a number of issues and concerns will need to be addressed.
Two are obvious:

- Identification of statistics to serve as representative of the statistical system: How should the scope for statistical capacity measurement be defined? What is the critical mass of economic (both macro and micro) and socio-demographic statistics that reflects at least the core statistical capacity of a country?
- Adaptation of the DQAF: Should the DQAF as it now stands be compressed but still maintain the full spectrum of characteristics of a system? Or should it, for the purposes of statistical capacity building, focus more on some characteristics, such as institutional setting?

As I noted at the outset, my purpose in sharing our experience with the DQAF is to encourage discussion of the use of the DQAF, or some adaptation of it, to provide a systematic way to describe, strengthen, and monitor statistical capacity building. I hope I have provided some ideas to serve as a springboard to encourage discussion and comment. The challenges are out there; let’s work together to meet them.

ANNEX 1

Data Quality Assessment Framework: Generic Framework (Draft as of July 2001)

Each quality dimension is followed by its elements (numbered x.y) and their indicators (numbered x.y.z).

Prerequisites of quality¹

0.1 Legal and institutional environment – The environment is supportive of statistics.
- 0.1.1 The responsibility for collecting, processing, and disseminating statistics is clearly specified.
- 0.1.2 Data sharing and coordination among data producing agencies are adequate.
- 0.1.3 Respondents' data are to be kept confidential and used for statistical purposes only.
- 0.1.4 Statistical reporting is ensured through legal mandate and/or measures to encourage response.

0.2 Resources – Resources are commensurate with needs of statistical programs.
- 0.2.1 Staff, financial, and computing resources are commensurate with statistical programs of the agency.
- 0.2.2 Measures to ensure efficient use of resources are implemented.

0.3 Quality awareness – Quality is a cornerstone of statistical work.
- 0.3.1 Processes are in place to focus on quality.
- 0.3.2 Processes are in place to monitor the quality of the collection, processing, and dissemination of statistics.
- 0.3.3 Processes are in place to deal with quality considerations, including tradeoffs within quality, and to guide planning for existing and emerging needs.

1. Integrity: The principle of objectivity in the collection, processing, and dissemination of statistics is firmly adhered to.

1.1 Professionalism – Statistical policies and practices are guided by professional principles.
- 1.1.1 Statistics are compiled on an impartial basis.
- 1.1.2 Choices of sources and statistical techniques are informed solely by statistical considerations.
- 1.1.3 The appropriate statistical entity is entitled to comment on erroneous interpretation and misuse of statistics.

1.2 Transparency – Statistical policies and practices are transparent.
- 1.2.1 The terms and conditions under which statistics are collected, processed, and disseminated are available to the public.
- 1.2.2 Internal governmental access to statistics prior to their release is publicly identified.
- 1.2.3 Products of statistical agencies/units are clearly identified as such.
- 1.2.4 Advance notice is given of major changes in methodology, source data, and statistical techniques.

1.3 Ethical standards – Policies and practices are guided by ethical standards.
- 1.3.1 Guidelines for staff behavior are in place and are well known to the staff.

2. Methodological soundness: The methodological basis for the statistics follows internationally accepted standards, guidelines, or good practices.

2.1 Concepts and definitions – Concepts and definitions used are in accord with internationally accepted statistical frameworks.
- 2.1.1 The overall structure in terms of concepts and definitions follows internationally accepted standards, guidelines, or good practices: see dataset-specific framework.

2.2 Scope – The scope is in accord with internationally accepted standards, guidelines, or good practices.
- 2.2.1 The scope is broadly consistent with internationally accepted standards, guidelines, or good practices: see dataset-specific framework.

2.3 Classification/sectorization – Classification and sectorization systems are in accord with internationally accepted standards, guidelines, or good practices.
- 2.3.1 Classification/sectorization systems used are broadly consistent with internationally accepted standards, guidelines, or good practices: see dataset-specific framework.

2.4 Basis for recording – Flows and stocks are valued and recorded according to internationally accepted standards, guidelines, or good practices.
- 2.4.1 Market prices are used to value flows and stocks.
- 2.4.2 Recording is done on an accrual basis.
- 2.4.3 Grossing/netting procedures are broadly consistent with internationally accepted standards, guidelines, or good practices.

3. Accuracy and reliability: Source data and statistical techniques are sound and statistical outputs sufficiently portray reality.

3.1 Source data – Source data available provide an adequate basis to compile statistics.
- 3.1.1 Source data are collected from comprehensive data collection programs that take into account country-specific conditions.
- 3.1.2 Source data reasonably approximate the definitions, scope, classifications, valuation, and time of recording required.
- 3.1.3 Source data are timely.

3.2 Statistical techniques – Statistical techniques employed conform with sound statistical procedures.
- 3.2.1 Data compilation employs sound statistical techniques.
- 3.2.2 Other statistical procedures (e.g., data adjustments and transformations, and statistical analysis) employ sound statistical techniques.

3.3 Assessment and validation of source data – Source data are regularly assessed and validated.
- 3.3.1 Source data, including censuses, sample surveys and administrative records, are routinely assessed, e.g., for coverage, sample error, response error, and non-sampling error; the results of the assessments are monitored and made available to guide planning.

3.4 Assessment and validation of intermediate data and statistical outputs – Intermediate results and statistical outputs are regularly assessed and validated.
- 3.4.1 Main intermediate data are validated against other information where applicable.
- 3.4.2 Statistical discrepancies in intermediate data are assessed and investigated.
- 3.4.3 Statistical discrepancies and other potential indicators of problems in statistical outputs are investigated.

3.5 Revision studies – Revisions, as a gauge of reliability, are tracked and mined for the information they may provide.
- 3.5.1 Studies and analyses of revisions are carried out routinely and used to inform statistical processes.

4. Serviceability: Statistics are relevant, timely, consistent, and follow a predictable revisions policy.

4.1 Relevance – Statistics cover relevant information on the subject field.
- 4.1.1 The relevance and practical utility of existing statistics in meeting users’ needs are monitored.

4.2 Timeliness and periodicity – Timeliness and periodicity follow internationally accepted dissemination standards.
- 4.2.1 Timeliness follows dissemination standards.
- 4.2.2 Periodicity follows dissemination standards.

4.3 Consistency – Statistics are consistent within the dataset, over time, and with other major datasets.
- 4.3.1 Statistics are consistent within the dataset (e.g., accounting identities observed).
- 4.3.2 Statistics are consistent or reconcilable over a reasonable period of time.
- 4.3.3 Statistics are consistent or reconcilable with those obtained through other data sources and/or statistical frameworks.

4.4 Revision policy and practice – Data revisions follow a regular and publicized procedure.
- 4.4.1 Revisions follow a regular, well-established and transparent schedule.
- 4.4.2 Preliminary data are clearly identified.
- 4.4.3 Studies and analyses of revisions are made public.

5. Accessibility: Data and metadata are easily available and assistance to users is adequate.

5.1 Data accessibility – Statistics are presented in a clear and understandable manner, forms of dissemination are adequate, and statistics are made available on an impartial basis.
- 5.1.1 Statistics are presented in a way that facilitates proper interpretation and meaningful comparisons (layout and clarity of text, tables, and charts).
- 5.1.2 Dissemination media and formats are adequate.
- 5.1.3 Statistics are released on the pre-announced schedule.
- 5.1.4 Statistics are made available to all users at the same time.
- 5.1.5 Non-published (but non-confidential) subaggregates are made available upon request.

5.2 Metadata accessibility – Up-to-date and pertinent metadata are made available.
- 5.2.1 Documentation on concepts, scope, classifications, basis of recording, data sources, and statistical techniques is available, and differences from internationally accepted standards, guidelines or good practices are annotated.
- 5.2.2 Levels of detail are adapted to the needs of the intended audience.

5.3 Assistance to users – Prompt and knowledgeable support service is available.
- 5.3.1 Contact person for each subject field is publicized.
- 5.3.2 Catalogues of publications, documents, and other services, including information on any charges, are widely available.

¹ The elements and indicators included here bring together the “pointers to quality” that are applicable across the five identified dimensions of data quality.
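
The describing, planning, and monitoring functions set out in Section C can be illustrated with a small data structure. What follows is only a minimal sketch under stated assumptions, not a tool used by the IMF: the names `Rating`, `MatrixRow`, and `monitor` are hypothetical, the numeric ordering of the scale is an assumption drawn from the wording of the Table 1 note, and the ratings in the example rows are invented for illustration rather than taken from Table 1.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class Rating(IntEnum):
    """Four-level scale from the note to Table 1 (numeric order is an assumption)."""
    NO = 0   # practice not observed
    LNO = 1  # practice largely not observed
    LO = 2   # practice largely observed
    O = 3    # practice observed


@dataclass
class MatrixRow:
    """One activity row of the Matrix: first reading, target, second reading."""
    dimension: str
    activity: str
    status: Optional[Rating]       # describing; None stands in for U or NA
    milestone: Optional[Rating]    # planning; None means no target was set
    achievement: Optional[Rating]  # monitoring; None stands in for U or NA


def monitor(row: MatrixRow) -> str:
    """Compare the second reading with the first reading and with the target."""
    if row.status is None or row.achievement is None:
        return "not rated"
    if row.achievement < row.status:
        return "deteriorated"
    if row.milestone is not None:
        return "target met" if row.achievement >= row.milestone else "target missed"
    return "improved" if row.achievement > row.status else "unchanged"


# Hypothetical ratings, for illustration only; they are not taken from Table 1.
rows = [
    MatrixRow("Serviceability", "Statistics are consistent",
              Rating.NO, Rating.LO, Rating.LO),
    MatrixRow("Accuracy and reliability", "Revisions are tracked",
              Rating.LNO, None, Rating.NO),
    MatrixRow("Integrity", "Policies and practices are transparent",
              Rating.NO, None, Rating.NO),
]

for row in rows:
    print(f"{row.dimension}: {row.activity} -> {monitor(row)}")
```

Because the same row structure carries the status, the milestone, and the later achievement, a second reading can be compared directly with the first, which is the point made in paragraph 19 about using one framework for describing, planning, and monitoring.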