Crises and Attempted Resolutions of Paradoxes in Foundations of Mathematics

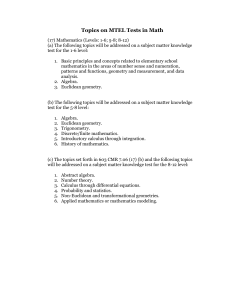


By Matt Insall

I was recently asked on an email discussion group (http://groups.yahoo.com/group/4DWorldx/) to elaborate on how mathematics was changed between the 19th and 20th centuries to reestablish consistency. Below is my response: an abbreviated account, for lay readers, of my view of the status of the problem of consistency in mathematics.

For many centuries, mathematics developed in fits and starts. Applications drove the early discoveries, and the Greek philosophers then began to make mathematics more an art, or a philosophical study, than an applied science. They studied infinity. They studied what it means to be a number. They studied ideal forms, such as the Platonic solids. They studied geometry as a pure science, in a more philosophical light than the applied versions that had existed before. This did not end or replace the applications. It only enhanced the ability of mathematicians, both pure and applied, to explain their subject to students, who would then go on to apply mathematics or to teach it to others themselves.

A standard tool of these mathematicians (developed not only in the hands of the Greeks) was argument by reductio ad absurdum: to prove a statement, assume its negation and derive a contradiction. With this tool mathematics became more successful, both as a philosophical pursuit and as an applied science. Other scientists relied on the results of mathematics to support their arguments about the workings of the world, in both a pure and an applied sense. The idea of a consistent system of axioms, for geometry in particular and for mathematics in general, was emerging. This confidence in the systems used and studied in mathematics was to last several centuries. It was bolstered by the success of their applications and by the popularity of mathematics as a pure science (or art, or philosophical pursuit) among the learned of various societies.
The highest form of mathematics was seen to be geometry, namely what we now call Euclidean geometry. Then a crisis occurred in geometry. Until Bolyai, Lobachevsky and Gauss came on the scene, it was believed that geometry had to be of this type. In particular, one postulate of Euclidean geometry had never seemed as self-evident as the others: Euclid's fifth postulate, sometimes called the parallel postulate. In the form usually quoted today, it states that for any line L and any point p not on L, there is a unique line K through p that is parallel to L. This seemed to be true in the physical world, but it had never been proved from the other postulates. Many attempts had been made to prove it, and all were unsuccessful. Gauss, Lobachevsky and Bolyai showed that the fifth postulate was not provable from the other postulates (or axioms) of Euclidean geometry. In doing so, they demonstrated a way to do proofs that we now call ``independence proofs''. In modern parlance, they showed that the parallel postulate is independent of the other axioms of Euclidean geometry. (Similar methods may have been employed previously. I will comment on this below.)

Their approach was to ``construct'' a model of the other axioms that fails to satisfy the parallel postulate. In a sense, there was no need to ``construct'' such a structure, for a closely related one had been under their noses all along. On the surface of a ball, taking great circles as the ``lines'', any two lines meet, so there are no parallel lines at all and the parallel postulate fails. (Strictly speaking, the sphere also violates some of Euclid's other axioms; for example, two opposite points lie on infinitely many great circles. The geometry of Bolyai and Lobachevsky, in which there are many parallels to L through p, satisfies all the other axioms exactly.) It had been believed that any geometric structure satisfying the other axioms of Euclidean geometry must also satisfy the parallel postulate, but this was now seen not to be the case. But at least the axioms of Euclid, including the parallel postulate, were consistent, as was evident from the existence of at least one model: the universe.
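The claim that the sphere has no parallel lines can be checked with a little vector arithmetic. In the minimal sketch below (an illustration assumed for this note, not part of the historical argument; the function names are hypothetical), a great circle is described by a normal vector of the plane through the center of the sphere that cuts it out. A point common to two great circles must be perpendicular to both normals, so it lies along their cross product, and two distinct great circles always meet in a pair of opposite points.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float, float]


def dot(u: Vec, v: Vec) -> float:
    return sum(a * b for a, b in zip(u, v))


def cross(u: Vec, v: Vec) -> Vec:
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def great_circle_intersections(n1: Vec, n2: Vec) -> Optional[Tuple[Vec, Vec]]:
    """Intersect the great circles cut out of the unit sphere by the planes
    through the origin with normals n1 and n2.

    A common point is perpendicular to both normals, so it lies along
    n1 x n2. Returns the two antipodal intersection points, or None when the
    normals are parallel (the two "lines" are the same great circle)."""
    d = cross(n1, n2)
    length = math.sqrt(dot(d, d))
    if length < 1e-12:
        return None
    p = tuple(x / length for x in d)
    return p, tuple(-x for x in p)


# The equator (normal along z) and a meridian (normal along x) meet at (0, +/-1, 0).
print(great_circle_intersections((0, 0, 1), (1, 0, 0)))
```

The only case in which the function finds no intersection is when the two normals are parallel, and then the two "lines" are the same circle, not parallel ones.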
In the early twentieth century, Einstein was to rely on the work of Bolyai, Lobachevsky and Gauss, among others, to deny us even this model of Euclidean geometry, in developing his general theory of relativity, designed to account for a new idea of the workings of gravitational force. (Now the universe is thought of as locally Euclidean, but not Euclidean in the global sense.)

In the late nineteenth century, Cantor was studying convergent and divergent trigonometric series, which are a mainstay of the branch of mathematics known as harmonic analysis. Harmonic analysis studies, among other things, how to represent initial-boundary conditions for partial differential equations by trigonometric series, so that one has a procedure for solving the given equations. This was an area of what is now known as ``hard analysis'', in contrast to ``soft analysis''. (I will not go into the distinction in detail, in part because I am not an analyst of any sort, though I have done some analysis in the ``hard'' arena.) A special type of set of real numbers was of interest; such sets are now referred to as ``thin sets''. In particular, Cantor was studying sets of uniqueness for trigonometric series. He was trying to describe their structure in terms that other mathematicians would understand and that could be used to explain why they are sets of uniqueness. (A set of uniqueness E is a set of real numbers such that if a trigonometric series converges to zero at all points outside E, then the coefficients of the series must all be zero.) Some of the easiest sets to recognize as sets of uniqueness are the countable ones, and Cantor had shown this for a large class of countable sets. He began to realize that a well-developed theory of sets was needed to explain the structure of these sets of uniqueness. Thus he devised what is now referred to as Cantorian Set Theory, or Naive Set Theory.
In it he found the need to describe a new kind of number, which he called transfinite numbers, for they were larger than any finite number. This theory began to be seen as a foundation for mathematics in general, and not just for the theory of sets of uniqueness on the real line. In particular, Frege began a program to axiomatize the new ``theory of sets'', and things were going well, including a move by Dedekind to found the theory of real numbers on sets of rational numbers, which had in turn been founded on sets of integers. (Dedekind described the reals as ``cuts'' [now called ``Dedekind cuts''] of rational numbers.) Thus all of mathematics outside geometry was being reduced to the study of the theory of sets.

The beautiful landscape was shattered when Bertrand Russell found a contradiction in Frege's axiom system. The theory of sets was inconsistent. This was a new crisis. It had already been realized that when a theory is inconsistent, the problem can be remedied by removing one or more of its foundational assumptions. The way in which Russell obtained his contradiction, now called ``Russell's Paradox'', led mathematicians to believe that the problem lay in Frege's axiom of unrestricted comprehension. The comprehension axiom at that time said, roughly, that for any property P there is a set whose members are all and only the sets that satisfy P. Russell obtained his contradiction by considering the property ``is not a member of itself''. If R were the set of all sets that are not members of themselves, then R would be a member of R exactly when it is not, which is absurd. In fact, then, he showed that the set of all sets which are not members of themselves cannot exist. Revisions of Frege's set theory then found ways to restrict the axiom of comprehension so that sets such as this could be proven not to exist and were not in any obvious way postulated by the axioms. One such axiom system that has survived, and is now considered by many to be the most useful foundation for mathematics, is Zermelo-Fraenkel Set Theory, or ZF for short.
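Russell's argument does not depend on what sets "really are"; it works for any membership relation whatsoever. As a toy illustration (a finite setting chosen only for this sketch, not Russell's own formulation; all names are hypothetical), the Python below enumerates every possible membership relation on a small universe and checks whether any element r has as its members exactly those x that are not members of themselves.

```python
from itertools import product
from typing import Callable

Membership = Callable[[int, int], bool]


def has_russell_set(n: int, member: Membership) -> bool:
    """True if some r in {0, ..., n-1} has as members exactly the x that are
    not members of themselves, i.e. member(x, r) == (not member(x, x))."""
    return any(
        all(member(x, r) == (not member(x, x)) for x in range(n))
        for r in range(n)
    )


def count_relations_with_russell_set(n: int) -> int:
    """Enumerate all 2**(n*n) membership relations on an n-element universe
    and count those in which a Russell set exists."""
    pairs = [(x, y) for x in range(n) for y in range(n)]
    count = 0
    for bits in product([False, True], repeat=len(pairs)):
        table = dict(zip(pairs, bits))
        if has_russell_set(n, lambda x, y: table[(x, y)]):
            count += 1
    return count


# Every candidate r fails the test at x = r, which would require
# member(r, r) == not member(r, r); so the count is 0 for every n.
print(count_relations_with_russell_set(3))
```

Brute force is used here only because it makes the point without any cleverness: the search over all 512 relations on three elements finds nothing, and the comment explains why no larger universe could do better.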
At the opening of the twentieth century, in 1900, there was a large International Congress of Mathematicians in Paris. At that congress, a well-known mathematician of the time, David Hilbert, proposed twenty-three problems that he considered top priorities for mathematicians in the coming decades. The second of these asked for a proof that the axioms of arithmetic are consistent; in the 1920s this grew into Hilbert's program of proving by finitary means the consistency of the axiom systems underlying mathematics and, by extension, of systems such as ZF. By ``finitary means'', it was meant that the proof should be carried out in a way that can be checked by a (hypothetical) machine, or, what was believed equivalent, by humans working in an entirely mechanical manner. Thus an area of mathematics grew up, called mathematical logic. In various incarnations it takes other names, such as ``computer science'' or ``recursive function theory'', though these may also be taken as parts of mathematical logic, along with the area now known as ``model theory''.

In the 1930s, Goedel answered this problem of Hilbert negatively, in the following sense. It was believed that the idea of ``provable by finitary means'' should be modeled mathematically by recursive functions. Goedel showed the following about the first-order theory PA of Peano Arithmetic:

1. In PA one can interpret the concepts of provability (by finitary means), consistency, and so on; in particular, one can express in PA the statement Con(PA) that PA is consistent.
2. If PA is consistent, then the statement Con(PA) is not provable from the axioms of PA.

He then showed that these results generalize to many other first-order axiom systems, including ZF. Thus, roughly, ZF can express its own consistency problem, but, if ZF is consistent, ZF cannot prove its own consistency.
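The idea of a proof that "can be checked by a machine" can be made concrete with a toy example. The sketch below is not Hilbert's or Goedel's formalism: it is a small propositional system assumed purely for illustration, with hypothetical function names. Formulas are nested tuples, and a proof is accepted only if every line is an instance of one of two axiom schemes (commonly called K and S) or follows from earlier lines by modus ponens. The checker needs no insight; it only compares shapes.

```python
# Formulas: an atom is a string; an implication A -> B is ("->", A, B).
def imp(a, b):
    return ("->", a, b)


def is_imp(f):
    return isinstance(f, tuple) and len(f) == 3 and f[0] == "->"


def is_axiom_k(f):
    # Scheme K: A -> (B -> A)
    return is_imp(f) and is_imp(f[2]) and f[2][2] == f[1]


def is_axiom_s(f):
    # Scheme S: (A -> (B -> C)) -> ((A -> B) -> (A -> C))
    if not (is_imp(f) and is_imp(f[1]) and is_imp(f[1][2]) and is_imp(f[2])):
        return False
    a, b, c = f[1][1], f[1][2][1], f[1][2][2]
    return f[2] == imp(imp(a, b), imp(a, c))


def check_proof(lines):
    """Accept a proof only if each line is an axiom instance or follows by
    modus ponens (from P and P -> Q infer Q) from two earlier lines."""
    proved = []
    for f in lines:
        by_mp = any(imp(p, f) in proved for p in proved)
        if not (is_axiom_k(f) or is_axiom_s(f) or by_mp):
            return False
        proved.append(f)
    return True


# A standard five-line derivation of A -> A.
A = "A"
AA = imp(A, A)
proof_of_a_implies_a = [
    imp(imp(A, imp(AA, A)), imp(imp(A, AA), AA)),  # S instance
    imp(A, imp(AA, A)),                            # K instance
    imp(imp(A, AA), AA),                           # modus ponens on lines 2, 1
    imp(A, AA),                                    # K instance
    AA,                                            # modus ponens on lines 4, 3
]
print(check_proof(proof_of_a_implies_a))  # True
print(check_proof([AA]))                  # False: A -> A asserted without proof
```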
The reading of Goedel's theorems as a negative answer to Hilbert's problem makes essential use of the assumption mentioned above, now known as Church's Thesis: ``Every mechanical procedure corresponds to a recursive function.'' There are ways to state Goedel's results that do not use Church's Thesis; these are purely mathematical results showing the ``recursive unsolvability'' of the consistency problem in mathematics.

Now, many people in mathematics take ZF to be consistent, but many also point out, rightly, that their work does not use all the axioms of ZF, and they contend that the axioms needed for their work are consistent, and provably so. This is being checked, albeit slowly. Many do not see the need to check it, but there is an area of foundational studies called reverse mathematics, developed by Harvey Friedman and Stephen Simpson, among others, which determines, for given mathematical results in the literature, exactly which set-theoretic axioms are required to prove them. I do not know a lot about this area of mathematical logic, but I would enjoy learning more.

There is another direction being considered that is closer to the original view: the study of a particular set theory obtained by restricting the axioms of ZF. This set theory is called Kripke-Platek Set Theory, or KP for short. I do not know a lot about it, but it appears to me to be an attempt to provide a very broad foundation for all of mathematics which is provably consistent. (I am not entirely certain of this, as I am only recently becoming acquainted with notions in the related area of admissible sets.) Proponents of KP set theory see the culprits as not only comprehension, but also the power set axiom and the axiom of choice. (The axiom of choice is not part of ZF. It is part of the modern set theory known as ZFC, Zermelo-Fraenkel Set Theory with the Axiom of Choice.) In any case, theorems of the following form are provable:

1. If ZF is consistent, then PA is consistent. (The converse is not provable in ZF unless ZF is inconsistent: ZF proves Con(PA), so it would then prove Con(ZF), contrary to Goedel's second theorem.)
2. If ZF is consistent, then ZFC is consistent, and vice versa.
3. If PA is consistent, then KP (as formulated without the axiom of infinity) is consistent.

(And I think 3 can be strengthened.)

Why is it important to try to get a consistent foundation for mathematics? Well, we still want to use the old tool: reductio ad absurdum. If the foundation of mathematics is inconsistent, then there is a reductio ad absurdum argument that proves a false statement. (More to the point, if the foundations of mathematics are inconsistent, then every statement, whether true or false, is provable using a reductio ad absurdum argument. This would make such foundations less useful than guessing in practical mathematical applications!)

Let me now weigh in, philosophically. I consider the question of whether our axiom system is consistent to be a valuable one, but after looking at it for quite a while, I am convinced (though I have not proved) that ZFC is consistent. It produces some strange results that make some people uncomfortable, but it is a theory that I believe to be consistent. Thus I expect that no one will ever prove that ZFC is inconsistent, but I admit that I have no (finitary) proof of its consistency. (For PA, there are infinitary proofs of consistency, but I am convinced that on the meta-level, these require an ontological commitment to the consistency of ZF or ZFC.) Moreover, the attempts to provide a (finitarily provably) consistent foundation for mathematics tend to lead either to oversimplified set theories lacking adequate expressive power or to extremely complicated set theories that will not gain wide acceptance, primarily because their axioms are difficult to understand intuitively. (As it is, some people find the axioms of ZF to be more complicated than they want to deal with.
Personally, I find them quite simple to understand, for they were designed to model a consistent portion of the inconsistent Naive Set Theory developed by the genius of Georg Cantor.)
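The claim earlier in this piece that an inconsistent foundation proves every statement, true or false, is the principle traditionally called ex falso quodlibet, or the principle of explosion. In classical propositional logic it can be checked mechanically: the formula (P and not P) -> Q is true under every assignment of truth values, and so is (P and not P) -> not Q. The brute-force truth-table sketch below (hypothetical helper names; it covers the propositional principle only, not the first-order setting of ZF) does exactly that.

```python
from itertools import product
from typing import Callable


def is_tautology(formula: Callable[..., bool], arity: int) -> bool:
    """Evaluate a propositional formula, given as a Python function of
    `arity` boolean arguments, under every truth assignment."""
    return all(formula(*values)
               for values in product([False, True], repeat=arity))


def implies(a: bool, b: bool) -> bool:
    return (not a) or b


def explosion(p: bool, q: bool) -> bool:
    # (P and not P) -> Q: a contradiction implies an arbitrary Q ...
    return implies(p and not p, q)


def explosion_negated(p: bool, q: bool) -> bool:
    # ... and equally implies not Q.
    return implies(p and not p, not q)


def just_q(p: bool, q: bool) -> bool:
    # For contrast: Q on its own is not a tautology.
    return q


print(is_tautology(explosion, 2))          # True
print(is_tautology(explosion_negated, 2))  # True
print(is_tautology(just_q, 2))             # False
```

Because both Q and not Q follow from a contradiction, a proof system with even one contradiction can no longer distinguish true statements from false ones.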