

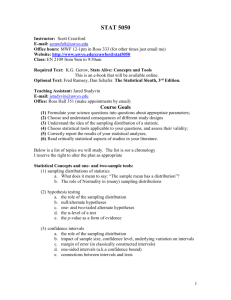

Prospectus: THE LOGIC OF STATISTICAL REASONING

The Logic of Statistical Reasoning (LSR) fills a gap among statistics texts for social science students. The current population of texts varies somewhat in length, style of writing, graphics format and the kinds of examples used. But they replicate earlier texts in assuming that the ground to be covered in the introductory course has not changed significantly over the last two or three decades. While statistics texts haven’t changed, the quantitative methods used in social research have. The result is that students may leave the introductory course with some understanding of statistical inference, yet they are ill-equipped to read the research literature. We have addressed that problem in LSR, and in the process produced a text that is pedagogically stronger than most on the market.

Like many other texts, LSR assumes that the typical student in the introductory social statistics course has a very limited mathematical background. But unlike other texts, LSR emphasizes critical thinking about the logic underpinning various statistical methods. The goal of the book is to help the student become a thoughtful reader of quantitative analysis. Our experience demonstrates that students are capable of such critical thinking even when they lack mathematical sophistication.

LSR uses three strategies to encourage critical thinking about statistical methods:

-- LSR emphasizes models as a fundamental metaphor in all quantitative analysis, from the simplest hypothesis tests through regression. The idea of the model becomes the basis that structures critical thinking. And the models allow students to connect the statistical procedures to the substantive concerns that motivate them.

-- LSR uses repetition with variation to underpin its pedagogy. All hypothesis tests and confidence intervals and all measures of goodness of fit are treated as variations on a theme. This builds confidence and helps students see connections. LSR also uses a consistent set of examples in every chapter so that students develop familiarity with the substance of the examples and also learn that there is more than one way to approach the same research question.

-- LSR does not elaborate on tools rarely used in contemporary research. Rather, the emphasis on models as a master metaphor allows LSR to move briskly to regression, which is the basis of nearly all statistical analysis in the social sciences.

The implementation of these strategies gives LSR a new and much stronger pedagogical approach than existing texts. It reflects both the reality of statistical practice the students will encounter in other courses and in their jobs, and the realities of how students with limited mathematical backgrounds use statistics.

Competition. There are more than a dozen texts targeting the introductory course in statistics for sociology and other social science students, so detailed comparison with all of them is not practical. LSR is as clearly written as the best of them and has a better pedagogic strategy. A crucial issue for a new text is how well its outline parallels that of texts currently being used, since similarity encourages adoption. However, the best-selling texts (Frankfort-Nachmias & Guerrero, Healey, and Levin & Fox) each have somewhat different outlines, so no new text can match all of them. The outline of LSR is closest to that of Healey.

Market. LSR is intended for the introductory social statistics courses that are taught in all sociology departments. We have chosen recurring examples that also make it suitable for students in criminal justice, health sciences and environmental studies, and the text has been tested with these audiences as well as with sociology students.

Status. The full manuscript of LSR, including examples and exercises, is complete. This text has been used in draft several times in introductory social statistics courses.
We are open to developing supplemental materials but have not yet done so.

THE LOGIC OF STATISTICAL REASONING
Thomas Dietz & Linda Kalof
Michigan State University
July 2005

TABLE OF CONTENTS

PREFACE: A STRATEGY FOR APPROACHING QUANTITATIVE ANALYSIS
Statistics is hard
Statistics is important
Quantitative analysis as craftwork
The discourse of science
A strategy for learning
What have we learned?

CHAPTER 1: AN INTRODUCTION TO QUANTITATIVE ANALYSIS
What is statistics?
Models to explain variation
Explaining variation
The use of statistical methods
Types of error
Error in models
Sampling error
Randomization error
Measurement error
Perceptual error
Comparison to random numbers
Assumptions
What have we learned?
Applications
Advanced Topic: A third and more technical definition of statistics
Advanced Topic: Ways of drawing probability samples
Feature: Tea tasting and random numbers
Feature: Diversity in Statistics: Profiles of African American, Mexican American and Women Statisticians

CHAPTER 2: SOME BASIC CONCEPTS
Key Concepts
Variables
Levels of measurement
Nominal
Ordinal
Interval
Ratio
Tools for working with measurement levels
Scaling
Other terms
Labeling variables
Constants
Functions
Units of analysis
Data structure
Sample size and sample selection
What have we learned?
Applications
Exercises

CHAPTER 3: DISPLAYING DATA ONE VARIABLE AT A TIME
Graphic display of nominal and ordinal data
Pie chart
Bar chart
Dot plot histogram
Graphic display of continuous data
One-way scatterplot
Cleveland dotplot
Histogram
Stem and leaf diagram
Skew and mode
Rules for graphing
What have we learned?
Applications
Exercises
Advanced Topic: Tukey’s new way of tabulating
Feature: John W. Tukey

CHAPTER 4: DESCRIBING DATA
Descriptive statistics and exploratory analysis
Measures of central tendency
The mean
Deviations from the mean
Effect of outliers
The median
Deviations from the median
Effect of outliers
The trimmed mean
The mode
Measures of variability
Variance
Standard deviation
Median absolute deviation from the median
Interquartile range
Relationship among the measures
Boxplot
Side-by-side boxplot
What have we learned?
Applications
Exercises
Advanced Topic: Squared deviations and their relationship to the mean
Advanced Topic: Breakdown point
Feature: Models, error, and the mean: A brief history

CHAPTER 5: PLOTTING RELATIONSHIPS AND CONDITIONAL DISTRIBUTIONS
Scatterplot
Time series graph
Bivariate plot for categorical or grouped independent variables
An historical example
What have we learned?
Applications
Exercises
Advanced Topic: Scatterplot matrices
Advanced Topic: Ordinary least squares
Advanced Topic: Smoothers

CHAPTER 6: CAUSATION AND MODELS OF CAUSAL EFFECTS
Causation and correlation
Causation in non-experimental data
Explanatory and Extraneous Variables
Causal notation
Assessing causality: Elaboration and controls
Another example
An example with continuous variables
What have we learned?
Applications
Exercises
Advanced Topic: More elaborate causal notation
Advanced Topic: Further ideas in assessing causality
Feature: What is an experiment?
Feature: What is correlation?
Feature: Causal notation and measurement

CHAPTER 7: PROBABILITY
What is random?
Probability
Equal probability and independence
Independent events
Random variables and models of error
Probability distributions
The Normal distribution and the law of large numbers
Random variables and data analysis
Sampling error
Randomization error
Measurement error
Left-out variables
Permutation errors
What have we learned?
Feature: A brief note on Thomas Bayes
Feature: Making decisions about uncertainty
Feature: Cumulative probability distributions
Feature: Kinds of samples and probability

CHAPTER 8: SAMPLING DISTRIBUTIONS AND INFERENCE
The logic of inference
The sampling experiment
The sampling distribution
The expected value and the standard error
The law of large numbers
Small samples and estimating the population variance
The t distribution
What have we learned?
Advanced Topic: Sampling with replacement and sampling from large populations
Advanced Topic: Bootstrapping
Advanced Topic: Small sample versus large sample estimators
Advanced Topic: Properties of estimators (bias, efficiency, mean square error, consistency, robustness, maximum likelihood estimators)
Feature: Who invented the Central Limit Theorem?
Feature: Pearson to Gosset to Fisher: The coevolution of statistical and scientific thinking
Feature: William Sealy Gosset

CHAPTER 9: USING SAMPLING DISTRIBUTIONS: CONFIDENCE INTERVALS
Confidence intervals using the Central Limit Theorem
Constructing the confidence interval for large samples
Sampling experiments
Confidence intervals using the t distribution
Size of confidence intervals
Graphing confidence intervals
Confidence intervals for dichotomous variables
Rough confidence intervals
What have we learned?
Applications
Exercises

CHAPTER 10: USING SAMPLING DISTRIBUTIONS: HYPOTHESIS TESTS
The logic of hypothesis tests
The formal approach
Steps in testing a hypothesis
An example
An example with a small sample
One-sided and two-sided tests
Small sample tests
Hypotheses about differences in means
A small sample difference in means test
Models for differences in means
Limits to hypothesis tests
What have we learned?
Applications
Exercises
Advanced Topic: Thinking about the hypothesis test as a model
Advanced Topic: The more correct, less simple formulas
Advanced Topic: Confidence intervals and hypothesis tests

CHAPTER 11: THE SUBTLE LOGIC OF ANALYSIS OF VARIANCE
A review of the two-group example
More than two groups example
A model
Partitioning variance
Inference in analysis of variance (the F-test)
A step-by-step example
What have we learned?
Applications
Exercises
Advanced Topic: What is Normally-distributed?
Advanced Topic: F versus t and z
Feature: Analysis of variance the hard way
Feature: How to conduct many tests with the same data

CHAPTER 12: GOODNESS OF FIT AND MODELS OF FREQUENCY TABLES
Chi-square applied to a frequency table
Assumptions for chi-square
Chi-square and the association between two qualitative variables
Beyond 2 x 2 tables
What have we learned?
Applications
Exercises

CHAPTER 13: BIVARIATE REGRESSION AND CORRELATION
Straight Lines
Fitting a straight line to data
Lines as summaries
Error in fit
Ordinary least squares
OLS and conditional distributions
Calculating the OLS line
Calculating B
Calculating A
Calculating Ŷ
Calculating E
Goodness of fit
Fit and error
Partitioning sums of squares or variances
R and R²
Pearson’s correlation coefficient
Interpreting Regression Lines
Interpreting A
Interpreting B
Interpreting R²
Interpreting r
Interpreting E
Inference in regression: A basic approach
Working with B
Working with A
Working with R²
What have we learned?
Applications
Exercises
Advanced Topic: Breakdown point
Advanced Topic: Pearson’s r and B coefficients
Advanced Topic: Confidence intervals for predictions
Advanced Topic: Thinking about inference in regression
Feature: The invention of regression

CHAPTER 14: BASICS OF MULTIPLE REGRESSION
Two independent variables
Terminology and notation
Geometric view
Algebraic view
Calculations
Interpretation
Interpreting a
Interpreting b
Interpreting R²
Relationship between bivariate and multiple regression
Inference
Tests regarding values for a and b
Test for R²
Partitioning variance and statistical control
Multiple regression
Other features of multiple regression coefficients
Collinearity
Sample size
Statistical control
A three independent variable example
What have we learned?
Applications
Exercises
Advanced Topic: Adjusted R²
Advanced Topic: Residuals and statistical control