1.2.4.4 Automated Generation of IL2


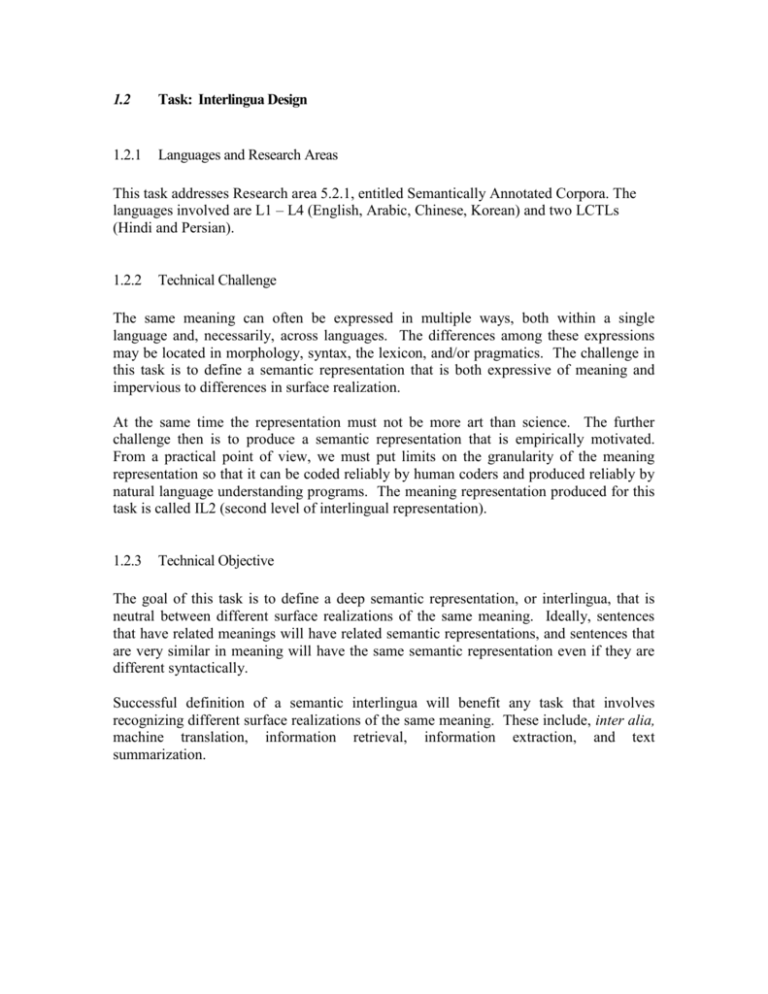

1.2 Task: Interlingua Design

1.2.1 Languages and Research Areas

This task addresses Research area 5.2.1, entitled Semantically Annotated Corpora. The languages involved are L1–L4 (English, Arabic, Chinese, Korean) and two LCTLs (Hindi and Persian).

1.2.2 Technical Challenge

The same meaning can often be expressed in multiple ways, both within a single language and, necessarily, across languages. The differences among these expressions may be located in morphology, syntax, the lexicon, and/or pragmatics. The challenge in this task is to define a semantic representation that is both expressive of meaning and impervious to differences in surface realization. At the same time the representation must not be more art than science. The further challenge then is to produce a semantic representation that is empirically motivated. From a practical point of view, we must put limits on the granularity of the meaning representation so that it can be coded reliably by human coders and produced reliably by natural language understanding programs. The meaning representation produced for this task is called IL2 (second level of interlingual representation).

1.2.3 Technical Objective

The goal of this task is to define a deep semantic representation, or interlingua, that is neutral between different surface realizations of the same meaning. Ideally, sentences that have related meanings will have related semantic representations, and sentences that are very similar in meaning will have the same semantic representation even if they are different syntactically. Successful definition of a semantic interlingua will benefit any task that involves recognizing different surface realizations of the same meaning. These include, inter alia, machine translation, information retrieval, information extraction, and text summarization.
1.2.4 Technical Approach

1.2.4.1 Background

The definition of IL2 has several sub-parts:

(1) an ontology for labeling nodes in IL2;
(2) relations for linking elements from the ontology in IL2;
(3) a typology of extended paraphrase relations, within and across languages, and a decision about which types of paraphrases will be normalized in IL2;
(4) procedures for generating IL2 representations automatically where possible;
(5) a system of linkages between annotations; and
(6) a syntactic specification of the format of an IL2.

The IL2 definition will be documented in a coding manual. The various parts of the IL2 specification will be developed and tested in Year 1. Production of IL2 annotations will occur in Year 2 and the option year.

1.2.4.2 The Ontology and the Relations

The ontology used for IL1 will be expanded for IL2 by including entries for events and their attendant relations from PropBank and FrameNet. (See Section 1.2.5 for a brief description of these projects and their relation to our work.) We are also exploring the semi-automatic identification of event and relation types (discussed further below in this subsection), which might also be incorporated into the ontology. In this section we describe the basic assumptions underlying the ontology, while in Section XXXIinsertCrossReferenceHereXXX we describe the construction of that ontology in more detail.

Consider the RIDE_VEHICLE semantic predicate (from FrameNet) in which a Theme (traveler) moves from a Source (originating) location to a Goal (destination) location along some Path in a Vehicle. All of the following sentences express more or less the same meaning while simultaneously enjoying various pragmatic differences:

(1) The vice president traveled by plane from Boston to the home office.
(2) The vice president returned from Boston to the home office by plane.
(3) The vice president took a plane from Boston back to headquarters.
(4) The vice president flew from Boston to the home office.
While the essential meaning remains the same for all four sentences, their syntactic structure varies. For example, in (1)-(2) the Vehicle plane shows up in a prepositional phrase; in (3) plane is the direct object of the verb; and in (4) the plane is implied by the verb fly and is thus absent from the surface structure of the sentence. Still, in all four cases, the vice president is the Theme/traveler, Boston is the Source, the home office (whether designated as ‘home office’ or as ‘headquarters’) is the Goal, the Path between Boston and the home office is unspecified but is presumed to involve air travel, and the Vehicle is a plane.

Based on the FrameNet RIDE_VEHICLE semantic predicate (a.k.a. ‘frame’) and its set of corresponding relations (a.k.a. frame elements or slots), including, among others, Theme, Source, Goal, and Vehicle, the IL2 representation of all four sentences would share the following elements:

RIDE_VEHICLE
  (Theme, vice_president)
  (Source, Boston)
  (Location, Boston)
  (Goal, home_office)
  (Vehicle, plane)

Such a representation normalizes over the use of different predicates across the four sentences. In this particular situation it makes explicit an argument (‘plane’) that is encoded within the meaning of the verb ‘fly.’ In other cases, such a representation may also normalize over disparate IL1 theta role assignments (e.g., the Agent of ‘buy’ is the Beneficiary of ‘sell’).

Frames are evoked by words. For example, the RIDE_VEHICLE frame is evoked by specific senses of cruise, fly, hitchhike, jet, ride, sail, and taxi. In our research (Green, 2004; Green, Dorr, & Resnik, 2004), we are identifying frames automatically through a process of discovering sets of word senses that evoke a common frame, based largely on data about word senses in WordNet 2.0 and including relationships implicit in the glosses and example sentences. This effort has a dual payoff, since it identifies frames and the association between word senses and frames simultaneously.
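As a rough illustration of how sentences (1)-(4) could collapse to one frame instance, the following minimal Python sketch normalizes four hand-written analyses. It is not the project's procedure: the lookup tables `PREDICATE_FRAME`, `IMPLIED_SLOTS`, and `CANONICAL_FILLER`, the `FrameInstance` class, and the `normalize` function are all hypothetical names built by hand for this example, and only the Theme, Source, Goal, and Vehicle relations are modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FrameInstance:
    """A frame name plus an unordered set of (relation, filler) pairs."""
    frame: str
    slots: frozenset


# Hypothetical, hand-built tables for this example only.
# Which frame each predicate evokes in these four sentences.
PREDICATE_FRAME = {
    "travel": "RIDE_VEHICLE",
    "return": "RIDE_VEHICLE",
    "take": "RIDE_VEHICLE",
    "fly": "RIDE_VEHICLE",
}
# Slot fillers that are encoded in the meaning of the predicate itself.
IMPLIED_SLOTS = {"fly": {"Vehicle": "plane"}}
# Surface phrases mapped to a shared concept label.
CANONICAL_FILLER = {
    "the vice president": "vice_president",
    "the home office": "home_office",
    "headquarters": "home_office",
    "a plane": "plane",
}


def normalize(predicate, surface_slots):
    """Build a frame instance from a predicate and its surface arguments."""
    # Start from fillers implied by the predicate, then add surface fillers.
    slots = dict(IMPLIED_SLOTS.get(predicate, {}))
    for relation, filler in surface_slots.items():
        slots[relation] = CANONICAL_FILLER.get(filler, filler)
    return FrameInstance(PREDICATE_FRAME[predicate], frozenset(slots.items()))


# Hand-written analyses of sentences (1)-(4); no parser is involved.
ANALYSES = [
    ("travel", {"Theme": "the vice president", "Source": "Boston",
                "Goal": "the home office", "Vehicle": "plane"}),
    ("return", {"Theme": "the vice president", "Source": "Boston",
                "Goal": "the home office", "Vehicle": "plane"}),
    ("take", {"Theme": "the vice president", "Source": "Boston",
              "Goal": "headquarters", "Vehicle": "a plane"}),
    ("fly", {"Theme": "the vice president", "Source": "Boston",
             "Goal": "the home office"}),
]

instances = [normalize(pred, slots) for pred, slots in ANALYSES]
print(len(set(instances)))  # 1: all four analyses yield the same instance
```

Storing the slots as a frozen set makes the instances order-independent and hashable, so equality of meaning reduces to equality of instances. The sketch leaves aside the hard part, which is obtaining the analyses and tables in the first place.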
This extensional identification of frames avoids the need otherwise to posit frames in an ad hoc manner. The co-occurrence in the text being annotated of words that evoke common frames will aid in selecting semantic predicates from the ontology semi-automatically.

1.2.4.3 Typology of Extended Paraphrase Relations

We continue to gather examples of extended paraphrase relations from the research literature (see the first two columns of Table 1, based largely on Hirst, 2003; Kozlowski, McCoy, & Vijay-Shanker, 2003; and Rinaldi et al., 2003) and, more importantly as we move forward, from the corpus of multiple translations of individual source texts that we are annotating. Assuming a basic faithfulness in the translations, translations of the same text should receive the same semantic representation within IL2. The linguistic relations between corresponding sentences in parallel translations will be studied to augment this typology.

1.2.4.4 Automated Generation of IL2

Where possible, we will develop procedures for automatically normalizing IL2 representations for particular paraphrase types listed in Table 1. Some of these transformations may involve post-processing IL1 annotations, while others may involve post-processing IL2 annotations. An example where such normalization should be possible is the equivalence between the IL2 representation of a sentence involving clause subordination and a pair of anaphorically linked sentences with the same meaning.

| Relationship type | Example | Where normalized |
| --- | --- | --- |
| Syntactic variation | The gangster killed at least 3 innocent bystanders. vs. At least 3 innocent bystanders were killed by the gangster. | IL0 |
| Lexical synonymy | The toddler sobbed, and he attempted to console her. vs. The baby wailed, and he tried to comfort her. | IL1 |
| Morphological derivation | I was surprised that he destroyed the old house. vs. I was surprised by his destruction of the old house. | IL2 |
| Clause subordination vs. anaphorically linked sentences | This is Joe’s new car, which he bought in New York. vs. This is Joe’s new car. He bought it in New York. | IL2 |
| Different argument realizations | Bob enjoys playing with his kids. vs. Playing with his kids pleases Bob. | IL2 |
| Noun-noun phrases | She loves velvet dresses. vs. She loves dresses made of velvet. | IL2 |
| Head switching | Mike Mussina excels at pitching. vs. Mike Mussina pitches well. vs. Mike Mussina is a good pitcher. | IL2 |
| Overlapping meanings | Lindbergh flew across the Atlantic Ocean. vs. Lindbergh crossed the Atlantic Ocean by plane. | IL2 |
| Comparatives vs. superlatives | He’s smarter than everybody else. vs. He’s the smartest one. | Not normalized |
| Different sentence types | Who composed the Brandenburg Concertos? vs. Tell me who composed the Brandenburg Concertos. | Not normalized |
| Inverse relationship | Only 20% of the participants arrived on time. vs. Most of the participants arrived late. | Not normalized |
| Inference | The tight end caught the ball in the end zone. vs. The tight end scored a touchdown. | Not normalized |
| Viewpoint variation | The U.S.-led invasion/liberation/occupation of Iraq . . . You’re getting in the way. vs. I’m only trying to help. | Not normalized |

Table 1. Relationship Types Underlying Paraphrase

1.2.4.5 Annotation Linkage

IL2 will incorporate two kinds of links between annotations. One link type will relate IL2 annotations to their corresponding IL1 annotations. The other link type will relate co-referring frame slots. We will study existing co-reference strategies and adopt the approach that best meets our needs.

1.2.4.6 Syntactic Specification of Format

As with IL1, for display purposes IL2 representations will take the general form of dependency trees.
Information kept at nodes within the tree will include, as appropriate, a link to the corresponding IL1 node, one or more concepts from the ontology for the event or object in question, and various features (e.g., co-reference links, the relation between an object and its governing event). The data will be maintained in a specific format (the ‘.fs’ format).

1.2.5 Comparison with Other Work/Uniqueness

Two other major research efforts with some degree of similarity to our work are FrameNet and PropBank.

FrameNet, which is based on the theory of Frame Semantics (Lowe, Baker, & Fillmore, 1997), is also producing a set of frames and frame elements for semantic annotation, with associated sets of evoking words (not word senses). The frames and frame elements are validated through corpus annotation, but the intuition-based origin of the FrameNet frames stands as a serious impediment to building a comprehensive inventory of frames. Through its extension to other languages (for example, the SALSA project [http://www.coli.uni-sb.de/lexicon] applies frames to German), FrameNet may lay claim to multilinguality. However, the set of frames and frame elements is modified as needed in other languages, which means that FrameNet is not a true interlingual representation.

PropBank is adding semantic annotation to the Penn English Treebank in the form of frames and arguments (or roles). The identification of roles tends to be verb-specific, although the roles for some classes of verbs (e.g., buy, sell, price, cost) are labeled so as to show their interrelationships. The use of Levin’s (1993) verb classes is not able, however, to support the full discovery of semantically related verbs.

In contrast, we emphasize establishing a semantic representation that is not dependent on specific lexical items, which in turn promotes the development of an interlingua that extends across languages.

[Owen: I don’t know enough about this to deal with it properly.] Note that FrameNet and PropBank are now being merged as a joint project; we need to mention that. To be added. Ontobank!