Reference Extraction from Environmental Regulation Texts

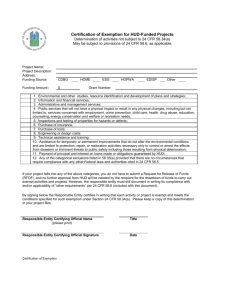

advertisement

Reference Extraction from Environmental Regulation Texts Shawn Kerrigan Spring 2001 1 Introduction 1.1 Problem to be investigated Environmental regulation provisions contain a large number of casual English references to other regulation provisions. Extracting complete references that make explicit the referenced provisions poses an interesting NLP problem. I developed three research questions related to this information extraction task that I sought to answer in with this project. Can an effective parser be built to recognize and transform regulation references into a standard format? Can an n-gram model be built to help the parser “skim” through a document very quickly without missing many references? Would the n-gram referenceprediction model be a useful tool for context-free grammar development? 1.2 Approach The references I attempted to make explicit range from simple (“as stated in 40 CFR section 262.14(a)(2)”) to more complex (“the requirements in subparts G through I of this part”). These references can be converted into lists of complete references such as: 40.cfr.262.14.a.2; and 40.cfr.265.G, 40.cfr.265.H and 40.cfr.265.I, respectively. Parsing these references required the development of a context-free grammar (CFG) and semantic representation/interpretation system. Statistical models were used to investigate the ability to predict that a reference exists even when the reference could not be parsed using the grammar specification. N-gram models were learned from the text preceding references that the parser identified. These n-gram models were used to return sections of text that appeared to have unidentified references within them, so as to facilitate development of a more robust reference parser. This method provided automatic feedback on missed references so that the most difficult examples from the corpus could be used as targets for further parser development. The statistical model can also be used to accelerate the parsing process by only parsing parts of the corpus that appear to contain references. Experimenting with the statistical model for the parser dramatically improved the parsing speed. When the predictions are accurate, the CFG parser only needs to process text in areas of predicted references, allowing it to skim over significant portions of the corpus. 1 1.3 Running the program The source code, makefile, corpus files and sample results files are included with this report. All coding was done in java. The program can be run using the following command line examples: There are three basic ways to run the program from the command line: > java RefFinder > java RefFinder > java RefFinder [unigram lambda] [-fsi] [grammar [-fsi] [grammar [-fsi] [grammar [bigram lambda] file] [lexicon file] [train corpus] file] [lexicon file] [train corpus] [test corpus] file] [lexicon file] [train corpus] [test corpus] [trigram lambda] The [-fsi] options are: f = save the references that are found in “found.refs” s = save the suspected references in “suspect.refs” i = insert the references found in the training file back into the xml document If the unigram, bigram and trigram lambdas are not specified, default values are used. An example run of the parser to extract a few references is: > java RefFinder –f parser.gram parser.lex sample.xml A larger example run of the program with training is: > java RefFinder –fs parser.gram parser.lex 40P0262.xml 40P0261.xml 2 Overall System Architecture The problem of extracting good references from the text source was divided into a number of tasks, and specialized components were designed for each particular task. Figure 1 shows the five core modules for the reference extraction system. In addition to these five modules seven other modules were developed to perform subtasks and serve as data structures. RefFinder.java RegXMLReader.java NGramModel.java Parser.java ParseTreeParser.java Figure 1. Basic System Architecture 2 The RefFinder.java module controls the overall operation of the program. The basic operation of this module is to initialize a new parser, open the regulation XML file (through RegXMLReader.java), and build an N-gram model (using NGramModel.java) by parsing through the regulation file. In this basic mode of operation the program can write all the identified references to a “found.refs” file. The RefFinder module includes extended functionality for a variety of tasks like running accelerated parses (using the ngram models) through a second regulation XML file, evaluating probabilistic versus complete document parses, running brute-force searches through possible values for probabilistic parameters, and producing output files containing input lines that could not be parsed but were predicted to contain references. The RegXMLReader.java module is used to read the XML regulations. It implements some standard file reading operations like readline(), close(), etc, but specializes these operations to the parsing task. Readline() returns that next line of text that is contained within a <regulation_text> </regulation_text> element. The object provides information on where within the regulation the current text is coming from (what subpart, section, etc.). It also allows the RefFinder to provide references that will be added to the XML file as additional tags immediately following the closing </regulation_text> tag. The NgramModel.java records all the string tokens preceding identified references during the training phase, and then it counts the number of times these n-grams appear in the source file to build probability estimates. The n-gram model differs from a standard approach in that not all possible n-grams are constructed from the corpus vocabulary to estimate their probabilities of occurrence. Only token sequences that might predict a reference following them are important, so no smoothing is done and probabilities are only calculated for token sequences that actually precede a reference during training. The probability is calculated as (# of times preceding a reference / # of times occurring in corpus file). Requesting probability values for any other token-sequence returns zero. The Parser.java module is a modified simple tabular parser. The parser takes a string of X tokens as input, and attempts to build the longest parse string it can starting with the first token. For example, the reference “40 CFR 262.12(a) is used to…”, should be parsed as “40 CFR 262.12(a)” and not as “40 CFR”. Some special features developed to accommodate these types of parses were to implement matching functions for all the special tokens (bracketed letters, numbers, section symbols, etc) and to modify the termination conditions for the tabular parsing algorithm. Parses are considered complete if the category stack is empty, and the input stack still contains tokens. The complete correct parse is selected from the set of all possible parses by identifying the parse that consumed the largest number of input stack tokens. The parsing system is described in more detail below. The ParseTreeParser.java module does the semantic interpretation by parsing though the parse tree constructed by Parser.java and returning a list of well-formed references. This module is also at its core a simple tabular parser that processes parse trees in a modified 3 depth-first mode like input stacks. The grammar for this module is restricted to rules that start with “REF”, essentially reducing the grammar to a list of legal well-formed reference templates. The lexicon for this module specifies how to treat the various categories developed for the Parser.java module when this semantics processor comes across them, and there are special processing rules for all of the categories. The use of these grammar and lexicon specification files builds a great deal of flexibility into the parsing system. These specification files also should allow the system to be extended to work well for parsing any type of reference if some simple tagging conventions are followed. The ParseTreeParser is described in more detail below. A sixth major component developed for the RefFinder was a WordQueue.java object. This utility class was used by several of the components discussed above to tokenize and buffer the text input. Breaking up the input into small enough chunks for processing and properly splitting these input chunks into tokens for processing was one of the more difficult tasks in this project. This component is discussed in more detail below. 3 3.1 Parser System Development Grammar Categories As mentioned above, the parsing system is based on a simple tabular parser. The termination conditions were changed so that the parse is considered complete if the category stack was empty. The parser was also modified to recognize a number of special category tokens in addition to the lexicon. Grammar specifications can use special categories like “txt(abc)” to match “abc” input, which also makes some patterns more transparent by bypassing the lexicon. Grammar specifications can use the categories below, in addition to a vocabulary specified in the lexicon: Category INT DEC NUM UL LL ROM BRAC_INT BRAC_UL BRAC_LL BRAC_ROM Matches Integers Decimal numbers Integers or decimal numbers Uppercase letters Lowercase letters Roman numerals Integers enclosed in () Uppercase letters enclosed in () Lowercase letters enclosed in () Roman numerals enclosed in () A third type of grammar category was of the form “ASSUME_LEV1”, where LEV1 could be any level within the reference hierarchy. When the parser encounters a category that starts with “ASSUME_” during a parse attempt, it requests whatever follows the 4 underscore from the RegXMLReader (in this case “LEV1”) and the returned value is added to the parse as a match.1 The RegXMLReader returns the appropriate reference level for the current part of text it is reading as the assumed reference. 3.2 Tokenizing the input Preparing the input for the parser was a difficult problem, requiring several design iterations to develop a good system. The WordQueue is used to tokenize and buffer the input. Lines are read from the input file and fed into the WordQueue until it contains more than some specified number of tokens. The input is then passed to the parser to look for a reference. If a reference is found, the tokens that constitute the reference are removed from the queue. Otherwise, the first token in the queue is removed and the input is passed back to the parser. If the WordQueue’s length drops below a threshold, more lines are read from the input file and added to the queue. The difficult part was developing an algorithm for the WordQueue to use for tokenizing the input. The first attempt was to split on white space and then split off any trailing punctuation. Upon testing, this proved woefully inadequate. Some of the text included lines like, “oil leaks (as in §§279.14(d)(1)). Materials…”. The tokenized version of this line (using a space delimiter) should look like, “oil leaks ( as in § § 279.14 (d) (1) ) . Materials”. The algorithm cannot split on all “.” because some may occur as part of a number. It cannot split on all “(“ or “)” because some may be part of a (d) marker that should be preserved. The final solution was to first split the input on white space, and then do a second pass on each individual token. This second pass involves splitting the token into smaller tokens until no more splits are possible. The second process looks to split off starting “§” symbols, trailing punctuation, unbalanced opening or closing parenthesis2, and groups of characters enclosed in parenthesis. 3.3 Grammar Development Grammar and lexicon development was started by reading through some regulations and manually identifying patterns. After patterns were encoded in the grammar and lexicon, the RefFinder system was run to identify references and produce an output file containing failed parse attempts for which the n-gram model predicted a reference would be found (“suspected” references). This is similar to the treatment of “empty” rules, except an assumed value is matched. This means splitting off unequal numbers of “(“ or “)” on a token. For example, “(d))” is split into “(d)” and “)”. 1 2 5 It is difficult to quantify the value of these “suspected” references, but they were extremely useful. The first iteration on building the grammar and lexicon files only found 28 references in a particular regulation file. The “suspect.refs” output file consisted almost entirely of missed references, with only a few false predictions. This reduced the difficultly in identifying weak areas where the grammar needed extension. As the quality of the grammar increased, so did the number of identified references and the number of false predictions in the “suspect.refs” file. The final system found 331 references in the regulation file, and the “suspect.refs” file consisted entirely of false predictions. Although it is difficult to quantify the value of the n-gram reference-prediction model for grammar development, the answer to the question, “Is it valuable?”, is clearly “yes”. 4 Statistical Parsing Evaluation Some experiments were done to test how valuable an n-gram model would be for speeding up the parsing process by skimming over text that was not predicted to contain a reference. To develop a good n-gram model, a regulation corpus of about 650,000 words was assembled. The parser found 8,503 references training on this corpus.3 These 8,503 references were preceded by 184 unique unigrams, 1,136 unique bigrams, and 2,276 unique trigrams. For these n-grams to be good predictors of a reference, they should occur frequently enough to be useful predictors, and they should not occur so frequently in the general corpus that their reference prediction value is washed out. For the unigrams, it was interesting to note that 18 of the most “certain” predictors were highly “certain” because they were only seen once in the entire corpus. Other unigrams, such as “in”, which one might intuitively expect to be a good predictor for references, had a surprisingly low 5% prediction. This is because the 2,626 references that were preceded by “in” were so heavily outweighed by the 49,325 total occurrences of “in” in the corpus. These two factors made the unigram model a weak one, since words with high certainty tended to be those that were rarely seen, and words that preceded many references tended to be common words that also appeared often throughout the corpus. One exception to this was the word “under”, which preceded 1,135 references and only appeared 2,403 times in the corpus. The bigram model was a good predictor of references. While over 200 (18%) of the bigrams only occurred once in the corpus, the bigrams that preceded a large number of references were not washed out by an even larger number of occurrences in the corpus This also demonstrates the high concentration of references in the regulation documents – a reference occurs on average once every 76 words. 3 6 (as “in” did for unigrams). For example, “requirements of”, preceded 1,059 references, and was seen 1,585 times total.4 The trigram model helped refine some of the bigram predictors. For example, “described in” with a 61% prediction rate was refined into 35 trigrams with prediction probabilities ranging from 11% to 100%. In general however, the trigram model appeared to split things too far, since about 1/3 of the trigrams only appeared a single time in the entire corpus. The three n-gram models were used together by calculating a weighted sum of unigram (U), bigram (B) and trigrams (T) before attempting a parse of the input. A threshold of 1.0 was used to determine if the parse should be carried out. Changing the weightings for the parameters changes which parses are attempted. 1U 2 B 3T 1 Equation 1. Threshold function for n-gram model The n-gram model is effective for speeding up parsing, but there is a tradeoff between parsing speed and recall. To study this tradeoff the n-gram model was trained on the 650,000-word corpus and then tested on a 36,600-word corpus. To test the system a brute-force search was done through a range of ’s (1 = 1-20,000, 2 = 1-10,000, 3 = 1640). There were over 10,000 passes through the test file completed, and the number of reference parse attempts and successful reference parses were recorded. Examples with the lowest number of parse attempts for a given level of recall were selected from the test runs. This provided an efficient frontier that shows the best efficiency (successful parses / total parse attempts) for a given level of recall. These results are shown in Figure 2. Some other examples are “specified in” that occurred 874/1147, “defined in” which occurred 228/256, and “required by” which occurred 208/333. 4 7 Recall vs. Parse Attempts Trade-off Parse attempts as a multiple of the total # of references in document 30 25 20 15 10 5 0 0 0.2 0.4 0.6 0.8 1 Percent references found Figure 2. Trade-off between recall and required number of parse attempts The x-axis of the graph shows the level of recall for the pass through the test file. So as to provide an indicator of how much extra work the parser was doing, the y-axis shows the total number of parse attempts divided by the total number of references in the document. This means an ideal system would create a plot from (0,0) to (1,1) with a slope of 1, since it every parse attempt would result in an identified reference. There were at total of 569 references in the test corpus As can be seen from the graph, the system reasonably approximates an ideal parse prediction system for recall levels less than 90%. There is clearly a change in the difficulty of predicting references as the desired recall level goes above 90%. For recall levels between 0 and 90% the slope of the graph is about 1.7. This means the price of increasing the recall is relatively low, and the parser is not doing that much extra work. For recall levels above 90%, the slope of the graph becomes very large (greater then 400), which means any additional increase in recall will come at a very significant increase in the number of parse attempts. It was surprising to see that the prediction system was able to achieve 100% recall on the test set, which was previously unseen data. It was expected that the system would peak out in the upper 90% range. The 100% recall should not be achievable in general because there always exists a word that can precede a reference that has not been seen before in training. 8 Despite the steep curve to achieve recall above 90%, the total number of parses to achieve 100% was only 14,310. This compares quite well to the 37,132 parse attempts required to check the document for references without using the n-gram model (by attempting all possible parses). To further investigate the shape of the curve in Figure 2, plots of the coefficients were examined for the n-gram models. A rough but distinct trend in this data was observable. For the points on the “optimal frontier” between 0 and 60% the three n-gram models appear fairly balanced in terms of importance. For the points between 60% and 90% the trigram and bigram models dominated the unigram model. Above 90%, it appears the unigram model dominates the bigram and trigram models. It is not clear why there is a difference between the 0-60% and 60-90% range, but these findings do explain why there is a sharp change in the slope of Figure 2 around 90% recall. It appears the usefulness of the bigrams and trigrams is exhausted around this range, mostly likely to a sparseness problem in the training data. The only way to increase the number of reference predictions beyond this point is to shift the focus to the unigram model – which was noted above to have much lower accuracy than the bigram or trigram models. This results in a significantly steeper recall vs. parse attempts trade-off curve. 5 5.1 Semantic Parsing System Overall Architecture The semantic parsing system was built on top of a simple tabular parser that does a type of depth-first processing of the parse tree and treats each node as an input token. The processing deviates from strict depth-first processing when special control categories are encountered. Grammar and lexicon files provide control information to the semantic interpreter. The parsing algorithm differs from a simple tabular parser in that when a category label is found, it is not removed from the category search stack. Instead, the found category is marked “found” and remains on top of the stack. The next matching category can be the “found” category or the second category in the stack. If the second category in the stack is matched, it is marked found and the top category is removed. 5.1.1 Grammar The grammar file is essentially a list of templates that specify what type of reference is well formed. All parse tree parser grammar rules must start with “REF --> “. The grammar used for the environmental regulations was: REF --> LEV0 LEV1 LEV2 REF --> LEV0 LEV3 LEV4 LEV5 LEV6 LEV7 9 These two grammar rules correspond to the two types of references that were being searched for: 40.cfr.262.F (chapter, part, subpart) 40.cfr.262.12.a.13.iv (chapter, part, section, subsection, etc..) 5.1.2 Lexicon The lexicon file specifies how to treat different parsing categories. There are five semantic interpretation categories that can be used in the lexicon. These categories are used to classify the categories used earlier for the parser. These five semantic interpretation categories are: Category Meaning PTERM Indicates the node is a printing terminal string (to be added the reference string currently being built) Indicates the node is a non-printing terminal string (the node is ignored) Indicates the next child node of parent should be ignored and not processed Indicates the current reference string is complete, and a new reference string should be started Indicates that a list of references should be generated to make a continuous list between the previous child node and the next child node. (If the child node sequence was “262, INTERPOLATE, 265”, this would generate the list “263, 264”) NPTERM SKIPNEXT REFBREAK INTERPOLATE 5.2 Algorithms 5.2.1 Simple Semantic Interpretation The semantic parser works by attempting to match the category stack to the nodes in the tree. The parser maintains a “current reference” string that is updated as nodes in the parse tree are encountered. References are added to a list of complete references when the parser encounters “REFBREAK” or “INTERPOLATE” nodes, or completes a full parse of the tree. Two examples follow that explain this process in detail. 10 REF LEV0’ LEV0 INT 40 CFR CFR LEV1a’ LEV1a CONN’ LEV1a’ LEV1p CONN and LEV1a PART INT CONL2 parts 264 e Figure 3. Example Simple Parse Tree LEV1s INT 265 The original reference for the parse tree in Figure 3 was, “40 CFR parts 264 and 265”. The semantic interpretation parser transforms this into two complete references: 40.cfr.264, and 40.cfr.265. Figure 3 is an example of a simple parse tree that can be interpreted. The parser starts by expanding the REF category in its search list to “LEV0 LEV1 LEV2”. It then starts a depth-first parse down the tree, starting at REF. The LEV0’ node matches LEV0, so this category is marked as found. The LEV0 node also matches the LEV0 search category. Next the children of LEV0 are processed from left to right. Looking INT up in the lexicon shows it is a PTERM, so the current reference string is now “40”. Looking CFR up in the lexicon shows it is also a PTERM, so this leaf’s value is appended to the current reference string to form “40.cfr”. Next, LEV1a’ is processed, and a note is made that the incoming current reference string was “40.cfr”. LEV1a’ matches LEV1, so the top LEV0 search category is discarded and the LEV1 category is marked as found. Processing continues down the LEV1a branch of the tree to the LEV1p node. The PART child node is found to be a NPTERM in the lexicon, so it is not appended to the current reference string. INT is found to be a PTERM, so it is concatenated to the search string. Since CONL2 is also a NPTERM, the algorithm returns back up to LEV1a’. The next child node to be processed is CONN’, which is found to be a REFBREAK in the lexicon. This means the current reference is complete, so “40.cfr.264” is added to the list of references and the current reference is reset to “40.cfr”, the value it had when the LEV1a’ parent node was first reached. Processing then continues down from LEV1a’ to the right-most leaf of the tree. At this point the current reference is updated to “40.cfr.265” and a note is made that the entire tree has been traversed, so “40.cfr.265” is added the to the list of 11 identified references. Next the parser would try the other expansion of REF as “LEV0 LEV3 LEV4 LEV5”, but since it would be unable to match LEV3 this attempt would fail. The final list of parsed references would then contain 40.cfr.264 and 40.cfr.265. 5.2.2 Complex semantic interpretation The basic approach described in 5.2.1 was extended to handle references where the components of the reference do not appear in order. For example, the parser might encounter the reference “paragraph (d) of section 262.14”. A proper ordering of this reference would be “section 262.14, paragraph (d)”. To handle these cases, if the top of the category search stack cannot be matched to a node in the tree the remainder of the parse tree is scanned to see if the missing category appears elsewhere in the tree (a “backreference”). If the category is found, it is processed and appended to the current reference before control returns to the original part of the parse tree. If multiple references are found during the back-reference call, the order needs to be reversed to maintain correctness. This allows parsing an interpretation from trees like the following: REF ASSUME_LEV0 40.cfr LEV2’ BACKREFKEY SUBPART UL’ Subpart of UL O LEV1r CONN’ LEV1a’ LEV1p CONN LEV1a PART INT CONL2 part Figure 4. Example Parse Tree LEV1r’ 264 e or LEV1s INT 265 The original reference for the parse tree in Figure 4 was, “Subpart O of part 264 or 265”. The semantic interpretation parser transforms this into two complete references: 40.cfr.264.O, and 40.cfr.265. In cases of ambiguous meaning, the parser maximizes the scope of ambiguous references. For example, the parse in Figure 4 could also 40.cfr.264.O and 40.cfr.265.O, but this might be too narrow a reference if 40.cfr.264.O and 40.cfr.265 was actually intended. Figure 4 is an example of a complex parse tree that can be interpreted. A brief explanation of this parse tree follows. In this example, the semantic parser will first expand the starting REF category to be “LEV0 LEV1 LEV2”. LEV0 will match the 12 ASSUME_LEV0 leaf, and the current reference string will be updated to be “40.cfr”. Next, the parser encounters LEV2’, which does not match LEV0 or LEV1. The parser then searches for a possible “back-reference” (a level of the reference that is out of order, referring back to a lower level), which it finds as LEV1r’. The parser processes this part of the tree, concatenating the INT under LEV1p to the reference string. It also notes the reference string is complete upon encountering the CONN’ (a REFBREAK), so a new reference string is started with “265” and a notation is made that the rightmost leaf of the tree was found. Upon returning from the back-reference function call, it is noted that multiple references were encountered, so a reconciliation procedure is run to swap “40.cfr.264” with “40.cfr.265” in the complete reference list and to set “40.cfr.264” as the current reference string. Now the parser can match the LEV2’ category and update the current reference list to be “40.cfr.264.O”. Next the parser encounters the BACKREFKEY category, which the lexicon identifies as type SKIPNEXT, so the parser can skip the next child node. Skipping the next child node brings the parser to the end of the tree. Since the parser noted earlier that it had processed the right-most leaf, it knows that this was a successful semantic parsing attempt, and it adds the “40.cfr.264.O” to its reference list. The subsequent attempt to parse the tree using “LEV0 LEV3 LEV4…” will fail to reach the rightmost leaf, so no more parses will be recorded. Thus, the final reference list is 40.cfr.264.O and 40.cfr.265. 5.2.3 General Applicability Of The Semantic Parsing Algorithm The parsing system that was developed, along with the semantic interpreter for the parse trees, should be simple to reconfigure to parse and interpret a variety of different referencing systems or desired text patterns. Using a grammar and lexicon to specify how to treat categories from a parsed reference provides a great deal of flexibility for the system. New grammar and lexicon files can be introduced to change the system so that it parses for new types of references. The main limitation of the system is that rules cannot be left-recursive. 6 6.1 Conclusions Possible Further Extensions Not Pursued For purposes of this project I only worked with an n-gram model for predictions. Another option that could perform the same tasks as my n-gram model is a probabilistic context model. When I started this project I chose to use an n-gram model for two reasons. First, it seemed intuitively clear that there are some common words and phrases that precede references in the text, so I thought it would be interesting to see how valuable these words and phrases are for reference prediction. Second, I had already written a context model for programming project 2, so I thought I would be interesting to try working with n-grams. 13 Although the n-gram model worked well for predicting references, I believe that the ngram model faces limitations. As noted in section 4, there are always new words that could precede a reference, so attaining high reference recall with an n-gram model is problematic. If a context model were built to predict references by looking at the top three or four input tokens, this model might be quite successful. A key requirement for the context model to operate effectively would be for it to group tokens in a manner similar to the reference parser. For example, “262.12” and “261.43” should both be counted as belonging to the “numbers” group. The same idea would apply to roman numerals, uppercase letters in parenthesis, etc. Using these groupings the context model could learn the common words and components of a reference, rather than rely on the words preceding a reference. 6.2 Summary This project answered three questions surrounding the issue of regulation reference extraction. It was shown that an effective parser can be built to recognize and transform environmental regulation references into a standard format. It was also shown that an ngram model can be used to help the parser “skim” through a document quickly without missing many references, although there was a significant time/recall tradeoff as explored in Figure 2. Finally, it was found, qualitatively at least, that an n-gram referenceprediction model is a useful tool for grammar development when attempting to build a parser for sections of text. 7 References Manning, C., Schütze, H. 1999. Foundations of Statistical Natural Language Processing. MIT Press. Jurafsky, D., Martin, J. 2000. Speech and Language Processing. Prentice Hall. Gazdar, G., Mellish, C., 1989. Natural Language Processing in PROLOG: An Introduction to Computational Linguistics, Addison Wesley Longman, Inc. 14 Appendix A – Reference Parser Grammar REF --> LEV0' REF --> ASSUME_LEV0 LEV1r' REF --> LEV2' BackRefKey LEV0 REF REF REF REF --> --> --> --> ASSUME_LEV0 ASSUME_LEV0 ASSUME_LEV0 ASSUME_LEV0 LEV2' BackRefKey LEV1r' SEC LEV3' SecSymb LEV3' SecSymb SecSymb LEV3' REF --> ASSUME_LEV0 PARA LEV4' BackRefKey LEV3 #REF --> ASSUME_LEV0 ASSUME_LEV1 PARA LEV4' BackRefKey LEV3 REF --> ASSUME_LEV0 ASSUME_LEV1 LEV2' REF --> ASSUME_LEV0 LEV2' BackRefKey LEV1a LEV0' --> LEV0 LEV0' --> LEV0 CONN' LEV0' LEV0 --> INT CFR LEV1a' LEV0 --> INT CFR LEV3' LEV1a' --> LEV1a LEV1a' --> LEV1a CONN' LEV1a' LEV1a' --> LEV1a INTERP LEV1a' LEV1r' --> LEV1r LEV1r' --> LEV1r CONN' LEV1a' LEV1r' --> LEV1r INTERP LEV1a' LEV1a' --> LEV1s LEV1a' --> LEV1p LEV1r' --> LEV1p LEV1a --> LEV1s LEV1a --> LEV1p LEV1r --> LEV1p LEV1s --> INT LEV1s --> LEV1_SELFREF LEV1p --> PART INT CONL2 LEV1_SELFREF --> txt(this) txt(part) ASSUME_LEV1 CONL2 --> txt(,) LEV2' CONL2 --> e LEV2' --> LEV2_SELFREF LEV2' --> SUBPART UL' LEV2_SELFREF --> txt(this) txt(subpart) ASSUME_LEV2 LEV2_SELFREF --> txt(this) txt(Subpart) ASSUME_LEV2 15 UL' --> UL UL' --> UL CONN' UL' UL' --> UL INTERP UL' LEV3' --> LEV3 LEV3' --> LEV3 CONN' LEV3' LEV3' --> LEV3 INTERP LEV3 LEV3 --> LEV3_SELFREF LEV3 --> DEC CONL4 LEV3 --> PART DEC CONL4 LEV3_SELFREF --> txt(this) txt(section) ASSUME_LEV3 CONL4 --> e CONL4 --> LEV4' LEV4' --> LEV4 LEV4' --> LEV4 CONN' LEV4' LEV4' --> LEV4 INTERP LEV4' LEV4 --> BRAC_LL CONL5 CONL5 --> e CONL5 --> LEV5' LEV5' --> LEV5 LEV5' --> LEV5 CONN' LEV5' LEV5' --> LEV5 INTERP LEV5' LEV5 --> BRAC_INT CONL6 CONL6 --> e CONL6 --> LEV6' CONN' --> CONN CONN' --> SEP CONN LEV6' --> LEV6 LEV6' --> LEV6 CONN' LEV6' LEV6' --> LEV6 INTERP LEV6' LEV6 --> BRAC_ROM CONL7 CONL7 --> e CONL7 --> LEV7' LEV7' --> LEV7 LEV7 --> BRAC_UL CONL8 LEV6' --> LEV6 CONN' LEV6' LEV6' --> LEV6 INTERP LEV6' CONL8 --> e 16 Appendix B – Reference Parser Lexicon CONN --> and CONN --> or CONN --> , SEP --> , SEP --> ; INTERP --> through INTERP --> between INTERP --> to PART PART PART PART --> --> --> --> part parts Part Parts SUBPART SUBPART SUBPART SUBPART --> --> --> --> SEC SEC SEC SEC section sections Section Sections PARA PARA PARA PARA --> --> --> --> --> --> --> --> subpart subparts Subpart Subparts paragraph paragraphs Paragraph Paragraphs BackRefKey --> of BackRefKey --> in CFR --> CFR CFR --> cfr 17 Appendix C – Semantic Parser Grammar REF --> LEV0 LEV1 LEV2 REF --> LEV0 LEV3 LEV4 LEV5 LEV6 LEV7 Appendix D – Semantic Parser Lexicon PTERM PTERM PTERM PTERM PTERM PTERM PTERM PTERM NPTERM NPTERM NPTERM NPTERM NPTERM NPTERM NPTERM --> --> --> --> --> --> --> --> --> --> --> --> --> --> --> INT CFR DEC UL BRAC_INT BRAC_LL BRAC_UL BRAC_ROM PARA PART SUBPART SEC SecSymb txt e SKIPNEXT --> BackRefKey REFBREAK --> CONN REFBREAK --> SEP REFBREAK --> CONN' INTERPOLATE --> INTERP 18 Appendix E – Simple Example Run tree2:~> java RefFinder -f parser.gram parser.lex sample.xml Saving found references in found.refs no test file provided using default n-gram lambdas. Initializing new parser reading grammar file... reading lexicon file... Initializing new parse tree parser. reading grammar file... reading lexicon file... training... 40.cfr parts 262 E through 265 , 268 , and parts 270 E , 271 , and 124 ----------- Retrieved Refs --------ref.40.cfr.262 ref.40.cfr.263 ref.40.cfr.264 ref.40.cfr.265 ref.40.cfr.268 ref.40.cfr.270 ref.40.cfr.271 ref.40.cfr.124 ***** found parse: 40.cfr parts 262 E through 265 , 268 , and parts 270 E , 271 , and 124 parts 262 through 265 , 268 , and parts 270 , 271 , and 124 of this chapter and which are ject to the notification requirements of section 3010 of RCRA . In this part : (1) Subpart A defines the terms regulation as hazardous wastes under 40.cfr 261 Subpart A ----------- Retrieved Refs --------ref.40.cfr.261.A ***** found parse: 40.cfr 261 Subpart A Subpart A defines the terms ``solid waste'' and ``hazardous waste'' , identifies those wastes which are special management requirements for hazardous waste produced by conditionally exempt small quantity tors and hazardous waste which is recycled . (2) sets forth the criteria In this part : (1) 40.cfr § § 261.2 E and 261.6 E ----------- Retrieved Refs --------ref.40.cfr.261.2 ref.40.cfr.261.6 ***** found parse: 40.cfr § § 261.2 E and 261.6 E § § 261.2 and 261.6 : (1) A ``spent material'' is any material that has been used and as a result of contamination can no longer serve the purpose for which it was produced without processing ; (2) ``Sludge'' has the same meaning (c) For the purposes of 40.cfr § 261.4 (a) (13) E ----------- Retrieved Refs --------ref.40.cfr.261.4.a.13 ***** found parse: 40.cfr § 261.4 (a) (13) E § 261.4 (a) (13) ) . (11) ``Home scrap metal'' is scrap metal as generated by steel mills , foundries , and refineries such as turnings , cuttings , punchings , and borings . (12) ``Prompt scrap metal'' is scrap circuit boards being recycled ( 40.cfr paragraph (b) E of this section 261.2 ----------- Retrieved Refs --------- 19 ref.40.cfr.261.2.b ***** found parse: 40.cfr paragraph (b) E of this section 261.2 paragraph (b) of this section ; or (ii) Recycled , as explained in (iii) Considered inherently waste-like , section ; or (iv) A military munition identified as a (b) Materials are solid waste if they are abandoned by being : Abandoned , as explained in 40.cfr paragraphs (c) (1) E through (4) E of this section 261.2 ----------- Retrieved Refs --------ref.40.cfr.261.2.c.1 ref.40.cfr.261.2.c.2 ref.40.cfr.261.2.c.3 ref.40.cfr.261.2.c.4 ***** found parse: 40.cfr paragraphs (c) (1) E through (4) E of this section 261.2 paragraphs (c) (1) through (4) of this section . (1) Used in a manner constituting disposal . (i) Materials noted with a `` Column 1 of Table I are solid wastes when they are : (A) Applied to or placed on the land recycled by being : in 40 CFR 261.4 (a) (17) E ----------- Retrieved Refs --------ref.40.cfr.261.4.a.17 ***** found parse: 40 CFR 261.4 (a) (17) E 40 CFR 261.4 (a) (17) ) . (4) Accumulated speculatively . Materials noted with a `` Table 1 are solid wastes when accumulated speculatively . (i) Used or reused as ingredients in an industrial process to make a product , ( except as provided under 40.cfr paragraphs (e) (1) (i) E through (iii) E of this section 261.2 ----------- Retrieved Refs --------ref.40.cfr.261.2.e.1.i ref.40.cfr.261.2.e.1.ii ref.40.cfr.261.2.e.1.iii ***** found parse: 40.cfr paragraphs (e) (1) (i) E through (iii) E of this section 261.2 paragraphs (e) (1) (i) through (iii) of this section ) : (i) Materials used in a manner constituting disposal , or used to produce products that are applied to the land ; or (ii) Materials burned for energy recovery , used to produce a fuel , or original process ( described in 40.cfr paragraphs (d) (1) E and (d) (2) E of this section 261.2 ----------- Retrieved Refs --------ref.40.cfr.261.2.d.1 ref.40.cfr.261.2.d.2 ***** found parse: 40.cfr paragraphs (d) (1) E and (d) (2) E of this section 261.2 paragraphs (d) (1) and (d) (2) of this section . (f) Documentation of claims that materials are not solid wastes or are conditionally exempt from regulation . Respondents in actions to enforce regulations implementing who raise a claim that a certain or (iv) Materials listed in Found 9 references in corpus tree2:~> 20