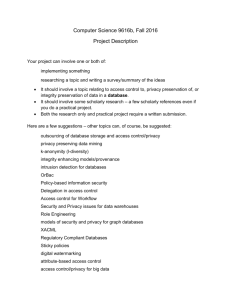

Trustworthy Computing

Jeannette M. Wing
School of Computer Science
Carnegie Mellon University
31 August 2004

A computing system that is trustworthy is reliable, secure, privacy-preserving, and usable. Although decades of research have focused on making systems more reliable and more secure, we still have systems that fail and that are vulnerable to attack. Moreover, as our systems keep growing in size, functionality, and complexity, as the environments in which they operate become more unpredictable, and as the number of attackers and the sophistication of attacks continue to increase, we can count on having unreliable and insecure systems for the foreseeable future. Recall this axiom from the security community, which I rename the Trustworthy Axiom:

Trustworthy Axiom: Good guys and bad guys are in a never-ending race.

It’s not a matter of achieving trustworthiness and declaring victory; it’s a matter of trying to stay ahead of the bad guys.

Privacy and usability of a computing system are far less understood than reliability and security, from a technical, let alone a formal, viewpoint. Yet both are just as important for a system to be trustworthy if, in the end, we want humans to use our systems and to trust the computing systems they use. Let’s look at each of these properties of trustworthy computing in turn.

1. Reliability

By reliable, I mean the system computes the right thing. In theory, reliable means the system meets its specification: it is “correct.” In practice, because we often have no specification at all, or the specification is incomplete, inconsistent, imprecise, or ambiguous, reliable means the system meets the user’s expectations: it is predictable, and when it fails, no harm is done.

We have made tremendous technical progress in making our systems more reliable. For hardware systems, we can use redundant components to ensure a high degree of predictable, failsafe behavior.
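
One common way to realize the hardware redundancy mentioned above is triple modular redundancy (TMR): three replicas compute the same result and a majority voter masks any single faulty replica. TMR is an assumption here, not necessarily the scheme the author has in mind, and the names `majority_vote`, `tmr`, `healthy_square`, and `faulty_square` are hypothetical, chosen for illustration. The Python below is a minimal sketch of the voting idea.

```python
from collections import Counter
from typing import Callable, Hashable, Sequence, TypeVar

T = TypeVar("T", bound=Hashable)


def majority_vote(outputs: Sequence[T]) -> T:
    """Return the value reported by a strict majority of replicas.

    Raises RuntimeError when no value has a strict majority, i.e. when
    too many replicas disagree for the fault to be masked.
    """
    if not outputs:
        raise ValueError("need at least one replica output")
    value, count = Counter(outputs).most_common(1)[0]
    if count * 2 <= len(outputs):
        raise RuntimeError(f"no majority among {list(outputs)!r}")
    return value


def tmr(replicas: Sequence[Callable[[int], T]], x: int) -> T:
    """Hypothetical TMR wrapper: run three replicas on x and vote."""
    if len(replicas) != 3:
        raise ValueError("triple modular redundancy expects exactly 3 replicas")
    return majority_vote([replica(x) for replica in replicas])


def healthy_square(x: int) -> int:
    return x * x


def faulty_square(x: int) -> int:
    return x * x + 1  # simulates a hardware fault that corrupts the result


if __name__ == "__main__":
    # The single faulty replica is outvoted by the two healthy ones.
    print(tmr([healthy_square, faulty_square, healthy_square], 7))  # prints 49
```

When the replicas all disagree, `majority_vote` raises instead of guessing, so the system stops rather than silently returning a wrong value. Note that TMR masks only one fault: two replicas that fail in exactly the same way would win the vote.
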
For software systems, we can use advanced programming languages and tools, along with formal specification and verification techniques, to improve the quality of our code. What is missing? Here are some starters:

Science of software design. While we have formal languages and techniques for specifying, developing, and analyzing code, we do not yet have a similar science for software design. To build trustworthy software, we need to identify software design principles (e.g., Separation of Concerns) with security in mind and to revisit security design principles (e.g., Principle of Least Privilege) with software in mind.

Compositional reasoning techniques. Today’s systems are made up of many software and hardware components. While today’s attacks exploit bugs in individual software components, tomorrow’s attacks will likely exploit mismatches between independently designed components. I call these “design-level vulnerabilities” because the problem lies above the level of code: even if the individual components are implemented correctly, emergent abusive behavior can result when they are put together. We need ways to compose systems that preserve the properties of the individual components or that, when put together, achieve a desired global property. Similarly, we need ways to detect and reason about emergent abusive behavior.

Software metrics. While we have performance models and benchmark suites for computer hardware and networks, we have none for software. We need software metrics that allow us to quantify and predict software reliability. When is a given system “good enough” or “safe enough”? Notably, the Computing Research Association Grand Challenges in Trustworthy Computing Workshop (http://www.cra.org/grand.challenges), held in November 2003, formulated the following grand challenge:

Within 10 years, develop quantitative information-systems risk management that is at least as good as quantitative financial risk management.

2. Security

By secure, I mean the system is not vulnerable to attack.
In theory, security can be viewed as another kind of correctness property. A system, however, is vulnerable at least under the conditions that violate its specification and, more subtly, under conditions that the specification does not explicitly cover. In fact, a specification’s precondition tells an attacker exactly where a system is vulnerable.

One way to view the difference between reliability and security is given by Whittaker and Thompson in How to Break Software Security (Pearson, 2004), as a Venn diagram of two overlapping circles. Combined, the two circles represent system behavior. The left circle represents desired behavior; the right, actual behavior. The intersection of the two circles represents behavior that is correct (i.e., reliable) and secure. The shaded part of the left circle, outside the intersection, represents behavior that is not implemented or is implemented incorrectly, i.e., where traditional software bugs are found. The shaded part of the right circle represents behavior that is implemented but not intended, i.e., where security vulnerabilities lie.

As with reliability, for security we think in terms of modeling, prevention, detection, and recovery. We have some (dated) security models, such as Multi-Level Security and Bell-LaPadula, to state desired security properties, and we use threat modeling to think about how attackers can enter our system. We have encryption protocols that allow us to protect our data and our communications; we have mechanisms such as network firewalls to protect our hosts from some attacks. We have intrusion detection systems to detect when our systems are under attack (though still with high false positive rates); we have code analysis tools to detect buffer overruns. Our recovery mechanisms today rely on aborting the system (e.g., in a denial-of-service attack) or installing software patches. We need more ways to prevent attacks and to protect our systems from them. It’s more cost-effective to prevent an attack than to detect and recover from it.

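The Whittaker and Thompson Venn diagram described above can be read as simple set algebra over behaviors. The Python below is a minimal sketch under that reading: desired and actual behavior are modeled as finite sets of behavior labels, and the labels, the `classify` function, and the `BehaviorRegions` type are all hypothetical, invented for illustration. Real system behavior is of course not a small finite set; the point is only which set operation corresponds to which region.

```python
from typing import FrozenSet, NamedTuple, Set


class BehaviorRegions(NamedTuple):
    """The three regions of the desired-versus-actual Venn diagram."""
    correct_and_secure: FrozenSet[str]  # desired AND actual (intersection)
    bugs: FrozenSet[str]                # desired but missing or wrong
    vulnerabilities: FrozenSet[str]     # implemented but not intended


def classify(desired: Set[str], actual: Set[str]) -> BehaviorRegions:
    """Split behaviors into the diagram's regions using set operations."""
    return BehaviorRegions(
        correct_and_secure=frozenset(desired & actual),
        bugs=frozenset(desired - actual),
        vulnerabilities=frozenset(actual - desired),
    )


if __name__ == "__main__":
    # Hypothetical behavior labels for a login service.
    desired = {"accept valid password", "reject invalid password", "lock after 5 failures"}
    actual = {"accept valid password", "reject invalid password", "accept empty password"}
    regions = classify(desired, actual)
    print(sorted(regions.bugs))             # ['lock after 5 failures']
    print(sorted(regions.vulnerabilities))  # ['accept empty password']
```

In this model, a desired behavior that is implemented incorrectly shows up twice: the intended behavior lands in `bugs`, and whatever the code does instead lands in `vulnerabilities`, if it was not itself intended.
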
There are two directions in which I would like to see the security community steer its attention: (1) design-level, not just code-level, vulnerabilities and (2) software, not just computers and networks.

3. Privacy

By privacy, I mean the system must preserve users’ identity and protect their data. Much past research in privacy addresses non-technical questions, with contributions from policymakers and social scientists. I believe that privacy is the next big area related to security for technologists to tackle. It is time for the technical community to address some fundamental questions, such as: What does privacy mean? How do you state a privacy policy? How can you prove your software satisfies it? How do you reason about privacy? How do you resolve conflicts among different privacy policies? Are there things that are impossible to achieve with respect to some definition of privacy? How do you implement practical mechanisms to enforce different privacy policies, including as they change over time? How do you design and architect a system with privacy in mind? How do you measure privacy?

My call to arms is to the scientists and engineers of complex software systems to start paying attention to privacy, not just reliability and security.

To the theoretical community: to design provably correct protocols that preserve privacy for some formal meaning of privacy, to devise models and logics for reasoning about privacy, to understand what is or is not impossible to achieve given a particular formal model of privacy, to understand more fundamentally the exact relationship between privacy and security, and to understand the role of anonymity in privacy (when is it inherently needed, and what is the tradeoff between anonymity and forensics?).

To the software engineering community: to think about software architectures and design principles for privacy.

To the systems community: to think about privacy when designing the next network protocol, distributed database, or operating system.

To the artificial intelligence community: to think about privacy when using machine learning for data mining and data fusion across disjoint databases. How do we prevent unauthorized reidentification of people when doing traffic and data analysis?

To researchers in biometrics, embedded systems, robotics, sensor nets, ubiquitous computing, and vision: to address privacy concerns along with the design of their next-generation systems.

4. Usability

By usability, I mean the system has to be usable by human beings. To ensure reliability, security, and privacy, we often need to trade off user convenience against user control. There are also tradeoffs to make when we consider these four properties in different combinations.

Consider usability and security. Security is only as strong as the system’s weakest link. More often than not, that weakest link involves the system’s interaction with a human being. Whether the problem is choosing good passwords, hard-to-use user interfaces, complicated system installation and patch management procedures, or social engineering attacks, the human link will always be present.

Similarly, consider usability and privacy. We want to allow users to control access to, disclosure of, and further use of their identity and their data; yet we do not want to bombard them with pop-up dialog boxes asking permission for each user-system transaction. Similar remarks apply to the tradeoffs between usability and reliability (and, for that matter, between security and privacy, security and reliability, etc.).

Fortunately, the human-computer interaction community is beginning to address issues of usable security and usable privacy. We need to design user interfaces that make security and privacy both less obtrusive to and less intrusive on the user. As computing devices become ubiquitous, we need to hide complicated security and privacy mechanisms from the user but still provide user control where appropriate.
How much of security and privacy should we, and can we, make transparent to the user?

We should also turn to behavioral scientists to help computer scientists. Technologists need to design systems to reduce their susceptibility to social engineering attacks. Also, as the number and nature of attackers change in the future, we need to understand the psychology of the attacker, from script kiddies to well-financed, politically motivated adversaries. As biometrics become commonplace, we need to understand whether and how they help or hinder security (perhaps by introducing new social engineering attacks). Similarly, do they help or hinder privacy?

This usability problem occurs at all levels of the system: at the top, users who are not computer savvy but interact with computers for work or for fun; in the middle, users who are computer savvy but do not, and should not, have the time or interest to twiddle with settings; at the bottom, system administrators who have the unappreciated and scary task of installing the latest security patch without being able to predict the consequences of doing so. We need to make it possible for normal human beings to use our computing systems easily, but with deserved trust and confidence.

5. Trustworthy Computing at Carnegie Mellon’s School of Computer Science

See attached spreadsheet of SCS faculty, centers, institutes, and research groups that cover the four areas of trustworthy computing.

Acknowledgments

The term Trustworthy Computing is used by Microsoft Corporation. Whereas I define trustworthy in terms of reliability, security, privacy, and usability, Microsoft’s Four Pillars of Trustworthy Computing are reliability, security, privacy, and business integrity. I gained much appreciation for the scope and difficulty of this initiative while on a one-year sabbatical at Microsoft Research.