

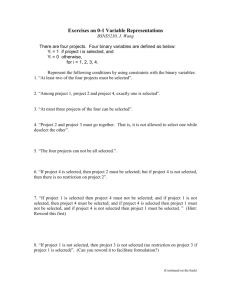

12. Research project content:

(2) Background and purpose of the research project. Describe in detail the background, purpose, and importance of the project, the state of related research in Taiwan and abroad, and a review of the important references. If the project is a sub-project of an integrated research project, state for each of these points how it relates to the other sub-projects.

(3) Research methods, steps, and schedule. Describe by year: 1. The research methods adopted and the reasons for choosing them. 2. Anticipated difficulties and ways to resolve them. 3. The use of major instruments. 4. For an integrated research project, explain for each of these points how it relates to the other sub-projects. 5. If research abroad or in mainland China is required, describe in detail its necessity and the expected results.

(4) Expected work items and results. Describe by year: 1. The work items expected to be completed. 2. The expected contributions to academic research, national development, and other applications. 3. The training that participating personnel can expect to receive. 4. If the project is a sub-project of an integrated research project, explain for each of these points how it relates to the other sub-projects.

A. Background

In the past, once binary code had been generated by a static compiler, linked, and loaded into memory, it was left largely untouched during program execution and for the rest of its software life cycle, unless the code was recompiled. On more recent systems, however, code manipulation extends beyond static compilation time. The most notable examples are virtual machines such as the Java virtual machine (JVM), the C# runtime, and the runtimes of some recent scripting languages. An intermediate code format, such as bytecode in the JVM, is generated first and then interpreted at runtime. The intermediate code can also be compiled dynamically after hot code traces are detected during execution, and then further optimized in its binary form for continuous improvement and transformation while the program runs.

Binary code manipulation is not limited to virtual machines. Virtualization at all system levels has become increasingly important with the advent of multi-core processors and the dawn of utility computing, also known as cloud computing. When many compute resources are available on a platform, using them efficiently often requires code compiled for one instruction set architecture (ISA) to be moved across the Internet and run on platforms with different ISAs. Binary translation and optimization therefore become very important in supporting such system virtualization. Other important applications also require manipulation of binary code.
The most notable of these is binary instrumentation, in which additional binary code segments are inserted at specific points of the original binary code. The added code segments can monitor program execution and collect runtime information that is fed back to profile-based static compilers to further improve their compiled code. They can also be used to detect potential breaches of security protocols, to trace program execution for testing and debugging, or to reverse engineer the binary code and retrieve its algorithms or other vital program information.

The main objective of this research is to support high-performance binary code manipulation, in particular system virtualization, in which binary translation and binary optimization are crucial to performance. Existing binary manipulation systems such as DynamoRIO, QEMU, and Simics all assume that their input binary code comes straight from existing optimizing compilers. Such binary code is usually assembled after linking with the runtime libraries and other files needed for its execution, so it contains the entire execution code image. This gives a binary manipulation system a global view of the code and lets it perform global analyses that were not possible while each piece of code was compiled individually. However, such global analyses are notoriously time consuming. Furthermore, much vital program information, such as control and data flow graphs, data types, the liveness of variables, and alias information, is often unavailable and very difficult to recover from the binary code. Such information could be extremely valuable to a runtime binary manipulation system in carrying out more advanced code translation and optimization.

Binary translation and optimization have been used very successfully in many applications.
In functional simulators such as QEMU and Simics, an entire guest operating system built for one ISA can be brought up on a host platform with a completely different ISA and operating system. Guest applications running on the guest operating system are unaware of the host platform and the host operating system underneath. Such a virtualization system has many important applications. One is to allow system and application software to be developed in parallel with a new hardware system that is still under development: the new hardware can be virtualized and run on a host system with a completely different ISA and OS. This can save a significant amount of software development time and shorten the time to market substantially. Other virtualization systems being developed on multi-core platforms allow different OSs to run simultaneously on the same platform. They provide excellent isolation among these OSs for high security and reliability; a crashed OS does not affect the other OSs running concurrently on the same platform.

B. Previous related work

We have many years of experience with dynamic binary optimization systems. ADORE [ ], developed for single-core platforms, was started in the early 2000s on Intel's Itanium processor and later ported to microprocessors developed by Sun Microsystems. ADORE (see Figure 1) has several major components. It relies on the hardware performance monitoring system to help identify program phases, program control flow structures, and performance bottlenecks. Because such a runtime binary manipulation system takes compute resources away from the application that is currently running, its overhead counts as part of the execution time of the application it tries to optimize.
Hence, the resulting program improvement has to be substantial enough to offset this overhead, or the overhead has to be small enough not to interfere with the original program execution. Even when the binary manipulation is done on a different core, and thus does not interfere with program execution, it still takes core resources away from other useful work. To minimize runtime overhead, ADORE uses the hardware performance monitoring system to sample machine states at a fixed interval. The interval size determines the overhead, the program phases that can be detected, and the amount of runtime information that can be collected. When a stable program phase is detected, hot traces of the program execution are generated and optimizations are applied to them. The optimized hot traces are then placed in a code cache, and the original program is patched to transfer control to the optimized code in the code cache. If the program spends most of its execution time in the optimized code in the code cache, good performance can be achieved.

ADORE, COBRA and current NSF grants

C. Proposed approach

To make the valuable program information obtained during sophisticated static compiler analyses available for more effective and efficient runtime binary manipulation, we plan to have the static compiler annotate the generated binary code with such information. The types and extent of program information useful for runtime binary manipulation will be one of the main subjects of this research. For example, from our experience with ADORE, we found it extremely difficult to find free registers that the runtime binary optimizer can safely use. Because the runtime binary optimizer shares the register file with the code it tries to optimize, it needs to spill some registers to memory for its own use.
However, no application programming interface (API) convention is defined for such interaction between the static compiler and the runtime optimizer. Hence, it is not even easy to find registers for executing the spill code itself, because spilling a register requires at least one register to hold the address of the memory location being spilled to.

Another example is that the boundaries of a loop are quite difficult to determine, especially for loops with complex control flow. This makes it extremely difficult to insert memory prefetch instructions for identified long-latency delinquent loads, because a prefetch instruction must often be placed where it executes several loop iterations ahead of the intended delinquent load in order to offset the long miss penalty. The difficulty arises because loops are implemented with jump instructions, which can be hard to distinguish from the other jump instructions in the same loop or in enclosing loops. Hence, annotating loop boundaries and/or the control flow graph of each procedure could save a lot of analysis time and open up many more optimization opportunities for the runtime binary optimizer.

Several potentially useful types of program annotations were identified in [Das]. They include: (1) control flow annotations; (2) register usage; (3) data flow annotations; (4) annotations useful for exception handlers; (5) annotations describing the load-prefetch relationship. Our research on annotations will be driven first by the needs of binary translation and binary optimization, in particular for multi-core systems. Because most studies in this area have so far focused on single-core systems, adding annotations for multi-core applications will bring additional complexity and challenges.
In particular, information that can be used at runtime to balance the workload and to mitigate synchronization overhead will be of particular interest to this research. (Expand on this)

C.1. Annotate binary code by expanding ELF and adding required information

The annotations will primarily be incorporated in the binary code. We plan to use the ELF binary format in our prototype, as ELF is the standard binary format on most Linux systems. The challenges lie in several important areas:

(1) Size of annotations. Annotating all of the information mentioned in [ ] could take up a lot of memory and expand the binary to an unmanageable size. Much of the annotated information may not be useful for a particular program. A carefully designed annotation format and encoding scheme could drastically reduce the annotation size and keep the ELF file as compact as possible.

(2) API for annotations. Annotating only the types of runtime information that are useful to a particular program on a particular platform could further reduce the annotation size. In many cases, the programmer has the needed inside knowledge, for example, whether the target platform is single-core or multi-core, or whether the program is integer-intensive or floating-point-intensive. Such knowledge affects which runtime optimizations could be useful to the code. Hence, a carefully designed API that lets programmers direct the types of annotations to be generated for particular code regions, e.g. CFG, DFG, register liveness information, or likelihood of exceptions, could be particularly useful both for generating annotations and for selecting the runtime optimizations to apply.

(3) Dynamic update for annotations. The types of useful annotations might change after each phase of binary manipulation.
For example, during binary translation, we might want to add information that could be useful to the later runtime optimization. Binary translation might also change the original CFG or DFG, and runtime information might show that some of the original annotations are useless, so that they can be trimmed to reduce the overall code size.

(4) Code security. Because runtime binary manipulation could alter the original binary code, care must be taken to avoid accidentally overwriting code regions during annotation updates or during binary translation and optimization. For example, expressing all memory references as offsets from a base address instead of as absolute memory addresses could prevent accidental access to forbidden code regions and improve security.

C.2. API for specifying the program information to be included in the binary code

To allow flexibility and an "annotate as you go" style, we need to provide a good API for the user or compiler to specify the types of annotation needed. We also need a good API that lets the binary manipulator (i.e., the translator and the optimizer) access annotations without knowing their format and arrangement. Because this API is standardized, the annotations can be upgraded and changed in the future without changing the users of the annotations.

C.3. Annotations useful in sub-project 2 for binary translation

The main purpose of binary translation is to translate code in the guest ISA into binary code in the host ISA. In sub-project 2, we propose to build a binary translator based on a QEMU-like functional simulator. In such a translator, each guest binary instruction is first interpreted and then translated into host binary instructions. In QEMU, each guest instruction is translated into a sequence of micro-operations defined by QEMU, and these micro-operations are then converted to the host ISA. The advantage of this approach is that the machine state can be accurately preserved and converted from one ISA to another.
Hence, even privileged instructions can be interpreted and translated this way, which allows an OS to be booted and run accurately on QEMU. However, the overhead of this approach is quite high, as each guest instruction requires several micro-operations, and each micro-operation may in turn require several host machine instructions.

As described in sub-project 2, we plan to interpret and translate guest instructions one basic block at a time. The basic blocks will be translated into an intermediate representation (IR), such as that used in LLVM, and optimized at the IR level using the annotated information provided in the guest binary code. Such optimization will usually be largely machine independent and fast. The LLVM code generator can then be used to generate machine-dependent host binary code. When hot traces are identified, further machine-dependent optimizations will be applied to them, and the traces will be kept in a hot-trace code cache for fast execution and further optimization. Information collected by the runtime hardware performance monitoring system can be used in these further optimizations.

How the annotated information in the guest binary code is used has many implications, and it in turn determines what kinds of annotated information will be useful. Past experience shows that, instead of interpreting each guest binary instruction first and starting translation only after hot traces are identified, it is more efficient to translate each guest binary instruction as it is first executed. Code optimization can then be applied after hot traces are identified, and the optimized hot traces are placed in the code cache for future execution. If the coverage of the traces in the code cache is high, most of the execution time will be spent in the optimized hot traces, and the overall performance can be significantly improved.
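The translate-on-first-execution scheme with a hot-trace code cache described above can be sketched as follows. This is only an illustration in Python, not the design of sub-project 2: the `CodeCache` class, the `HOT_THRESHOLD` value, and the `translate` and `optimize` callbacks are hypothetical names, and a single basic block stands in for a trace.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

HOT_THRESHOLD = 3  # hypothetical: executions before a block is treated as hot


@dataclass
class CodeCache:
    translate: Callable[[int], str]   # guest block address -> translated host code
    optimize: Callable[[str], str]    # translated host code -> optimized host code
    blocks: Dict[int, str] = field(default_factory=dict)  # translated, unoptimized
    hot: Dict[int, str] = field(default_factory=dict)     # optimized hot code
    counts: Dict[int, int] = field(default_factory=dict)  # executions per block
    fetches: int = 0
    hot_hits: int = 0

    def fetch(self, guest_pc: int) -> str:
        """Return the host code to execute for the block starting at guest_pc."""
        self.fetches += 1
        if guest_pc in self.hot:
            # Already optimized: execute from the hot-trace code cache.
            self.hot_hits += 1
            return self.hot[guest_pc]
        if guest_pc not in self.blocks:
            # First execution: translate immediately instead of interpreting.
            self.blocks[guest_pc] = self.translate(guest_pc)
        self.counts[guest_pc] = self.counts.get(guest_pc, 0) + 1
        if self.counts[guest_pc] >= HOT_THRESHOLD:
            # The block became hot: optimize it for all later executions.
            self.hot[guest_pc] = self.optimize(self.blocks[guest_pc])
        return self.blocks[guest_pc]

    def coverage(self) -> float:
        """Fraction of fetches served from optimized code."""
        return self.hot_hits / self.fetches if self.fetches else 0.0
```

With a threshold of 3, ten executions of the same block are served three times from the unoptimized translation and seven times from the optimized copy, so `coverage()` is 0.7. In a real system the threshold trades optimization overhead against coverage, which is the balance discussed above.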
In the approach proposed in sub-project 2, we will first translate the guest binary into a machine-independent IR one basic block at a time, carry out machine-independent optimization at the IR level, and then generate code for the target host machine, which has a different ISA. The annotations in the guest binary code can then be used directly in machine-independent optimization within each translated basic block before host machine code is generated. The annotated information can also help in forming hot traces and in global optimization across the basic blocks within an identified hot trace. Some of the annotations identified in [Das], such as branch target information for indirect branches, could be useful in this process as well.

C.4. Annotations useful in sub-project 3 for further binary optimization

Many types of annotation that are useful in binary optimization for single-core platforms have been identified in [Das]. We plan to identify more annotated information that is useful on multi-core platforms. There are two possible scenarios. One is similar to a traditional binary optimizer, such as ADORE, in which the guest binary code uses the same ISA as the host platform. In the other, the binary optimizer optimizes the binary code produced by a binary translator; in that case, the annotated information may also have to be converted to match the translated host binary code. In sub-project 3, our main focus will be on optimizing the translated host binary code.

As mentioned earlier, optimization can be performed at two levels. One is machine-independent optimization at the IR level. The other is at the machine-dependent host binary code level, after runtime information has been collected during program execution. Even some machine-dependent optimizations, such as register allocation, might be parameterized and done during the machine-independent optimization phase at the IR level.
For example, if we know the number of registers on the host platform and its ABI conventions, such as register assignment in a procedure call, annotations in the guest binary code such as alias and data dependence information could be used to optimize register allocation. Other useful annotations include:

(1) Control flow graph. It could be used to determine the loop structures for hot traces and so increase optimized code coverage. It has also been proposed that edge profiling information be used to annotate each branch instruction and indicate whether it is most likely to be taken or not taken; hot traces could then be formed during the binary translation phase. Indirect branch instructions, whose targets are not known until runtime, are the most challenging part of forming the CFG. However, many indirect branches come from high-level program structures such as the switch statement in a C program or the return from a procedure. If such information is annotated, their target instructions can be identified and a more accurate CFG can be constructed.

(2) Live-in and live-out variables. They could be annotated to support better local register allocation. If the optimization is performed after binary translation, the live-in and live-out information might need to be updated, as the translated code might introduce additional values that must be passed on to the following basic blocks. Here, the register acquisition problem may not be as serious as in ADORE, because register allocation is performed during the binary translation process. The free registers available after the translation phase could be annotated for later use by the binary optimizer.

(3) Data flow graph. Def-use and use-def information could be useful in many well-known compiler optimizations. Other useful information includes alias information and data dependence information.
Such information could be useful in register allocation and in well-known optimizations such as partial redundancy elimination (PRE). It has even been suggested that information obtained from value profiling could be annotated to help track data flow information. However, the effectiveness of such optimizations and the runtime overhead they require are open research issues.

(4) Exception handlers. Many optimizations alter the order of program execution and, hence, could alter the machine state observed when an exception is thrown. However, binary code usually carries no information on where exception handlers will be used. To produce accurate machine states when exceptions occur in the original guest binary code, many potential optimizations such as code scheduling need to be conservatively suppressed, which could have a very significant impact on program performance. By annotating the regions covered by exception handlers and avoiding aggressive optimizations only in those regions, such concerns could be eliminated.

(5) Prefetch instruction and its corresponding load instruction. Prefetching can be very effective in reducing cache misses and miss penalty on single-core platforms. However, prefetch instructions usually come with additional instructions that compute the prefetch addresses. Also, because of the proven effectiveness of prefetching, existing optimizing compilers often generate very aggressive, and often excessive, prefetch instructions. Such excessive prefetch instructions could consume a lot of bus and memory bandwidth if their corresponding load instructions are not delinquent loads as originally assumed. It has been shown that selectively eliminating such aggressive prefetch instructions actually improves the performance of many programs on single-core platforms [ ]. In a multi-core environment, multiple programs could be executing concurrently.
Overly aggressive prefetching by one program could adversely affect the other programs running on the same platform, because it takes away bus and memory bandwidth that they need. Identifying each load instruction together with its corresponding prefetch instruction and the supporting address calculation instructions makes it possible to eliminate those instructions if the load is no longer delinquent in a particular run.

(6) Workload and synchronization information for parallel applications. For parallel applications running on multi-core platforms, we will identify and study the types of annotation that could help improve program performance or help track program execution for testing and debugging. Well-known information that could help improve parallel programs includes a workload estimate for each thread and synchronization information that identifies critical sections or signal and wait instructions. Such information could help balance the workload at runtime and reduce synchronization wait time and overhead. Other useful information will also be of great interest to our study, such as the information identified in (5), which could improve bus and memory bandwidth usage as well as load latency on a multi-core platform, and the tracking of cache coherence traffic to remove potential false sharing.

C.5. Evaluation

Evaluating the effectiveness of adding annotations to the binary code will be another major effort in this project. The Open64 compiler will be our main platform for generating annotations. Open64 is a robust, production-level, high-quality open-source compiler. It has all of the major components of a production compiler and also supports profile-based optimization. It has good documentation and a large and active user community.
It is currently supported by major companies such as HP and AMD, and by major compiler groups at the University of Minnesota, the University of Delaware, the University of Houston, and several others. It supports almost all major platforms, such as Intel IA-64 (Itanium), IA-32, MIPS, and CUDA. It is a general-purpose compiler that supports C, C++, and Fortran. We plan to use it to generate all of the useful binary annotations and to study their effectiveness in improving program performance, the resulting code size expansion, and the API support needed.

D. Other related work

E. Methodology and our Work Plan (for each year)

E.1 The annotation framework

Figure 1 shows this sub-project's framework, which can be divided into two parts: the annotation producer and the annotation consumer. The producer generates annotations and writes them into the binary file; its main component is the compiler. The consumer reads the annotations from the binary file and tries to use them efficiently; its main components are the binary translator and the binary optimizer. The annotation data come from two sources: static compiler analysis and analysis of the program's profiles.

We will adopt Open64 as the compiler. It is an open-source optimizing compiler for the Itanium and x86-64 microprocessor architectures. The compiler derives from the SGI compilers for the MIPS R10000 processor and was released under the GNU GPL in 2000. Open64 supports Fortran 77/95 and C/C++, as well as the shared-memory programming model OpenMP. The major components of Open64 are the front ends for C/C++ (using GCC) and Fortran 77/90 (using the CraySoft front end and libraries), inter-procedural analysis (IPA), the loop nest optimizer (LNO), the global optimizer (WOPT), and the code generator (CG). It can conduct high-quality inter-procedural analysis, data-flow analysis, data dependence analysis, and array region analysis.
Figure 1: The annotation framework.

E.2 The Producer

Figure 2: The producer's components and data flowchart.

Figure 2 shows how the producer adds annotation information to the ELF executable file. There are two paths to an annotated ELF executable, one for each annotation source: static compiler analysis and profile analysis.

On the static compiler analysis path, the modified Open64 compiler compiles the program source code into assembly code and annotation data. At compilation time, however, virtual addresses have not yet been assigned, so only function labels can be identified. Therefore, when annotation data refer to a location inside a function, the function label serves as the base point and the position is recorded as an offset from that label. After the assembler has assembled the assembly code and the ELF executable file has been produced, the virtual addresses of functions and instructions are known. The annotation combiner then combines the annotation data with the corresponding virtual addresses and produces the annotated ELF executable file.

On the profile analysis path, the profile analyzer analyzes the profile data and produces useful annotation data. The annotation combiner merges these data with the annotation data from the static compiler analysis path and produces the annotated ELF executable file.

Annotation information can be stored as a new section in the ELF file (for example ".annotate"). This section can be loaded into memory after the text segment by setting the SHF_ALLOC flag in the section header of the .annotate section and covering the section with a program header table entry of type PT_LOAD. For the consumer (sub-project 2 or sub-project 3) to find the location of the .annotate section, the section-name string table should be loaded into memory too.
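The lookup a consumer would perform to locate the annotation section can be sketched as follows. This is a minimal Python sketch under stated assumptions: it handles only little-endian ELF64 images, it walks the section header table of a file image rather than the in-memory representation, and the function name `find_section` and the layout of the returned dictionary are hypothetical. The header layouts and the `SHF_ALLOC` value follow the ELF specification.

```python
import struct

SHF_ALLOC = 0x2  # section occupies memory during execution (ELF specification)

# ELF64 file header and section header layouts, little-endian.
EHDR = struct.Struct("<16sHHIQQQIHHHHHH")
SHDR = struct.Struct("<IIQQQQIIQQ")


def find_section(image: bytes, wanted: str):
    """Return details of the section named `wanted`, or None if it is absent."""
    ident = image[:16]
    if ident[:4] != b"\x7fELF" or ident[4] != 2 or ident[5] != 1:
        raise ValueError("expected a little-endian ELF64 image")

    fields = EHDR.unpack_from(image, 0)
    e_shoff, e_shentsize = fields[6], fields[11]
    e_shnum, e_shstrndx = fields[12], fields[13]

    def section_header(index):
        return SHDR.unpack_from(image, e_shoff + index * e_shentsize)

    # sh_offset of the section-name string table (.shstrtab).
    strtab_offset = section_header(e_shstrndx)[4]

    for index in range(e_shnum):
        sh_name, _, sh_flags, _, sh_offset, sh_size = section_header(index)[:6]
        start = strtab_offset + sh_name
        end = image.index(b"\0", start)  # section names are NUL-terminated
        if image[start:end].decode("ascii") == wanted:
            return {
                "offset": sh_offset,
                "size": sh_size,
                "alloc": bool(sh_flags & SHF_ALLOC),
                "data": image[sh_offset:sh_offset + sh_size],
            }
    return None
```

A consumer could call `find_section(image, ".annotate")` and check the `alloc` field to confirm that the producer marked the section as loadable. The walk depends on the section-name string table being reachable, which is why that table must be available to the consumer as well.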
The ELF file can be modified so that the section-name string table is loaded as well. The consumer can then read the in-memory representation of the ELF headers and load the contents of the .annotate section.

E.3 The annotation granularity

Annotation data fall into four levels according to the range of the information they describe: the program level, the procedure level, the basic block level, and the instruction level. The instruction level needs the most storage space, because the annotation data must record information for each instruction. Each instruction maps to a virtual address at runtime, so the annotation data contain both the instruction information and the corresponding virtual address. The program level, by contrast, needs the least storage space, because the information describes the whole program and no corresponding virtual addresses need to be kept.

Program-level data describe the whole program. One example is the hot trace annotation, which marks a set of basic blocks that form a frequently executed path, called a hot trace; the hot trace is then treated as an optimization unit at runtime. The phase change annotation marks the program regions that are hit frequently; when the execution path hits these regions more often than a threshold within a given period at runtime, a phase change can be identified. The inter-procedure loop annotation describes the relations among loops across procedures, which helps build hot traces efficiently.

Procedure-level data describe a procedure in the program. An example is the intra-procedure loop annotation, which describes the loops within a procedure. A loop is usually executed many times at runtime and is therefore often treated as an optimization unit.

Basic-block-level data describe a basic block in the program.
The register usage annotation labels the registers used by each basic block, and this information can be used to identify free registers at runtime.

Instruction-level data describe an individual instruction in the program. An example is the memory reference annotation, which records the memory reference made by each instruction. If the memory reference information is known to be correct, the dynamic binary optimizer can go further and reschedule instructions.

E.4 The Consumer

Figure 3: Annotation framework's position in the virtualization system.

Figure 3 shows the position of this sub-project in the whole virtualization system (red parts). The goal of this sub-project is to help sub-project 2 perform dynamic binary translation efficiently and to help sub-project 3 perform advanced optimizations. Sub-project 2 and sub-project 3 are therefore the consumers of this sub-project. The annotation data are first written to the ELF executable file and then read by sub-project 2. Dynamic binary translation by sub-project 2 changes the range of information that the annotation data describe, because the memory layout of the guest machine is replaced by the memory layout of the host machine. The annotation data are therefore adjusted during dynamic binary translation so that the dynamic binary optimizer reads correct annotation data.

F. Work Plan

There are three goals in the first year. The first is to identify annotations from static compiler analysis that improve the efficiency of the dynamic binary translator. The second is to identify annotations from static compiler analysis that help the dynamic binary optimizer perform advanced optimizations. To achieve these two goals, we need a clear understanding of the runtime behavior of the benchmarks as they are manipulated by the dynamic binary translator and the dynamic binary optimizer.
The last is to modify the compiler to produce the annotation data identified under the first two goals.

There are three goals in the second year. The first is to design a guest binary annotation encoding format for the dynamic binary translator. The second is to implement the annotations identified in the first year in the dynamic binary translator. The last is to derive useful annotations from the profile data produced by the dynamic binary translator and feed them back to the dynamic binary translator.

There are three goals in the third year. The first is to translate the guest binary annotation encoding format into the host annotation format for the dynamic binary translator. The second is to implement the annotations identified in the first year in the dynamic binary optimizer. The last is to derive useful annotations from the profile data produced by the dynamic binary optimizer and feed them back to the dynamic binary optimizer.

G. Milestones and Deliverables

First Year

1. Identifying, from static compiler analysis, various annotations that help binary translation and binary optimization, and estimating the performance gain and overhead of using these annotations.
2. Modifying the Open64 compiler to produce the corresponding annotations and estimating the size of the annotations.

Second Year

1. Designing the annotation format through which the binary translator consumes annotations, and writing the annotations to the ELF file.
2. Adding the annotation schemes identified in the first year to the binary translator and evaluating the performance gain and overhead when the dynamic translator uses annotations.
3. Identifying various annotations that help binary translation from the profiles produced by the binary translator, and implementing these annotation schemes in the binary translator.

Third Year

1.
Transforming the annotation format from that of the binary translator to that of the binary optimizer.
2. Adding the annotation schemes identified in the first year to the binary optimizer and evaluating the performance gain and overhead when the binary optimizer uses annotations.
3. Identifying various annotations that help binary optimization from the profiles produced by the binary optimizer, and implementing these annotation schemes in the binary optimizer.
4. Evaluating the performance gain and overhead of the virtualization system when using the annotation framework.