Air Force Test & Evaluation Guidebook

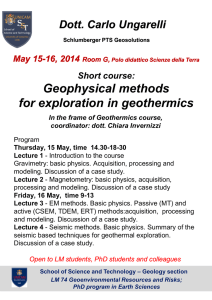

advertisement