Chester F. Carlson Center for Imaging Science

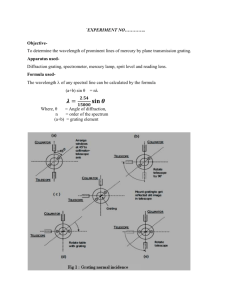

Chester F. Carlson Center for Imaging Science Ph.D. Comprehensive Examination, June 2007

Part I Problems and Solutions

1. In magnetic resonance imaging (MRI), the imaging system puts a stimulus into the imaged object, detects the signal coming back, and processes it to create an image. Consider a hypothetical MRI system that generates a stimulus pulse by apodizing (modulating) a cosine wave (whose frequency, $\nu$, is equal to 100 MHz) with a Gaussian-shaped function of the form $e^{-a(t-b)^2}$, where $a = 7\times10^{14}\ \mathrm{s^{-2}}$ and $b = 2\times10^{-7}\ \mathrm{s}$.

a) Describe the shape of the resultant stimulus pulse and its frequency content (a sketch is acceptable if appropriately labeled and annotated). Where have you seen this stimulus pulse before?

b) This stimulus pulse is sent into the imaged object using a resonant circuit with a quality factor, Q, of 100 (note: $Q = \nu/B$, where B is the resonant circuit bandwidth). Describe the shape and frequency content of the stimulus pulse in the resonant circuit.

Solution:

a) The apodizing function is a Gaussian centered at $2\times10^{-7}$ s. A cosine apodized by this Gaussian is a Gaussian-shaped pulse of 100 MHz energy centered at $2\times10^{-7}$ s.

[Plot: stimulus pulse amplitude (-1 to 1) versus t (s), from 0 to $4\times10^{-7}$ s]

[Where have you seen this stimulus pulse before?] This is the new CIS logo.

b) To determine the frequency content, we take the Fourier transform. The pulse is the product of the cosine and the Gaussian, so by the convolution theorem its spectrum is the convolution of FT(cosine) with FT(Gaussian). The FT of the cosine is a pair of delta functions at ±100 MHz. The FT of a Gaussian is a Gaussian. Since we are interested in the frequency content, we only need the magnitude of this convolution.
$$e^{-\pi^2(\nu - 100\times10^{6})^2/a}$$

[Plot: spectrum magnitude versus +/- frequency (MHz), 0 to 140 MHz]

A resonant device at $\nu$ = 100 MHz with Q = 100 has a Gaussian frequency response with a bandwidth (full width at half height) of

$$B = \nu/Q = 100\times10^{6}\ \mathrm{s^{-1}}/100 = 1\times10^{6}\ \mathrm{s^{-1}}$$

Clearly this is much less than the frequency content of the pulse (roughly 14 MHz full width at half height for the Gaussian spectrum above), and therefore we can assume that the frequency content of the stimulus is determined by the bandwidth of the resonant device. The shape of the frequency domain spectrum of the stimulus in the resonant device is Gaussian, centered on ±100 MHz, and its bandwidth is 1 MHz.

2. You are tasked to design a simple spectrograph for an f/10 telescope with a 10-meter diameter primary mirror. The spectrograph is to be used to disperse IR radiation at a wavelength of 2 microns. The spectrograph consists of a rectangular slit at the prime focus, a collimator (lens) with focal length of 1 m, a diffraction (reflection) grating, a one-element ideal camera lens, and a detector (see diagram below). The detector array has 10 micron pixels. The grating equation is given by

m * lambda * T = sin(a) + sin(b),

where
m = order number
lambda = wavelength
T = groove density
a = angle between input beam and grating normal
b = angle between output beam and grating normal

and the diffraction limited resolution for a grating is R_DL = N * m, where N is the number of illuminated grooves and m = order number.

[Diagram: telescope, slit, collimator, grating (with grating normal), camera lens, detector]

a) If the grating has a groove density of 23 lines/mm, its facet-normals are inclined 63 degrees to the grating normal, and the input and output light beams are also inclined by 63 degrees to the grating normal, in which order will the grating best be utilized (such that the diffracted beam will be centered on the detector)?

b) What combination of collimator diameter, camera lens focal length, and slit width will ensure both that the spectral resolution is diffraction-limited and the dispersed (output) light is Nyquist sampled?
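The grating equation and the resolution formula quoted in this problem can be evaluated numerically. The following is a minimal sketch, not part of the original exam; the variable and function names are illustrative, and it assumes the collimator is sized to accept the full f/10 beam (diameter = focal length / 10) and that two pixels span one resolution element.

```python
import math

# Values taken from the problem statement (lengths in mm).
wavelength_mm = 2e-3          # 2 microns
groove_density = 23.0         # T, grooves per mm
angle = math.radians(63.0)    # a = b = 63 degrees
pixel_mm = 10e-3              # 10 micron pixels
f_collimator_mm = 1000.0      # 1 m collimator focal length
telescope_f_number = 10.0


def grating_order(wavelength, density, a, b):
    """Solve m * lambda * T = sin(a) + sin(b) for m (not yet rounded)."""
    return (math.sin(a) + math.sin(b)) / (wavelength * density)


m_exact = grating_order(wavelength_mm, groove_density, angle, angle)
m = round(m_exact)  # nearest integer order

# Assumption: the collimator just accepts the f/10 beam from the slit.
d_collimator_mm = f_collimator_mm / telescope_f_number

# Illuminated grooves on the tilted grating, then R_DL = N * m.
n_grooves = groove_density * d_collimator_mm / math.cos(angle)
resolution = n_grooves * m
delta_lambda_mm = wavelength_mm / resolution

# Camera focal length that maps one resolution element onto two pixels.
f_camera_mm = 2 * pixel_mm * math.cos(angle) / (m * groove_density * delta_lambda_mm)

print(f"order: {m_exact:.2f} -> m = {m}")
print(f"collimator diameter: {d_collimator_mm:.0f} mm")
print(f"R_DL: {resolution:.0f}")
print(f"camera focal length: {f_camera_mm:.0f} mm")
```

With these inputs the camera focal length reduces algebraically to 2 * x * D_coll / lambda, so it does not depend on the order once the resolution is diffraction-limited.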
Solution:

a) For a = b = 63 degrees, m = 39.

b) For m = 39: the number of illuminated grooves is N = T*D_coll/cos(a), where D_coll is the diameter of the collimator, and D_coll = F_coll/(f_telescope) = 1000 mm/10 = 100 mm. So the diffraction limited resolution is

R_DL = N*m = m*T*D_coll/cos(a) = 23*39*100 mm/cos(63 deg) = 200000 = lambda/delta_lambda.

We can calculate the camera lens focal length by demanding that delta_lambda be matched to two pixels for Nyquist sampling. So F_camera*theta = 2*x, and

F_camera = 2*x/theta = 2*x*cos(b)/(m*T*delta_lambda) = 1 m,

where x is the pixel size. So the magnification is F_coll/F_camera = 1 m/1 m = 1, and the slit width to match two pixels is 20 microns. Note that the imaging diffraction limit for the telescope is theta_DL = 1.22*lambda/D = 50 milliarcseconds, which corresponds to 24 microns at the slit. This is a good match to the grating diffraction limited slit size.

3. The performance of an optical system in the spatial frequency domain can be characterized by its Modulation Transfer Function (MTF). The Contrast Sensitivity Function (CSF) is used to describe the response of the human visual system in the spatial frequency domain. Describe the MTF and the CSF, clearly explaining the relationship and difference between the two metrics, and how each relates to the concept of a “point-spread function.” Include in your discussion the reason for the difference between the low-frequency response of optical systems and the visual system.

[Answer should describe optical MTF as the ratio of modulation out/in as f(spatial frequency), and CSF as the inverse of the detection threshold for sine (or Gabor) targets at different frequencies. Discussion should include the Fourier relationship between MTF/PSF and CSF/retinal receptive field with inhibitory surround. All passive optical systems’ MTF = unity at f = 0, falling to zero at f = ∞, while the CSF is band-pass with a low-frequency drop due to lateral inhibition in the retina. CSF sketch should show the low-frequency drop, a peak at ~3-6 deg^-1, and a limit of ~50-60 deg^-1.
The CSF is the product of the MTF of the visual optics and the neural (frequency) response.]

4. What will be the output if a Gaussian of amplitude 100 and standard deviation 4 that is centered on x0 = 8 is input into a shift-invariant, linear system having an impulse response that is a Gaussian of amplitude 2 and standard deviation 3, but is centered on the origin? Write down the expression and sketch the input and the output on the same graph.

Solution: see next page

5. A photographic transparency 128 mm square is to be digitized by raster scanning with a 1 mm square hole through which light passes to a photodetector. Special transparencies are prepared for test purposes in the form of gratings consisting of alternate opaque and transparent bars of equal width. As the square hole scans across the grating in the direction normal to the bars, the digitized output rises and falls between maxima Ymax and minima Ymin. Define “contrast” in decibels to mean 10 log10(Ymax/Ymin).

a. When the opaque bars are 10 mm wide, what is the contrast?
b. Define “resolution” as the number of bars per millimeter such that the contrast is 3 dB. What is the resolution?
c. What output would you expect if the bars were 0.5 mm wide?
d. What would the contrast be for bars of width 0.33 mm?

Solution: The scanning aperture is a 2D RECT(x,y). The light transmitted by a transparency t(x,y) is the 2D convolution t(x,y)**RECT(x,y), whose Fourier transform is, via the filter theorem, SINC(u,v)T(u,v).

a. The test grating has a spatial period of 20 mm, therefore the fundamental spatial frequency is 0.05 cycles/mm. Since SINC(0.05) = 0.996 the response ratio for a sinusoidal test pattern would be Ymax/Ymin. For the bar pattern Ymax/Ymin is infinite.

b. Since SINC(0.75) = 0.3 we might expect 0.75 bar/mm to be a rough estimate of the resolution. It turns out that Ymax is 2/3 of the full response (1 mm hole centered on 0.67 mm transparency strip) and Ymin is 1/3 (hole centered on 0.67 mm opaque bar).

c.
A steady output equal to ½ full response.

d. The contrast is 3 dB because Ymax is 0.67 (hole centered on 0.33 mm bar) and Ymin = 0.33 (hole centered on 0.33 mm transparency strip).

6. Maximum likelihood classification of multiband data is based on descriptive statistics of Gaussian-distributed random variables. Beginning with Bayes’ theorem, using probabilities defined in N-space, derive the linear discriminant functions used in maximum likelihood classification and describe the physical interpretation of each term that remains in the final discriminant function.

Solution: Given Bayes’ theorem, one can define the conditional probability of finding a pixel that belongs to class $\omega_i$ given that we have a pixel located at position $\mathbf{x}$ in N-space:

$$p(\omega_i \mid \mathbf{x}) = \frac{p(\mathbf{x} \mid \omega_i)\, p(\omega_i)}{p(\mathbf{x})}$$

where $p(\mathbf{x} \mid \omega_i)$ is the conditional probability that a pixel exists at position $\mathbf{x}$ in N-space given that we are interested in pixels of material type $\omega_i$, $p(\omega_i)$ is the a priori probability of finding a pixel of type $\omega_i$ in the scene, and $p(\mathbf{x})$ is the N-dimensional probability density function of the image. Assuming Gaussian-distributed data, we can represent the conditional probability in the numerator above as

$$p(\mathbf{x} \mid \omega_i) = \frac{1}{(2\pi)^{N/2}\,|\Sigma_i|^{1/2}}\; e^{-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_i)^t\,\Sigma_i^{-1}\,(\mathbf{x}-\boldsymbol{\mu}_i)}$$

where $\boldsymbol{\mu}_i$ and $\Sigma_i$ are the mean vector and covariance matrix for representative pixels chosen to represent class $\omega_i$.
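To decide which class of material a particular pixel belongs to, one can consider the decision rule

$$\mathbf{x} \in \omega_i \quad \text{if} \quad p(\omega_i \mid \mathbf{x}) > p(\omega_j \mid \mathbf{x}) \quad \text{for all } j \neq i$$

but since we don’t know $p(\omega_i \mid \mathbf{x})$ directly, one can rewrite the inequality in terms of the Bayes theorem representation above as

$$\frac{p(\mathbf{x} \mid \omega_i)\, p(\omega_i)}{p(\mathbf{x})} > \frac{p(\mathbf{x} \mid \omega_j)\, p(\omega_j)}{p(\mathbf{x})}$$

which reduces to

$$p(\mathbf{x} \mid \omega_i)\, p(\omega_i) > p(\mathbf{x} \mid \omega_j)\, p(\omega_j)$$

and allows the decision rule to be rewritten as

$$\mathbf{x} \in \omega_i \quad \text{if} \quad p(\mathbf{x} \mid \omega_i)\, p(\omega_i) > p(\mathbf{x} \mid \omega_j)\, p(\omega_j) \quad \text{for all } j \neq i$$

This decision rule allows us to utilize probabilities that can either be determined from class-specific descriptive statistics or estimated from visual inspection of the image. Converting these probability products to discriminant functions, our decision rule becomes

$$\mathbf{x} \in \omega_i \quad \text{if} \quad g_i(\mathbf{x}) > g_j(\mathbf{x}) \quad \text{for all } j \neq i$$

where $g_i(\mathbf{x})$ is represented as

$$\begin{aligned}
g_i(\mathbf{x}) &= \ln\left[p(\mathbf{x} \mid \omega_i)\, p(\omega_i)\right] = \ln p(\mathbf{x} \mid \omega_i) + \ln p(\omega_i) \\
&= \ln p(\omega_i) + \ln\left[\frac{1}{(2\pi)^{N/2}\,|\Sigma_i|^{1/2}}\; e^{-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_i)^t\,\Sigma_i^{-1}\,(\mathbf{x}-\boldsymbol{\mu}_i)}\right] \\
&= \ln p(\omega_i) + \ln\left[(2\pi)^{-N/2}\,|\Sigma_i|^{-1/2}\; e^{-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_i)^t\,\Sigma_i^{-1}\,(\mathbf{x}-\boldsymbol{\mu}_i)}\right] \\
&= \ln p(\omega_i) + \ln (2\pi)^{-N/2} + \ln |\Sigma_i|^{-1/2} + \ln e^{-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_i)^t\,\Sigma_i^{-1}\,(\mathbf{x}-\boldsymbol{\mu}_i)} \\
&= \ln p(\omega_i) - \frac{N}{2}\ln 2\pi - \frac{1}{2}\ln|\Sigma_i| - \frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_i)^t\,\Sigma_i^{-1}\,(\mathbf{x}-\boldsymbol{\mu}_i)
\end{aligned}$$

and eliminating the constant term for the N-space, one is left with

$$g_i(\mathbf{x}) = \ln p(\omega_i) - \frac{1}{2}\ln|\Sigma_i| - \frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_i)^t\,\Sigma_i^{-1}\,(\mathbf{x}-\boldsymbol{\mu}_i)$$

At this point, the remaining three terms can be interpreted as follows:

$\ln p(\omega_i)$ represents the probability that a pixel is of type $\omega_i$ given no other knowledge of the actual multiband digital count data in the image (your best guess knowing what the rough scene composition is).

$-\frac{1}{2}\ln|\Sigma_i|$ represents a penalty function for a class which has a very “large” covariance among its band constituents.

$-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_i)^t\,\Sigma_i^{-1}\,(\mathbf{x}-\boldsymbol{\mu}_i)$ represents a penalty function for being far away from the mean (in a covariance normalized sense) of a class of pixels in question.

7. I want to use the digital camera setup shown below to measure the reflectance of samples located as shown below. What reflectance would you calculate for my sample if I observe a signal of $5.7\times10^{4}$ electrons?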
What error would I assign to that measurement due to the detector if the noise for similar signal levels is 900 electrons?

Solution: see next page

8. A photon detector has a dark noise whose variance, $\sigma_D^2$ = 0.1 counts/second, is proportional to the exposure time, and a readout noise whose variance, $\sigma_R^2$ = 10 counts, is at a fixed level. The detector area is A = 0.3 cm² and the detector conversion efficiency is 0.75 counts/photon. Find the DQE when the detector is placed in a flux of 3.3 photons/cm²/s and is exposed for T = 10 minutes.

Chester F. Carlson Center for Imaging Science Ph.D. Comprehensive Examination, June 2007

Part II Problems and Solutions

9. The Center for Imaging Science is considering putting a small telescope, equipped with a CCD camera (at the telescope focal plane), on the roof of the Carlson building. The telescope would be a 16" (~0.4 m) diameter f/10 reflector. It is estimated that (1) telescope vibrations, due to such things as students walking around on the roof and elevator motion, will have mean amplitudes of about 20 microns at frequencies ~10 Hz; and (2) the best-case “seeing” (atmospheric turbulence) will smear the angular diameter of images of point sources (i.e., stars) to a full width at half maximum of about 2 arcseconds (1/1800 of a degree) in typical exposure times of a few seconds. The available CCD cameras have CCDs with the following specifications:

512x512, 20 micron pixels;
1600x1200, 7.4 micron pixels;
765x510, 9 micron pixels.

The goal is to perform wide-field imaging while fully sampling the system point spread function. Which is the best choice of CCD, and what field of view (in solid angle, i.e. square degrees or steradians) will it yield? Be sure to state any important assumptions you have made.
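The quantities this problem turns on (the blur size at the focal plane from each effect, and the field of view of each CCD) are simple enough to tabulate. The script below is a sketch under stated assumptions, not part of the original exam: it assumes visible light at 550 nm, objects at infinity, a focal length of 10 x 0.4 m, and a field of view computed from the full chip dimensions with the small-angle approximation. All names are illustrative.

```python
import math

ARCSEC_RAD = math.pi / (180 * 3600)  # one arcsecond in radians

aperture_m = 0.4
focal_length_m = 10 * aperture_m     # f/10 system
wavelength_m = 550e-9                # assumed observing wavelength


def blur_at_focal_plane_um(angle_rad):
    """Linear size (microns) subtended by a small angle at the focal plane."""
    return angle_rad * focal_length_m * 1e6


diffraction_um = blur_at_focal_plane_um(1.22 * wavelength_m / aperture_m)
seeing_um = blur_at_focal_plane_um(2 * ARCSEC_RAD)
vibration_um = 20.0                  # given directly in the problem

# (pixels across, pixels down, pixel pitch in microns)
ccds = {
    "512x512, 20 um": (512, 512, 20.0),
    "1600x1200, 7.4 um": (1600, 1200, 7.4),
    "765x510, 9 um": (765, 510, 9.0),
}


def field_of_view_sq_deg(nx, ny, pixel_um):
    """Chip field of view in square degrees (small-angle approximation)."""
    width_deg = math.degrees(nx * pixel_um * 1e-6 / focal_length_m)
    height_deg = math.degrees(ny * pixel_um * 1e-6 / focal_length_m)
    return width_deg * height_deg


print(f"diffraction: {diffraction_um:.1f} um, vibration: {vibration_um:.0f} um, "
      f"seeing: {seeing_um:.1f} um")
for name, (nx, ny, pixel_um) in ccds.items():
    pixels_per_fwhm = seeing_um / pixel_um
    fov = field_of_view_sq_deg(nx, ny, pixel_um)
    print(f"{name}: {pixels_per_fwhm:.1f} pixels per seeing FWHM, FOV = {fov:.4f} sq deg")
```

The pixels-per-FWHM column is the sampling figure to compare against the Nyquist requirement of about two samples across the dominant blur.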
Solution: The student should recognize, and implicitly show or explicitly state, that (s)he needs to calculate the telescope system PSF under three different limiting assumptions, (a) diffraction limited, (b) vibration limited, and (c) atmosphere (“seeing”) limited, and then work out which CCD offers the best compromise between spatial sampling at the Nyquist frequency and maximal angular field of view.

a) The diffraction limit of this telescope at 550 nm is theta = 1.22 (lambda/D) = 1.22 * 1.38e-6 = 1.67e-6 radians (~0.34 arcsec). Its focal length is 4 m (10 * 0.4 m). Hence the PSF FWHM at the focal plane (assumption: objects to be imaged are at infinity) is FWHM = 1.67e-6 * 4 m = 6.7 microns.

b) The vibration limit is given as 20 microns, assuming exposure times >> 0.1 sec.

c) 2 arcsec ~ 1e-5 radians, or 40 microns at the focal plane.

Hence the image will be seeing-limited, and the best choice is the 20-micron-pixel CCD, which would yield a FOV of about 0.02 sq degrees (~6e-6 steradians).

10. Two searchlights are pointing toward a two-slit diffraction apparatus you have constructed for purposes of measuring interference patterns. The searchlights are equally bright and at about the same distance (10 km); they are placed 3 km apart from each other. The apparatus consists of a filter that passes monochromatic light of wavelength lambda (where lambda can be selected anywhere within the range 100 nm to 1 micron), followed by a flat surface with a pair of slits whose separation is also adjustable (call this separation d). The normal to the slit surface points to the midpoint between the searchlights.

a) Find the total intensity as a function of d and angle (theta) away from this normal, for the interference pattern of the light that passes through the slits.

b) How must one change d so as to produce patterns with the same spatial frequency for wavelengths that lie at the extremes of the range of human vision?
Solution:

a) To arrive at the correct solution the student must first note which sources are coherent. Light from each beam falls on each of the two slits, and so the light from each beam emerging from the two slits is coherent. But the two beams are not coherent sources with respect to each other. So we have a two-slit interference pattern for each beam, centered on the line joining the beam and the slits. These two patterns then add incoherently (that is, the intensities add). The student should write down the expression for intensity as a function of angle that is appropriate for two-slit diffraction for each beam, and then the expression for the sum of the intensities of the two patterns. (See the solution on the next page to the “Rigel and Betelgeuse” problem for specific formulae; the two searchlights are separated in angle by about 17 degrees, as in that problem.)

b) As the wavelength increases, the slit separation must increase, to maintain the same spatial frequency. Since the range of human vision is about a factor of two in wavelength, d must change by about a factor of two…

11. Like many optical systems, the human eye suffers from chromatic and spherical aberrations, but there are characteristics of the system that serve to limit the image-quality limitations due to those aberrations. Describe the causes of chromatic and spherical aberration in the visual system and discuss the characteristics and methods that serve to maintain the quality of the perceived image.

[Answer should discuss the fact that index of refraction is f(λ), so dispersion takes place in cornea, crystalline lens, aqueous and vitreous humors; spherical surfaces are not the ideal shape for the s/s’ conjugates, and marginal rays are refracted too strongly with respect to paraxial rays.

Chromatic Aberration:

i. When sufficient light is available, the pupil constricts, limiting the aperture and the circle-of-confusion due to chromatic aberration.
ii. There are far fewer S cones in the retina than M&L cones, so the short-wavelength image (which is significantly blurred by chromatic aberration) is under-sampled compared to the rest of the spectrum.

iii. The macula lutea (‘yellow spot’) blocks blue light over the ~5º of central retina, limiting further blurring due to short wavelength in central vision.

iv. There is a very small (~0.5º) region in the very center of the retina that has no S cones at all.

Spherical Aberration:

i. When sufficient light is available, the pupil constricts, limiting the aperture and therefore the spherical aberration.

ii. The cornea is not spherical; at the point where it joins with the sclera the radius gradually increases, forming an aspherical surface with lower power at the periphery (compensating for spherical aberration).

iii. The crystalline lens is a gradient-index (GRIN) material, with the index greatest at the center (compensating for spherical aberration).

iv. The Stiles-Crawford Effect: Cones in the fovea are directionally selective; light entering the center of the pupil has a higher relative luminous efficiency than marginal rays (which are more likely to be absorbed in the retina before reaching the photodetectors).]

12. Suppose you are allowed only one input to an imaging system that is known to be linear and shift-invariant. That input function f(x) is given below:

$$f(x) = \mathrm{RECT}(2x) * \mathrm{COMB}(x/2)$$

Let the system MTF be given by $H(\xi)$, where $\xi$ stands for the spatial frequency. Assume that you have the system output available to you for analysis. Decide if you can determine the system MTF at only one frequency or at many other discrete frequencies as well. Justify your answer and be as quantitative as possible. If you can determine the system MTF at other discrete frequencies, what are those possible frequencies and how will you determine the MTF?

Solution:

13.
Suppose you were provided with the following quantized phase image:

You then apply some sort of transformation and obtain the following image:

Name and describe (using appropriate equations/formalism) the phenomenon in the transformation process that has produced the upside down ghost image.

Solution: The quantized phase image is a real array with no symmetry, which means that its Fourier transform is Hermitian (real part even, imaginary part odd). The mirror image is a consequence of these symmetry properties.

14. Some digital SLR cameras have preset shutter and aperture settings that simulate 100, 200, 400 and 1000 speed film. In reading through the manual for such a camera, there appears the following footnote, regarding how many images can be stored in JPEG format on Compact Flash cards of various sizes:

“The above estimates [of the number of images that could be stored] are for an ISO setting of 100. If you shoot with the camera set for a higher ISO speed, the number of images that can be stored will be reduced.”

Explain this footnote in terms of the reason(s) that the number of digital images collected should be dependent on equivalent film speed. Your explanation should include answers to the following: What camera setting(s) should be adjusted to simulate ISO (film) speed, and how would these be adjusted to obtain images at “higher speed”? What are the key aspects of digital images that make them compressible? How is the specific file format important; in particular, does the above footnote offer any indication as to whether this camera stores images in JPEG or JPEG2000 format?

Solution/explanation: The camera must either increase the shutter speed or decrease the aperture (or both) to simulate the use of film with higher ISO speed. Hence the dependence of storage capacity on simulated ISO speed is due to the increased level of noise produced by the reduction in the number of photons that impact the exposure of the array.
Classically, interpixel redundancy, coding redundancy, and psychovisual redundancy are the three areas that compression routines attempt to exploit. JPEG specifically attempts to do so by subsectioning the image into 8x8 blocks in the hope that the interpixel redundancy in this small area is high. This results in relatively few significant DCT coefficients for the block transform and hence, after quantization, less data to store. Increased noise increases the number of significant coefficients and therefore the storage requirement. JPEG2000 does not rely on the DCT, but on the DWT instead. The block size is also variable from 1 pixel to the full image size. So the result is much less clear. In general, however, the amount of energy described in the LH, HL, and HH subbands will be increased due to the increase in high frequency information in the image (random noise), so the minimization in data size due to arithmetic coding will be less effective. This suggests that the camera uses JPEG image formatting.

15. Late one night, my mad professor released a new gas he has developed which he assures us will induce anyone who breathes it to support his effort to take over the world. In the lab we have carefully analyzed the gas and found it strongly absorbs at 567 nm, has an absorption cross section of $2\times10^{-12}\ \mathrm{m^2}$ at this wavelength, and the professor claims it is effective only when present at more than a million parts/m³. We decide to use a setup we have on the roof, to bounce lasers off the U of R’s Optics Lab where we have installed a large mirror, to see if it’s safe to go out. At 4 am (when we believe the gas has uniformly dissipated across town) we don gas masks and aim a 567 nm laser with a $0.5\times10^{-3}$ radian beam divergence and 0.5 W of power at the mirror 6 km away. We observe the irradiance on the sensor collocated with our laser to be $1.5\times10^{-2}\ \mathrm{W/m^2}$.
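Is it safe to go out on the streets or are our fellow citizens doomed as we are to be forever the pawns of the mad professor?

Solution: The irradiance at the sensor is

$$E = \frac{\Phi\,\tau}{A}$$

where $\Phi$ is the laser power, $\tau$ is the transmission over the path, and A is the area illuminated:

$$A = \frac{\pi d^2}{4} = \frac{\pi r^2 \theta^2}{4}$$

where d is the diameter of the illuminated spot, $d = r\theta$, $\theta$ is the divergence angle, $\rho$ is the distance to U of R, and the round-trip path is $r = z = 2\rho = 12$ km. Solving for the transmission,

$$\tau = \frac{E A}{\Phi} = \frac{E\,\pi r^2 \theta^2}{4\,\Phi} = 0.848 = e^{-mCz}$$

where C is the absorption cross section and m is the number density of the gas, so

$$m = -\frac{\ln \tau}{C z} = 6.87\times10^{6}\ \text{molecules/m}^3$$

Since this exceeds the one million per m³ threshold: …WE ARE DOOMED…

16. Photons arrive at a detector at a rate of 900 photons per millisecond. Estimate the probability that the detector sees more than 930 photons in one millisecond.

JHK notes: OOPS! I made no such table available…should be interesting to see how the students handled this…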
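No worked solution for Problem 16 appears here, so the following is only a sketch of one reasonable approach, not the examiner's answer. It assumes photon arrivals are Poisson distributed with mean 900 per millisecond, approximates the Poisson distribution by a Gaussian with equal mean and variance (so 930 sits one standard deviation above the mean), and compares that against a direct sum of the Poisson probabilities. The function names are illustrative.

```python
import math


def poisson_tail_normal(mean, threshold):
    """Approximate P(X > threshold) using a Gaussian with variance equal to the mean."""
    z = (threshold - mean) / math.sqrt(mean)
    return 0.5 * math.erfc(z / math.sqrt(2))


def poisson_tail_exact(mean, threshold):
    """P(X > threshold) for a Poisson variable, summing the pmf in log space."""
    terms = (
        math.exp(-mean + k * math.log(mean) - math.lgamma(k + 1))
        for k in range(threshold + 1)
    )
    return 1.0 - math.fsum(terms)


approx = poisson_tail_normal(900, 930)
exact = poisson_tail_exact(900, 930)
print(f"normal approximation: {approx:.4f}")
print(f"direct Poisson sum:   {exact:.4f}")
```

The Gaussian estimate is the one-sided tail beyond one standard deviation, about 0.16, which is the kind of number a standard normal table would supply.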