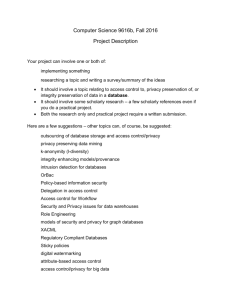

fnt-end-user-privacy.. - School of Computer Science
