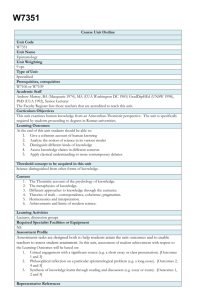

Formal methods and Bayesian epistemology: Two Hegelian Dialectics

Alan Hájek

[Nearly-finished draft]

0. Introduction

Our brief for this workshop is to discuss:

In what ways do (or should, or can) Formal Methods shed light on Traditional Epistemological Concerns? In other words, how may formal epistemology fruitfully inform and engage traditional issues and approaches, and how should traditional approaches respond to the formalists?

There are three key phrases there: formal methods, traditional epistemological concerns, and formal epistemology. They will be my main points of departure in this talk. In the spirit of the workshop’s goal of fostering discussion of these themes, I will present my talk in the form of two Hegelian dialectics:

1. Formal methods: thesis and antithesis
(i) I will give a brief characterization of formal methods and examine their use in philosophy – in particular, in epistemology.
(ii) I will consider first the thesis that formal methods are a boon to philosophy, and especially to epistemology.
(iii) I will then consider the antithesis that formal methods are a bane to philosophy, and especially to epistemology.
(iv) Then, like a good Hegelian, I will offer something of a synthesis, attempting some reconciliation of these views.

2. Traditional epistemology vs Bayesian epistemology: thesis and antithesis
(i) I will give a brief characterization of traditional epistemology and of Bayesian epistemology, my favorite example of formal epistemology.
(ii) I will consider first the thesis that Bayesian epistemology is superior to, or should supplant, traditional epistemology.
(iii) I will then consider the antithesis that Bayesian epistemology is not superior to, and should not supplant, traditional epistemology, and indeed that Bayesian epistemology fails to do justice to some of the deepest problems in epistemology.
(iv) Then, like a good Hegelian, I will offer something of a synthesis, suggesting that both approaches are alive and well: Bayesian epistemology directs us to various promising avenues of research for epistemology, while traditional epistemology still has things to offer the Bayesian.

[Time allowing, I may go on to:
3. Bayesian epistemology vs other formal approaches: thesis only
In passing, I want briefly to compare Bayesian epistemology with a couple of rival formal approaches to epistemology. There, I won’t be Hegelian at all. Thesis: Bayesian epistemology is just plain superior to them. End of the dialectic! (Well, actually I will confess my ignorance of the rival approaches, and invite the audience to continue the dialectic.)]

1. Formal methods: thesis, antithesis, and synthesis

1(i) What are formal methods?

It’s easy to come up with examples of formal methods: the use of various logical systems, computational algorithms, causal graphs, information theory, probability theory, and mathematics more generally. What do they have in common? They are all abstract representational systems. Sometimes the systems are studied in their own right for their intrinsic interest, but often they are regarded as structurally similar to some target subject matter of interest to us, and they are studied to gain insights about that. They often, but not invariably, have an axiomatic basis; they sometimes have associated soundness and completeness results.

There is something of a spectrum of ‘formality’ here. At the high end, we have, for example, the higher reaches of set theory. At the low end, we have rather informal presentations of arguments in English in ‘premise, premise … conclusion’ form. Higher up, we find more formal representations of these arguments, whittled down to schematic letters, quantifiers, connectives, and operator symbols. Near the top we find Euclid’s Elements; lower down, Spinoza’s Ethics.
1(ii) Thesis: Formal methods are a boon to philosophy, and especially to epistemology

Formal methods often force us to be explicit about our assumptions, keeping us on high alert when questionable assumptions might otherwise be smuggled in. Formal systems often provide a safeguard against error: by meticulously following a set of rules prescribed by a given system, we minimize the risk of making illicit inferences. And reducing a proof to symbol manipulation, when that is possible, can often make the proof easier to carry out. It is striking how one can start with a rather imprecise philosophical problem stated in English, precisify it, translate it into a formal system, use the inference rules of the system to prove some results about it, and then translate back out to a conclusion stated in English. It is easy to admire the rigor, the sharpening of questions and their resolution, and to enjoy the feeling that one is really getting results.

Formal methods also provide something of a lingua franca. I was recently in China, and when English failed my interlocutors (and trust me, my Mandarin didn’t even get off the starting line), we could still communicate in the language of logic and mathematics—well, somewhat.

I am also impressed by how formal systems can stimulate creativity. Staring at the theorems of a particular system can make one aware of hitherto undiscovered possibilities, or of hitherto unrecognized constraints. And it can enable one to discern common structures across different subject matters. I’ll give a couple of examples from my own work. This is not so much an act of shameless self-promotion (although it may be that too) as a matter of my being particularly authoritative about the creative processes involved, such as they were; it’s not for me to speculate about what may have inspired someone else’s creative processes.

A former student of mine, Harris Nover, and I offered a new paradox for expected utility theory in our (2004).
Expected utilities are sums—sums of products of the form ‘utility times probability’. The St. Petersburg game exploits one well-known kind of anomalous sum: a divergent series. But we know from real analysis that another kind of anomalous infinite sum is one that is conditionally convergent. If we leave it alone, it converges, but if we replace all of its terms by their absolute values, the resulting series diverges. Riemann’s rearrangement theorem tells us that every conditionally convergent series can be reordered so as to sum to any real number we like. It can also be reordered so as to diverge to infinity or to negative infinity, or so as simply to diverge. Now let this piece of mathematics guide the creation of a new game whose expectation series has exactly this property—the formal model thus inspires a new kind of anomaly for rational decision-making. Harris and I proposed a St. Petersburg-like game, the Pasadena game, in which the pay-offs alternate between rewards and punishments in such a way that the resulting expectation series is conditionally convergent. Since the value of such a series depends on the order in which its terms are summed, and there is apparently no privileged order, decision theory apparently tells us that the expected utility of the game is undefined, and so it falls silent about the game’s desirability. Worse, the theory falls silent about the desirability of everything, as long as you give any credence whatsoever to your playing the Pasadena game, for any mixture of an undefined quantity with any other quantity is itself undefined. In that case, for example, you can’t rationally choose between pizza and Chinese food for dinner, since both have undefined expectations (each being ‘poisoned’ by a positive probability, however tiny, of a subsequent Pasadena game). Thus you are paralyzed—a sin against practical rationality. Yet assigning probability 0, as opposed to an extremely tiny positive probability, to the Pasadena game seems excessively dogmatic—a sin against theoretical rationality.
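The rearrangement point can be made concrete with a minimal Python sketch. It assumes the payoff schedule of Nover and Hájek’s (2004) Pasadena game, which is not spelled out above. A fair coin is tossed until it lands heads, and if the first heads occurs on toss n, the pay-off is (-1)^(n-1) 2^n / n. The nth term of the expectation series is therefore (-1)^(n-1)/n, the alternating harmonic series. Summed in its natural order, the series approaches ln 2. The illustrative helper `rearranged_partial_sum` is hypothetical. It uses the greedy construction from the proof of Riemann’s theorem to steer the very same terms toward any chosen target.

```python
import math


def pasadena_term(n):
    """Contribution of the outcome 'first heads on toss n' to the expectation.

    Assumed payoff: (-1)**(n - 1) * 2**n / n, received with probability 1 / 2**n,
    so the product simplifies to (-1)**(n - 1) / n.
    """
    return (-1) ** (n - 1) / n


def natural_partial_sum(num_terms):
    """Sum the expectation series in its natural order n = 1, 2, 3, ..."""
    return sum(pasadena_term(n) for n in range(1, num_terms + 1))


def rearranged_partial_sum(target, num_terms):
    """Hypothetical helper: greedy reordering from the proof of Riemann's theorem.

    Positive terms come from odd n (1, 1/3, 1/5, ...), negative terms from even n
    (-1/2, -1/4, ...). Add the next positive term while the running total is at or
    below the target, and the next negative term while it is above. Each term is
    used at most once, and in the limit every term gets used, so this is a genuine
    reordering of the same series.
    """
    total = 0.0
    next_odd, next_even = 1, 2
    for _ in range(num_terms):
        if total <= target:
            total += pasadena_term(next_odd)
            next_odd += 2
        else:
            total += pasadena_term(next_even)
            next_even += 2
    return total


if __name__ == "__main__":
    n = 200_000
    print(f"natural order: {natural_partial_sum(n):.6f} (ln 2 = {math.log(2):.6f})")
    for target in (-1.0, 0.0, 2.0):
        print(f"reordered toward {target}: {rearranged_partial_sum(target, n):.6f}")
```

Every run sums exactly the same terms; only their order differs. The natural-order total lands close to ln 2, and each reordered total lands close to its target.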
The upshot was that the use of the formal mathematical model of decision theory facilitated the invention of a new kind of problem for decision theory.

At the risk of more self-promotion (again, because it’s an example I know particularly well): my early philosophical work was on probabilities of conditionals. I liked Adams’s and (independently) Stalnaker’s idea of looking to probability theory, and in particular to its familiar notion of conditional probability, for inspiration in understanding conditionals. In short, they looked to a formal structure for illumination on a philosophical problem. They both advanced versions of the thesis that probabilities of conditionals are conditional probabilities. Then along came Lewis’s triviality results, which began an industry of showing that various precisifications of the thesis entailed the triviality of the probability functions. I liked this industry a lot, and I joined in, proving some further triviality results. One of them owed its existence to the easy visualization that can be given of probabilities in terms of a ‘muddy Venn diagram’ (in van Fraassen’s coinage). [EXPLAIN PERTURBATIONS. NOTICE THAT IF THE CONDITIONAL IS INDEXICAL, THE PROOF IS BLOCKED.] Without this heuristic I could not have ‘seen’—in both senses of the word—the trouble that probability dynamics could cause for Adams’s and Stalnaker’s theses.

Then along came the so-called desire-as-belief thesis. David Lewis canvassed a certain anti-Humean proposal for how desire-like states are reducible to belief-like states: roughly, the desirability of X is the probability that X is good. He proved certain triviality results that seemed to refute the proposal. This was another lovely example of how formal methods could serve philosophical ends: this time, a thesis that was born in an informal debate in moral psychology could apparently be expressed decision-theoretically.
The decision-theoretic machinery could then be deployed to deliver a formal verdict, which could in turn be translated back to bear on the informal debate. I noticed that Adams’s and Stalnaker’s thesis that probabilities of conditionals are conditional probabilities looked suspiciously like the desire-as-belief thesis. I also noticed that Lewis’s triviality results against the former looked suspiciously like his triviality results against the latter. This gave me the idea that the subsequent moves and countermoves made in the probabilities-of-conditionals debate could be mimicked in the desire-as-belief debate. ‘Perturbations’ similarly cause trouble for the desire-as-belief thesis. And I showed that his later triviality results could be blocked by making ‘good’ indexical, in just the sense in which making the conditional indexical blocks Lewis’s original triviality results and the perturbation results. Philip Pettit and I (2003) then translated back out of the formalism, suggesting meta-ethical theories that accorded ‘goodness’ the necessary indexicality. So the trick was to notice a similarity between the formal structures that underpinned the probabilities-of-conditionals and the desire-as-belief debates, something that I could not have noticed about the original debates themselves. After that, it was easy to see how the next stages of the desire-as-belief debate should play out, paralleling the way they did in the probabilities-of-conditionals debate. Two seemingly disparate debates turned out to be closely related.

1(iii) Antithesis: Formal methods are a bane to philosophy, and especially to epistemology

In my paean to formal methods I have said a bit about how their use may fertilize the imagination. There is also a risk of the opposite phenomenon: that they may encourage one to think inside a box, constraining one’s imagination.
I have mentioned how formal methods often force one to be clear on the assumptions that one is making. The risk is that the assumptions underlying a given model may harden into presuppositions that go unquestioned when perhaps they should be questioned.

There is a danger of reading too much off a given formalism. It may resemble some target in certain important respects, but it must differ from the target in other important respects. (Compare how a map of a city differs from the city itself in all sorts of ways—it had better do so in order to be of any use!) One has to be vigilant in keeping track of which assumptions are specific to a formal model, and which carry over to the target. A solution to a problem in a formal model may not be a solution to its real-life counterpart; and one should be careful when reifying a problem in a formal model, reading it back into the world.

For example, I think that some Bayesians overstate the lessons of the famous convergence theorems. Here is a real-world question: how can we explain the huge amount of agreement we find among different humans, and in particular, among scientists? A common Bayesian answer: the convergence theorems show us that in the long run such agreement is guaranteed. For example:

If observations are precise… then the form and properties of the prior distribution have negligible influence on the posterior distribution. From a practical point of view, then, the untrammeled subjectivity of opinion… ceases to apply as soon as much data becomes available. More generally, two people with widely divergent prior opinions but reasonably open minds will be forced into arbitrarily close agreement about future observations by a sufficient amount of data. (Edwards, et al., p. 201)

Call this convergence to intersubjective agreement; such agreement, moreover, is often thought to be the mark of objectivity (see Nozick 19xx). The “forcing” here is a result of conditionalizing the people’s priors on the data.
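A toy model may help to show what this “forcing” amounts to and what it presupposes. The following minimal Python sketch is an illustration only. Its three hypotheses about a coin’s bias, the particular priors, and the deterministic data stream (repeated blocks of seven heads and three tails, so 70% heads) are all assumptions made for the example. Each agent conditionalizes on the tosses one at a time. Two agents start with widely divergent priors, but both give every hypothesis positive probability. Both end up with nearly all their credence on the 0.7 hypothesis, and so in close agreement. A third agent gives that hypothesis probability 0 from the start and is never moved toward it.

```python
def conditionalize(prior, heads):
    """Update credences over coin-bias hypotheses on a single toss.

    `prior` maps each hypothesized bias (chance of heads) to a credence.
    """
    unnormalized = {
        bias: credence * (bias if heads else 1 - bias)
        for bias, credence in prior.items()
    }
    total = sum(unnormalized.values())
    return {bias: weight / total for bias, weight in unnormalized.items()}


def run(prior, data):
    """Conditionalize on each observation in turn and return the final credences."""
    credences = dict(prior)
    for toss in data:
        credences = conditionalize(credences, toss)
    return credences


# Illustrative data: 20 blocks of 7 heads then 3 tails (200 tosses, 70% heads).
data = ([True] * 7 + [False] * 3) * 20

# Hypothetical agents; each prior is a probability distribution over three biases.
agents = {
    "leans low": {0.3: 0.80, 0.5: 0.19, 0.7: 0.01},
    "leans high": {0.3: 0.05, 0.5: 0.15, 0.7: 0.80},
    "dogmatic": {0.3: 0.50, 0.5: 0.50, 0.7: 0.00},
}

if __name__ == "__main__":
    for name, prior in agents.items():
        posterior = run(prior, data)
        summary = ", ".join(f"P({b}) = {c:.4f}" for b, c in posterior.items())
        print(f"{name:>10}: {summary}")
```

Conditionalization can only rescale credences that are already positive; it never revives a hypothesis given probability 0. This is why convergence results of this kind need some assumption of open-mindedness about the priors.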
Gaifman and Snir (19xx) and Jim Hawthorne (200x) similarly show that for each suitably open-minded agent, there is a data set sufficiently rich to force her arbitrarily close to assigning probability 1 to the true member of a partition of hypotheses. Call this convergence to the truth.

These are beautiful theorems, but one should not overstate their epistemological significance. They are ‘glass half-full’ theorems, but a simple swap of the quantifiers turns them into ‘glass half-empty’ theorems. For each data set, there is a suitably open-minded agent whose prior is sufficiently perverse to thwart such convergence. After conditionalizing her prior on the data set, she is still nowhere near assigning probability 1 to the true hypothesis, and still nowhere near agreement with other people. And strong assumptions underlie the innocent-sounding phrases “suitably open-minded agent” and “sufficiently rich data set”. No data set, however rich, will drive a dogmatic agent anywhere at all. Worse, an agent with a wacky enough prior will be driven away from the truth. Consider someone who starts by giving low probability to being a brain in a vat, but whose prior regards all the evidence that she actually gets as confirming that she is one. And we can always come up with rival hypotheses that no course of evidence can discriminate between. Think of the irresolvable conflict between an atheist and a creationist who sees God’s handiwork in everything.

Relatedly, there may be a residue of an original problem that remains even when it has been solved in a model. For example, there has been wonderful work on analyzing the preface paradox from a Bayesian point of view; Jim Hawthorne’s paper with Luc Bovens is an example. But the preface paradox was originally a paradox about belief. I have myself appealed in print to the “Lockean thesis” (endorsed by Jim and Luc) that belief is probability above a threshold (perhaps contextually determined).
But I also admit to having some qualms about that thesis. Do you really believe that your lottery ticket will lose, as the Lockean thesis would apparently have it (for a big enough lottery)? After all, you don’t tear it up! Rather, you believe that it will very probably lose, but that is a different epistemic state. The Lockean thesis also has trouble explaining ‘cross-over’ cases. It seems that you do believe that your ticket lost when you read in the newspaper that the winning ticket was some other one. Yet you do not give probability 1 to the truth of the newspaper report. Indeed, there exists some other lottery in which the probability that a given ticket loses is higher, but in which you do not believe that the ticket will lose. So we have a belief with lower probability than a non-belief. Finally, the Lockean thesis seems to make it too easy to rationally hold inconsistent beliefs; indeed, inconsistency seems to be forced in lottery cases if we set the threshold for belief below 1. On the other hand, if we set it at 1, then belief seems all too hard to come by. Either way, something has gone wrong—arguably, something in the formal model of belief, rather than in belief itself.

Be that as it may, my point is that while there is clearly no preface “paradox” for degrees of belief—and this is an important insight—still one might think that the preface paradox for binary belief remains. Similarly, Ned Hall gives a trenchant Bayesian analysis of the ‘surprise exam paradox’. But again, one might think that the paradox for binary belief remains. If you don’t like these examples, I’m sure you’ll find others that you do. My point ought to be a platitude: precisely because a model must differ from a target system in order to be a model of that system, one has to be careful that a result reached in the model can be faithfully applied to the system. The ‘antithesist’ in me is here drawing attention to cases in which one may read too much into the model.
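The lottery argument against a threshold below 1 comes down to simple arithmetic, as a minimal Python sketch shows. The sketch rests on several assumptions. The lottery is fair and has exactly one winner. The threshold is a single fixed number with no contextual variation. One believes a proposition just in case its probability exceeds the threshold. The function names are hypothetical. Once 1/n falls below 1 minus the threshold, the agent believes of each of the n tickets that it will lose, and also believes that some ticket wins. No possible outcome makes all of those beliefs true. The last function computes the arithmetic behind the preface, again assuming independent claims: each claim can clear the threshold while their conjunction falls far below it.

```python
def believes(probability, threshold):
    """Lockean thesis as modeled here: believe X iff P(X) exceeds the threshold."""
    return probability > threshold


def lottery_beliefs(num_tickets, threshold):
    """Propositions a Lockean agent believes about a fair one-winner lottery.

    Each belief is a tuple: ("loses", i) for "ticket i loses", or ("some ticket wins",).
    """
    p_lose = 1 - 1 / num_tickets  # chance that any given ticket loses
    beliefs = [("loses", i) for i in range(1, num_tickets + 1)
               if believes(p_lose, threshold)]
    if believes(1.0, threshold):  # it is certain that some ticket wins
        beliefs.append(("some ticket wins",))
    return beliefs


def consistent(beliefs, num_tickets):
    """True iff some possible outcome (a choice of winning ticket) makes every belief true."""
    def true_in(belief, winner):
        if belief[0] == "loses":
            return belief[1] != winner
        return True  # "some ticket wins" holds in every outcome

    return any(all(true_in(b, w) for b in beliefs)
               for w in range(1, num_tickets + 1))


def conjunction_probability(p_each, num_claims):
    """Preface arithmetic, assuming the claims are probabilistically independent."""
    return p_each ** num_claims


if __name__ == "__main__":
    threshold = 0.95
    for n in (10, 100):
        beliefs = lottery_beliefs(n, threshold)
        print(f"{n} tickets: {len(beliefs)} beliefs, consistent: {consistent(beliefs, n)}")
    # A book of 300 claims, each held with probability 0.99:
    print(f"P(all 300 claims true) = {conjunction_probability(0.99, 300):.3f}")
```

With 10 tickets, no ticket clears the 0.95 threshold and the agent’s beliefs are consistent. With 100 tickets, all 100 “ticket i loses” beliefs clear it, and together with “some ticket wins” they are jointly unsatisfiable.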
I am also reminded of a salutary caution that Paul Benacerraf has given in a few places: beware of philosophers claiming to derive philosophical conclusions from formal results, and in particular from mathematical facts. “The point is that you need some further premises - and in [a case that he considers], clearly philosophical ones… I am making a sort of Duhemian point about philosophical implications. You need some to get some.” Here are some examples of violations of Benacerraf’s cautionary words:

- Penrose and Lucas: Gödel’s theorem shows that minds are not machines.
- Putnam: the Löwenheim-Skolem theorem shows that realism is false.
- Various people: the Dutch book theorem shows that rational credences must be probabilities.
- Forster and Sober: “A literal reading of Akaike’s Theorem is that we should use the best fitting curve from the family with the highest estimated predictive value.” (1994, p. 18, my emphases).
- “Arrow’s impossibility theorem shows that democracy is dead”, as I’ve heard a political scientist say.

This segues nicely into another concern about the use of formal methods, which I call formalism fetishism. We see it all too often: someone representing some philosophical problem with triple integrals, or tensors, or topologies, or topoi, just because they can. Why say it in English when you can say it with a self-adjoint operator instead?! And you can’t help suspecting that in some cases there’s at least an unconscious desire to impress or to intimidate one’s audience. As James Bond’s girlfriend (in GoldenEye) would say: “Boys with toys!” If nothing else, there is a risk that you will lose your audience, or turn it off. (On the other hand, if they’re formalism fetishists, you may instead turn them on!)

1(iv) Synthesis

[I plan to add to this section.]

As in all things, the answer, of course, is to exercise good judgment when using formal methods. I find Lewis’s use of formal methods to be exemplary.
I am especially drawn to his work on counterfactuals, on causation, and of course on probability and decision theory. He used such methods sparingly and judiciously, always to illuminate, and to make insights easier to come by and to understand. His work serves as a model to me. The points I made in support of the ‘thesis’ live in perfect harmony with those in support of the ‘antithesis’. There’s no denying the heuristic and clarificatory value of formal methods. But they are like the proverbial ladder that one kicks away: once they have served their purpose, I want to see their results translated back into plain English. And where some formalism is used to aid the presentation of a philosophical argument, let us not mistake it for the argument itself.

2. Traditional epistemology vs Bayesian epistemology: thesis, antithesis, and synthesis

2(i) What are traditional epistemology and Bayesian epistemology?

Bayesianism is our leading theory of uncertainty. Epistemology is traditionally defined as the theory of knowledge. So “Bayesian epistemology” may sound like an oxymoron. Bayesianism, after all, studies the properties and dynamics of degrees of belief, understood to be probabilities. Traditional epistemology, on the other hand, places the singularly non-probabilistic notion of knowledge at center stage, and to the extent that it traffics in belief, that notion does not come in degrees. So how can there be a Bayesian epistemology?

According to one view, there cannot: Bayesianism fails to do justice to essential aspects of knowledge and belief, and as such it cannot provide a genuine epistemology at all. According to another view, Bayesianism should supersede traditional epistemology: where the latter has been mired in endless debates over skepticism and Gettierology, Bayesianism offers the epistemologist a thriving research program.
I will advocate a more moderate view: Bayesianism can illuminate various long-standing problems of epistemology, while not addressing all of them; and while Bayesianism opens up fascinating new areas of research, it by no means closes down the staple preoccupations of traditional epistemology.

The contrast between the two epistemologies can be traced back to the mid-17th century. Descartes regarded belief as an all-or-nothing matter, and he sought justifications for his claims to knowledge in the face of powerful skeptical arguments. No more than four years after his death, Pascal and Fermat inaugurated the probabilistic revolution, writ large in the Port-Royal Logic. In this new approach, the many shades of uncertainty are represented with probabilities, and rational decision-making is a matter of maximizing expected utilities (as we now call them). Correspondingly, the Cartesian concern for knowledge fades into the background, and an alternative, more nuanced representation of epistemic states takes the limelight. Theistic belief provides a vivid example of the contrasting orientations. Descartes sought certainty in the existence of God grounded in apodeictic demonstrations. Pascal, by contrast, explicitly shunned such alleged ‘proofs’, arguing instead that our situation with respect to God is like a gamble, and that belief in God is the best bet—thus turning the question of theistic belief into a decision problem which he, unlike Descartes, had the tools to solve.

From its eponym, the Reverend Thomas Bayes, who wrote a century later, Bayesian epistemology inherits an important theorem that underwrites certain calculations of conditional probability central to confirmation theory. But really ‘Bayesian epistemology’ is something of a misnomer; ‘Kolmogorovian epistemology’ would be far more appropriate. Bayes’ theorem is just one of many theorems of the probability calculus.
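For reference, here are the ratio formula and a two-step derivation of Bayes’ theorem from it. The notation is assumed, since the text does not fix one: H and E are propositions with P(E) > 0 and P(H) > 0, and ∧ is conjunction. The derivation shows the sense in which Bayes’ theorem is one consequence among many of the probability calculus rather than an independent principle.

```latex
% Ratio formula (definition of conditional probability), for P(E) > 0:
P(H \mid E) = \frac{P(H \wedge E)}{P(E)}

% The same definition with the roles of H and E swapped, for P(H) > 0:
P(H \wedge E) = P(E \mid H)\, P(H)

% Substituting the second line into the first yields Bayes' theorem:
P(H \mid E) = \frac{P(E \mid H)\, P(H)}{P(E)}
```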
Bayes’ theorem provides just one way to calculate a conditional probability, when various others are available, all ultimately deriving from the usual ratio formula; and often conditional probabilities can be ascertained directly, without any calculation at all.

When I speak of ‘traditional epistemology’, I lump together a plethora of positions as if they formed a monolithic whole. For my purposes, their common starting point is regarding the central concepts of epistemology to be knowledge and belief; they then go on to study the properties, grounds, limits, and so on of these binary notions. I also speak of ‘Bayesianism’ as if it were a unified school of thought, when in fact there are numerous intra-mural disputes. I. J. Good jokes that there are “46,656 ways to be a Bayesian”, while I will mostly pretend that there is just one. By and large, the various distinctions among Bayesians will not matter for my purposes. As a good (indeed, a Good) Bayesian might say, my conclusions will be robust under various precisifications of the position. Many traditional problems can be framed, and progress can be made on them, using the tools of probability theory. But Bayesian epistemology does not merely recreate traditional epistemology; thanks to its considerable expressive power, it also opens up new lines of enquiry.

I can now bring out several points of contrast between traditional and Bayesian epistemology. I have noted that ‘knowledge’ and ‘belief’ are binary notions, to be contrasted with the potentially infinitely many degrees of ‘credence’ (corresponding to all the real numbers in the [0, 1] interval). ‘Knowledge’ is famously not merely ‘justified true belief’, but many epistemologists hope that some elusive ‘fourth condition’ will complete the analysis—some kind of condition that rules out cases in which one has a justified true belief by luck, or for some anomalous reason.
Notice that three of these four conditions may be characterized as objective, with ‘belief’ providing the only subjective component. This is in sharp contrast to orthodox Bayesianism, which refines and analyzes this doxastic notion, but which has no clear analogue of the ‘objective’ conditions. Most importantly, Bayesianism apparently has nothing that corresponds to the factivity of knowledge (one can only know truths); the convergence theorems hardly suffice. And even when our beliefs fall short of knowledge, still it is a desideratum that they be true; but the Bayesian seems to have no corresponding desideratum for intermediate credences, which are its stock-in-trade. When you assign, for example, probability 0.3 to its raining tomorrow, what sense can the Bayesian make of this assignment being true? (We will return to this point at the end.)

It is also dubious whether Bayesianism can capture ‘justification’ or any ‘fourth condition’ on knowledge. The Bayesian might try to parlay the convergence theorems into surrogates for justification or for the elusive ‘fourth condition’ for knowledge. She might insist that such convergences are based on evidence, and that they do not happen by luck, or for some anomalous reason, but are probabilistically guaranteed. But again, I really don’t think they do the job. I currently have a justified, non-accidentally true belief that I am typing these words in Miami. How could a convergence theorem shed light on that?

Given the striking differences between traditional and Bayesian epistemology, are there reasons to prefer one to the other?

2(ii) Thesis: Bayesian epistemology is superior to traditional epistemology

Jeffrey (1992), a famous Bayesian, writes:

knowledge is sure, and there seems to be little we can be sure of outside logic and mathematics and truths related immediately to experience.
It is as if there were some propositions – that this paper is white, that two and two are four – on which we have a firm grip, while the rest, including most of the theses of science, are slippery or insubstantial or somehow inaccessible to us … The obvious move is to deny that the notion of knowledge has the importance generally attributed to it, and to try to make the concept of belief do the work that philosophers have generally assigned the grander concept. I shall argue that this is the right move.

It becomes immediately clear that Jeffrey has in mind here degrees of belief, understood as subjective probabilities. He goes on to suggest two main benefits of the Bayesian framework:

1. Subjective probabilities figure in decision theory, an account of how our opinions and our desires conspire to dictate what we should do. The desirability of each of our possible actions is measured by its expected utility, a probability-weighted sum of the utilities associated with that action. To complete the argument, we should add that traditional epistemology offers no decision theory (recall Descartes vs Pascal). Rational action surely cannot be analyzed purely in terms of the binary notions of knowledge and belief.

2. Observations rarely deliver certainties; rather, their effect is typically to raise our probabilities for certain propositions (and to lower our probabilities for others), without any of them reaching the extremes of 1 or 0. Traditional epistemology apparently has no way of accommodating such less-than-conclusive experiential inputs, whereas Jeffrey conditionalization is tailor-made to do so.

We may continue the list of putative advantages of Bayesianism over traditional epistemology at some length. Here I add my top ten:

3. Implicit in the quote from Jeffrey is the thought that knowledge is unforgiving. Its standards are so high that they can rarely be met, at least in certain contexts.
(This is related to the fact that knowledge does not come in degrees – near-knowledge is not knowledge at all.) This in turn plays into the hands of skeptics. But it is harder for skeptical arguments to get a toehold against the Bayesian. For example, the mere possibility of error regarding some proposition X undermines a claim of knowledge regarding X, but it is innocuous from a probabilistic point of view: an agent can simply assign X some suitable probability less than 1. Indeed, even an assignment of probability 1 is consistent with the possibility of error. A dart thrown at random at a representation of the [0, 1] interval has probability 1 of hitting an irrational number, even though it might fail to do so.

4. Moreover, it is a commonplace that doxastic states come in degrees, and the categories of ‘belief’ and ‘knowledge’ are too coarse-grained to do justice to this fact. You believe, among other things, that 2 + 2 = 4, that you have a hand, that London is in England, and (say) that Khartoum is in Sudan. But you do not have the same confidence in all these propositions, as we could easily reveal through your betting behavior and other decision-making that you might engage in. The impoverished nature of ‘belief’ attributions is only exacerbated when we consider the wide range of propositions in which you have less confidence: that this coin will land heads, that it will rain tomorrow in Novosibirsk, and so on. We may lump your attitudes to them all together as ‘suspensions of belief’ (as Descartes would), but that obscures their underlying structure. Such attitudes are better understood as subjective probabilities.

5. Relatedly, the conceptual apparatus of deductivism is impoverished, and comparatively little of our reasoning can be captured by it, either in science or in daily life (pace Popper and Hempel). After all, whether we like it or not, our epistemic practices constantly betray our commitment to relations of support that fall short of entailment.
We think that it would be irrational to deny that the sun will rise tomorrow, to project ‘grue’ rather than ‘green’ in our inductions, or to commit the gambler’s fallacy. It seems that probability theory is required to understand such relations.

6. Bayesianism has powerful mathematical underpinnings. It can help itself to a century of work in probability theory and statistics. Traditional epistemology may appeal to the occasional system of epistemic or doxastic logic, but to nothing comparable to the formidable formal machinery that we find in the Bayesian’s tool kit.

7. Accordingly, probabilistic methods have much wider application than any formal systematization of ‘knowledge’ or ‘belief’. Look at the sciences, social sciences, and engineering if you need any convincing of this.

8. Bayesianism has provided yeoman service in the rational reconstruction of science. I’m thinking here of Dorling’s and Franklin’s Bayesian reconstructions of some key scientific episodes – and obviously scientific knowledge is an important part of epistemology. Traditional epistemology does not seem to do so well here. Imagine trying to illuminate some scientific episode purely with ‘K’s and ‘B’s!

9. Bayesianism has a natural way of integrating explicitly probabilistic theories into epistemic states, via the Principal Principle. Thus a chance statement from quantum mechanics, say, can be seamlessly transformed into a corresponding subjective probability statement. Likewise, if an agent spreads her credences over a number of such theories, she can arrive at her credence for a particular statement by an exercise of the law of total probability. How can traditional epistemology capture this?

10. Bayesianism has a symbiotic relationship with causation: witness the fruitfulness of research on Bayesian networks. Traditional epistemologists should pay heed.

11. There are many arguments for Bayesianism, which collectively provide a kind of triangulation on it.
For example, Dutch Book arguments provide an important defence of the thesis that rational credences are probabilities. An agent’s credences are identified with her betting prices; it is then shown that she is susceptible to a sure loss iff these prices do not conform to Kolmogorov’s axioms. There are also arguments from various decision-theoretic representation theorems (Ramsey 19xx, Savage 19xx, Joyce 19xx), from calibration (van Fraassen 19xx, Shimony 19xx), from ‘gradational accuracy’ or minimization of discrepancy from truth (Joyce 19xx), from qualitative constraints on reasonable opinion (Cox 19xx), and so on. Moreover, there are various arguments in support of conditionalization and Jeffrey conditionalization—e.g., Dutch Book arguments (Lewis 200x, Armendt 1980) and arguments from minimal revision of one’s credences (Diaconis and Zabell). Again, there is nothing comparable in traditional epistemology.

12. Finally, a pragmatic argument for Bayesianism comes from an evaluation of its fruits. It illuminates various old epistemological chestnuts—in particular, various paradoxes in confirmation theory. The Bayesian begins with the idea that confirmation is a matter of probability-raising:

(*) E confirms H (relative to probability function P) iff P(H | E) > P(H).

We may also define various probabilistic notions of comparative confirmation, and various measures of evidential support (see Fitelson xx). The Bayesian then shows how important intuitions about confirmation can be vindicated—for example, that black ravens confirm ‘all ravens are black’ more than white shoes do, or that green emeralds confirm ‘all emeralds are green’ more than they confirm ‘all emeralds are grue’. Again, it seems that no analysis couched purely in terms of ‘knowledge’ and ‘belief’ could pay such dividends. But the traditional epistemologist has plenty of ammunition with which to fight back.

2(iii) Antithesis: Bayesian epistemology is not superior to traditional epistemology

1. Bayesians introduce a new technical term, ‘degree of belief’, but they struggle to explicate it. To be sure, the literature is full of nods to betting interpretations, but these meet a fate similar to that of behaviorism—indeed, a particularly localized behaviorism that focuses solely on the rather peculiar kind of behavior that is mostly found at racetracks and casinos. Other characterizations of ‘degree of belief’ that fall out of decision-theoretic representation theorems are also problematic. (See Eriksson and Hájek 2007.) ‘Belief’ and ‘knowledge’, by contrast, are so familiar to the folk that they need no explication.

2. Recall that there is no notion of truth for an intermediate degree of belief. Yet truth is said to be the very aim of belief, and truth is usually thought to consist in correspondence to the way things are. Moreover, we want our methods for acquiring beliefs to be reliable, in the sense of being truth-conducive. What is the analogous aim, notion of correspondence, and notion of reliability for the Bayesian? The terms of her epistemology seem to lack the success-grammar of words like ‘aim’, ‘correspondence’, and ‘reliable’. For example, one can assign very high probability to the period at the end of this sentence being the creator of the universe without incurring any Bayesian sanction: one can do so while assigning correspondingly low probability to the period not being the creator, and while dutifully conditionalizing on all the evidence that comes in. Traditional epistemology is not so tolerant, and rightly so.

3. Relatedly, the Bayesian does not answer the skeptic, but merely ignores him. Bayesianism doesn’t make skeptical positions go away; it merely makes them harder to state.

4. The Bayesian similarly lacks a notion of ‘justification’—or to the extent that she has one, it is too permissive. Any prior is a suitable starting point for a Bayesian odyssey—yet mere conformity to the probability calculus is scant justification.
Now, the Bayesian will be quick to answer this and the previous objections in a single stroke. She will appeal to various convergence theorems. But see my discussion above of the limitations of such theorems. And I don’t see how they help one iota in addressing simple skeptical challenges, such as: how do I know, right now, that I have a hand?

5. Bayesian epistemology conflates genuine epistemic possibilities with impossibilities, and genuine epistemic necessities with contingencies. Probabilities have what I call ‘blurry vision’: notoriously, probability-zero events can happen, and probability-one events can fail to happen.

6. The triumphs of Bayesian confirmation theory touted above are supposedly offset by the so-called problem of old evidence (Glymour 1980). Note that if P(E) = 1, then E cannot confirm anything by the lights of (*): in that case P(H ∧ E) = P(H), so P(H | E) = P(H ∧ E)/P(E) = P(H ∧ E) = P(H). Yet we often think that such ‘old evidence’ can be confirmatory. Consider the evidence of the advance of the perihelion of Mercury, which was known to Einstein at the time that he formulated general relativity theory, and thus (we may assume) was assigned probability 1 by him. Nonetheless, he rightly regarded this evidence as strongly confirmatory of general relativity theory. The challenge for Bayesians is to account for this. (See Branden’s 200x for discussion. Jim Joyce and Jim Hawthorne also have much to say about the problem.)

7. Still on Bayesian confirmation theory: when I gave above the usual Bayesian analysis (*) of confirmation, I followed the common practice of downplaying the relativity to the probability function ‘P’ by secreting it away in parentheses, as if it were a minor caveat or afterthought. But really ‘P’ should be a completely equal partner in a 3-place relation of the form <H, E, P>—quite unlike ‘confirmation’ in ordinary English, which is a 2-place relation. A more accurate but less rhetorically effective name for the relation between E and H would be “P-enhancement”.¹
But put that way, one wants to revisit the putative Bayesian solutions to the ravens paradox, the grue paradox, and so on. For example, it seems less satisfying to be told that the evidence of a black raven P-enhances ‘all ravens are black’ more than the evidence of a white shoe does for some suitable P—especially since there are other probability functions for which this inequality is reversed. And more precisely, confirmation relations are relativised to a probability space, <Ω, F, P>. But ‘E confirms H relative to <Ω, F, P>’ hardly glides off the tongue, and sounds even less like the 2-place relation that we were trying to analyze.

¹ Dave Chalmers suggested this name.

8. More generally, all Bayesian claims are model-relative. For example, claims of independence, of confirmation, and of exchangeability are relative to a probability function, and again that really means a probability space, so that they are at least 5-place relations. Knowledge is not like that. We don’t say: ‘I know that I have a hand relative to such-and-such model’—there is no mediation via a model. The traditional epistemologist protests that Bayesians thus distance themselves from the world: rather than hooking up directly with the world, they are caught up in models of the world. “Cut out the middleman!” says the traditional epistemologist—there is too much distance between the epistemic agent and the world. Moreover, the Bayesian does not have a good theory of what makes a model good. This is related to the concern that the Bayesian does not do justice to truth. It also comes down to the notorious problem of the prior for the Bayesian. Traditional epistemology has no analogue of that problem.

9. Bayesians have trouble avoiding the terms of traditional epistemology. They slip into plausible informal glosses using familiar words like ‘learning’, ‘knowledge’, ‘belief’, ‘evidence’, ‘forgetting’, etc. One also sees this in the classic arguments for probabilism – e.g.
we are to assume that ‘the Dutch Bookie doesn’t KNOW anything that the agent doesn’t KNOW’. But the official Bayesian story is about ‘ideally rationally held certainties’, and our intuitions about that rather obscure, rarefied notion are less secure. The Bayesian changes the topic from things that we have a good grip on (‘knowledge’, etc.): first, to ‘probabilistic certainty’ (which is not even genuine certainty, as we have seen); second, to RATIONALITY, which is a term of art, not all that familiar on the street; third, to IDEALLY rationally held certainty, and who knows what that is? By the time we’re done, we’re a long way from ‘learning’, ‘knowledge’, etc.—the core epistemological concepts that we understood well. Don’t get fooled, then, by the informal gloss when judging some Bayesian claim (“the agent comes to learn X …”). Keep in mind the strict interpretation of rational credence, and so on. Even Jeffrey, the author of “Probable Knowledge”, backslides and uses the terms of traditional epistemology, having claimed to eschew them. For example, he speaks of “learning”. But “learning” has a success grammar; merely pumping up or pumping down subjective probabilities across a partition, à la Jeffrey conditioning, does not guarantee any such success. Similarly, Bayesians sometimes acknowledge a source of contextualism by conditionalizing on background knowledge ‘K’. But this arguably mixes the traditional and Bayesian epistemologies in an awkward way – what is the Bayesian treatment of that ‘K’? If it is merely ‘rationally held probabilistic certainty’, will that meet all the epistemologist’s needs?

Synthesis

[Again, time allowing, I expect to add more.] There should be more cross-fertilization between traditional and Bayesian epistemology. It’s not as if one must swear allegiance to one of the epistemologies and shun the other. Indeed, a single philosophical paper could fruitfully traffic in both.
But often it does seem that research programs on each side run in comparative isolation from, and ignorance of, each other. Let me draw attention to some issues that play a comparatively minor role in traditional epistemology, but which are central in the Bayesian framework – more power to that framework for drawing our attention to them. Traditional epistemologists would do well to find ways of embracing them. (If that requires them to become Bayesians, more power to Bayesianism!) But as we will see, the traditional epistemologist has some lessons for the Bayesian as well.

1. Bayesians offer a new notion of consistency: probabilistic coherence. We could argue about the extent to which it merely generalizes deductive logic’s notion of consistency, or rather offers a genuinely new notion, but either way it is important. See Jim Hawthorne on related issues: while in deductive logic, consistency goes hand-in-hand with truth-preservation, the two notions cleave apart in inductive logic.

2. Some Bayesians codify a kind of diachronic consistency, most famously in van Fraassen’s Reflection Principle. He analogizes violations of Reflection to Moore’s paradoxical sentences, something the traditional epistemologist has long cared about. I am not aware of any analogue of Reflection in traditional epistemology, which, as far as I can tell, is less interested in diachronic principles.

3. Bayesianism gives us a new notion of independence that does not seem to reduce to other such notions—logical, causal, counterfactual, metaphysical, or what have you. Yet we do have firm intuitions about some cases of probabilistic independence – think of the way we are supposed to recoil at the gambler’s fallacy. There are obvious ramifications for induction here. Now there’s a traditional epistemological concern if ever there was one!

4. Exchangeability has proved to be a fecund notion in the Bayesian’s hands.
In particular, it has earned its keep in formulating and illuminating problems in induction—more grist for the traditional epistemologist’s mill.

Now, going in the other direction: how can traditional epistemology inform Bayesian epistemology? One way is in the very statement of Bayesianism. We all know that it portrays rational credences as obeying the probability calculus—but what is that exactly? We all know that one of its axioms is a normalization axiom—but what is that exactly? On one formulation, it says that all logical truths should receive probability 1. But why stop there? How about a priori truths more generally? So perhaps the normalization axiom could require all a priori truths to be assigned probability 1 by an agent. Here we enter traditional epistemology’s concern with knowledge of the a priori.

I think the most important way in which traditional epistemology should inform Bayesian epistemology is by emphasizing the importance of the truth of epistemic states. I see three ways the Bayesian might try to respect the desideratum of alignment with truth:
- À la Joyce: gradational accuracy
- À la Carnap: agreement with logical probability
- À la me: agreement with objective chance. (Elsewhere I argue in favor of this.)

In our discussion, I’d be interested to hear your thoughts on how traditional epistemology in turn can further inform and guide Bayesian epistemology. May the dialectic continue!²

² I thank Dave Chalmers for helpful discussion at some idyllic Caribbean locales, and Lina Eriksson for helpful discussion in the rather less exotic Canberra.
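The first of the three routes to alignment with truth listed above, Joyce-style gradational accuracy, can be made concrete with a small computation. What follows is a minimal sketch under stated assumptions, not Joyce's own formalism: it assumes the Brier score (the sum of squared distances between credences and truth values) as the measure of inaccuracy, and it considers only the two-cell partition {X, not-X}. It shows that credences of 0.6 in X and 0.6 in not-X, which violate the probability calculus, are less accurate in every possible world than the coherent credences obtained by projecting them onto the set of probability functions. The helper names `brier`, `is_coherent`, `project`, and `dominates` are hypothetical, introduced only for this illustration.

```python
# Minimal sketch (illustrative assumption: Brier score as inaccuracy measure)
# of accuracy dominance for credences over the partition {X, not-X}.

# Possible worlds, written as truth values for (X, not-X).
WORLDS = [(1, 0), (0, 1)]


def brier(credences, world):
    """Inaccuracy: sum of squared gaps between credences and truth values."""
    return sum((c - v) ** 2 for c, v in zip(credences, world))


def is_coherent(credences, tol=1e-9):
    """Probabilistic coherence on this partition: values in [0, 1] summing to 1."""
    return all(0 <= c <= 1 for c in credences) and abs(sum(credences) - 1) < tol


def project(credences):
    """Orthogonal projection of (c(X), c(not-X)) in [0, 1]^2 onto c(X) + c(not-X) = 1."""
    a, b = credences
    return ((1 + a - b) / 2, (1 - a + b) / 2)


def dominates(better, worse):
    """True if `better` is strictly less inaccurate than `worse` in every world."""
    return all(brier(better, w) < brier(worse, w) for w in WORLDS)


if __name__ == "__main__":
    incoherent = (0.6, 0.6)          # sums to 1.2, violating the probability axioms
    repaired = project(incoherent)   # the coherent point (0.5, 0.5)

    for w in WORLDS:
        # Prints the world, then the inaccuracy of each credence assignment there.
        print(w, round(brier(incoherent, w), 2), round(brier(repaired, w), 2))
    print(is_coherent(incoherent), is_coherent(repaired), dominates(repaired, incoherent))
```

Running it prints an inaccuracy of 0.52 for the incoherent credences and 0.5 for the projected ones in both worlds, followed by `False True True`. Because both worlds' truth-value vectors lie on the line c(X) + c(not-X) = 1, the Pythagorean theorem guarantees that projecting an incoherent point onto that line strictly reduces its distance to each world; this is the simplest instance of the accuracy-dominance reasoning that Joyce's argument generalizes to a wider class of inaccuracy measures.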