FACIAL FEATURE DETECTION PROJECT REPORT

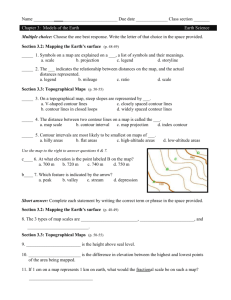

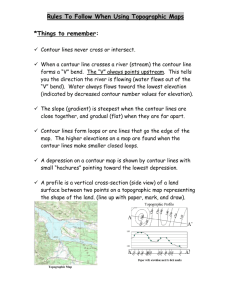

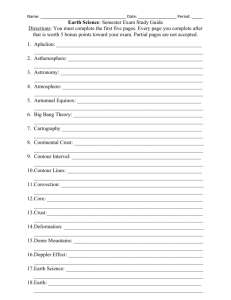

Amit Pillay & Ravi Mattani
Instructor: Dr. Roger Gaborski
Course: 405.757

Abstract
The aim of this project was to develop an algorithm that detects human facial features such as the mouth, nose and eyes in a full frontal face image. We adopted a multi-step process to achieve this goal. To detect the face region, a skin-color segmentation method was used. Morphological techniques were then used to fill the holes created by the segmentation process. Skeletonization yielded a skeleton of the face, from which face contour points were extracted. The facial features can then be located in the interior of the face contour. We tested our method on several different facial images and achieved a detection rate of 90%.

Introduction
Facial feature extraction is considered a key requirement in many applications such as biometrics, facial recognition systems, video surveillance and human-computer interfaces. Reliable face detection is therefore required for the success of these applications. Human facial feature extraction is not an easy task. The human face varies from person to person, and the race, gender, age and other physical characteristics of an individual have to be taken into account, which makes this a challenging problem in computer vision. Facial feature detection aims to detect and extract specific features such as the eyes, mouth and nose.

Previous Work & Research in the Field of Face Recognition
Researchers in the field of face recognition have reported many face extraction methods. The main trend is to combine image information with knowledge of the face. To discriminate face candidates from the neck, ears and incorrectly segmented parts, a shape analysis of the color segmentation result is necessary. One method (Saber and Tekalp, 1998; Lee et al., 1996) first fits an ellipse to the segmented face for registration.
Most of these methods do not take into account the misalignment caused by the ears and neck. Jian-Gang Wang and Eric Sung [1] proposed a morphological procedure to analyze the shape of the segmented face region, an unexplored approach in face detection research. We have incorporated some of the methods proposed in their article. The article formulates several rules for locating the contour of the face, based primarily on the facial skeleton and on knowledge of the face. Locating the contour reduces the search region for the facial features, so that features such as the mouth, nostrils and eyes can be located more accurately within the face contour. Terrillon et al. (1998) discuss how other body parts, such as the neck, may lead to face localization errors. A different approach was presented by Sobottka and Pitas (1998), where the features are detected first and the contour is then tracked using a deformable model. Brunelli and Poggio (1993) use dynamic programming to follow the outline in a gradient intensity map of an elliptical projection of the face image. Haralick and Shapiro (1993) demonstrate how morphological operations can simplify image data while preserving its essential shape characteristics and eliminating irrelevant details.

Facial feature detection is a multi-step process. We incorporated several methods and suggestions from the articles mentioned above; for each step we tried several methods and selected the one that gave the best results. To detect the face region, we used the skin-color segmentation method developed by Yang and Waibel (1995), described in Section 2.1. To segment the face region, we used the morphological processes of dilation and erosion, described in detail in Section 2.2. Skeletonization was used to extract the face contour points; this process is also described in Section 2.2.
The skeleton thus obtained is used for line segmentation, which includes line tracing and line merging. The facial features can then be located in the interior of the face contour; this facial feature extraction process is described in Section 2.3.

Flowchart depicting the entire process:
Input image
-> Skin-color segmentation
-> Morphological image processing
-> Skeletonization
-> Line segmentation and contour detection
-> Facial feature extraction using facial geometry
-> Output image

Section 2.1: Skin Segmentation
This is one of the most important steps in the facial feature extraction process. The efficiency of color segmentation of a human face depends on the color space selected. We used the finding of Yang and Waibel (1995, 1996) that the skin colors of different people are very closely grouped in the normalized r-g color plane. We therefore took some seed pixels from the face area, obtained their spread in the normalized r-g plane, and classified the pixels that were close enough to this spread as belonging to the face. Fig. 1 shows our results.

Fig. 1: Skin color segmentation. a) Original image b) Skin color segmentation

Section 2.2: Morphological Image Processing
(Filling the interior holes of the face region and extracting the face skeleton to determine the contour points)
Morphological image processing is a collection of techniques for digital image processing based on mathematical morphology. Since these techniques rely only on the relative ordering of pixel values, not on their numerical values, they are especially suited to the processing of binary images and grayscale images whose light transfer function is not known. [2]

Definitions of the morphological operations used in our project:
Erosion: Erosion is an operation that thins or shrinks the objects in a binary image. Erosion is performed by the IPT function imerode().
Dilation: Dilation is an operation that grows or thickens the objects in a binary image.
Dilation is performed by the IPT function imdilate().

Accurate computation of the contour points is very important for successful facial feature extraction, because the contour determines the search regions. The neck and ears have the same color as the face, so they remain connected to the face region after segmentation; we therefore need to separate them to locate the facial features better. The segmented face region also contains holes corresponding to the eyes, nose, mouth, etc. To obtain the skeleton, we first filled these holes by applying multiple morphological dilation operations, and then applied the same number of erosion operations to the dilation result to restore the shape of the face. We then extracted the skeleton of the face using the IPT function bwmorph() in MATLAB. The ears and neck can be separated from the segmented face region by analyzing this skeleton. The figures below show the output obtained after each operation.

Figure a) The output after skin color segmentation
Figure b) The output after filling the interior holes of the face region using dilation and erosion
Figure c) The skeleton overlaid on the face region

Contour Tracing
Some vertices of the skeleton lines fit the contour of the human face, excluding the points that lie on the ears and neck. These fitting points can be found using the following set of rules [1].
Rule 1. Some face contour fitting points can be found by line tracing on the skeleton. The contour points should satisfy the following rules.
Rule 1.1. The contour fitting points should be the vertices of roughly horizontal skeleton line segments that are long enough (the threshold is set proportional to the length of the longest skeleton line segment).
Rule 1.2. The left vertex is selected as a candidate contour fitting point if most of the horizontal line segment lies to the left of the symmetry axis.
Rule 1.3. The right vertex is selected as a candidate contour fitting point if most of the horizontal line segment lies to the right of the symmetry axis.
Rule 1.4. The contour points should lie above a vertical position set at 3/4 of the height of the symmetry axis, measured from its top.
Rule 1.5. The points satisfying the above conditions are separated into two sets (left and right contour points), and each set is sorted from top to bottom.
Rule 1.6. If the difference between the horizontal coordinates of a point in the sorted right point set and either its preceding or its succeeding point is large enough (above a set threshold), then the …
Rule 2. The point set satisfying the above rules is doubled using the symmetry axis; i.e., for each left fitting point there is a corresponding right point that can be calculated using the symmetry axis, and vice versa.
All the resulting skeleton lines are checked against Rules 1 and 2 in turn. In this way, the points fitting the contour of the human face are collected while the points that lie on the ears and neck are excluded.

Section 2.3: Feature Extraction within the ROI
Once the contour has been found, we obtain the region of interest (ROI). Features such as the eyes, nose and mouth can be found within the ROI. We locate the mouth first; once the mouth is detected, the eyes and nose can be determined easily. We tried several methods for finding the lips. We performed edge detection using the Sobel operator to find the horizontal lines within the ROI. To find the vertical position of the line between the lips, we used the horizontal integral projection ph of the binary edge image in the search region, where ph is obtained by summing the pixel values in each row of the search region.
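The edge detection and projection steps can be sketched as follows. This is a minimal pure-Python illustration, not our MATLAB code: it assumes the search region is a grayscale image stored as a list of equal-length rows, uses the standard 3x3 Sobel kernel for horizontal edges with a hypothetical fixed `threshold`, and the function names `sobel_horizontal_edges`, `horizontal_projection` and `lip_line_row` are illustrative. The optional `row_start`/`row_end` arguments are an assumed way of constraining the vertical search area.

```python
def sobel_horizontal_edges(gray, threshold):
    """Return a binary map (list of rows of 0/1) of horizontal edges.

    Uses the 3x3 Sobel kernel [[-1, -2, -1], [0, 0, 0], [1, 2, 1]], which
    responds to intensity changes in the vertical direction, i.e. to
    horizontal edges such as the line between the lips. Border pixels,
    where the kernel does not fit, are left as 0. `gray` must be non-empty.
    """
    h, w = len(gray), len(gray[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            below = gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
            above = gray[y - 1][x - 1] + 2 * gray[y - 1][x] + gray[y - 1][x + 1]
            # Mark the pixel as an edge if the vertical gradient is strong enough.
            edges[y][x] = 1 if abs(below - above) >= threshold else 0
    return edges


def horizontal_projection(binary):
    """Horizontal integral projection: ph[y] is the sum of the pixels in row y."""
    return [sum(row) for row in binary]


def lip_line_row(binary, row_start=0, row_end=None):
    """Row index in [row_start, row_end) where ph is maximal.

    Restricting the row range models constraining the vertical search area.
    Ties resolve to the topmost row because max() keeps the first maximum.
    Raises ValueError if the row range is empty.
    """
    ph = horizontal_projection(binary)
    if row_end is None:
        row_end = len(ph)
    return max(range(row_start, row_end), key=lambda y: ph[y])
```

For example, a 5x5 region whose top two rows are 0 and whose remaining rows are 100 gives, with a threshold of 200, edge pixels on rows 1 and 2, so ph = [0, 3, 3, 0, 0] and `lip_line_row` returns 1. A real implementation would more likely use the toolbox edge detector and a threshold chosen per image.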
Because the lip line is the longest horizontal line, its vertical position can be located where ph has its maximum value. We also used some prior knowledge about the face to locate the lips: the lips are centered horizontally in the lower half of the face and are isolated. We used this information to constrain the vertical position of the search area. Once the lips were located, the eyes and nose were found in a similar fashion, each time constraining the search area in the vertical direction.

Figure: Contour points extracted in the earlier process
Figure: Edges removed for detecting the facial features
Figure: The region of interest
Figure: Final results

Results & Analysis
Number of images tested: 30
Success: 90%

The following table shows the results for the first 13 images.

Image No. | Name     | Lips | Nose | Mouth
1         | c1m.jpg  |      |      |
2         | c2m.jpg  |      |      |
3         | c37m.jpg |      |      |
4         | c4m.jpg  |      |      |
5         | c5m.jpg  |      |      |
6         | c6m.jpg  |      |      |
7         | c7m.jpg  |      |      |
8         | c8m.jpg  |      |      |
9         | c9m.jpg  |      |      |
10        | c10m.jpg |      |      |
11        | c11m.jpg |      |      |
12        | c12m.jpg |      |      |
13        | c13m.jpg |      |      |

The results of one experiment, with its intermediate steps, are shown in the figures below. The experimental face images were downloaded from the www.faceresearch.org site; a total of 30 images of different people were selected at random. The results show that our method performs well, with a correct recognition rate of 90%. Some images showed wrong detection of facial features. An analysis of these images shows that our method failed to extract enough contour points from them because of poor skeleton extraction. The lack of contour points resulted in a restricted ROI in which the required features did not appear. One way to improve the skeleton extraction would be to make the face-region extraction process more general, followed by sufficient dilation and erosion.
The better the skin-region extraction, the better the resulting skeleton, and thus the better the extracted contour points. Another issue that limits this experiment is image size. Since the experiment was performed using the MATLAB Image Processing Toolbox (IPT), the running time of the program is a major issue: large images take longer to process, and some may not give the desired results because of image complexity.

Figure: a) Original face image b) Skin color segmentation
Figure: c) After dilation & erosion d) Skeleton of the image
Figure: e) Contour points f) ROI
Figure: g) After applying edge detection to the ROI h) Final image with features detected

References:
[1] Jian-Gang Wang, Eric Sung, "Frontal-view face detection and facial feature extraction using color and morphological operations."
[2] Rainer Stiefelhagen, Jie Yang, Alex Waibel, "A Model-Based Gaze Tracking System."
[3] Gonzalez, Woods & Eddins, Digital Image Processing Using MATLAB, Prentice Hall.
[4] Images taken from www.faceresearch.org
[5] Prof. Gaborski's lecture slides.
[6] www.wikipedia.com