scientific research merely on the off chance that what we hope to

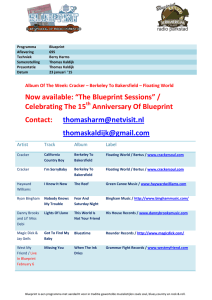
