Risk Analysis and the Safety of Dams

advertisement

THE PRACTICE OF RISK ANALYSIS AND THE SAFETY OF DAMS

Baecher, G.B.1 and Christian, J.T.2

ABSTRACT

Despite the best efforts of engineers to design conservatively, dams and other geotechnical structures do fail at relatively constant rates. While no engineer or engineering

organization designs dams to have a finite probability of failure, the use of risk analysis

techniques is growing in popularity as a means of dealing with the uncertainties in geotechnical performance. Risk analysis forces the engineer to confront uncertainties directly and to use best estimates of site conditions and performance in predicting performance. Uncertainties, rather than being dealt with by conservative assumptions, are

themselves treated as quantifiable entities. Methodologies that originated in the aerospace and nuclear industries are now being applied to geotechnical structures, and with

notable success. Nonetheless, the development of geotechnical risk analysis procedures

requires that the unique considerations of geotechnical uncertainties, different in many

ways from structural uncertainties, be confronted and dealt with. This is leading to risk

analysis procedures which are in themselves specially tailored to geotechnical applications.

INTRODUCTION

Engineers, and the organizations for which they work, do not build dams with an intentional probability of failure. Engineers work on one dam at a time, and they design it to

be safe. If they are uncertain about site conditions or flood frequencies, they design

conservatively. Engineers exercise the public trust. They are not gamblers, and for the

most part do not believe that nature is random. They believe that the world behaves according to fixed rules of physics, and their job is to work with those rules to plan, design, and build structures that behave as intended.

1

Professor and Chairman, Department of Civil and Environmental Engineering, University of Maryland,

College Park, MD 02472, USA. gbaecher@eng.umd.edu

2

Consulting Engineer, Waban, Massachusetts. christians@mediaone.net

Page 1 of 29

But dams do fail, and not just occasionally. Modern, well-designed dams operated by

competent authority fail at a rate about 10-4 per dam-year. Failure here means loss of

pool. Dam incidents, as defined by ICOLD, which are serious events that do not cause

loss of pool, happen at a rate more than ten times greater than the rate of failures. The

number 10-4 per dam-year sounds small, but in the United States, to take one example,

there are 75,000 dams over 7.7m (25 feet). The 10-4 rate implies an average of 7.5 dam

failures a year. Indeed, in the last ten years, the National Performance of Dams Program (McCann 1999) has recorded 440 dam failures in the U.S., of structures conforming to the Federal Guidelines on Dam Safety, that is, structures more than 7.7m (25 feet)

high or impounding more than 32 thousand cubic meters (25 acre-feet) of water. These

include many privately owned and many relatively small structures, which have a higher

rate of failure than large, government-owned structures. Most, but not all, of these failures occur during major storms. Hurricane Agnes (1972) alone may have caused 200

dam failures in the eastern United States.

Regulatory authorities and the public in general have grown ever more aware of the

risks posed by chemicals, consumer goods, and other products of industrial society.

They have also grown more aware of risks posed by infrastructures, including dams,

levees, and other water resource structures. They do not necessarily believe the engineering community’s assurances that a dam is safe. How should designers and the organizations that build and operate dams respond to this challenge? Despite misgivings

by some factions of the profession, increasingly the response is to turn to the risk analysis procedures pioneered and proven in the aerospace and nuclear power industries.

RATE OF FAILURE OF MODERN DAMS

Despite the difficulties, the International Congress on Large Dams (ICOLD) and its national affiliates, for example, the United States Committee on Large Dams (USCOLD)

and the Australian Committee on Large Dams (ANCOLD), have devoted a great deal of

attention to compiling information on dam failures and their causes. These are voluntary professional societies, but most dam-building agencies and engineers participate in

their activities. From the efforts of ICOLD and from the information generated by or-

Page 2 of 29

ganizations examining their own operations, engineers have developed a fairly clear picture of the causes of dam failures (International Commission on Large Dams 1995; International Commission on Large Dams. 1973)

The foremost cause of failure as cited in the catalogs is overtopping. More water flows

into the reservoir than the reservoir can hold or pass through its spillway. The excess

water has to go somewhere, and the most likely place is over the top of the dam. This

does serious damage to the dam, especially to an embankment dam, which is likely to

fail. At some dams, even when the outlet gates are fully open, the spillways are not

large enough to carry the water piling up behind the dam. Overtopping and inadequate

spillway capacity tend to be lumped together in the catalogues of dam failures.

Actually, the rate of failure by overtopping of modern, well-build dams operated by

competent authorities is small. Most of the overtopping failures recorded in the catalogs

are of dams build in earlier times, or dams that were poorly maintained, or operated by

other than competent authority. Almost all modern, large dams have benefited from

significant advances in hydrological science, including hydrological risk analysis, over

the past few decades, and are designed to conservative assumptions about the largest

flood they must be prepared to store or pass, the so-called, “probable maximum flood”

(PMF) in US practice. Indeed, Lave and Balvanyos (Lave and Balvanyos 1998) maintain that no major US dam has ever experienced a PMF, although probable maximum

precipitations (PMP) have been approached or exceeded (U.S. Bureau of Reclamation

1986).

Spillway capacity has a major influence on the likelihood of overtopping, but the way

the reservoir is operated is equally important. Organizations develop manuals to instruct operators in what to do in various situations, and the organizations assume that

operators know the procedures and follow them. Yet, this is not always the case. As

with failures in many spheres, some failures happened because the operators did not follow the prescribed procedures. An example is the Euclides da Cunha dam in Brazil. In

1977, during a torrential rainstorm, water in the reservoir rose faster than the rate at

which the spillway gates were supposed to be opened. Operators were reluctant to open

Page 3 of 29

the gates because the resulting flood would affect their families, friends, and property

downstream. They waited too long, and the result was a major dam failure.

The next most common cause of failure is internal erosion. This starts when the velocity of the water seeping through an embankment or abutment becomes so large that it

starts to move soil particles. Once particles are removed, the channel becomes larger, it

attracts more flow, which picks up more particles, and enlarges the channel further. The

end of this process can be a channel so large that the flow through it destroys the dam or

abutment. On June 5, 1976, the Bureau of Reclamation’s 300 foot-high Teton Dam

failed. The dam had only recently been completed, and the reservoir had never been

filled. Unusually large snow melt in the Grand Teton mountains sent water into the reservoir more rapidly than had been anticipated, filling the reservoir to capacity. The outlet works were not yet operating, so the water could not be diverted. Engineers still debate how the failure occurred, but internal erosion created a full breach near the right

abutment that allowed the pool to escape in a wave that engulfed the towns downstream.

Engineers have learned a great deal about internal erosion and the effects of seepage at

dam sites. They go to great lengths to control seepage under and around dams. This

can involve constructing walls to contain the seepage or pumping concrete at high pressure into the rock to seal openings. Embankments have multiple layers with different

permeabilities and grain sizes, some to prevent seepage, some to channel the flow safely

into drains, and some to prevent particles from migrating under seepage pressures and

initiating piping. To make sure that all this is working properly, engineers install devices to measure movements and pressures and monitor the readings regularly. A modern

dam is a complicated and ever-changing structure with which the operators interact continuously.

People who deal with older dams recognize that they were not built with the same

knowledge and experience as a modern dam. This is particularly true of dams that were

built and maintained by inexperienced groups without adequate engineering support.

The Johnstown flood of 1889, one of the worst public disasters in U. S. history, killed

about 2200 people. It happened because a badly designed embankment dam, operated

Page 4 of 29

by a private club to retain water for a resort lake, and maintained poorly if at all, collapsed during a heavy rainstorm. In 1977 the Toccoa Falls Dam, built originally with

volunteer labor at a religious camp, failed under similar circumstances; 39 people died

in the resulting flood.

The risk of dam failure is also not uniform over the life of the dam. Like most engineered products, the chance that a dam will fail is highest during first use, which for a

dam is first-filling, the first time that the reservoir is filled to capacity. If something was

overlooked, or if some adverse geological detail was not found during exploration, then

this is usually the time that it will first become apparent. As a result, about half of all

dam failures occur during first filling. The other half occur more or less uniformly in

time during the remaining life of the dam. So, if the rate of failure averaged over the

whole life of a dam is about 1/10,000 per dam-year, the rate during the first, say, five

years reaches almost 1/1,000 per dam-year, or ten times higher. This is exactly what the

historical record shows.

That about half of all dam failures occur during first filling is a troubling observation,

for the following reason. In the arid areas, which use dams primarily for irrigation and

only secondarily for flood control, reservoirs are often kept full. If a heavy storm is

forecast, the reservoir is lowered to make room for the larger inflows coming from upstream. But in temperate regions, where dams primarily serve flood control needs and

irrigation is not an important benefit, reservoirs are typically kept low. If a flood comes,

either its entire flow is caught behind the dam, or if it is a very large storm, at least its

peak flow is caught. But since most flood control reservoirs are designed for floods of a

size that essentially never comes—the probable maximum flood (PMF)—many dams in

temperate regions, such as the eastern US, have never experienced design pool levels,

they have never seen first filling, and thus have never been proof tested. The probability

of failure of these dams, should an extreme flood come, could be ten times greater than

that of a load-tested dam. Of course, the chance of PMF is purposely remote.

Page 5 of 29

HOW RISK ANALYSIS IS CARRIED OUT

How do we think about the risk of dam failure? Most risk analyses begin with a systematically structured model of the events that could, if they happened in a particular

way, lead to failure. This model is called, an event tree.3

An event tree begins with an initiating event, and graphs the sequences of subsequent

hypothetical events that ultimately could lead to failure. Examples of initiating events

include earthquakes, floods, and hurricanes. An example of something other than a natural hazard that might be an initiating event is excessive settlement, which may cause

equipment failure, say, a spillway gate, and simultaneously disrupt utility services needed to deal with that equipment failure.

The steps in a risk analyses are:

1.

2.

3.

4.

5.

6.

7.

Define what “failure” means.

Identify initiating events.

Build an event tree of the system.

Develop models for individual components.

Identify correlations among component failures or failure modes.

Assess probabilities and correlations for events, parameters, and processes.

Calculate system reliability.

It is often said that a principal benefit of risk analysis lies simply in structuring the problem as an event tree and in trying to identify interactions and correlations—what reliability engineers call “failure modes and effects analysis”—whether or not quantitative

reliability calculations are ever carried out or used.

STRUCTURING RISK IN AN EVENT TREE

An event tree is nothing more than a graphical device for laying out chains of events

that could lead from an initiating event to failure. Each chain in this tree leads to some

performance of the system. Some of these chains lead to adverse performance, some do

3

An alternative approach is via the so-called fault tree, common in analyses of equipment failures, such

as aircraft, machinery, or power plants. For brevity, fault trees are not treated here.

Page 6 of 29

not. For each event in the tree, a probability is assessed presuming the occurrence of all

the events preceding it in the tree, that is, a conditional probability. The total probability for a particular chain of events or path through the tree is found by multiplying the

sequences of conditional probabilities.

Simple random experiments can be used to show how event trees are useful in diagramming outcomes and identifying sample spaces. These event trees for simple experiments are the same in concept as those used to analyze complex system reliability, only

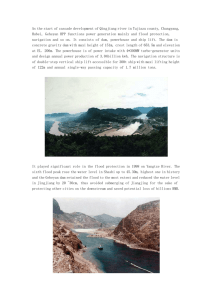

much simpler. The event tree shown in Figure 1 represents the experiment comprising

two successive tosses of a fair coin. On the first toss, the coin lands either heads-up or

heads-down, and similarly on the second toss. If one presumes these tosses to be independent, the branch probabilities in all cases are 0.5. The probability of each of the four

possible outcomes is the same, 0.25. If a wager is placed such that the player looses if

and only if two tails occur (T,T), then the “probability of failure” from the event tree is,

0.25. The use of event trees in analyzing complex system behavior is exactly the same

as in this simple example.

HH 1/4

1

ads

e

H

Ta

ils

1

/2

/2

/2

s1

d

a

He

Tails

1/2

s 1/2

Head

Ta

ils

1/2

HT 1/4

TH 1/4

TT 1/4

Figure 1. Simple event tree for tossing a coin

Bury and Kreuzer (1986) and Vick (1997) describe in simple terms how event trees can

be structured for gravity dams. Usually, analytical calculations or judgment are more

easily applied to smaller components, and research suggests that more detailed decomposition, within reason, enhances the accuracy of calculated failure probabilities. One

Page 7 of 29

reason, presumably, is that the more detailed the event tree is, the less extreme the conditional probabilities which need to be calculated or estimated.

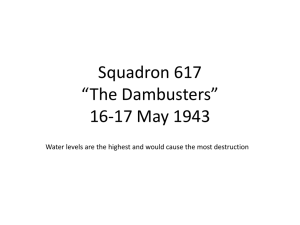

Whitman (1984) describes a simple event tree which is part of the risk assessment for

erosion in an earthen dam (Figure 2). The issue being addressed is scour in the channel

downstream of the dam, caused by large releases over a concrete spillway. The spillway is capable of passing large flood flows, the natural channel below may be eroded

by these large discharges. Headward erosion of the channel may undermine the spillway basin, and then possibly undermine the spillway itself. If the spillway fails, this

may directly lead to breaching of the dam, or to erosion of an adjacent earth embankment which in turn could lead to breaching of the dam.

PMF

Occurs

Downstr

eam

channel

erodes

back to

stilling

basin

Spillway

undermined

Deep

scour

hole at

stilling

basin

0.8

1.0

10-4

Stilling

basin

collapse

0.7

0.3

0.6

Erosion

of earh

embank

-ment

0.17x10-4

0.34x10-4

Figure 2. Event tree for breaching of earth dam (Whitman 1996)

The initiating event in this case is a flood discharge of some specified range of magnitudes centered on the probable maximum flood, PMF. The subsequent events leading

from this initiating event are,

1. The natural downstream channel erodes back to the stilling basin, causing scour

holes of various depths.

2. The foundation of the stilling basin collapses as a result of a scour hole.

3. Collapse of the stilling basin leads to undermining of the spillway and consequent

breaching of the earthen dam.

4. Collapse of the stilling basin leads to erosion of an adjacent earthen embankment

and thus to breaching of the dam.

Page 8 of 29

The probability of the initiating event, occurrence of a large flood flow of given discharge, is established from hydrologic studies. In this case, the probability of the flood

flow within the design life of the dam was estimated as 10-4. Based on model hydraulic

tests, it was concluded that the natural channel was certain to erode under the discharge

of the flow. Thus, the branch probability at the first node after the initiating event was

taken to be 1.0. Using stability calculations and other hydraulic model tests, the various

other branch probabilities were estimated and filled into the event tree. Each branch

probability is conditional on the occurrence of events leading into its node. The probability of any path of branches through the tree is found by multiplying the individual

branch probabilities. The final result is shown at the right hand side of the figure. The

total probability of the earth dam failing by loss of containment is the sum of the probabilities of the two ways in which that failure could occur, or in this case, about 0.51x10-4

not much less than the probability of the initiating event. In other words, if the initiating

event occurs, it is reasonably likely (about a 50:50 chance) that the dam will fail.

The event tree provides a convenient way for decomposing a system reliability problem

into smaller pieces that are easier to analyze, and then provides a vehicle with which to

recombine the results obtained for the smaller pieces in a logically coherent way to obtain the reliability of the system itself. Other examples of relatively simple event trees

used in geotechnical practice are provided by Vick and Bromwell (1989) for dam failure

caused by the collapse of a sinkhole, and by Wu, et al (1989) for liquefaction of a sand

caused by seismic ground shaking. More complex event trees for existing dams to assess reliability are given by Vick and Stewart (1996) for Terzaghi and Duncan Dams in

British Columbia, and by Von Thun (1996) for Nambe Falls Dam in New Mexico.

One sub-tree from the US Bureau of Reclamation’s event tree for Nambe Falls Dam is

shown in Figure 3. This particular sub-tree is associated with the initiating event that an

earthquake occurs along the Santa Fe Fault, and “fault offset loading” causes damage to

the dam. The tree is separated into panels. The first simply identifies the loading case,

seismic (faulting) Santa Fe Fault. The second panel describes the loading condition,

starting from an earthquake occurring, with its associated probability, and continuing

through events which affect the dam. The third panels enumerates the ways in which

Page 9 of 29

the dam may react to the various loading conditions (e.g., failure conditions, damage

states, and no-failure events). Finally, the fourth and fifth panels enumerate the potential consequences of each chain of events and show the calculated probability associated

with each chain.

In most cases, the activity of constructing event trees for a system is in itself instructive,

whether or not the resulting probabilities are used in a quantitative way. The exercise

requires project engineers to identify chains of events that could potentially lead to a

failure of one sort or another. This explicit activity, and especially when carried out

with a group of people, may lead to insights that might not otherwise have been obvious, and therefore which might have been overlooked. In some cases, the event tree becomes a “living” document that follows the progress of design, and is changed or updated as new information becomes available, or as design decisions are changed.

Event and fault trees require a strict structuring of a problem into sequences. This is

what allows probabilities to be decomposed into manageable pieces, and provides the

accounting scheme by which those probabilities are put together. In the process of decomposing a problem, however, it is sometimes convenient to start not with highly

structured trees, but with an influence diagram (Stedinger et al. 1996). An influence

diagram is a graphical device for exploring the inter-relationships of events, processes,

and uncertainties. Once the influence diagram has been constructed, it can be readily

transformed into event or fault trees.

Decomposition of a probability estimation problem relies on disaggregating failure sequences into component parts. Usually, these are the smallest pieces that can be defined

realistically and analyzed using available models and procedures. Decomposition can

be used for any failure mode that is reasonably well understood. Clearly, decomposition cannot be used for failure modes for which mechanistic understanding is lacking.

Internal erosion leading to piping is arguably one such, poorly understood failure mode.

In most cases, the extent of decomposition, that is the size of the individual events into

which a failure sequence is divided, is a decision left to the panel of experts. Most real

Page 10 of 29

Figure 3. Partial event tree for Nambe Falls Dam (Von Thun 1996)

Page 11 of 29

problems can be analyzed at different levels of disaggregation. Considerations in arriving at an appropriate level of disaggregation include the availability of data pertinent to the components, the availability of models or analytical techniques for the components, the extent of intuitive familiarity experts have for the components, and the

magnitude of probabilities associated with the components. Typically, best practice

dictates disaggregating a failure sequence to the greatest degree possible, subject to

the constraint of being able to assign probabilities to the individual components.

Usually, it is a good practice to disaggregate a problem such that the component probabilities that need to be assessed fall with the range 0.01 to 0.99 (see, e.g., Vick, 1997).

If this range can be limited to 0.1 to 0.9, all the better. As will be discussed below, people have great difficulty accurately estimating judgmental probabilities outside these

ranges.

DEPENDENCIES AMONG COMPONENT FAILURES

The interdependencies of component failures or failure modes, whether caused by mechanical interaction as in step four of the steps in risk analysis or by correlation as in

step five, are extremely important to assessing system reliability. This can be clearly

seen in the case of an oil tank farm (Figure 4), in which tanks are grouped within patios

surrounded by firewalls to contain spills. Presume that the annual probability of the

tank failing and spilling its contents into the patio is PT=0.01, and that the overflow capacity of the patio is sufficient to retain the full volume of the tank. For oil to leak out

of the patio, the tank must fail and then the firewall must fail, too. Let the probability of

the firewall failing given an oil load behind it be PF=0.01. The joint probability of both

the tank and firewall failing, presuming the probabilities independent, is the product,

Pr{oil loss}=PTPF=0.0001, a fairly small number. But, what if liquefaction of the site

caused by seismic ground shaking had an annual probability of occurring of 0.001, and

should liquefaction occur, both the tank and firewall would fail. While the probability

of liquefaction is inconsequential to the annual risk of tank failure alone, the probabilistic dependence it causes between tank and firewall failure increases the annual probability of loss of oil off the site (system failure) by a factor of ten.

Page 12 of 29

Dependencies in component failure probabilities can arise in at least three ways:

1. Mechanical interaction among failure modes (e.g., the tank fails and in so doing uproots the soil under the firewall, and the wall then fails, too).

2. Probabilistic correlation (e.g., a common initiating event affects both the tank and

firewall).

3. Statistical correlation (e.g., uncertainty about the consolidation coefficient of the

foundation soils affects the performance of the tank and firewall in the same way;

excessive settlement of each occurs together).

Figure 4. Oil tank farm showing storage tanks within

firewall protected patios

PROBABILITIES

Were we to stop at this point, with a fully articulated event tree, we would have a systematic representation of the modes of failure of the dam and their possible effects.

This is valuable in itself, and akin to what is called “failure modes and effects analysis.”

Indeed it is notable that traditional, deterministic geotechnical analysis seldom breaks

apart failure mechanisms and organizes its series of analyses so systematically as does

this initial step of risk analysis.

Page 13 of 29

Risk analysis begins to depart from traditional analysis in that it forces the assignment

of probabilities to branches of the event tree. These probabilities are combined using

the logic of the tree, and multiplied together to obtain probabilities of system failure.

But what do these probabilities mean? Most engineers have an intuitive sense of what

probabilities mean, but closer examination leads to ever more questions, and sometimes

to ever more confusion. What does it mean for something to be, “random.” Is there a

difference between “random” and “uncertain?”

RANDOM OR UNCERTAIN?

The evolution of the notion of “randomness,” since the time of ancients, has concerned

natural processes that are unpredictable. The role of dice, patterns of the weather,

whether or not an earthquake occurs. Such unpredictable occurrences have been called

aleatoric by Hacking (1975) and others, from the Latin aleator, meaning a die-caster or

gambler (see also, (David 1962)). This term is now widely used in risk analysis, especially in applications dealing with seismic hazard, nuclear safety, and severe storms.

The term probability, when applied to random events, is usually taken to mean the frequency of occurrence in a long or infinite series of similar trials. In this sense, probability is a property of nature. We may or may not know what the value of the probability

is, but the probability in question is a property of reality for us to learn. There is, presumably, a “true” value of this probability. We may know the true value only imprecisely, but there is a value to be known. Two observers, given the same evidence, and

enough of it, should converge to the same numerical value.

The evolution of the notion of “uncertainty,” at least since the Enlightenment, has concerned what we know. The truth of a proposition, guilt of an accused, whether or not

war will break out. Such unknown things have been called epistemic, from the Greek,

meaning knowledge or science. This term, too, is now widely used in risk analysis, to

distinguish imperfect knowledge from randomness. The term probability, when applied

to imperfect knowledge, is usually taken to mean the degree of belief in the occurrence

of an event or the truth of a proposition. In this sense, probability is a property of the

individual. We may or may not know what the value of the probability is, but the prob-

Page 14 of 29

ability in question can be learned by self-interrogation. There is, by definition, no

“true” value of this probability. Probability is a mental state, and therefore unique to the

individual. Two observers, given the same evidence, may arrive at different probabilities, and both be right!

In modern practice, event trees usually incorporate probabilities of both the aleatoric

and epistemic variety, and many that are both aleatoric and epistemic simultaneously.

This have proved problematic, because it is confusing to separate out the two components of an individual probability assignment, and, unfortunately, the separation is extremely important. Furthermore, the separation is not an immutable property of nature,

but an artifact of analysis. As a result, there is tremendous propensity for confusion.

Consider the case of tossing a coin. Were one to ask, before a coin was tossed, the

probability of its landing “heads up,” most observers would say, ½. In principle, in a

long series of tosses, about ½ of them land “heads up,” and the other ½, “heads down.”

The frequency of “heads up” is ½, and thus the probability. But, what if one were to

toss the coin and look at the result, but not tell the observer the outcome. When asked

the probability of the coin being “heads up,” what should the observer say? This is no

longer a “random” event. Its outcome is known, if not to the observer. The first case

was, to the observer, an aleatoric probability.

On can take this example further. Presume that an extremely practiced analyst comes

along, and says that he can predict the dynamic behavior of the coin using an advanced

model of mechanics and aerodynamics. If he were given all the impulse and material

properties, he could precisely predict whether the coin would land “heads up.” The only

problem is, he does not precisely know the parameters. So he makes an imperfect prediction, subject to the probabilities inherent in not knowing the parameters of the model

with precision. Is the probability of the coin landing “heads up” now aleatoric or epistemic?

Page 15 of 29

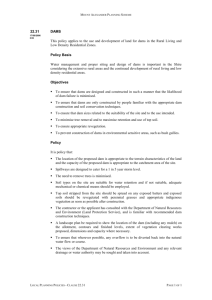

Water Stage (height)

Flood Damage ($)

Probability

Flood Discharge

Flood Damage ($)

Flood Discharge

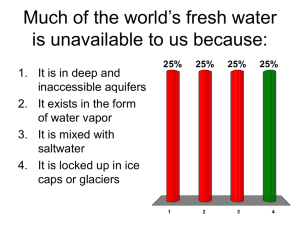

Figure 5. Calculation procedure for assessing damage risk due to levee overtopping

(U.S. Army Corps of Engineers 1996).

Bringing this question back into the realm of dams and water resources, we predict the

potential for flood damages in a leveed basin in three steps: (i) a flood-frequency relation is used to express exceedance probabilities of specified flows (discharges), (ii)

specified flows are related to water profiles in a rating curve of water depth vs. discharge, and (iii) water depths are related to property damages in a regression equation

based on regional surveys (Figure 5). In each relationship there is a mean curve based

on the best estimate, with a standard deviation of individual cases about it. There is also

a set of uncertainty envelopes (confidence limits) about the best estimate, based on limited lengths of record and consequent statistical error. We treat the former as aleatoric

randomness and the latter as epistemic uncertainty. Yet, by changing the assumptions

of the analysis slightly, one can move variations from the aleatoric category to the epistemic category at will. By moving from historical data analysis of flood flows to at-

Page 16 of 29

mospheric modeling, one changes randomness to parameter uncertainty in the floodfrequency relation. By more careful hydraulic modeling of bed configurations one does

the same with the rating curve. The trade-off between aleatoric and epistemic probability is a decision of the analyst; it is not a property of nature. In fact, many engineers

might agree that nature itself is never random; we only model nature as random. All

uncertainties, and therefore all probabilities, are epistemic. “Randomness” is only a

convenience of analysis.

GEOTECHNICAL UNCERTAINTIES

There are many uncertainties in geotechnical risk analyses, each is assessed somewhat

differently from the others, and each affects the conclusions of a risk analysis in different ways. Important among these are,

External loads (e.g., seismic accelerations, water elevations);

Model and parameter uncertainty, including soil engineering properties;

Undetected (or “changed”) site conditions;

Poorly understood behavior (lack of adequate models); and

Operational practices and human performance.

For the present, we ignore those uncertainties pertaining to external loads and human

performance, and concentrate on those pertaining to geotechnical performance.

As above, one normally divides these geotechnical uncertainties into one set treated as

aleatoric (naturally random) and one set treated as epistemic (lack of knowledge). Uncertainties about soil engineering parameters, for example, are usually considered aleatoric. There is natural variability of soil properties within a formation, and this is characterized by a mean (or trend), variance, other moments, and a distribution function.

Uncertainties about model representation, on the other hand, are usually considered epistemic. But the distinction becomes hazy when one is faced with actually assigning

numbers to probabilities.

Page 17 of 29

Consider in more detail the estimates of soil parameters. We observe scatter in test data,

and treat that scatter as if deriving from some random process. We then use statistical

theory to summarize the data and to draw inferences about some hypothetical population of soil samples. But most people would agree that soil properties are not random.

One may not know the properties at every point in a formation, but the properties are

knowable. The variation is spatial, not random. This is like a deck of playing cards.

The order of the cards is spatial, not random. The players simply do not know the order

before the game begins, and they must infer the order as the play proceeds.

Having made the decision to treat some part of the variation in soil properties as aleatoric, the question becomes, how much? We may, on the one hand, model the randomness by a constant spatial mean, constant (homoskedastic) variance, and some probability distribution function (pdf) of variation about the mean. On the other hand, we may

model the randomness by a polynomial trend, some residual variance, and pdf. In the

second case, we have moved the boundary between what is modeled as aleatoric and

what as epistemic. The polynomial trend explains more of the data scatter, and the variance of residuals around it is smaller than with the constant trend; but more parameters

are needed to fit the trend, and the statistical error attending their estimation is larger

because there are fewer degrees of freedom.

Soil Property Uncertainty

Data Scatter

(treated as aleatoric)

Spatial

Variation

Measurement

Noise

Bias Error

(treated as epistemic)

Measurement

& Model Error

Statistical

Error

Figure 6. Contributions to uncertainty in soil parameter estimates

The scatter we observe in soil property data also comes in part from measurement errors

(Figure 6). Measurement errors are of two types, (i) individually small, liable to be positive and negative, and cumulative; or (ii) large, consistently either positive or negative,

and systematic. The former are sometimes called, “noise,” and treated as aleatoric; the

Page 18 of 29

latter sometimes called, “bias,” and treated as epistemic. The former are due to the sum

effect of a large number of real disturbances, too many and individually too small to be

treated separately. The latter are due (usually) to simplifications in the models used to

interpret observations.

Statistical error derives from limited numbers of observations. Having made a set of

field measurements {x1, …, xn}, an estimate of the mean in the field can be made by

using the sample mean, mx=(1/n)xi, as an estimator. Of course, were one to have

made another set of n measurements at slightly different places, the numerical values of

{x1, …, xn} would have differed somewhat from the original set, and mx would be correspondingly different. So there is error due to statistical fluctuations among data sets,

and this leads to error in the estimate of the pdf of the presumed aleatoric variation of

the soil properties. Furthermore, this error is systematic. If the mean is in error at one

location, it is in error by the same amount at every location. Even if one does assume

that spatial variation and measurement noise of soil properties can be modeled as aleatoric, the statistical error is epistemic.

IMPLICATIONS FOR PREDICTING ENGINEERING PERFORMANCE

How does the distinction between aleatoric and epistemic uncertainty affect the conclusions of a risk analysis? Consider an example. Flood protection along a reach of river

is provided by a levee. A risk analysis has concluded that the “probability of failure” of

the levee is pf=0.1. Does this mean that one-tenth of the length of the levee will fail?

Or does it mean that the entire levee will fail in one project out of ten? The answer is

that it depends on whether the uncertainties are aleatoric, epistemic, or a mixture of

both. If the uncertainties are aleatoric, then 1/10 of the levee should fail. If the uncertainties are epistemic, then the entire levee should fail in one project out of 10. Almost

always, the uncertainties are a mixture, so the meaning of the risk calculation lies between the extremes. The implication for public safety is obvious. If 1/10 of the levee

will almost surely fail, people living behind the levee will almost surely get wet. The

levee provides little protection.

Page 19 of 29

Risk analysis can be thought of as a form of accounting. The great geotechnical designers and consultants are conceptual people, who think about big issues, and grapple with

fundamental principles of geology and mechanics. By contrast, risk analysts are beancounters, who try to put numbers on event trees and in spreadsheets, and to keep track

of different types of uncertainties. Like the accounting of finances, risk analysis needs

double-entry. A wall needs to be placed between aleatory and epistemic uncertainties,

and those uncertainties need to be accounted for separately, and treated as distinct.

As probability theory evolved over the past 400 years, philosophical distinctions developed between relative frequentist meanings for the concept of probability and degreeof-belief meanings (Hacking 1975; Porter 1986) This distinction followed into statistical methods (Barnett 1982; Stigler 1986). Much of the methodology one finds in modern statistical texts comes out of the relative frequentist school of thought. Because

such methods do not return probability distributions directly on epistemic uncertainties,

such as parameter uncertainty, they are not compatible with the need in geotechnical

risk analysis to combine aleatoric and epistemic uncertainties in drawing conclusions.

The flood damage calculations discussed earlier present a clear example. The procedure

propagates uncertainties starting from a flood frequency curve, through a regression

equation relating discharge to water level (the rating curve), and finally through a regression equation relating water level to property damage. The aleatoric uncertainty, as

the calculation is constructed, is that associated with combining a known flood frequency curve—a probability distribution—and the two known regression equations. The

epistemic uncertainty is that associated with imprecision in the specification of the flood

frequency curve, and with regression parameter uncertainties in the rating curve and

damage function. To calculate risk, one would like to combine the aleatoric and epistemic uncertainties according to the total probability theorem,

f (damage) f (damage | ) f ( )d

-1-

Page 20 of 29

in which f(·) is a pdf, f(·|·) a conditional pdf, and the model parameter(s). Clearly,

this requires a pdf expressing epistemic uncertainty directly over the model parameters,

and in turn necessitates a Bayesian approach.

Bayesian methods, associated with degree of belief probability, use Likelihood as the

basis for inference. The Likelihood principle says, the weight of evidence in a set of

data in favor of some parameter value o is proportional to the conditional probability of

the data, given o. Parameter values for which the observed data are probable are given

more weight than parameter values for which the observed data are improbable. The

pdf of based on a set of data is,

f ' ( | data) f o ( ) L( | data)

-2–

in which f′(|data) is the inferred pdf over , the posterior distribution; fo() is the pdf

before the data are observed, the prior distribution; and L(|data)=f(data|) is the likelihood of the data actually observed (i.e., the conditional probability of the data given ).

The normalizing constant is, N f o ( ) L( | data)d . There are a number of inter

esting implications of adopting Bayesian methods, which have been discussed elsewhere (Baecher 1983)

Returning to the flood damage example, in practice, the parameter uncertainties in the

three models are estimated using confidence intervals, which is a relative frequentist

notion. These do not give a pdf directly over but rather give bounds describing the

range of regression relationships that might have led to the data observed. That is, they

describe the conditional probability of the data, not the conditional probability of the

parameters. In practice, the sampling variances of the regression parameters which result from traditional, relative frequentist regression analysis are close to the Bayesian

results in the special—but common—case of no prior information (i.e., fo()~uniform),

so compensating errors may lead to approximately the right numerical answer used in

the risk analysis, even if the method of arriving at it is conceptually wrong.

Page 21 of 29

THE OPPORTUNITIES AND PITFALLS OF “EXPERT ELICITATION”

The geotechnical practice of risk analysis differs significantly from structural and hydrological practice in its strong reliance on subjective probability. All risk analyses intermix aleatoric and epistemic uncertainties, but geotechnical practice is especially rich

in the latter. The formal elicitation of expert opinion as subjective probability allows

the inclusion of epistemic uncertainties that might otherwise be difficult to calculate or

quantify. Experienced engineers have evaluated opinions on many of these uncertainties, and one would like to incorporate these opinions in a risk analysis. This practice is

not without pitfalls. To turn a phrase on Casagrande (Casagrande 1965), we would like

to pick these numbers out of the ground, not out of the air. There is a difference between a subjective probability and the first number that pops into an expert’s head.

NEED FOR A PROTOCOL

A common misconception in eliciting subjective probability is that people carry fullyformed probabilistic opinions around in their heads and that the focus of elicitation is to

accurately access these pre-existing opinions. Actually, people do not carry fully

formed constructs around in their heads but develop them during the process of elicitation. Thus, the elicitation process needs to help experts think about uncertainty, needs

to instruct and clarify common errors in how people quantify uncertainty, and needs to

lay out checks and balances to help improve the consistency with which probabilities

are assessed. The process should not be approached as a ‘cookbook’ procedure.

The steps in using eliciting expert judgment are the following (Morgan and Henrion

1990):

Decide on the uncertainties which need to be assessed.

Select a balanced panel of experts.

Refine issues with the panel, and decide on the specific uncertainties.

Train the experts with training in methods of eliciting judgmental probability.

Elicit the judgmental probabilities of individual experts.

Facilitate the panel in combining individual probabilities into a consensus.

Document the process.

Page 22 of 29

For credibility and defensibility the process of elicitation should be well documented

and open to peer review.

ASSESSING PROBABILITIES

The goal is to obtain coherent, well-calibrated numerical representations of subjective

probability. This is accomplished by presenting comparative assessments of uncertainty

and interactively working toward probability distributions. The elicitation process

needs to help experts think about uncertainty, needs to instruct and clarify common errors in how people quantify uncertainty, and needs to lay out checks and balances to

help improve the consistency with which probabilities are assessed. A successful process of elicitation is one that helps experts construct carefully reasoned judgments. Expert elicitation is art, not science, and there is much disagreement—and a large literature—on how to do elicitations.

In the early phases of expert elicitation, people find verbal descriptions more intuitive

than they do numbers. Such descriptions are sought for the branches of an event or fault

tree. Then, empirical translations are used to approximate probabilities (Table 1). A

warning about using verbal descriptions is that, while empirical studies show encouraging consistency from one person to another, the range of responses is large, and the

probabilities an individual associates with verbal descriptions often change with context

(Lichtenstein et al. 1982). It is common for experts who have become comfortable using verbal descriptions to wish to assign numerical values directly to those probabilities.

This should be discouraged. The opportunity for systematic error in directly assigning

numerical probabilities is great. At this initial point, no more than order of magnitude

bounds are a realistic goal.

The theory of judgmental probability is based on the notion that numerical probabilities

should be inferred from behavior, that subjective probabilities are not intuitive. This

means that the most accurate judgmental probabilities are obtained by having an expert

compare the uncertainty in question with other, standard uncertainties as if he were

faced with placing a bet.

Page 23 of 29

Table 1. Verbal to Numerical Probability Transformations

Verbal Descriptor

virtually impossible

very unlikely

equally likely

very likely

virtually certain

Probability Equivalent

Usual Convention

Behavioral Studies

0.01

0 to 0.05

0.1

0.02 to 0.15

0.5

0.45 to 0.55

0.9

0.75 to 0.90

0.99

0.90 to 0.995

Consider the following decision, the expert is given the choice between two uncertainties: one presents a probability p of winning a modest prize, C; the other presents the

same prize C if a discrete event A occurs. The expert is asked to consider a value of p

that would lead to indifference between the two gambles. Presumably, p should be the

same as the judgmental probability of A.

Research on expert elicitation has addressed a number of methodological issues of how

probability questions should be formulated. Should questions ask for probabilities, percentages, odds ratios, or log-odds ratios? In dealing with relatively probable events,

probabilities or percentages are often intuitively familiar to experts; but in dealing with

rare events, odds ratios (such as, “100 to 1”) may be easier because they avoid very

small numbers. Do aids such as probability wheels--which spin like a carnival game

and represent probability as a slice of the circle--help experts visualize probabilities?

Definitive answers to these and many similar questions are lacking, and in the end, facilitators and experts much choose a protocol that is comfortable to the individuals involved.

ONce a set of probabilities has been elicited, it is important to check for “coherence.”

Coherence means that the numerical probabilities obtained are consistent with probability theory. This can be done, first, by making sure that simple things are true, such as

the probabilities of complementary events adding up to 1.0. Second, it is good practice

to restructure questions in logically equivalent ways to see if the answers change, or to

ask redundant questions. The implications of the elicited probabilities for risk estimates

and for the ordering of one set of risks against other sets is also useful feedback to the

experts.

Page 24 of 29

HOW WELL-CALIBRATED ARE EXPERTS?

The heuristics people use to estimate probabilities lead to a number of common errors.

Perhaps most common of these—but by no means the only one—is overconfidence.

Overconfidence seems to be a persistent bias in the way people estimate values and assign probabilities. Overconfidence has been shown to occur both in naïve and expert

subjects. The simplest manifestation of overconfidence occurs when people are asked to

estimate the numerical value of some unknown quantity, and then to assess probability

bounds on that estimate. For example, an expert might be asked to estimate the undrained sheer strength of a foundation clay, and then asked to assess the 10 percent and

90 percent bounds on that estimate. People almost always respond with probability

bounds that are much narrower than the outcomes of the estimated parameters suggest

(Alpert and Raiffa 1982).

A second way overconfidence manifests is in the assessment of probabilities. People

consistently overestimate high probabilities and underestimate low probabilities. This

bias is particularly acute in the assessment of small probabilities, where small means

less than 0.01, and possibly only less than 0.1. With training and calibration, experts

can learn to overcome overconfidence in their estimates of probabilities between, say,

0.1 and 0.9. However, when required to estimate smaller probabilities, experts' overconfidence can be substantial. Vick (1997) shows data from a study in which groups of

subjects were asked general knowledge questions, and also asked to estimate the probability that their answers were correct. With training, the estimates of error probabilities

corresponded reasonably well to actually observed error frequencies for error rates

above 0.1. In contrast, for error probabilities estimated at less than 0.01, the actually

observed probabilities decreased insignificantly. Research also suggests that the harder

the estimation task the greater the overconfidence people exhibit in their assessments of

the probability of being correct.

Page 25 of 29

IS THERE A “TRUE PROBABILITY” OR A “TRUE RISK” OF DAM FAILURE?

Degree-of-belief probability theory (so-called, “Bayesian” theory) holds that subjective

probability is unique to the individual. Two people shown the same data can legitimately arrive at different probabilities for the same event, because experiences differ. Most

engineers would like to believe that a "true probability" exists for an event, and judgmental probabilities should converge to this true value given enough time. The corollary

is that a “true value of risk” exists for a dam, and that risk analysis should converge to

this true value given enough effort. This view is inconsistent with degree-of-belief theory, and can be harmful to the goal of reaching usable elicitations for risk assessment.

Subjective probability has to do with opinion, not physics.

IS RISK ANALYSIS WORTHWHILE?

Not everyone in the geotechnical community supports the use of risk analysis for evaluating dam safety, at least not as the technology is now being used. Suffice it to say that

the hydrological design of dams, based on flood-frequency analysis, has implicitly long

used statistics and risk concepts. But two things have changed. First, statistics, probability, and risk concepts are now being applied to aspects of dam design and performance other than hydrological. Second, epistemic uncertainties (parameter uncertainty

and model error, for example) are being incorporated in addition to data-based statistical

(i.e., aleatoric) uncertainties. Each of these is a change from earlier practice.

Many criticisms of risk analysis are based on anecdotal quotes by famous engineers,

who stressed the importance of judgment, experience, and conservative design as the

rudiments of risk management. This plays well with the engineering community, but

unfortunate experiences with technology have made the public as a whole skeptical of

“the experts know best” approach to safety and suspicious of reliance on “engineering

judgment.”

Risk analysis intends to be a form of accounting in which a comprehensive set of event

chains leading to failure is identified and probabilities are assigned to the events that

must occur in those chains. Jones (1999) discusses several objections to the risk analy-

Page 26 of 29

sis approach, including (i) not all accident modes are identified, (ii) component failure

interdependencies may be ignored, (iii) design deficiencies may not be analyzed, (iv)

human error is inadequately considered, and (v) there are almost always more than one

cause of a failure. These objections would appear to apply to any engineering analysis

of dam safety and not be limited to risk analysis.

Outcomes of risk analysis reflect the combined opinion of a set of experts and analysts

at an instant in time. A risk analysis may be logically consistent, and as comprehensive

as practicable, but it is neither unique nor entirely replicable. The value in risk analysis

lies in its systematic, explicit approach, and in its replacing “conservative” assumptions—the real safety of which is unknown—with best estimates and explicit statements

about uncertainty. Risk analysis will never replace the wizened-but-wise expert of lore,

but it is an accounting scheme to support the genuine exercise of informed judgment.

REFERENCES

Alpert, M., and Raiffa, H. (1982). “A progress report on the training of probability assessors in.” Judgment Under Uncertainty, Heuristics and Biases, D. Kahneman, P.

Slovic, and A. Tversky, eds., Cambridge University Press, Cambridge, Cambridge,

pp. 294-306.

Baecher, G. B. “Professional judgment and prior probabilities in engineering risk assessment.” 4th International Conference on Applications of Statistics and Probability to Structural and Geotechnical Engineering, Florence.

Barnett, V. (1982). Comparative statistical inference, Wiley, Chichester ; New York.

Bury, K. V., and Kreuzer, H. (1986). “The assessment of risk for a gravity dam.” Water

Power and Dam Construction, Vol. 38(No. 2), pp. .

Casagrande, A. (1965). “The role of the 'calculated risk' in earthwork and foundation

engineering.” Journal of the Soil Mechanics and Foundations Division, ASCE, Vol.

91(No. SM4), pp. 1-40.

David, F. N. (1962). Games, gods and gambling; the origins and history of probability

and statistical ideas from the earliest times to the Newtonian era, Hafner Pub. Co.,

New York,.

Hacking, I. (1975). The Emergence of Probability, Cambridge University Press, Cambridge.

Page 27 of 29

International Commission on Large Dams. (1995). Dam failures statistical analysis,

Paris.

International Commission on Large Dams. (1973). Lessons from dam incidents, Paris.

Jones, J. C. “An independent consultant's view on risk assessment and evaluation of hydroelectric projects.” International Workshop on Risk Analysis in Dam Safety Assessment, Taipei, 117-125.

Lave, L. B., and Balvanyos, T. (1998). “Risk analysis and management of dam safety.”

Risk Analysis, 18(4), 455462.

Lichtenstein, S., Fischhoff, B., and Phillips, L. (1982). “Calibration of Probabilities: The

State of the Art to 1980.” Judgment Under Uncertainty: Heuristics and Biases, P.

"Slovic and A. Tversky, eds., Cambridge Univ. Press, New York, 306-334.

McCann, M. (1999). “National Performance of Dams Program.”

http://npdp.stanford.edu/.

Morgan, M. G., and Henrion, M. (1990). Uncertainty : a guide to dealing with uncertainty in quantitative risk and policy analysis, Cambridge University Press, Cambridge ; New York.

Porter, T. M. (1986). The rise of statistical thinking, 1820-1900, Princeton University

Press, Princeton, N.J.

Stedinger, J. R., Heath, D. C., and Thompson, K. (1996). “Risk assessment for dam

safety evaluation: Hydrologic risk.” IWR-96-R-13, USACE Institute for Water Resources, Washington, DC.

Stigler, S. M. (1986). The History of Statistics, Harvard University Press, Cambridge,

Massachusetts.

U.S. Army Corps of Engineers. (1996). “Risk-based analysis for flood damage reduction studies.” Washington, DC.

U.S. Bureau of Reclamation. (1986). “Comparison of Estimated Maximum Flood Peaks

with Historic Peaks.” , Denver.

Vick, S. G. (1997). “Dam Safety Risk assessment: New Directions.” Water Power and

Dam Construction, 49(6).

Vick, S. G., and Bromwell, L. F. (1989). “Risk analysis for dam design in karst.” Journal of the Geotechnical Engineering Division, ASCE, Vol. 115(No. 6), pp. 819-835.

Vick, S. G., and Stewart, R. A. “Risk analysis in dam safety practice.” Uncertainty in

the Geologic Environment, Madison, 586-603.

Page 28 of 29

Von Thun, J. L. (1996) “Risk assessment of Nambe Falls Dam.” Uncertainty in the Geologic Environment, Madison, 604-635.

Whitman, R. V. (1984). “Evaluating the calculated risk in geotechnical engineering.”

Journal of the Geotechnical Engineering Division, ASCE, 110(2), 145-188.

Whitman, R. V. “Organizing and evaluating uncertainty in geotechnical engineering.”

Uncertainty in the Geologic Environment, Madison, 1-28.

Wu, T. H., Tang, W. H., Sangrey, D. A., and Baecher, G. B. (1989). “Reliability of offshore foundations--state of the art.” Journal of Geotechnical Engineering, ASCE,

Vol. 115(No. 2), pp. 157-178.

Page 29 of 29