Belief, Reason & Logic


Scott Sturgeon

Birkbeck College London

(a) Sketch a conception of belief
(b) Apply epistemic norms to it in an orthodox way
(c) Request more norms
(d) Check the order of explanation between orthodox & requested norms by looking at the role of logic in epistemology

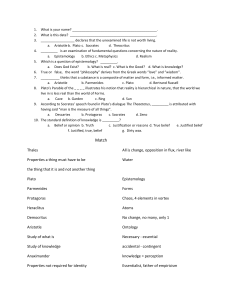

--------------------------------------------------------Belief----------------------------------------------------[1]

Coarse and fine belief

[2]

The Threshold View

(Diagram: a vertical scale of confidence in Ø running from 0% up to 100%. Belief occupies the region from the threshold up to 100%; suspended judgement occupies the region between the anti-threshold and the threshold; disbelief occupies the region from 0% up to the anti-threshold.)

On this picture: to believe is to have sufficiently strong confidence; to disbelieve is to have sufficiently weak confidence; and to suspend judgement is to have confidence neither sufficiently strong nor sufficiently weak. In all three cases “sufficiency” will doubtless be vague and contextually variable.
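The threshold view can be put as a simple classification rule. The sketch below assumes, purely for illustration, fixed hypothetical cut-offs of 0.9 for the belief-making threshold and 0.1 for the anti-threshold; on the view just described these would in fact be vague and contextually variable, and whether the boundary point itself counts as "sufficient" is also a modelling choice.

```python
# A minimal sketch of the Threshold View, assuming fixed numeric cut-offs.
# THRESHOLD and ANTI_THRESHOLD are hypothetical illustration values only;
# the view itself treats them as vague and contextually variable.
THRESHOLD = 0.9
ANTI_THRESHOLD = 0.1


def coarse_attitude(confidence: float,
                    threshold: float = THRESHOLD,
                    anti_threshold: float = ANTI_THRESHOLD) -> str:
    """Map a degree of confidence in [0, 1] to a coarse attitude."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must lie between 0 and 1")
    if not anti_threshold < threshold:
        raise ValueError("anti-threshold must lie below the threshold")
    if confidence >= threshold:
        return "belief"  # sufficiently strong confidence
    if confidence <= anti_threshold:
        return "disbelief"  # sufficiently weak confidence
    return "suspended judgement"  # neither strong nor weak enough


print(coarse_attitude(0.95))  # belief
print(coarse_attitude(0.50))  # suspended judgement
print(coarse_attitude(0.05))  # disbelief
```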

[3]

Confidence first!

--------------------------------------------------------Reason--------------------------------------------------[4]

The Two-Cell Partition Principle for Credence

If Ø1 and Ø2 form a logical partition, and your point-valued subjective probabilities for them (or "credences", as they are known) are cr1 and cr2 respectively, then cr1 plus cr2 should equal 100%.

For instance: credence in Ø and ¬Ø should add up to 100%.

The Logical Implication Principle for Credence

If Ø1 logically implies Ø2, and your rational credence in Ø1 is cr1, then you should not invest a credence in Ø2 that is less than cr1.

For instance: when you are rationally 70% sure of Ø, you should not be 65% sure of (Ø v Ψ).
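The two credal principles are simple enough to state as checks on numbers. This is a minimal sketch, assuming credences are represented as floats between 0 and 1; the function names are hypothetical, and the floating-point tolerance is an implementation detail rather than part of either principle.

```python
# A minimal sketch of the two credal norms, with credences as floats in [0, 1].
# The function names and the use of math.isclose's default tolerance are
# illustrative assumptions, not part of the principles themselves.
import math


def obeys_partition(cr1: float, cr2: float) -> bool:
    """Two-Cell Partition: credences in the two cells should sum to 1 (100%)."""
    return math.isclose(cr1 + cr2, 1.0)


def obeys_implication(cr_premise: float, cr_conclusion: float) -> bool:
    """Logical Implication: credence in what is implied should not be lower."""
    return cr_conclusion >= cr_premise


print(obeys_partition(0.7, 0.3))     # True: credences in Ø and ¬Ø sum to 100%
print(obeys_implication(0.7, 0.65))  # False: 65% in a disjunction with Ø as a disjunct is below 70% in Ø
```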


[5]

Probabilism: ideally rational degrees of belief are measured by probability functions; and

such degrees of belief are updated by conditionalization (or perhaps Jeffrey's rule).

[6]

How does the epistemology of coarse belief fit into this picture? Probabilism invites the

view that coarse epistemology is at best a by-product of serious epistemology:

Richard Jeffrey: "By 'belief' I mean the thing that goes along with valuation in

decision-making: degree-of-belief, or subjective probability, or personal

probability, or grade of credence. I do not care what you call it because I can

tell you what it is, and how to measure it, within limits...Nor am I disturbed by

the fact that our ordinary notion of belief is only vestigially present in the

notion of degree of belief. I am inclined to think Ramsey sucked the marrow

out of the ordinary notion, and used it to nourish a more adequate view."

('Dracula meets Wolfman: Acceptance vs. Partial Belief', in Marshall Swain

(ed.) Induction, Acceptance & Rational Belief (Reidel: 1970), pp. 171-172.)

Robert Stalnaker: "One could easily enough define a concept of belief which

identified it with high subjective or epistemic probability (probability greater than

some specified number between one-half and one), but it is not clear what the

point of doing so would be. Once a subjective or epistemic probability value is

assigned to a proposition, there is nothing more to be said about its epistemic

status. Probabilist decision theory gives a complete account of how probability

values, including high ones, ought to guide behaviour, in both the context of

inquiry and the application of belief outside of this context. So what could be the

point of selecting an interval near the top of the probability scale and conferring

on the propositions whose probability falls in that interval the honorific title

‘believed’?" (Inquiry (MIT: 1984), p. 138.)

[7]

The worry, then, is simple: if coarse epistemology springs from its fine cousin via a

belief-making threshold, coarse epistemology is pointless; it is at best a theoretical

shadow cast by real explanatory theory (i.e. Probabilism).

------------------------------------------------More Norms Please!-----------------------------------------[8]

My view is that Probabilism is an incomplete epistemology of confidence. To see why,

consider a few thought experiments.

[9]

Case 1. You are faced with a black box. You are rationally certain of this much: the box

is filled with a huge number of balls; they have been thoroughly mixed; exactly 85% of

them are red; touching a ball will not affect its colour. You reach into the box, grab a

ball, and wonder about its colour. You have no view about anything else relevant to your

question. How confident should you be that you hold a red ball?

You should be 85% confident, of course. Your confidence in the claim that you

hold a red ball is well modelled by a position in Probabilism’s "attitude space":

(Diagram: con(R), your confidence that you hold a red ball, shown as a single point at 85% on a scale running from 0% to 100%.)


Here we have one attitude ruled in by evidence and others ruled out. The case suggests a

principle:

Out-by-In: Attitudes get ruled out by evidence because others get ruled in.

In Case 1, after all, it seems intuitively right that everything but 85% credence is ruled out by your evidence precisely because that very credence is itself ruled in.

[10]

Case 2. The set-up is just as before, save that this time you know that exactly 80-to-90% of the balls in the box are red. How confident should you be that you hold a red ball?

You should be exactly 80-to-90% confident, of course. Your confidence in the

claim that you hold a red ball cannot be well modelled with a position in Probabilism’s

attitude space. Your evidence is too rough for that. Certain attitudes within credal space

are ruled out by your evidence in Case 2; but no attitude in that space is itself ruled in.

This puts pressure on the Out-by-In principle.

[11]

I want to resist that pressure; for I want to insist that there are more attitudes in our

psychology than are dreamt of in Probabilism’s epistemology. There are more kinds of

confidence than credence. It seems to me this is both obvious after reflection and

important for epistemology. For one thing, it means that Probabilism is an incomplete

epistemology of confidence. After all, it lacks an epistemology of non-credal confidence.

Or as I shall put the point: it lacks an epistemology of thick confidence.

[12]

To get a feel for this, think back to Case 2. Evidence in it demands more than a point in

credal space. It demands something like an exact region instead. Evidence in Case 2

rules in a thick confidence like this:

(Diagram: con(R) shown as the region from 80% to 90% on a scale running from 0% to 100%.)

Everyday evidence is normally like this. It tends not to rule in credence, being too

coarse-grained to do so. This does not mean that everyday evidence tends not to rule in

confidence. It means that such evidence tends to rule in thick confidence.

[13]

The key point here is simple: evidence and attitude should match in character. Precision

in evidence should make for precision in attitude; and imprecision in evidence should

make for imprecision in attitude. Since evidence is normally imprecise, levels of

confidence normally warranted by it are thick.
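The character-match idea can be sketched for the two ball cases. The code below assumes, for illustration only, that the relevant evidence consists solely of knowing that the proportion of red balls lies in a range [low, high], and that the confidence this evidence rules in is that same range; the function names are hypothetical. Case 1 then yields a point (a credence) and Case 2 a thick region.

```python
# A minimal sketch of "character match", assuming the only relevant evidence is
# knowledge that the proportion of red balls lies in [low, high].
from typing import Tuple

Interval = Tuple[float, float]


def warranted_confidence(low: float, high: float) -> Interval:
    """Return the confidence in 'I hold a red ball' ruled in by the evidence.

    Precise evidence (low == high) rules in a point, i.e. a credence;
    imprecise evidence (low < high) rules in a thick region instead.
    """
    if not 0.0 <= low <= high <= 1.0:
        raise ValueError("need 0 <= low <= high <= 1")
    return (low, high)


def is_credence(confidence: Interval) -> bool:
    """A credence is the degenerate, point-valued case of confidence."""
    return confidence[0] == confidence[1]


case_1 = warranted_confidence(0.85, 0.85)  # exactly 85% of the balls are red
case_2 = warranted_confidence(0.80, 0.90)  # exactly 80-to-90% of the balls are red
print(case_1, is_credence(case_1))  # (0.85, 0.85) True
print(case_2, is_credence(case_2))  # (0.8, 0.9) False
```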

[14]

This makes room for a reduction of coarse belief to confidence:


(Diagram: con(R) shown as the region from the belief-making threshold t up to 100% on a scale running from 0% to 100%.)

Probabilism may give a full view of rational credence; but it does not give a full view of

rational confidence. Probabilism entails that ideally rational agents always assign point-valued subjective probability to questions of interest. It is clear that this is not so. Often our evidence is too coarse for subjective probability. When that happens, epistemic perfection rules out credence in favour of thick confidence; and that is because there should be a match in character between an attitude and the evidence on which it is based. Since we

deal in coarse evidence in everyday life, we should often adopt an attitude at the heart of

both coarse and fine epistemology. We should often adopt a thick confidence spreading

from the belief-making threshold to certainty. For this reason, coarse epistemology is of

theoretical moment even if Probabilism is the full story about credence. Coarse

epistemology captures the heart of everyday rationality.

--------------------------------------------------------Logic----------------------------------------------------[15]

Where does logic fit in the theory of epistemic rationality? Probabilism invites the view

that logic's role is pinned down by probability theory. The basic idea is that logic helps

shape rational belief by helping to shape probability functions which measure rational

degree of belief. What do we say, though, once thick confidence shows up in theory?

[16]

David Christensen sticks to a Probabilist line even after thick confidence—or 'spread out

credence', as he calls it—is admitted into epistemic theory. He notes that thick

confidence is naturally modelled by richly-membered sets of probability functions rather

than single probability functions. And he infers from this that an epistemology of thick

confidence will preserve the central insights of Probabilism. "On any such view," he says,

"ideally rational degrees of belief are constrained by the logical structure of

the propositions believed, and the constraints are based on the principles of

probability. Wherever an agent does have precise degrees of belief, those

degrees are constrained by probabilistic coherence in the standard way.

Where her credences are spread out, they are still constrained by coherence,

albeit in a more subtle way. Thus the normative claim that rationality

allows, or even requires, spread-out credences does not undermine the basic

position that I have been defending [in this book]: that logic constrains ideal

rationality by means of probabilistic conditions on degrees of confidence."

(Putting Logic in Its Place (OUP: 2004), p. 150.)
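The modelling idea Christensen relies on can be made concrete with a toy example; this is an illustration of the general idea, not Christensen's own formalism. The sketch assumes a finite, hypothetical set of eleven probability functions over the partition {Ø, ¬Ø}, each fixed by its value for Ø, and reads a thick confidence off the set as the least and greatest values its members assign. Because every member is coherent, the resulting intervals automatically satisfy a two-cell constraint, which is the sense in which spread-out credence stays "constrained by coherence" on his line.

```python
# A toy model of spread-out credence as a set of probability functions.
# The particular set below is hypothetical; exact fractions avoid rounding noise.
from fractions import Fraction

# Each coherent probability function on the partition {Ø, ¬Ø} is fixed by its
# value for Ø; its value for ¬Ø must then be 1 minus that value.
representor = [Fraction(20 + i, 100) for i in range(11)]  # 0.20, 0.21, ..., 0.30


def envelope(values):
    """Return (lower, upper): the least and greatest values in the set."""
    values = list(values)
    return min(values), max(values)


a, b = envelope(p for p in representor)      # thick confidence in Ø
c, d = envelope(1 - p for p in representor)  # thick confidence in ¬Ø

print([float(x) for x in (a, b)])  # [0.2, 0.3]
print([float(x) for x in (c, d)])  # [0.7, 0.8]
print(a + d == 1, b + c == 1)      # True True
```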

[17]

The Two-Cell Partition Principle for Confidence

If Ø1 and Ø2 form a logical partition, and your confidence in them is [a,b] and [c,d] respectively, then a plus d should equal 100%, and b plus c should equal 100%.

For instance: if you are 20-to-30% confident in Ø, you should be 70-to-80% confident in ¬Ø.


But notice: the two-celled principle for credence is simply a limit case of the two-celled

principle for confidence. The latter does not hold because the former holds. It is rather

the other way around. The order of explanation goes from general fact to limit-case

instance.
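The limit-case relation between the two partition principles can be checked mechanically. This minimal sketch assumes a thick confidence is modelled as a closed interval [low, high], written [a,b] and [c,d] as in the text, and treats a credence cr as the degenerate interval [cr, cr]; the helper names are hypothetical. For such degenerate intervals both interval conditions collapse into cr1 + cr2 = 100%.

```python
# A minimal sketch of the Two-Cell Partition Principle for Confidence.
# Assumption: a thick confidence is modelled as a closed interval [low, high],
# and percentages are handled as exact fractions to avoid rounding noise.
from fractions import Fraction
from typing import Tuple

Interval = Tuple[Fraction, Fraction]


def pct(n: int) -> Fraction:
    """Hypothetical helper: n% as an exact fraction."""
    return Fraction(n, 100)


def obeys_thick_partition(conf1: Interval, conf2: Interval) -> bool:
    """Cells with confidences [a, b] and [c, d]: require a + d = 1 and b + c = 1."""
    (a, b), (c, d) = conf1, conf2
    return a + d == 1 and b + c == 1


def obeys_point_partition(cr1: Fraction, cr2: Fraction) -> bool:
    """The credal principle, stated on its own: cr1 + cr2 = 1."""
    return cr1 + cr2 == 1


# The example from the text: 20-to-30% in Ø together with 70-to-80% in ¬Ø.
print(obeys_thick_partition((pct(20), pct(30)), (pct(70), pct(80))))  # True

# Limit case: a credence cr is the degenerate interval [cr, cr]. For such
# intervals both conditions collapse into cr1 + cr2 = 1, so the two checks agree.
for cr1, cr2 in [(pct(70), pct(30)), (pct(70), pct(40))]:
    assert obeys_thick_partition((cr1, cr1), (cr2, cr2)) == obeys_point_partition(cr1, cr2)
```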

The Logical Implication Principle for Confidence

If Ø1 logically implies Ø2, and your rational confidence in Ø1 is [a,b], then you should

not invest confidence [c, d] in Ø2 when c is less than a.

For instance: when you are 70-to-80% sure of Ø, you should not invest a confidence [c, d] in (Ø v Ψ) when c is less than 70%.

Here too the general principle is explanatorily fundamental. The logical implication

principle for credence holds because it is a limit case of a more general fact. That more

general fact is captured by the logical implication principle for confidence.
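The same limit-case structure holds for implication. This is a minimal sketch, again assuming thick confidence is a closed interval of exact fractions and a credence is a degenerate interval; the helper names are hypothetical. Following the principle as stated, it constrains only the lower bounds c and a.

```python
# A minimal sketch of the Logical Implication Principle for Confidence.
# Assumption: thick confidence is a closed interval [low, high] of exact fractions.
# As stated in the text, the principle constrains only the lower bounds.
from fractions import Fraction
from typing import Tuple

Interval = Tuple[Fraction, Fraction]


def pct(n: int) -> Fraction:
    """Hypothetical helper: n% as an exact fraction."""
    return Fraction(n, 100)


def obeys_thick_implication(premise: Interval, conclusion: Interval) -> bool:
    """Ø1 implies Ø2, with confidences [a, b] and [c, d]: rule out c < a."""
    a, _ = premise
    c, _ = conclusion
    return not c < a


def as_interval(cr: Fraction) -> Interval:
    """A credence cr treated as the degenerate interval [cr, cr]."""
    return (cr, cr)


# 70-to-80% in Ø; candidate confidences in a disjunction that has Ø as a disjunct.
print(obeys_thick_implication((pct(70), pct(80)), (pct(65), pct(90))))  # False
print(obeys_thick_implication((pct(70), pct(80)), (pct(75), pct(90))))  # True

# Limit case: for point-valued credences the check reduces to cr2 >= cr1,
# i.e. the Logical Implication Principle for Credence.
print(obeys_thick_implication(as_interval(pct(70)), as_interval(pct(65))))  # False
```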

[18]

Christensen notes that thick confidence is normally modelled by rich sets of probability

functions. Those sets are literally built from point-valued probability functions. Their

behaviour is thus fixed by that of those probability functions. In turn this suggests that

probabilistic norms are explanatorily fundamental in epistemology. But that's not quite

right; for thick confidence is not built from credence, and its norms do not derive from

those for credence. It’s the other way around. Explanatorily basic norms for confidence

are those for thick confidence. The truth in Probabilism is but a limit case of more

general truth. And that more general truth overlaps, in part, with the epistemology of

everyday life. After all, the full-dress epistemology of confidence overlaps, in part, with

the epistemology of coarse belief.
