

COMPUTER RECOGNITION OF TABLA BOLS

Samudravijaya K, Sheetal Shah, Paritosh Pandya

Tata Institute of Fundamental Research, Homi Bhabha Road, Mumbai 400005

{chief, pandya}@tifr.res.in

ABSTRACT

The study of music signals is not only of intrinsic interest but also useful in teaching and evaluating music. Tabla playing has a highly developed formal structure and an underlying "language" for representing its sounds. A tabla "bol" is a series of syllables that correspond to the various strokes of the tabla. The present work deals with computer transcription of tabla bols spoken by a person. Hidden Markov models are used to represent spoken tabla bols as sequences of cepstrum-based features. The recognition accuracies of the system on training and test data are 100% and 98%, respectively.

1 INTRODUCTION

Music is used by human beings to express feelings and emotions. The study of music signals is not only of intrinsic interest but also useful in teaching and evaluating music. The human vocal apparatus that generates speech also generates music. Music and speech signals therefore share some spectral and temporal properties, and many techniques developed to study speech signals can be employed to study music signals as well.

The tabla is a percussion instrument widely used in Indian music and dance. It may be played solo or as an accompanying instrument. As an accompanying instrument, the tabla keeps the rhythm by repeating a theka (beat pattern) and embellishes the vocal or instrumental music it accompanies. As a solo instrument, it is used to exhibit its vast repertoire of compositions. Most compositions are set to a taal, a cycle of a fixed number of beats with well-defined sections that is repeated over and over again.

Tabla playing has a highly developed formal structure and an underlying "language" for representing its sounds. The basic strokes are denoted by syllables such as "dha" and "ge". A tabla "bol" is a series of syllables that correspond to the various strokes of the tabla. These bols serve as a notation for transcribing tabla playing, e.g. "dha-dha, ti-re-ki-te, dha-dha, tu-na".

Using these bols, tabla compositions can also be spoken and written. Written bol notation can be found in books and is widely used in teaching tabla. Moreover, there is a tradition of "Padhant", speaking out a tabla composition before playing it, for explanatory purposes. Unfortunately, much elaborate tabla playing has never been written down and survives only in oral form. Computerised tools that convert spoken tabla bols into written notation would considerably increase the repertoire of written material on tabla compositions, enabling compositions and their elaborations to be archived, studied and taught. In this paper, we address the problem of generating such textual tabla notation from spoken tabla bols.

RELATED WORK

Analysis of tabla bols using computer-based signal processing tools has attracted the interest of a few researchers in the past.

In [1], a hidden Markov model (HMM) based tabla phrase recognizer was developed as a component of an audiovisual system meant to help naive users learn some basic aesthetic ideas of tabla drumming. The input to the recognizer is not the audio signal but rhythmic "metatabla" input: a serial MIDI signal generated by pressure-sensitive drum pads on each of the drums in response to the user's hits. The recognizer is explicitly fed the time between the onset of the current hit and the next hit, together with the identity of the drum pad; no features are extracted from an audio signal.

An attempt to detect the presence of a given syllable in an utterance containing a sequence of syllables (a bol) was made in [2] using a word-spotting technique. Given the waveform of a bol, a pattern is constructed as a sequence of feature vectors extracted from the waveform; each feature vector comprises the 30 coefficients of a 30th-order linear prediction (LP) analysis. During the test phase, a reference pattern is matched against a segment of the test pattern of equal length, and the cumulative Euclidean distance between the two patterns is computed. This matching is done for each of 8 reference patterns and at every frame of the test pattern. When the distance to a reference pattern falls below a preset threshold, the corresponding bol is said to be "triggered". Using pitch or cepstral coefficients did not improve the system's performance.
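The matching procedure of [2] can be sketched as follows. This is a minimal illustration, not the code of [2]: it assumes that the "cumulative Euclidean distance" is the sum of frame-by-frame Euclidean distances, and the function name `spot`, the toy reference patterns and the threshold are hypothetical.

```python
import numpy as np

def spot(test, references, threshold):
    """Sliding-window template matching (illustrative sketch).

    test: (T, D) array of feature vectors for the test utterance.
    references: dict mapping a bol name to its (L, D) reference pattern.
    Returns (start_frame, name, distance) for every equal-length segment
    whose cumulative Euclidean distance to a reference is below threshold.
    """
    hits = []
    for name, ref in references.items():
        length = len(ref)
        for start in range(len(test) - length + 1):
            segment = test[start:start + length]
            # Sum of per-frame Euclidean distances between segment and reference.
            dist = float(np.sum(np.linalg.norm(segment - ref, axis=1)))
            if dist < threshold:
                hits.append((start, name, dist))
    return hits

# Toy example with 30-dimensional frames (as with a 30th-order LP analysis);
# a copy of the hypothetical "dha" reference is embedded at frames 4-6.
references = {"dha": np.ones((3, 30)), "tu": np.full((4, 30), -1.0)}
test = np.zeros((12, 30))
test[4:7] = references["dha"]
print(spot(test, references, threshold=1.0))  # [(4, 'dha', 0.0)]
```

Every misaligned window contains at least one frame that differs from the reference by a distance of about 5.5, so only the exact match falls below the threshold.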

A recent study demonstrated the spectro-temporal similarity between basic tabla sounds and the corresponding bols [3].

The present work deals with computer transcription of tabla bols spoken by a person. The output of the recognition system is a sequence of syllables generated by matching the acoustic signal of a bol against a set of trained statistical models of the basic syllables. The current work differs from earlier work on recognition of tabla strokes and bols as follows. In [1], the input to the recognizer is a "metatabla" signal in the form of serial MIDI data generated by pressure-sensitive drum pads; in contrast, the current system accepts the acoustic signal recorded through a microphone. In [2], a word-spotting technique based on template matching is used to detect a syllable in a bol; that system performed well in qualitative tests, but no quantitative accuracy figures are available. The recognition system described here performs unconstrained recognition of a bol as a sequence of syllables, and its performance on training and test data is evaluated quantitatively.

The rest of the paper is organized as follows. Section 2 presents the details of the work: the database, the training and testing methodology, and the tools used. Section 3 presents the recognition accuracies of the base system and gives an account of optimization experiments with different model initialization methods, feature sets, word insertion penalties and numbers of HMM states. Conclusions are drawn in Section 4.

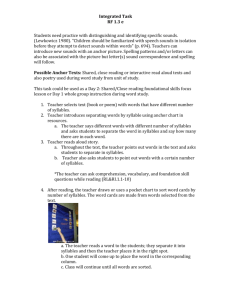

2 EXPERIMENTAL DETAILS

This section describes the database used for training and testing, the features extracted from the acoustic waveform to characterize the syllables, the statistical model used to represent bols, and the measures used to evaluate performance.

THE DATABASE

A kaida defines the basic pattern of bols (words) and their arrangement in cyclic rythm (taal).

Palta literally means variation. Paltas present the permutations and combinations of bols

(phrases) of the original kaida keeping within the rythmic structure of the kaida. The database

consists of utterances corresponding to 2 kaidas in teental (16 beat cycle) spoken by a male

subject. In addition to the basic kaida, the database also has 3 paltas corresponding to each

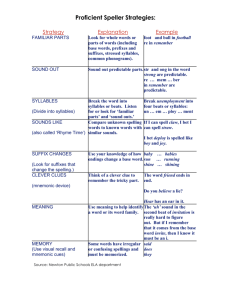

kaida. One of the two kaidas in the database and one of its paltas are listed in Table 1.

Each basic kaida and its 3 paltas were spoken twice in the first recording session, and this data was used for training the statistical models of the syllables. Thus, the training data comprises 16 bol sequences (2 kaidas × 4 sequences × 2 repetitions). The bols were recorded using a high-quality directional microphone (Shure SM48, low impedance); the signal was sampled at 16 kHz and quantized with 16 bits using a Sound Blaster Live card.

Kaida # 2

dha-dha terekita dha-dha tu-na; dha-dha terekita dha-dha tu-na

ta-ta terekita ta-ta tu-na; dha-dha terekita dha-dha tu-na

Palta #1 of Kaida #2

dha-dha terekita dha-dha terekita; dha-dha terekita dha-dha tu-na

ta-ta terekita ta-ta terekita; dha-dha terekita dha-dha tu-na

Table 1: A kaida and its palta from the full collection recorded for training and testing the

tabla bol recognition system.
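To make the recognition units concrete, the sketch below splits the Table 1 notation into units. It assumes, for illustration only, that units are separated by whitespace and that ";" is only a visual separator; the helper names and the "sil" label for the silence unit are hypothetical.

```python
# Kaida #2 as listed in Table 1.
KAIDA_2 = [
    "dha-dha terekita dha-dha tu-na; dha-dha terekita dha-dha tu-na",
    "ta-ta terekita ta-ta tu-na; dha-dha terekita dha-dha tu-na",
]

def split_units(line: str) -> list[str]:
    """Return the recognition units in one line of bol notation."""
    # Treat ';' as an ordinary separator, then split on whitespace.
    return line.replace(";", " ").split()

def transcription(lines: list[str], silence: str = "sil") -> list[str]:
    """Unit-level transcription of a whole kaida, framed by silence."""
    units = [unit for line in lines for unit in split_units(line)]
    return [silence] + units + [silence]

units = transcription(KAIDA_2)[1:-1]
print(len(units))          # 16
print(sorted(set(units)))  # ['dha-dha', 'ta-ta', 'terekita', 'tu-na']
```

A unit-level transcription of this kind is what the training procedure needs in addition to the audio.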

Six months later, the same kaidas and paltas were spoken by the same speaker; these recordings form the test (unseen) data used to evaluate the system. The test data contains 144 syllables, whereas the training data contains 288 syllables.

SIGNAL PROCESSING

An energy-based endpoint detection algorithm was used to remove silence at both ends of the input speech data. Twelve Mel Frequency Cepstral Coefficients (MFCCs) [4] were used as the features representing the speech data in the base recognition system. Extended versions of the system were evaluated with the first (delta-MFCC) and second (delta-delta-MFCC) time derivatives of the cepstral coefficients, as well as with cepstral mean normalisation.
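The paper does not give the details of its endpoint detector. The sketch below shows one common form of energy-based trimming; the frame length (25 ms), hop (10 ms), the -40 dB threshold relative to the loudest frame, and the helper name `trim_silence` are all assumptions.

```python
import numpy as np

def trim_silence(x, fs=16000, frame_ms=25.0, hop_ms=10.0, threshold_db=-40.0):
    """Energy-based endpoint detection (illustrative sketch).

    Computes the short-time log energy of each frame and keeps the samples
    from the first to the last frame whose energy is within `threshold_db`
    of the loudest frame.
    """
    x = np.asarray(x, dtype=float)
    frame = int(fs * frame_ms / 1000)   # 400 samples at 16 kHz
    hop = int(fs * hop_ms / 1000)       # 160 samples at 16 kHz
    if len(x) < frame:
        return x
    n_frames = 1 + (len(x) - frame) // hop
    energy_db = np.array([
        10.0 * np.log10(np.sum(x[i * hop:i * hop + frame] ** 2) + 1e-12)
        for i in range(n_frames)
    ])
    active = np.flatnonzero(energy_db >= energy_db.max() + threshold_db)
    start = active[0] * hop
    end = active[-1] * hop + frame
    return x[start:end]

# Usage: 0.25 s of silence, 0.5 s of a 200 Hz tone, 0.25 s of silence.
fs = 16000
tone = 0.5 * np.sin(2 * np.pi * 200 * np.arange(fs // 2) / fs)
signal = np.concatenate([np.zeros(fs // 4), tone, np.zeros(fs // 4)])
trimmed = trim_silence(signal, fs)
print(len(signal), len(trimmed))
```

Because the threshold is relative to the loudest frame, the same setting works for loud and soft recordings; an absolute threshold would need recalibrating per session.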

SPEECH MODEL

The base system employed a left-to-right hidden Markov model (HMM) [5] with 6 emitting states to represent each unit of recognition. There were 6 units of recognition, corresponding to 5 syllables (dha-dha, te-ta, tu-na, ta-ta, terekita) and silence. To account for the influence of neighbouring units on the acoustic characteristics of a unit, left and right context-dependent (triphone-like) models were used. To reduce the number of model parameters, data-driven tying of state emission probabilities as well as transition matrices was employed. The HMMs were trained on the sequences of feature vectors extracted from the training utterances. The HMM Toolkit (HTK) [6] was used in these experiments. The system used an ergodic language model, in which any word can follow any word with equal probability.
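As an illustration of the model topology, the sketch below builds the transition matrix of a 6-state left-to-right HMM and scores a feature sequence with the Viterbi algorithm. It is a simplified, hypothetical stand-in for what HTK does internally: it assumes a single diagonal-covariance Gaussian per state, no skip transitions and a self-loop probability of 0.6, and it omits HTK's non-emitting entry and exit states, context dependency and state tying.

```python
import numpy as np

def left_to_right_transitions(n_states=6, p_stay=0.6):
    """Left-to-right transition matrix without skips: each state either
    loops on itself or advances to the next state."""
    A = np.zeros((n_states, n_states))
    for i in range(n_states - 1):
        A[i, i] = p_stay
        A[i, i + 1] = 1.0 - p_stay
    A[-1, -1] = 1.0  # the last state only loops; the path must end here
    return A

def diag_gauss_logpdf(X, means, variances):
    """log N(x_t; mu_j, diag(var_j)) for every frame t and state j -> (T, J)."""
    diff = X[:, None, :] - means[None, :, :]
    log_norm = np.sum(np.log(2 * np.pi * variances), axis=1)
    return -0.5 * (log_norm[None, :] + np.sum(diff ** 2 / variances[None, :, :], axis=2))

def viterbi_score(X, A, means, variances):
    """Log-likelihood of the best state path that starts in the first state
    and ends in the last one (-inf if the sequence is too short)."""
    log_b = diag_gauss_logpdf(X, means, variances)
    with np.errstate(divide="ignore"):
        log_A = np.log(A)  # zero probabilities become -inf
    delta = np.full(A.shape[0], -np.inf)
    delta[0] = log_b[0, 0]
    for t in range(1, len(X)):
        # delta[i] + log_A[i, j], maximised over the previous state i.
        delta = np.max(delta[:, None] + log_A, axis=0) + log_b[t]
    return delta[-1]

# Usage: 6 states with 2-D means on a diagonal and unit variances.
means = np.stack([np.arange(6.0), np.arange(6.0)], axis=1)
variances = np.ones_like(means)
A = left_to_right_transitions()
X = np.repeat(means, 3, axis=0)  # 3 frames at each state mean, in order
print(viterbi_score(X, A, means, variances) > viterbi_score(X[::-1], A, means, variances))  # True
```

The left-to-right constraint is what makes the time-reversed sequence score lower: its frames cannot be assigned to the states in the order they were trained.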

PERFORMANCE EVALUATION

The primary performance figures used were CORRECT and ACCURACY, which are defined as

$$\mathrm{CORRECT} = 100 \times \frac{H}{N}\,\%, \qquad \mathrm{ACCURACY} = 100 \times \frac{H - I}{N}\,\%$$

where N, H and I denote the total number of syllables in the evaluated data, the number of correctly recognized syllables, and the number of insertions, respectively. Performance can similarly be evaluated at the bol level: we define bolCorrect as 100 times the ratio of the number of bols recognized completely correctly to the total number of bols in the test suite.
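The three measures can be written down directly. This is a minimal sketch with hypothetical function names; it takes the counts H and I as given, whereas in practice they come from aligning each recognized syllable sequence with its reference by dynamic programming, as HTK's HResults tool does.

```python
def correct(n: int, h: int) -> float:
    """CORRECT = 100 * H / N; extra (inserted) syllables are ignored."""
    return 100.0 * h / n

def accuracy(n: int, h: int, i: int) -> float:
    """ACCURACY = 100 * (H - I) / N; insertions count as errors."""
    return 100.0 * (h - i) / n

def bol_correct(reference: list[list[str]], hypothesis: list[list[str]]) -> float:
    """bolCorrect: percentage of bols whose recognized syllable sequence
    matches the reference sequence exactly."""
    if len(reference) != len(hypothesis):
        raise ValueError("reference and hypothesis must cover the same bols")
    matches = sum(ref == hyp for ref, hyp in zip(reference, hypothesis))
    return 100.0 * matches / len(reference)

# N = 144 test syllables, with illustrative counts H = 143 and I = 5.
print(round(correct(144, 143), 1), round(accuracy(144, 143, 5), 1))  # 99.3 95.8
print(bol_correct([["dha-dha", "tu-na"], ["ta-ta", "tu-na"]],
                  [["dha-dha", "tu-na"], ["ta-ta", "tu-na", "tu-na"]]))  # 50.0
```

The illustrative counts were chosen so that the output matches the test-data C and A entries for penalty -10 in Table 3. The last line shows how a single inserted syllable makes a whole bol count as wrong for bolCorrect, which is why bolCorrect is much more sensitive to insertions than CORRECT.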

3 RESULTS AND DISCUSSION

Training the models requires not only training data but also correct transcriptions of that data in terms of the basic units of recognition. In addition, the transcriptions (the sequences of syllables) can be time-aligned with the acoustic waveform. When such alignments are available, the training algorithm knows the segment boundaries between consecutive syllables of a bol; this results in better training and higher recognition accuracy.

The training data were not only transcribed in terms of syllables but also manually time-aligned with the waveform. This enabled us to conduct two recognition experiments: one with models trained on segmented training data, and another without segment boundary information. The former system is expected to perform better, and its results are reported first; the performance of the latter system is reported towards the end of this section.
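The difference between the two training set-ups lies mainly in how the models are initialised. The sketch below contrasts the two, loosely mirroring HTK's HInit (uniform segmentation of labelled segments) and HCompV (flat start from global statistics); the paper does not say which HTK tools were used, and the function names and variance floor are assumptions.

```python
import numpy as np

VAR_FLOOR = 1e-3  # assumed variance floor

def uniform_segment_init(frames, n_states=6):
    """Initialise one syllable model from its hand-segmented frames:
    split the frames evenly across the states (uniform segmentation)
    and take each chunk's mean and variance."""
    frames = np.asarray(frames, dtype=float)
    if len(frames) < n_states:
        raise ValueError("need at least one frame per state")
    chunks = np.array_split(frames, n_states)
    means = np.stack([c.mean(axis=0) for c in chunks])
    variances = np.stack([c.var(axis=0) for c in chunks]) + VAR_FLOOR
    return means, variances

def flat_start_init(all_frames, n_states=6):
    """Without syllable boundaries: every state of every model starts from
    the global mean and variance of all training frames."""
    all_frames = np.asarray(all_frames, dtype=float)
    mu = all_frames.mean(axis=0)
    var = all_frames.var(axis=0) + VAR_FLOOR
    return np.tile(mu, (n_states, 1)), np.tile(var, (n_states, 1))

# Usage with 12 toy 2-D frames belonging to one syllable segment.
frames = np.arange(24.0).reshape(12, 2)
seg_means, _ = uniform_segment_init(frames)
flat_means, _ = flat_start_init(frames)
print(seg_means[0], flat_means[0])  # [1. 2.] [11. 12.]
```

With segment boundaries, each state starts close to the part of the syllable it will model; with a flat start, all states begin identical and re-estimation must discover the syllable structure on its own.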

The performance of the system in terms of CORRECT and ACCURACY on training and test data is shown in Table 2. The base system, which uses 12 MFCCs as features, correctly recognizes all the syllables of the training data, i.e., CORRECT = 100%. However, the output of the system also contains a few extra syllables (insertion errors), so the syllable recognition ACCURACY of the system is 88%. Note that CORRECT ignores extra syllables in the output, whereas ACCURACY counts insertions as errors, as defined in the previous section.

| Feature | Train C | Train A | Test C | Test A |
|---|---|---|---|---|
| MFCC | 100 | 88 | 95 | 62 |
| MFCC_D | 100 | 96 | 100 | 94 |
| MFCC_D_Z | 100 | 90 | 100 | 82 |
| MFCC_E_D_Z | 100 | 86 | 100 | 80 |

Table 2: Syllable recognition accuracies (in %) of the system for train and test data with different feature vectors. ACCURACY (A) takes insertion errors into account while CORRECT (C) does not. The suffixes _D, _E and _Z denote the use of delta coefficients, energy and cepstral mean normalization, respectively.

FEATURE VECTORS

Different feature vectors were used to characterize the syllables, and the performance of the corresponding systems was measured. The features are listed in the first column of Table 2 and the recognition accuracies in the subsequent columns; the suffixes _D, _E and _Z denote the use of delta coefficients, energy and cepstral mean normalization (CMN), respectively. As the second row of Table 2 shows, using delta coefficients (which represent the temporal variation of the spectrum) improves the ACCURACY for training data from 88% to 96%, and for test data from 62% to 94%. On the other hand, cepstral mean normalisation (MFCC_D_Z) reduces accuracy. This behaviour is understandable: CMN is designed to minimise the effect of channel and speaker variation on the performance of speaker-independent systems. The current system is trained and tested on the voice of a single person in one recording environment, so there is no such mismatch to compensate for. Removing the mean cepstrum therefore brings no benefit and evidently discards information useful to the recognizer, reducing recognition accuracy for both training and test data.
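The delta (_D) and CMN (_Z) processing discussed above can be sketched as follows. This is a minimal illustration with hypothetical function names, assuming the regression formula with a window of 2 frames and replication of the first and last frames described in the HTK Book; the exact configuration the authors used is not stated.

```python
import numpy as np

def deltas(c, window=2):
    """Regression-based time derivatives of a (T, D) feature matrix:
    d_t = sum_k k * (c[t+k] - c[t-k]) / (2 * sum_k k^2), k = 1..window,
    with the first and last frames repeated at the edges."""
    T = len(c)
    padded = np.pad(c, ((window, window), (0, 0)), mode="edge")
    denom = 2 * sum(k * k for k in range(1, window + 1))
    d = np.zeros_like(c, dtype=float)
    for k in range(1, window + 1):
        # padded[window + t] holds c[t], so these slices give c[t+k] and c[t-k].
        d += k * (padded[window + k:window + k + T] - padded[window - k:window - k + T])
    return d / denom

def cmn(c):
    """Cepstral mean normalisation: subtract the per-utterance mean."""
    return c - c.mean(axis=0, keepdims=True)

def mfcc_d(c, window=2):
    """Stack static coefficients and their deltas (the _D feature set)."""
    return np.hstack([c, deltas(c, window)])

# Usage: 100 frames of 12 hypothetical MFCCs -> 24-dimensional MFCC_D vectors.
mfcc = np.random.default_rng(0).normal(size=(100, 12))
print(mfcc_d(mfcc).shape)  # (100, 24)
```

The sketch also shows why CMN cannot help a single-speaker, single-channel system: it only subtracts a constant per utterance, which leaves the deltas unchanged and removes whatever the static mean contributed to discrimination.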

Adding the frame energy to the feature set (MFCC_E_D_Z) also reduces the performance of the system. The amplitude of different bols can vary considerably, so absolute energy is not a reliable feature, and adding it increased confusion among syllables.

INSERTION PENALTY

Table 2 shows that all the syllables are recognized correctly (CORRECT = 100%) whenever delta coefficients are employed, so there are no deletion or substitution errors. Insertion errors, on the other hand, are present, and the system can be tuned to reduce them. Insertion errors were lowest for the MFCC_D feature set. They can be reduced further by adding a penalty whenever the system adds a new word to the output transcription.
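The effect of such a penalty can be seen in a toy example. The sketch assumes that a hypothesis is scored as its acoustic log-likelihood plus the penalty once per recognized word, ignoring the language-model term; this is how a word insertion log probability acts in HTK's HVite (its -p option), but the paper does not describe the mechanism further, and the scores below are invented for illustration.

```python
def total_score(acoustic_loglik: float, n_words: int, penalty: float) -> float:
    """Acoustic log-likelihood plus a fixed log penalty for every word."""
    return acoustic_loglik + penalty * n_words

def best(hypotheses, penalty):
    """Return the (words, acoustic_loglik) pair with the highest total score."""
    return max(hypotheses, key=lambda h: total_score(h[1], len(h[0]), penalty))

# Hypothetical scores: the second hypothesis contains a spurious extra
# "tu-na" that improves the acoustic match by 12 log-likelihood units.
hypotheses = [
    (["dha-dha", "terekita", "dha-dha", "tu-na"], -1000.0),
    (["dha-dha", "terekita", "dha-dha", "tu-na", "tu-na"], -988.0),
]
for penalty in (0.0, -10.0, -30.0):
    print(penalty, len(best(hypotheses, penalty)[0]))
# prints: 0.0 5, then -10.0 5, then -30.0 4
```

A penalty that is too negative has the opposite effect: a genuine syllable whose acoustic gain is smaller than the penalty is dropped, which appears as a deletion error.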

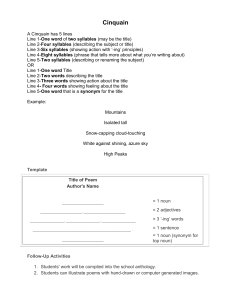

Train

Penalty B

Test

C

A

I

B

C

A

I

0

69.4

100

96.1

11

37.5

100

93.7

9

-10

86.1

100

98.2

5

50.0

99.3

95.8

5

-20

91.6

100

98.9

3

75.0

98.6

97.9

1

-30

100

100

100

0

75.0

98.6

97.9

1

-40

100

100

100

0

75.0

98.6

98.6

0

Table 3: Performance of the system on train and test data for different values of the insertion penalty, introduced to reduce insertion errors. B, C, A and I denote bolCorrect (% of bols recognized correctly), CORRECT (%), ACCURACY (%) and the number of insertion errors, respectively. The system uses 12 MFCCs and their time derivatives. Performance on the training data saturates at a penalty of about -30; test ACCURACY improves slightly further at -40.

We conducted experiments with different values of the insertion penalty using the best feature set (MFCC_D); the performance of the system for each value is shown in Table 3. The number of insertions decreases for both training and test data as the penalty becomes more negative. However, once the penalty reaches -10, CORRECT for the test data falls below 100%, i.e., deletion errors are introduced. With the penalty at about -30, ACCURACY reaches 100% for the training data and about 98% for the test data. We set the insertion penalty to -30, repeated the experiments with the various feature sets, and confirmed that MFCC_D is the best feature vector.

TRAINING DATA WITHOUT SEGMENT INFORMATION

As mentioned at the beginning of this section, models trained with information about the boundaries between syllables in the time waveform are expected to perform better than models trained without such timing information. The performance of the former system was described in the previous subsections. We now report the recognition accuracy of a system whose models were trained with only the transcriptions of the training data, i.e., timing information is ignored during training.

As expected, the recognition accuracy of this system, whose models are less well trained, is lower. With MFCC_D features and the insertion penalty set to -30, its syllable ACCURACY for training and test data is 80% and 94%, respectively, lower than the corresponding figures for the system trained on segmented data (100% and 98%). The ACCURACY improved to 83% and 96% for training and test data when the insertion penalty was made more negative, to -40.

NUMBER OF STATES PER HMM

Most syllables of tabla bols in the current database consist of about 4 phonemes. The HMM-based system described above uses 6 states per HMM, which amounts to only 1.5 states per phoneme on average; this may not be adequate. We therefore conducted experiments increasing the number of states per syllable model beyond 6 in steps of 1. The syllable recognition ACCURACY was highest (92% and 98% for training and test data, respectively) when the number of states per HMM was 10.

4 CONCLUSION

Experiments have shown that speech recognition techniques can be gainfully employed to implement a system that transcribes spoken tabla bols with good accuracy. The performance of the system could be improved further by using more training data and discriminative training. Automatic recognition of tabla bols could form the basis of a software tool for generating written tabla archives from spoken tabla compositions. Such archives can be used in musicology for studies of the form and structure of tabla compositions, and can serve as an aid in teaching tabla. Automatic recognition of tabla bols can also be used to retrieve user-specified tabla phrases from a large database of compositions.

5 REFERENCES

[1] Jae Hun Roh and Lynn Wilcox, "Exploring Tabla Drumming Using Rhythmic Input", CHI '95 Proceedings. http://www.acm.org/sigchi/chi95/proceedings/shortppr/rwx_bdy.htm

[2] Amit A. Chatwani, "Real-Time Recognition of Tabla Bols", May 2003. http://www.cs.princeton.edu/ugprojects/projects/a/achatwan/senior/IW.pdf

[3] Aniruddh D. Patel and John R. Iversen, "Acoustic and Perceptual Comparison of Speech and Drum Sounds in the North Indian Tabla Tradition: An Empirical Study of Sound Symbolism", Proc. 15th Int. Congress of Phonetic Sciences, Barcelona, 2003.

[4] S. Davis and P. Mermelstein, "Comparison of Parametric Representations for Monosyllabic Word Recognition in Continuously Spoken Sentences", IEEE Trans. on ASSP, vol. 28, pp. 357-366, 1980.

[5] L. R. Rabiner, "A Tutorial on Hidden Markov Models and Selected Applications in Speech Recognition", Proc. IEEE, vol. 77, pp. 257-286, 1989.

[6] The HMM Toolkit (HTK), http://htk.eng.cam.ac.uk/download.shtml