Rough Draft

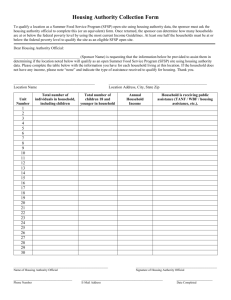


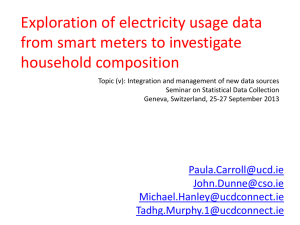

Imputation of Missing Values for Hierarchical Population Data via Data Mining and Machine Learning

Muhammad Aurangzeb Ahmad

Nupur Bhatnagar

Project Outline

1. Abstract
2. Introduction
   2.1 Motivation
   2.2 Problem Statement
3. Related Work
   3.1 Algorithm
   3.2 Drawbacks and Results
4. Introduction to Bayesian Networks
   4.1 Bayesian Theory
   4.2 Bayesian Networks
      4.2.1 Definition
      4.2.2 Algorithm
      4.2.3 Relevance to the project
5. Proposed Approach
   5.1 Data Analysis
   5.2 Data Reformatting
   5.3 Algorithms
6. Validation Framework
   6.1 Experimental Setup
   6.2 Metrics
   6.3 Evaluation
7. Conclusion and Future Work

Introduction

Motivation:

The census data used in this project are high-dimensional historical microdata from the United States censuses of 1850-1880, spanning four decennial censuses of the nineteenth century. The census questions for each of these years were written by different groups of people, so the questions evolved over this period. Consequently, some information is “missing” from the earlier datasets compared with the later ones, and the census data need to be harmonized across decades to ensure consistency among the different surveys. In particular, researchers at the Minnesota Population Center who perform trend analysis with the census data need imputed values for “significant” variables that were present in one survey year but absent in another.

In the current project our aim is to apply various techniques from machine learning to impute the missing variables. We seek to preserve the relationships among variables by building a probabilistic graphical model, using Bayesian belief networks, that can make use of prior knowledge to impute the missing values. Another approach is to use a constrained version of the Naïve Bayes algorithm for imputing the values. If these two approaches do not fare well, an alternative would be to use Conditional Random Fields and Maximum Margin Markov Networks; the use of these last two approaches is contingent upon the failure of the first two.

Problem Statement:

The United States census microdata consist of a large number of variables that were collected as responses to different population surveys. One such variable of interest is the RELATE variable, which describes an individual's relationship to the head of household (householder). From the 1880 United States census onwards, a question about the relationship of every person in the household to the head of the household was part of the census survey. However, for the three previous censuses (1850, 1860 and 1870) this variable was not part of the census survey, so from the point of view of a demographic researcher it is missing for those datasets. The variable is of significant importance because it is vital to researchers' trend analysis of household structure.

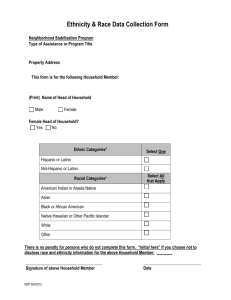

The relationship codes are divided into two categories: relatives (codes 1-10) and non-relatives (codes 11-13).

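| Category | Relationship | Code | 1850 | 1860 | 1870 | 1880 |
|---|---|---|---|---|---|---|
| Relatives | Head/Householder | 01 | Missing | Missing | Missing | Available |
| Relatives | Spouse | 02 | Missing | Missing | Missing | Available |
| Relatives | Child | 03 | Missing | Missing | Missing | Available |
| Relatives | Child-in-law | 04 | Missing | Missing | Missing | Available |
| Relatives | Parent | 05 | Missing | Missing | Missing | Available |
| Relatives | Parent-in-law | 06 | Missing | Missing | Missing | Available |
| Relatives | Sibling | 07 | Missing | Missing | Missing | Available |
| Relatives | Sibling-in-law | 08 | Missing | Missing | Missing | Available |
| Relatives | Grandchild | 09 | Missing | Missing | Missing | Available |
| Relatives | Other relatives | 10 | Missing | Missing | Missing | Available |
| Non-relatives | Partner, friend, visitor | 11 | Missing | Missing | Missing | Available |
| Non-relatives | Other nonrelatives | 12 | Missing | Missing | Missing | Available |
| Non-relatives | Institutional inmates | 13 | Missing | Missing | Missing | Available |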

The data are hierarchical census microdata; microdata refers to person-level information. The 1880 dataset comprises 100,000 person records, with about 200 different attributes describing the characteristics of each person.

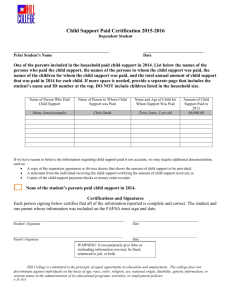

Given: Data Set

| Household_id (h_id) | Person_id (p_id) | Number_person (numper) | Age | Gender | Marital Status (MS) | Relate Code (RC) |
|---|---|---|---|---|---|---|
| 1 | 1 | 03 | 46 | M | Married | 01 |
| 1 | 2 | 03 | 40 | F | Married | 02 |
| 1 | 3 | 03 | 18 | F | Not Married | 03 |

Objective: To learn from the data and use it to impute the relationship codes of unclassified records.

| Household_id (h_id) | Person_id (p_id) | Number_person (numper) | Age | Gender | Marital Status (MS) | Relate Code (RC) |
|---|---|---|---|---|---|---|
| 1 | 1 | 03 | 46 | M | Married | ? |
| 1 | 2 | 03 | 40 | F | Married | ? |
| 1 | 3 | 03 | 18 | F | Not Married | ? |

Constraint: The intrinsic ordering of the persons in a household.

Related Work:

Minnesota Population Center

The Minnesota Population Center (MPC) is an interdisciplinary organization for demographic research at the University of Minnesota. The dataset under consideration is part of the IPUMS-USA project at the MPC. The following description of IPUMS, taken from the official website, should also make clear how the current project relates to the research goals of the MPC:

“The Integrated Public Use Microdata Series (IPUMS) consists of thirty-eight high-precision samples of the American population drawn from fifteen federal censuses and from the American Community Surveys of 2000-2004. Some of these samples have existed for years, and others were created specifically for this database. The thirty-eight samples, which draw on every surviving census from 1850-2000, collectively comprise our richest source of quantitative information on long-term changes in the American population. However, because different investigators created these samples at different times, they employed a wide variety of record layouts, coding schemes, and documentation. This has complicated efforts to use them to study change over time. The IPUMS assigns uniform codes across all the samples and brings relevant documentation into a coherent form to facilitate analysis of social and economic change.”

Data Sets:

The 1860 and 1870 data sets have missing relationship codes for entire households, and the task is to predict these codes. Some prior (unpublished) work on this imputation problem has been done at the MPC, with somewhat mediocre results from the point of view of the demographic researchers: the prediction accuracy is around 75% on the test set for the 1880 dataset. The following algorithm is used to compute the relationship code of each person with respect to the head of the household.

Approach:

The existing approach for imputing missing values is known as the “hot deck” allocation method. This technique searches the data file for a “donor” record that shares key characteristics with the record whose value is missing. Hot deck allocation assigns the value of the most proximate suitable donor; because the census files are organized geographically, the results reflect local and regional variations in characteristics.
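
Hot deck allocation can be illustrated with a minimal sketch of the classic sequential variant, assuming records are read in file order (which approximates geography) and that gender and marital status are the key characteristics. The field names and the optional `cold_deck` seed are hypothetical, and this is not the MPC code. The deck remembers the last donor value seen for each combination of key characteristics, so each recipient receives the value of the nearest preceding suitable donor.

```python
from typing import Dict, List, Optional, Tuple

# A record maps field names to values; "relate" is None when the code is missing.
Record = Dict[str, Optional[str]]


def sequential_hot_deck(records: List[Record],
                        keys: Tuple[str, ...] = ("gender", "marital"),
                        cold_deck: Optional[Dict[tuple, str]] = None) -> List[Record]:
    """Fill missing 'relate' values from the nearest preceding donor with matching keys.

    Records are scanned in file order, which in census files approximates
    geographic order. `cold_deck` (hypothetical) seeds values for key
    combinations that have not been seen yet.
    """
    deck: Dict[tuple, str] = dict(cold_deck or {})
    result: List[Record] = []
    for rec in records:
        key = tuple(rec[k] for k in keys)
        out = dict(rec)  # copy so the input records are not modified
        if rec["relate"] is None:
            # Recipient: take the value of the most recent suitable donor, if any.
            out["relate"] = deck.get(key)
        else:
            # Donor: update the deck so later recipients use this value.
            deck[key] = rec["relate"]
        result.append(out)
    return result


if __name__ == "__main__":
    rows = [
        {"gender": "M", "marital": "Married", "relate": "01"},
        {"gender": "F", "marital": "Married", "relate": "02"},
        {"gender": "F", "marital": "Married", "relate": None},
        {"gender": "M", "marital": "Not Married", "relate": None},
    ]
    for r in sequential_hot_deck(rows):
        print(r)
```

In this example the third record receives 02 from the preceding married female donor, while the fourth stays `None` because no earlier donor shares its characteristics and no cold deck was supplied.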

Algorithm:

In this algorithm, the 1880 dataset is treated as the “donor” file for imputing the relationship codes of the 1870 data.

1. The first pass substitutes missing values on the basis of simple, explicitly hard-coded rules. Almost 75% of the missing values are imputed in this pass.

2. In the second pass, a temporary donor table is created containing the characteristics (predictor variables) used to predict the missing relate code of a record.

3. In the third pass, the predictor variables of each record with a missing relate label are compared against the temporary donor table.

4. The process continues iteratively, comparing the characteristics of the donors with the predictor variables of the missing record and increasing the scores of the matching donors.
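
The passes above, together with the maximum-score donor selection, can be sketched as follows. This is a hypothetical reconstruction rather than the FORTRAN/Java code: the single first-pass rule, the predictor names in `PREDICTORS`, and the scoring (one point per matching predictor, earliest donor wins ties) are all illustrative assumptions.

```python
from typing import Dict, List, Optional

Record = Dict[str, object]

# Hypothetical predictor variables; the real MPC predictors are not listed in the text.
PREDICTORS = ("gender", "marital", "age_group", "position")


def first_pass(rec: Record) -> Optional[str]:
    """Pass 1: explicit hard-coded rules (a single illustrative rule)."""
    if rec["position"] == 1 and rec["gender"] == "M" and rec["marital"] == "Married":
        return "01"
    return None


def build_donor_table(donors: List[Record]) -> List[Record]:
    """Pass 2: temporary table holding each donor's predictors and relate code."""
    return [{k: d[k] for k in PREDICTORS + ("relate",)} for d in donors]


def best_donor_code(recipient: Record, table: List[Record]) -> Optional[str]:
    """Passes 3-4: score each donor by matching predictors; keep the highest score."""
    best_code, best_score = None, -1
    for donor in table:
        score = sum(donor[k] == recipient[k] for k in PREDICTORS)
        if score > best_score:  # on ties the earlier donor is kept
            best_code, best_score = donor["relate"], score
    return best_code


def impute(recipients: List[Record], donors: List[Record]) -> List[Optional[str]]:
    table = build_donor_table(donors)
    # Rules first; records the rules cannot code fall through to donor scoring.
    return [first_pass(r) or best_donor_code(r, table) for r in recipients]


if __name__ == "__main__":
    donors = [
        {"gender": "F", "marital": "Married", "age_group": "40s", "position": 2, "relate": "02"},
        {"gender": "F", "marital": "Not Married", "age_group": "10s", "position": 3, "relate": "03"},
    ]
    recipients = [
        {"gender": "M", "marital": "Married", "age_group": "40s", "position": 1},
        {"gender": "F", "marital": "Married", "age_group": "30s", "position": 2},
    ]
    print(impute(recipients, donors))  # ['01', '02']
```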

Result:

The donor with the maximum score is used to substitute the missing relate label of the recipient record. The overall prediction accuracy of this method is low. The code was originally written in FORTRAN and later converted into Java. The accuracy results are listed below:

| Imputed code | Number imputed | Percent correct |
|---|---|---|
| 01 | 101228 | 99.37% |
| 02 | 80256 | 98.6% |
| 03 | 244175 | 99.01% |
| 04 | 1822 | 79.04% |
| 05 | 3343 | 87.28% |
| 06 | 2123 | 87.58% |
| 07 | 5335 | 85.77% |
| 08 | 2566 | 89.59% |
| 09 | 6800 | 87.6% |
| 10 | 4449 | 79.56% |
| 11 | 0 | 0% |
| 12 | 37281 | 95.31% |
| 13 | 2289 | 87.83% |

Proposed Approach:

Constrained Naïve Bayes Approach:

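The Naïve Bayes algorithm can be described as follows. Let $X_h$ denote the $N_h$ training examples $x_i$ that belong to class $h$, and let $x_{ij}$ be the value of attribute $j$ in example $x_i$. The mean of attribute $j$ for class $h$ is

$$\mu_{h,j} = \frac{1}{N_h} \sum_{x_i \in X_h} x_{ij}$$

Similarly, the (maximum-likelihood) variance is

$$\sigma^2_{h,j} = \frac{1}{N_h} \sum_{x_i \in X_h} \left(x_{ij} - \mu_{h,j}\right)^2$$

The posterior probability of class $h$ for a new record $x = (x_1, \ldots, x_m)$ is then obtained from Bayes' rule, treating the attributes as conditionally independent given the class and modelling each with a Gaussian density:

$$P(h \mid x) = \frac{P(h) \prod_{j=1}^{m} p(x_j \mid h)}{P(x)}, \qquad p(x_j \mid h) = \frac{1}{\sqrt{2\pi\sigma^2_{h,j}}} \exp\left(-\frac{(x_j - \mu_{h,j})^2}{2\sigma^2_{h,j}}\right)$$

Because $P(x)$ is the same for every class, prediction is based on the numerator:

$$\hat{h} = \arg\max_{h} \; P(h) \prod_{j=1}^{m} p(x_j \mid h)$$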

The predicted class is the one with the highest value of this quantity. Although Naïve Bayes can be expected to perform reasonably well on the current dataset, there may be cases where it predicts an incorrect answer; for example, it may assign the “head of the household” label to more than one person in the same household. Thus a constraint has to be added to Naïve Bayes: once the label has been given to the person with the highest probability for it, the head-of-household label is not used again within that household.
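
A minimal sketch of the constraint, assuming a Naïve Bayes model has already produced a posterior distribution over relate codes for every person in a household (the probabilities in the example are invented). The head label 01 goes to the person with the highest posterior for it, and every other person receives their most probable remaining code. The function name and input layout are hypothetical.

```python
from typing import Dict, List

HEAD = "01"  # relate code for head of household


def constrained_labels(posteriors: List[Dict[str, float]]) -> List[str]:
    """Label one household so that exactly one person receives the head label.

    posteriors[i] maps relate codes to P(code | person i), as produced by a
    Naive Bayes model; each entry is assumed to contain at least one non-head code.
    """
    if not posteriors:
        return []
    # The head label goes to the person with the highest probability of being head.
    head_idx = max(range(len(posteriors)), key=lambda i: posteriors[i].get(HEAD, 0.0))
    labels = []
    for i, post in enumerate(posteriors):
        if i == head_idx:
            labels.append(HEAD)
        else:
            # The head label is used up, so choose the most probable remaining code.
            remaining = {code: p for code, p in post.items() if code != HEAD}
            labels.append(max(remaining, key=remaining.get))
    return labels


if __name__ == "__main__":
    household = [
        {"01": 0.6, "02": 0.3, "03": 0.1},
        {"01": 0.5, "02": 0.4, "03": 0.1},  # unconstrained argmax would also say head
        {"01": 0.1, "02": 0.1, "03": 0.8},
    ]
    print(constrained_labels(household))  # ['01', '02', '03']
```

This greedy rule mirrors the description above; choosing the labelling that maximizes the joint probability of the whole household would be an alternative design.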

Bayesian Network Approach:

Any census data set comprises variables that may or may not be dependent. The variables that the experts (demographic researchers and statisticians) have identified as most suitable for prediction are highly dependent on one another. To impute the missing value of an attribute, we therefore need a model that captures the dependencies among the variables. The model should be a probabilistic graphical model, capable of using prior knowledge to impute the missing values and of representing causal relationships. Based on a literature survey and our experience with data mining classifiers, we decided to learn our probabilistic data model as a Bayesian belief network.

In this project we will implement methods for learning both the parameters and the structure of a Bayesian network to impute the RELATE code on a per-person basis.

Data Preprocessing:

The data are hierarchical census microdata (person-level information), with around 100,000 person records and about 200 different attributes describing the characteristics of each person. With the help of the researchers and their domain knowledge, we have selected a subset of variables, known as predictor variables, that help us predict each person's relationship code with respect to the head of the household.

A snapshot of the initial data with a few variables is shown below. The combination of Household_id and Person_id uniquely identifies a person in a household. The order in which persons appear in the data set is very important: in the example below, the ordering and the relationship codes show that the first person is the head of the household, the second is the spouse of the head, and the third is a child of the head. The intrinsic ordering of the rows within a household therefore carries significant information.

Given: 1880 data set with relationship codes

| Household_id (h_id) | Person_id (p_id) | Number_person (numper) | Age | Gender | Marital Status (MS) | Relate Code (RC) |
|---|---|---|---|---|---|---|
| 1 | 1 | 03 | 46 | M | Married | 01 |
| 1 | 2 | 03 | 40 | F | Married | 02 |
| 1 | 3 | 03 | 18 | F | Not Married | 03 |

01: head of the household

02: spouse of the head

03: child of the head

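To Impute: Relationship codes of the 1850, 1860 and 1870 data sets

| Household_id (h_id) | Person_id (p_id) | Number_person (numper) | Age | Gender | Marital Status (MS) | Relate Code (RC) |
|---|---|---|---|---|---|---|
| 1 | 1 | 03 | 46 | M | Married | ? |
| 1 | 2 | 03 | 40 | F | Married | ? |
| 1 | 3 | 03 | 18 | F | Not Married | ? |

Reformatted household (one row per household):

| H_id | P_id | numper | Age_1 | Gender_1 | MS_1 | RC_1 | Age_2 | Gender_2 | MS_2 | RC_2 | Age_3 | Gender_3 | MS_3 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 03 | 46 | M | Married | 01 | 40 | F | Married | 02 | 18 | F | Not Married |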

We reformatted the data so that each household is captured in a single row containing the characteristics of every person in that household. For household_id 1, the attributes of all the persons in the household are flattened into a single row. Because a person's relationship within a household is not independent of the other people in the household, this reformatted representation gives us the advantage of capturing an entire household in one record.
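
The reformatting step can be sketched in Python as a pivot from person records to one row per household. The lower-case input field names (`h_id`, `p_id`, `age`, `gender`, `ms`, `rc`) are assumptions; the output columns follow the Age_1, Gender_1, MS_1, RC_1, ... layout used in the flattened table. The project itself performs this step in SQL Server 2005, so this is only an illustration.

```python
from itertools import groupby
from operator import itemgetter
from typing import Dict, List

# Per-person fields and the column prefixes used in the flattened layout.
COLUMNS = {"age": "Age", "gender": "Gender", "ms": "MS", "rc": "RC"}


def flatten_households(persons: List[Dict]) -> List[Dict]:
    """Return one row per household with Age_k, Gender_k, MS_k and RC_k for person k."""
    rows = []
    # Sorting by (h_id, p_id) keeps the intrinsic order of persons in each household.
    ordered = sorted(persons, key=itemgetter("h_id", "p_id"))
    for h_id, members in groupby(ordered, key=itemgetter("h_id")):
        members = list(members)
        row = {"H_id": h_id, "numper": len(members)}
        for k, person in enumerate(members, start=1):
            for field, prefix in COLUMNS.items():
                # Missing fields (e.g. an unknown relate code) become None.
                row[f"{prefix}_{k}"] = person.get(field)
        rows.append(row)
    return rows


if __name__ == "__main__":
    persons = [
        {"h_id": 1, "p_id": 2, "age": 40, "gender": "F", "ms": "Married", "rc": "02"},
        {"h_id": 1, "p_id": 1, "age": 46, "gender": "M", "ms": "Married", "rc": "01"},
        {"h_id": 1, "p_id": 3, "age": 18, "gender": "F", "ms": "Not Married", "rc": "03"},
    ]
    print(flatten_households(persons))
```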

Algorithm:

A Bayesian network for a set of variables $U = \{x_1, x_2, \ldots, x_n\}$ consists of:

1. A network structure S that encodes a set of conditional independence assertions about the variables in U.

2. A set P of local probability distributions associated with each variable.

Together these components define the joint probability distribution for U. The network structure S is a directed acyclic graph, and the joint probability is given by

$$P(U) = \prod_{u \in U} p(u \mid pa(u))$$

where pa(u) is the set of parents of u in the network. A Bayesian network therefore represents the joint probability distribution P(U) as a product of local distributions.
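
As a small worked example, consider a hypothetical three-node network in which RELATE is the only parent of both Gender and Marital Status. The joint probability is the product of each variable's probability given its parents, and normalizing that product over the RELATE values gives the posterior used for imputation. All probability values below are invented for illustration.

```python
from itertools import product
from typing import Dict

# Hypothetical CPTs for a network with arcs RELATE -> Gender and RELATE -> MS (numbers invented).
P_RELATE = {"01": 0.30, "02": 0.25, "03": 0.45}
P_GENDER = {"01": {"M": 0.90, "F": 0.10},
            "02": {"M": 0.05, "F": 0.95},
            "03": {"M": 0.50, "F": 0.50}}
P_MS = {"01": {"Married": 0.85, "Not Married": 0.15},
        "02": {"Married": 0.98, "Not Married": 0.02},
        "03": {"Married": 0.05, "Not Married": 0.95}}


def joint(relate: str, gender: str, ms: str) -> float:
    """Product of p(u | pa(u)): RELATE has no parents, Gender and MS have RELATE."""
    return P_RELATE[relate] * P_GENDER[relate][gender] * P_MS[relate][ms]


def posterior(gender: str, ms: str) -> Dict[str, float]:
    """P(RELATE | Gender, MS) by normalizing the joint over the RELATE values."""
    scores = {r: joint(r, gender, ms) for r in P_RELATE}
    total = sum(scores.values())
    return {r: s / total for r, s in scores.items()}


if __name__ == "__main__":
    # The joint sums to 1 over all value combinations.
    print(sum(joint(r, g, m) for r, g, m in product(P_RELATE, ["M", "F"], ["Married", "Not Married"])))
    post = posterior("F", "Married")
    print(max(post, key=post.get), round(post["02"], 3))
```

With these invented tables, a married woman is most probably a spouse (code 02, posterior about 0.86).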

Learning algorithms:

Learning a Bayesian network is done in two steps: first the network structure is learned, and then the probability tables are learned.

The structure-learning approaches we have used in our project are:

Local score metrics: Learning a network structure can be considered an optimization problem in which a quality measure of a network structure, given the training data, is maximized. The quality measure can be based on a Bayesian approach, minimum description length, information criteria, or other criteria. These metrics have the practical property that the score of the whole network can be decomposed as the sum (or product) of the scores of the individual nodes. This allows for local scoring and thus for local search methods.
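
To illustrate decomposability, the sketch below uses a BIC-style local score, one member of the minimum-description-length family: the log-likelihood of a node given its parents minus a penalty on the number of free parameters. The data and the two candidate structures are hypothetical. Because the network score is a plain sum over nodes, a local search that changes one node's parent set only has to rescore that node.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence

Row = Dict[str, str]


def local_bic(data: List[Row], node: str, parents: Sequence[str]) -> float:
    """BIC-style score of one node given its parents on fully observed discrete data."""
    n = len(data)
    parent_cfg = [tuple(row[p] for p in parents) for row in data]
    n_ijk = Counter(zip(parent_cfg, (row[node] for row in data)))  # (parent config, value)
    n_ij = Counter(parent_cfg)                                      # parent config alone
    loglik = sum(c * math.log(c / n_ij[cfg]) for (cfg, _), c in n_ijk.items())
    r = len({row[node] for row in data})                            # states of the node
    q = math.prod(len({row[p] for row in data}) for p in parents)   # parent configurations
    return loglik - 0.5 * math.log(n) * q * (r - 1)


def network_score(data: List[Row], structure: Dict[str, Sequence[str]]) -> float:
    """The network score decomposes into a sum of local node scores."""
    return sum(local_bic(data, node, parents) for node, parents in structure.items())


if __name__ == "__main__":
    data = ([{"relate": "01", "gender": "M"}] * 4 + [{"relate": "02", "gender": "F"}] * 4
            + [{"relate": "03", "gender": "M"}] * 2 + [{"relate": "03", "gender": "F"}] * 2)
    with_arc = {"relate": [], "gender": ["relate"]}
    no_arc = {"relate": [], "gender": []}
    print(network_score(data, with_arc), network_score(data, no_arc))
```

On this toy data the structure with an arc from RELATE to Gender scores higher, because the gain in log-likelihood outweighs the extra parameters.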

Conditional independence tests: These methods aim at uncovering causal structure. The assumption is that there is a network structure that exactly represents the independencies in the distribution that generated the data. It then follows that if a (conditional) independence between two variables can be identified in the data, there is no arrow between those two variables. Once the locations of the edges are identified, the direction of the edges is assigned such that the conditional independencies in the data are properly represented.
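
A simplified conditional independence check can be written with the empirical conditional mutual information I(X; Y | Z), which is zero exactly when X and Y are independent given Z in the sample. Constraint-based learners such as the PC algorithm use a statistical test (for example a chi-square or G-test) rather than the fixed threshold assumed here; the threshold, variable names, and data are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence

Row = Dict[str, str]


def cond_mutual_info(data: List[Row], x: str, y: str, z: Sequence[str] = ()) -> float:
    """Empirical conditional mutual information I(X; Y | Z) in nats."""
    def zval(row: Row) -> tuple:
        return tuple(row[c] for c in z)

    n = len(data)
    n_xyz = Counter((row[x], row[y], zval(row)) for row in data)
    n_xz = Counter((row[x], zval(row)) for row in data)
    n_yz = Counter((row[y], zval(row)) for row in data)
    n_z = Counter(zval(row) for row in data)
    # Sum of p(x,y,z) * log[p(z) p(x,y,z) / (p(x,z) p(y,z))], written with counts.
    return sum(c / n * math.log(c * n_z[zv] / (n_xz[(xv, zv)] * n_yz[(yv, zv)]))
               for (xv, yv, zv), c in n_xyz.items())


def independent(data: List[Row], x: str, y: str, z: Sequence[str] = (),
                threshold: float = 0.01) -> bool:
    """Hypothetical rule: X and Y are independent given Z when I(X; Y | Z) < threshold.
    A constraint-based learner would then leave out the edge between X and Y."""
    return cond_mutual_info(data, x, y, z) < threshold


if __name__ == "__main__":
    data = [
        {"relate": "01", "gender": "M", "ms": "Married"},
        {"relate": "01", "gender": "M", "ms": "Not Married"},
        {"relate": "01", "gender": "F", "ms": "Married"},
        {"relate": "01", "gender": "F", "ms": "Not Married"},
        {"relate": "02", "gender": "F", "ms": "Married"},
        {"relate": "02", "gender": "F", "ms": "Married"},
    ]
    print(independent(data, "gender", "ms"))              # False: dependent marginally
    print(independent(data, "gender", "ms", ["relate"]))  # True: independent given RELATE
```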

Global score metrics: An intuitive way to measure how well a Bayesian network performs on a given data set is to predict its future performance by estimating its classification accuracy, for example with cross-validation.
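
A global score can be sketched as leave-one-out classification accuracy: each record is held out in turn, the model is fit on the remaining records, and the fraction of held-out records predicted correctly is the score. To keep the sketch short, a hypothetical lookup table from feature values to the most frequent relate code stands in for a learned network; any classifier with the same fit-and-predict shape could be substituted.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Sequence

Row = Dict[str, str]


def fit_lookup(train: List[Row], features: Sequence[str]) -> Dict[tuple, str]:
    """Hypothetical stand-in classifier: most frequent relate code per feature combination."""
    counts: Dict[tuple, Counter] = defaultdict(Counter)
    for row in train:
        counts[tuple(row[f] for f in features)][row["relate"]] += 1
    return {key: c.most_common(1)[0][0] for key, c in counts.items()}


def loo_accuracy(data: List[Row], features: Sequence[str]) -> float:
    """Global score: leave-one-out classification accuracy (needs at least two records)."""
    correct = 0
    for i, row in enumerate(data):
        train = data[:i] + data[i + 1:]
        model = fit_lookup(train, features)
        # Unseen feature combinations fall back to the most common code in training.
        fallback = Counter(r["relate"] for r in train).most_common(1)[0][0]
        correct += model.get(tuple(row[f] for f in features), fallback) == row["relate"]
    return correct / len(data)


if __name__ == "__main__":
    data = ([{"gender": "M", "ms": "Married", "relate": "01"}] * 3
            + [{"gender": "F", "ms": "Married", "relate": "02"}] * 3
            + [{"gender": "F", "ms": "Not Married", "relate": "03"}] * 2)
    print(loo_accuracy(data, ["gender", "ms"]), loo_accuracy(data, ["gender"]))
```

On this toy data, using gender and marital status together scores higher than gender alone, which is how a global metric would prefer one candidate structure over another.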

We divided the entire data set into 13 networks, each representing the probability distribution of one relationship, so there is one network for each of the 13 available relationship codes. Within each network, we segregate the cases by the number of persons in the household. Then, for a particular relationship and a specific household structure, we define the probability distribution over the positions at which the person can be placed. A structure-learning algorithm is used to learn the underlying structure of a relationship with respect to the type of household and the position of the person in the household.

The figure below shows the distribution of two relationship codes (03 and 04) over households with three and four persons. We find the probability that an unclassified record falls into each relationship category by using the joint probability distribution of the person's characteristics together with those of the other persons in the household.

| Relationship | Numper | Possible arrangements within the household |
|---|---|---|
| 03 | 03 | 1P 03 3P; 1P 2P 03 |
| 03 | 04 | 1P 03 3P 4P; 1P 2P 03 4P; 1P 2P 3P 03 |
| 04 | 03 | 1P 04 3P; 1P 2P 04 |
| 04 | 04 | 1P 04 3P 4P; 1P 2P 04 4P; 1P 2P 3P 04 |

Numper: number of persons in a household; iP: the person at position i.

Fig: Distribution of a relationship code with respect to the structure of the household and the position of the person in the household.
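
The positional distribution in the figure can be estimated from classified 1880 records by counting, for each relationship code and household size, how often a person with that code appears at each position. A minimal sketch, assuming hypothetical fields `rc`, `numper` (as an integer), and `position` (the person's rank within the household):

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


def position_distribution(persons: List[Dict]) -> Dict[Tuple[str, int], Dict[int, float]]:
    """Estimate P(position | relate code, numper) from classified records."""
    counts: Dict[Tuple[str, int], Counter] = defaultdict(Counter)
    for p in persons:
        counts[(p["rc"], p["numper"])][p["position"]] += 1
    dist = {}
    for key, c in counts.items():
        total = sum(c.values())
        dist[key] = {pos: n / total for pos, n in sorted(c.items())}
    return dist


if __name__ == "__main__":
    persons = [  # two three-person households: head, spouse, child and head, child, child
        {"rc": "01", "numper": 3, "position": 1},
        {"rc": "02", "numper": 3, "position": 2},
        {"rc": "03", "numper": 3, "position": 3},
        {"rc": "01", "numper": 3, "position": 1},
        {"rc": "03", "numper": 3, "position": 2},
        {"rc": "03", "numper": 3, "position": 3},
    ]
    print(position_distribution(persons)[("03", 3)])
```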

Key Assumption:

A Bayesian network is a directed acyclic graph in which nodes represent variables, arcs represent probabilistic dependency relations among the variables, and each variable has a local probability distribution given the values of its parents. The independence assumptions between variables are made explicit by the graph.

For the constrained Naïve Bayes, the main assumption is that the variables are independent of one another given the class.

Validation:

We are using experimental validation as well as expert evaluation by domain researchers to validate our results. We are creating test data sets by reformatting the data with SQL Server 2005, and we use the Bayesian classifiers in Weka as well as Microsoft Bayesian Network to add and modify Bayesian networks. Weka implements its Bayesian classifiers in Java, and we are adding our own networks, which we expect to handle the imputation more accurately.
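
For the experimental validation, relationship codes in the 1880 data can be hidden, imputed, and compared with the true values. The sketch below reports the number imputed and the percentage correct for each imputed code, in the same form as the hot deck results listed earlier; it assumes that “percent correct” is measured over the records that received a given imputed code.

```python
from collections import Counter
from typing import Dict, List, Tuple


def per_code_accuracy(truth: List[str], imputed: List[str]) -> Dict[str, Tuple[int, float]]:
    """For each imputed code: (number imputed, percent of those matching the hidden true code)."""
    if len(truth) != len(imputed):
        raise ValueError("truth and imputed must have the same length")
    n_imputed = Counter(imputed)
    n_correct = Counter(p for t, p in zip(truth, imputed) if t == p)
    return {code: (n, 100.0 * n_correct[code] / n) for code, n in sorted(n_imputed.items())}


if __name__ == "__main__":
    truth = ["01", "02", "03", "03"]    # codes hidden from the 1880 test records
    imputed = ["01", "02", "03", "02"]  # codes produced by a model
    for code, (n, pct) in per_code_accuracy(truth, imputed).items():
        print(f"Number of imputed {code}: {n}, percent correct: {pct:.2f}%")
```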

Future Work:

If our approach is successful in imputing the general versions of the relationships, we will extend it to the detailed versions. The general version only identifies a child in the family; the detailed version would identify whether the child is an adopted child or a stepchild. In the same way, all thirteen relationship codes have detailed versions.

| Relatives | Code |
|---|---|
| Head/Householder | 01 |
| Spouse | 201, 202 |
| Child | 301 |
| Adopted Child | 302 |
| Stepchild | 303 |

1850

Missing

Missing

1860

Missing

Missing

1870

Missing

Missing

1880

Available

Available

Missing

Missing

Missing

Available