Assessing Mineral Status

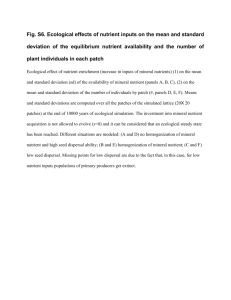

Assessing mineral status is tantamount to determining how much of a mineral in our diet is too little, too much or just the right amount. Picture yourself as a medical doctor who must render a decision for a person suspected of having a mineral deficiency. What do you need to know in order to make a decision? This has been a fundamental issue in medicine ever since it was realized that pathologies develop when nutrient intake is inadequate. Your first impulse is to suggest a test. A test of what? The blood? The urine? The tissues? Keep in mind that this is a live patient you are dealing with and that you are bound by medical ethics to find a cause for the ailment. Only once that is done, and you are sure of the results, can you begin treatment to fulfill the other half of your ethical contract, the cure.

FUNCTION ASSAYS

In mineral nutrition (and other areas of nutrition as well) many of the decisions about nutrient adequacy are based on a function assay. The function assay is a quantitative test that measures the response of the system to the mineral’s input. Sometimes a simple biochemical assay is all that is needed to detect the mineral’s presence or absence. Other times, it is a physical symptom that reflects a deviation from normal functioning. Low bone density, for example, could be a sign of inadequate calcium, and anemia could indicate insufficient iron in the diet. It is referred to as a “function” assay because decisions are based on a function performed within the organism, not on the amount taken in the diet.

Because other minerals or extraneous factors can also elicit any given response, function assays can be misleading. This happens when two minerals are required to fulfill a function, such as copper being required to mobilize iron, which in turn can correct an anemia. An absence of either copper or iron could be the cause of the anemic condition. Hormones or cytokines could also influence the response.
For example, serum ceruloplasmin, a copper protein that is used to assess copper status in humans, is strongly influenced by estrogen and other hormones. Unfortunately, ceruloplasmin is also an acute phase protein and as such is subject to many physiological signals of stress. Concluding that ceruloplasmin levels are a reliable measurement of copper intake is tenuous at best and risks serious errors in interpretation.

BIOMARKERS

Related to function assays are biomarkers. By definition, a biomarker is a measurable biochemical or physiological signal elicited by some factor in the diet. At their simplest, biomarkers could merely be signs of a deficient state. The importance of a biomarker in making a crucial decision in nutrition cannot be overstated, but there are caveats. To be valid and reliable, a biomarker must be both sensitive and specific for the eliciting agent. In addition, measurements with the biomarker must be repeatable and must not change with the time of day the readings were taken, the age and gender of the individual, or other health issues afflicting the individual. Moreover, any observed differences must correlate strictly with changes in the mineral’s exposure. This is tantamount to saying that an ideal biomarker must be activated by very low concentrations of the mineral and must accurately discriminate between the mineral in question and other minerals or mitigating factors. Finally, the magnitude of the response must clearly relate to the quantity of mineral.

The advantages of a biomarker are numerous. For one, the biomarker can be tested with instrumentation common to a clinical laboratory. A change in a biomarker level could be the first clinical sign of abnormal mineral status. Most observed changes, in turn, are closely associated with bioavailability. It is comforting to know that a decision based on a biomarker is not simply based on knowing what was in the food source, an approach that ignores the organism’s role entirely.
Thus, biomarkers more accurately reflect what is happening in the internal milieu. This obviates concerns for absorption and transport in the assessment. Biomarkers can relate to a biochemical impairment that leads to pathology. For example, a selenium-dependent enzyme is required to synthesize the thyroid hormone T3 from T4. Either the enzyme or T3 in the plasma could be a biomarker for selenium requirement and a window to whether the current amount is adequate or below average.

The disadvantage of biomarkers is their sometimes dubious link to the nutrient under study. Iron intake, for example, is judged adequate based on blood hemoglobin concentration or blood cell count. Both fall below their steady-state levels when intake is low. Hemoglobin synthesis depends on iron, but, as noted previously, iron bioavailability is subject to other factors. Copper is required to mobilize iron via the plasma copper protein ceruloplasmin, which oxidizes iron to its ferric form so that it can bind to transferrin.

Another biomarker for iron is serum transferrin itself. This plasma protein has two binding sites for iron, which take part in iron delivery to the cells. Transferrin saturation occurs when both binding sites are filled with iron atoms. Typically, however, most transferrin molecules have only one iron bound or none at all. A high saturation, therefore, is a sign of good iron status. Unfortunately, transferrin saturation can vary depending on the time of day. Saturation tends to be low at night, whereas early morning readings are much higher. This time dependence of transferrin saturation is believed to be the reason for between-laboratory discrepancies. Finally, one must consider that a physiological biomarker of low iron, outward signs of anemia, is itself subject to other factors such as a low intake of folate and B12 as well as copper.
The problems encountered in defining iron status should alert the student to the difficulties of finding ideal biomarkers of a nutrient mineral.

TABLE: Examples of Biomarkers of Mineral Status

| Mineral | Marker | Physiological System |
| --- | --- | --- |
| Calcium | blood calcium | bone turnover or resorption |
| Magnesium | blood and urine levels | bone structure, energy |
| Sodium | blood and urine levels | electrolyte balance |
| Potassium | blood and urine levels | electrolyte balance |
| Phosphorus | blood and urine levels | bone structure |
| Chloride | blood and urine levels | electrolyte balance |
| Iron | blood hemoglobin, transferrin saturation, ferritin | oxygen transport, energy |
| Copper | plasma ceruloplasmin | energy, pigment, antioxidant |
| Zinc | plasma zinc, zinc enzymes | growth and development |
| Manganese | manganese enzymes | antioxidant |
| Selenium | selenium enzymes | antioxidant |
| Vanadium | none | uncertain |
| Molybdenum | molybdenum enzymes | nucleic acid metabolism |
| Chromium | none | glucose tolerance |
| Iodine | T3, T4 | thyroid hormone |

In the table above note how the biomarkers for many of the minerals are similar. A deficiency in sodium or potassium is generally not a nutritional concern. Indeed, for sodium, it is excess that is to be avoided. Sodium, potassium, magnesium and calcium can usually be detected by a simple blood test or measurement in the urine. On the other hand, micronutrients, which include most of the minerals listed in the table, may be present in amounts too small to assess by a direct chemical analysis. Because copper, zinc, manganese, cobalt, etc. are in micromolar quantities in bodily fluids, a direct analysis would require samples taken over a 24 hour period in order to obtain measurable quantities. Thus, it is better to base the status of these minerals on a function analysis, which means measuring an enzyme system or physiological process that requires the mineral’s presence. Both copper and zinc are cofactors for numerous enzymes, and these enzymes can be readily measured.
In experimental nutrition the ebb and flow of enzyme activity is a window into the effectiveness of a diet designed to maintain a steady state and avoid a nutritional deficiency. These same enzymes can be biomarkers of adequacy or inadequacy when a nutritional deficiency is suspected, which is common in a clinical setting.

ASSESSING ADEQUACY, RISK AND TOXICITY

With the above tools in hand, it is now possible to define a range of intake of a certain mineral (or any nutrient) that can meet the daily requirement. There are two concerns in the assessment: risk of deficit and risk of excess. First, a biomarker must be selected that will set the criteria for the decisions. Next, one seeks the “estimated average requirement” or EAR, which can be defined as the nutrient intake level that meets the requirement of 50% of a “healthy” population in a particular age (or gender) group. Once an EAR is set, the recommended nutrient intake (RNI) can be determined. The RNI (or RDA) is the daily intake that lies two standard deviations above the EAR, which in a normal Gaussian distribution is the level that satisfies the needs of about 97.5% of the population. This means that an RNI or RDA, when followed, leaves at most about 3% of the population at risk of a deficiency. In addition, one must always be mindful of the “upper limit” or UL, which looks at the opposite extreme, i.e., the limit of safe consumption before crossing into the danger (toxic) zone.

Risk of Excess

Together, risk of insufficiency and risk of excess are the focus of a recommendation. There is a different vocabulary of acronyms associated with the latter, however. In this instance one is concerned with adverse signs, more specifically the highest intake level at which none are seen, the “no observed adverse effect level” (NOAEL). Concomitant to the NOAEL is the LOAEL or “lowest observed adverse effect level”, which refers to the point at which adverse signs are first noticed. Most of these terms are shown in the figure below.
The figure above is taken from the Food and Nutrition Board. EAR is the estimated daily intake that meets the requirement of half a population of apparently healthy individuals, whereas RDA (RNI) is estimated to meet the requirement of 97-98 percent. Thus, RNI/RDA when heeded presents a risk of inadequacy of only 2 to 3 percent, which is deemed acceptable. Remember, setting an RNI/RDA depends on setting an EAR first. In essence the 50th percentile must be defined to allow the 97-98th percentile to be calculated. Recall that in statistics the standard deviation is determined after the mean, because the standard deviation represents the deviation from the mean.

The uniqueness of the UL is sometimes overlooked. In essence the UL defines the point where both the risk of inadequacy and the risk of excess are zero. That is comforting to know for an individual, but for a manufacturer who is adding a nutritional supplement to thousands of cartons of a product, putting in more than is needed could be a strong financial burden.

To appreciate how these terms translate in the real world into meaningful recommendations and assessments for pregnant women, read the abstract from an article that appeared in the Journal of the American Dietetic Association, 2003, titled “Comparing Nutrient Intake from Food to the Estimated Average Requirement Shows Middle to Upper Income Pregnant Women Lack Iron and Possibly Magnesium”.

OBJECTIVE: To determine whether nutrient intake from food alone was adequate across trimesters for middle- to upper-income pregnant women when compared with estimated average requirements (EAR), and to determine whether food intake exceeded the tolerable upper intake level (UL) for any nutrient.

DESIGN: Observational study in which pregnant women completed 3-day diet records each month during their pregnancy. Records were analyzed for nutrient content, and usual intake distributions were determined.
SUBJECTS/SETTING: Subjects were low-risk women in their first trimester of pregnancy (living in middle- to upper-income households). Ninety-four women were recruited, and sixty-three participated.

STATISTICAL ANALYSIS PERFORMED: Nutrient intake data were adjusted to achieve normality by using a power transformation. A mixed model method was used to assess trends in intake over time, and to estimate mean intake and within-subjects and between-subjects variance. The usual intake distribution for each nutrient was determined and compared with the EAR and UL.

RESULTS: The probabilities of usual nutrient intake from food being less than the EAR were highest for iron (.91), magnesium (.53), zinc (.31), vitamin B6 (.21), selenium (.20), and vitamin C (.12). Women were not at risk of exceeding the UL from food intake for any nutrient studied.

APPLICATIONS/CONCLUSIONS: Study participants did not consume adequate amounts of iron from food to meet the needs of pregnancy, and therefore iron supplementation is warranted in this population. Intake of magnesium was suboptimal using the EAR as a cut-point for adequacy.

Upon reading the abstract, you should be able to answer the following questions.

1. What nutrients were being evaluated?
2. What biomarker was being used to make the evaluation?
3. How was the test performed?
4. What was the purpose of the 3-day diet record?
5. Based on the results what advice would be most pertinent for the subjects in the study?
6. How would you criticize these data as being relevant to the health status of pregnant women?

Write down your own answers before you read what’s below.

1. The investigators chose four elements (Fe, Mg, Zn, Se) and two vitamins (B6, pyridoxine, and C, ascorbate) to evaluate. Their supposition was that one or more of these critical markers may suffice to answer the question of whether the diets of these pregnant women were adequate.
The main concern is whether women deemed low risk based on economic status were still at risk of a deficiency in key nutrients during pregnancy.
2. None. They used a 3-day diet record in which the subjects were told to keep track of what they ate during their pregnancy. Knowing these food items and their amounts allowed the investigators to determine the intake of the marker nutrients.
3. By asking subjects to record what they ate over a 3-day period each month. This was followed by using a standard set of reference tables that gave the quantity of each nutrient in the food items.
4. This was the raw data that was tabulated in order to make the evaluations and render conclusions.
5. There was evidence that the intake of iron and magnesium was likely to be below the estimated average requirement. The conclusion was based on the probability of intake being below the EAR, the intake at which the risk of a deficiency is 50 percent. Although the probability was 91% for iron and 53% for magnesium (roughly 9 out of 10 and 5 out of 10 for the two minerals, respectively), there was also clear evidence that zinc and selenium could be factors as well.
6. Keep in mind that a diet record refers only to the levels of individual nutrients in the food source. The data say nothing about nutrient interactions that may affect bioavailability. Likewise, they do not account for individual variations between subjects, such as the fact that some may absorb more even though less is provided, i.e., the tendency of the system to adapt to a low intake, and they say nothing of what is happening internally in these women. These are limitations of the study that need to be addressed before advice for higher intake is warranted.
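
The arithmetic behind the EAR, the RDA (RNI) and the "probability of intake below the EAR" can be sketched in a few lines of Python. This is a minimal illustration, not the method used in the study: it assumes requirements are normally distributed around the EAR, it uses the simple EAR cut-point idea of counting usual intakes that fall below the EAR, and it skips the power transformation and mixed model described in the abstract. The function names and all numbers are hypothetical and are in arbitrary units, not values for any real nutrient.

```python
from statistics import NormalDist


def rda_from_ear(ear: float, sd: float) -> float:
    """Return the RDA (RNI): the EAR plus two standard deviations of the requirement."""
    return ear + 2.0 * sd


def fraction_covered(intake: float, ear: float, sd: float) -> float:
    """Fraction of the population whose requirement is at or below `intake`.

    Assumes requirements follow a normal distribution centered on the EAR.
    """
    return NormalDist(mu=ear, sigma=sd).cdf(intake)


def fraction_below_ear(usual_intakes: list[float], ear: float) -> float:
    """EAR cut-point estimate: share of individuals whose usual intake is below the EAR."""
    if not usual_intakes:
        raise ValueError("usual_intakes must not be empty")
    return sum(1 for x in usual_intakes if x < ear) / len(usual_intakes)


# Hypothetical requirement distribution (arbitrary units, illustration only).
EAR, SD = 100.0, 10.0
rda = rda_from_ear(EAR, SD)
print(f"RDA: {rda}")
print(f"Fraction covered at the EAR: {fraction_covered(EAR, EAR, SD):.3f}")
print(f"Fraction covered at the RDA: {fraction_covered(rda, EAR, SD):.3f}")

# Hypothetical usual intakes for five individuals (illustration only).
intakes = [80.0, 90.0, 95.0, 105.0, 110.0]
print(f"Fraction with intake below the EAR: {fraction_below_ear(intakes, EAR):.2f}")
```

Under the normal assumption, an intake equal to the EAR covers half the population, and an intake two standard deviations higher covers roughly 97 to 98 percent, which is why the EAR must be fixed before the RDA can be computed.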