Final Report


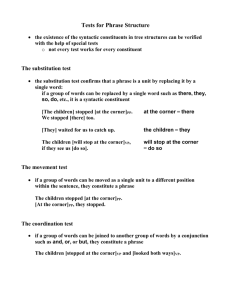

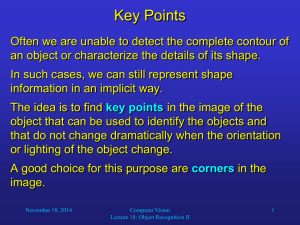

Abstract

The goal of this project is to design and implement a playing card recognizer. This is accomplished with a template-matching character recognition program that runs on a host PC together with a Texas Instruments (TI) Evaluation Module (EVM) board based on the TMS320C6701 DSP chip. The system recognizes a constrained set of playing cards with 62% accuracy at a rate of 10 frames per second.

1. Introduction

This project was originally intended as part of an autonomous poker-playing system. That system would consist of a card recognition component, in which a video camera feeds a character recognition program running on a DSP chip, and an artificial intelligence program that makes decisions based on the recognition output. Due to time and hardware constraints, we have limited the scope of this project to a program that takes black-and-white playing card images stored on a PC and determines the number of the card.

Image recognition is a problem encountered in many applications, and many different methods have been developed for it. We use a template-matching approach in our recognition program because of its simplicity. This approach is discussed in the next section. The details of the implementation are described in Section 3, and the results are presented in Section 4.

2. Theory of Operation

2.1 Design

This project focuses on a subset of a standard 52-card deck. The card images are taken in full color with an HP 315 digital camera at a resolution of 640 by 480 pixels. Each image is taken against a solid black background from a fixed distance, with the card at a random rotation. The processing steps are summarized in Figure 1. First, the gray-scale intensity of each pixel is computed as the scalar product of its RGB value with the intensity (Y) vector of the YUV representation. Next, the mean and variance of the gray-scale values are computed, and a binary threshold is applied at two standard deviations above the mean.
The remaining foreground blobs are labeled; the largest eight-connected blob is taken to be the playing card, and the smaller blobs are flood filled. Using moment calculations, the major axis of the circumscribing ellipse is computed and used to rotate the card to a standard position, with nearest-pixel interpolation during the rotation. Once the card is in the standard position, the top-left corner of the card is correlated with the 180-degree rotation of the bottom-right corner to ensure that the correct information (the number and suit identifiers) has been isolated. Finally, template matching is performed with a normalized two-dimensional cross-correlation to identify the suit and card number.

Figure 1. Card recognition flow chart: high-resolution color image → down sample and convert to binary → label connected objects and flood fill card area → use major axis to find corners for scaling and rotation → extract corner for comparison → binary image of card corner → cross-correlate with templates → compute scores and compare → card value and suit.

3. Implementation

3.1 Overview

The current implementation recognizes the card number from a constrained set of card images stored on the host. The images must be black-and-white, with the card in a fixed rotation; the card can be of any size. As described in Section 2, recognition involves several steps. The position of the playing card in the image must first be found and the corner containing the card number extracted (Section 3.4). The corner is then scaled to the size of the templates using bicubic spline interpolation (Section 3.5). Finally, the resized corner is compared against the templates using normalized cross-correlation (Section 3.6).

3.2 Program Structure

The card recognition program consists of two cooperating processes running on separate hardware, as illustrated in Figure 2.

Figure 2. Implementation diagram: on the host, (1) corner extraction from the image file, (2) scaling, and (5) user interface; on the EVM, (3) cross-correlation with the templates and (4) card identification; the host and the EVM communicate through RTDX (Real-Time Data Exchange).

One process runs on the host, a personal computer with a Pentium III microprocessor, and is responsible for the user interface, corner extraction, and image scaling. The other executes on a TI EVM board with a TMS320C6701 DSP and is responsible for the correlation calculation and card identification. This chip was chosen because the program uses floating-point arithmetic, which the C6701 supports in hardware.

The current implementation processes the images one at a time and one step at a time; no synchronization or buffering mechanism is used. This is inefficient, because the program is divided into several steps that require different amounts of computation, and the host and the EVM wait on each other between steps. Throughput could be improved significantly by pipelining the program, which would require adding buffers and synchronization mechanisms between the steps.

In dividing the program between the host and the DSP chip, we tried to match the capabilities of the hardware to the requirements of each step. Corner extraction tests pixel values iteratively, with if statements inside for loops. Because the DSP chip is not well suited to this kind of branch-heavy control structure, this step is done on the host. Performing corner extraction on the host also avoids the large I/O overhead of transferring whole images to the EVM board in real time. Scaling is also done on the host. Although bicubic interpolation is essentially a filtering operation suited to the DSP chip, scaling the images to a standard size before sending them simplifies the data transfer to the EVM. The cross-correlation is computed on the EVM because it consists of many multiplications and additions, both of which the DSP chip handles well.
3.3 Data Format

Each image is stored and processed as a two-dimensional array of 8-bit characters, with each character representing one pixel. There are two reasons for this choice of data unit. First, a character is the smallest unit of data that can be easily manipulated on both the host and the DSP chip. Second, using the smallest possible unit minimizes the amount of data that must be transferred between the PC and the EVM board; with 16-bit integers, the data size would double.

3.4 Corner Extraction

Corner extraction is performed by the GetCorner function, whose purpose is to extract the identifying character in the corner of a playing card. The function takes a black-and-white image of a playing card on a black background. The image data is read from file into memory with the C function fread and stored in a variable called cardImg. All cards used in the project have a standard size of ? X ?. cardImg is passed to GetCorner, which returns the extracted corner as its output. This is achieved in three steps.

The first step is to locate the card on the black background. The FindCorner function starts at the top-left corner of cardImg and searches through the rows for the first non-zero pixel; this row is taken as the top of the card. Starting from the same top-left position but searching through the columns identifies the left edge of the card. The same process, repeated from the bottom-right corner, identifies the bottom and right edges of the card.

Once the card has been located, the corner is extracted in the second step. ExtractCorner copies out of cardImg a block of pixels that extends 1/6 of the card height down from the top and 1/8 of the card width in from the left. Because the card is not perfectly aligned in the image, the extracted corner still contains extra zeros along its top and left edges.
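The first two steps can be sketched in C as below. This is an illustration under stated assumptions rather than the report's FindCorner and ExtractCorner: it assumes a row-major binary image in which card pixels are non-zero, and the names Box, find_card_bounds, and extract_corner are hypothetical. The 1/6 and 1/8 fractions are the ones given above.

```c
#include <string.h>

/* Hypothetical bounding box of the card, in pixel coordinates (inclusive). */
typedef struct { int top, bottom, left, right; } Box;

static int row_has_ink(const unsigned char *img, int w, int r) {
    for (int c = 0; c < w; c++)
        if (img[r * w + c]) return 1;
    return 0;
}

static int col_has_ink(const unsigned char *img, int h, int w, int c) {
    for (int r = 0; r < h; r++)
        if (img[r * w + c]) return 1;
    return 0;
}

/* Step 1: scan rows and columns from the top-left and bottom-right corners
   for the first non-zero pixel. Returns 0 on success, -1 for a blank image. */
int find_card_bounds(const unsigned char *img, int h, int w, Box *b) {
    int r = 0, c = 0;
    while (r < h && !row_has_ink(img, w, r)) r++;
    if (r == h) return -1;                     /* no card pixels at all */
    b->top = r;
    r = h - 1;
    while (!row_has_ink(img, w, r)) r--;       /* stops at b->top at the latest */
    b->bottom = r;
    while (!col_has_ink(img, h, w, c)) c++;    /* some column is known to have ink */
    b->left = c;
    c = w - 1;
    while (!col_has_ink(img, h, w, c)) c--;
    b->right = c;
    return 0;
}

/* Step 2: copy the block covering 1/6 of the card height and 1/8 of its
   width, starting at the card's top-left corner. out must hold at least
   (card height / 6) * (card width / 8) bytes; the size is returned in *ch, *cw. */
void extract_corner(const unsigned char *img, int w, const Box *b,
                    unsigned char *out, int *ch, int *cw) {
    *ch = (b->bottom - b->top + 1) / 6;
    *cw = (b->right - b->left + 1) / 8;
    for (int r = 0; r < *ch; r++)
        memcpy(out + (size_t)r * *cw,
               img + (size_t)(b->top + r) * w + b->left, (size_t)*cw);
}
```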
These are removed in the third and final step: FillEdges traverses the top and left edges of the corner and sets the zero-valued pixels there to one. The final output image is stored in the variable outImg, and its data is written to a file with the C function fwrite.

3.5 Resizing Images with Bicubic-Spline Interpolation

To obtain the most accurate normalized cross-correlation value, the characters in the template and in the input image must have as nearly the same height and width as possible. There are many interpolation methods for resizing images; bicubic spline interpolation gives smoother results than the simpler nearest-neighbor and bilinear methods. Essentially, a continuous coordinate system (x, y) is defined over a region whose corners are four pixels with discrete coordinates (i, j) in the input image (Figure 3). A pixel value is calculated at the continuous coordinates (x, y) and then mapped to the discrete coordinates (k, m) of the output image.

Figure 3. Coordinate mapping illustration

The bicubic spline algorithm uses the interpolation polynomials of Eq. 1 in both the horizontal and vertical directions, where a is a free kernel parameter:

$$
\begin{aligned}
C_0(t) &= -a t^3 + a t^2 \\
C_1(t) &= -(a+2)\,t^3 + (2a+3)\,t^2 - a t \\
C_2(t) &= (a+2)\,t^3 - (a+3)\,t^2 + 1 \\
C_3(t) &= a t^3 - 2a t^2 + a t
\end{aligned}
\tag{1}
$$

These polynomials serve as weights for the input pixel values. Their argument is derived from the magnification factor and the position of the continuous coordinate within the input image; that is, it depends on the magnification factor and on the coordinates of the four corner pixels. Let the input image be denoted $f_{i,j}$, the intermediate continuous value $F(x,y)$, and the output image $g_{k,m}$. For $(x,y) \in [i, i+1) \times [j, j+1)$, the horizontal spline is defined as

$$H_j(x) = f_{i,j}\,C_3(x-i) + f_{i+1,j}\,C_2(x-i) + f_{i+2,j}\,C_1(x-i) + f_{i+3,j}\,C_0(x-i) \tag{2}$$
and the intermediate image point is

$$F(x,y) = H_j(x)\,C_3(y-j) + H_{j+1}(x)\,C_2(y-j) + H_{j+2}(x)\,C_1(y-j) + H_{j+3}(x)\,C_0(y-j) \tag{3}$$

Finally, the output image is

$$g_{k,m} = F(x,y), \qquad x = k/u, \quad y = m/v \tag{4}$$

where the vertical and horizontal magnification factors are $u = \text{height}_{\text{out}}/\text{height}_{\text{in}}$ and $v = \text{width}_{\text{out}}/\text{width}_{\text{in}}$, respectively.

An efficient method of performing this calculation over the entire input image is described in [2]. The way the coordinates are related, from $f_{i,j}$ to $F(x,y)$ and from $F(x,y)$ to $g_{k,m}$, and ultimately the dependence of the indices of $g_{k,m}$ on those of $f_{i,j}$, plays a major role in the overall operation of the algorithm. The attached C program generates two arrays, Lx and Ly, that store the mapping from output image indices to input image indices. They are defined as

$$L[k] = \lfloor k/r \rfloor$$

where r is the magnification factor in the corresponding direction (r = u for Ly and r = v for Lx) and k runs from zero up to the desired output dimension (height or width). An intermediate array temporarily stores the values obtained by evaluating Eq. 2:

$$h_{k,j} = H_j(k/r) \tag{5}$$

After look-up tables are created for the polynomial values of Eq. 1, $cy_n[k] = C_n(k/r - L_y[k])$ and $cx_n[m] = C_n(m/r - L_x[m])$, Eq. 5 can be written as

$$h_{k,j} = f_{L_y[k],j}\,cy_3[k] + f_{L_y[k]+1,j}\,cy_2[k] + f_{L_y[k]+2,j}\,cy_1[k] + f_{L_y[k]+3,j}\,cy_0[k] \tag{6}$$

The output image can then be found by

$$g_{k,m} = h_{k,L_x[m]}\,cx_3[m] + h_{k,L_x[m]+1}\,cx_2[m] + h_{k,L_x[m]+2}\,cx_1[m] + h_{k,L_x[m]+3}\,cx_0[m] \tag{7}$$

The C function bcsInterp() is based on Eqs. 6 and 7. Its arguments are the input image, a reference to the memory location for the output image, the input dimensions, and the output dimensions. Eqs. 6 and 7 show that, with a main loop indexed by k and subsidiary loops over j, l, and m, the output image can be computed as long as the input and output images have a height greater than or equal to their width. The function uses dynamic memory allocation to reserve space for the output.
The auxiliary function freeMDarrays() in Appendix A frees the two-dimensional output array when it is no longer needed. Failing to free this memory causes a memory leak, which, if unchecked in an iterative process, will eventually consume all available memory.

3.6 Normalized Cross-Correlation

To identify the playing card, the decision-making front end needs a numerical measure. The idea is to compare the input image with each template and output a number that estimates how closely the template resembles the image. Many correlation schemes would suffice, but the most useful one outputs a value between 0 and 1, where 0 means totally uncorrelated and 1 means a perfect match. The normalized cross-correlation is given by

$$C(x,y) = \frac{\displaystyle\sum_{y'=0}^{h-1}\sum_{x'=0}^{w-1} T(x',y')\,I(x+x',\,y+y')}{\sqrt{\displaystyle\sum_{y'=0}^{h-1}\sum_{x'=0}^{w-1} T(x',y')^2 \;\sum_{y'=0}^{h-1}\sum_{x'=0}^{w-1} I(x+x',\,y+y')^2}} \tag{8}$$

where (x', y') are the coordinates in the template T, (x, y) are the coordinates in the image I, and h and w are the height and width of the template, respectively (Figure 4). For non-negative pixel values, such as those of the binary images used here, C(x, y) lies between 0 and 1.

Figure 4. Coordinates illustration

The function that performs the normalized cross-correlation, NcrossCorr(), is the computational core of the entire implementation, so it needs a two-dimensional loop structure with as few conditional checks as possible. The approach taken was to place the coordinate origin at the top-left corner of both the image and the template, and then to find the most efficient way to calculate the value. The summations in Eq. 8 show that the loops iterate over the coordinates of T. That is simple enough, but what if the template and the image are not the same size? C does not check array indices: an array declared with 100 elements can still be accessed at its 105th element without raising an error.
The lack of bounds checking is dangerous, because an out-of-range index can reach a critical region of memory and overwrite its contents, such as another variable or other program data. There are three possible solutions: make the template and the image the same size and correlate only once; zero-pad the template or the image enough that all indices remain valid as one slides over the other (that is, as (x, y) changes); or check the coordinates at every iteration. For this implementation the first solution was judged best, provided that the image and the template can be scaled to approximately the same size. Therefore (x, y) is held fixed at (0, 0), which aligns the top-left corners of T and I (Figure 5).

Figure 5. At (x, y) = (0, 0), perfect overlap

This allows the normalized cross-correlation to be computed at a single (x, y) location rather than at many, which greatly reduces the number of multiply-accumulate (MAC) operations performed per input image. The calculation within NcrossCorr() splits into three MAC operations that run in succession inside the loop structure: one for the numerator and two for the denominator. After the final iteration, the square root and division are performed, and the result is returned to the calling function as a double between 0 and 1.

4. Test Results

The final program recognizes cards with an accuracy of 62% (8 of 13 cards) at a rate of about 0.1 seconds per card, which corresponds to approximately 10 frames per second if the program were interfaced to a video camera. The results are summarized in Table 1.

Table 1. Test Results of Card Recognition Program

| Card Number | Recognition Result | Execution Time (s) |
|---|---|---|
| A | A | 0.11 |
| 2 | 2 | 0.09 |
| 3 | 3 | 0.10 |
| 4 | 4 | 0.08 |
| 5 | *10* | 0.09 |
| 6 | 6 | 0.12 |
| 7 | *Q* | 0.10 |
| 8 | 8 | 0.09 |
| 9 | *10* | 0.10 |
| 10 | *A* | 0.10 |
| J | J | 0.11 |
| Q | *A* | 0.09 |
| K | K | 0.08 |

The recognition results displayed in italics are the incorrect ones.

5. Conclusion

The goal of the project was to design and implement character recognition as the first stage in developing a playing card recognizer. In testing, cards were recognized correctly 62% of the time. We believe the incorrect recognition of 5 of the 13 cards is caused by distortion introduced into the input image while the card is rotated. Rotation is performed with MATLAB's imrotate function, which uses bicubic interpolation to map the pixels of the input image to their new locations in the output image. The amount of interpolation required depends on two factors: the quality of the input image and the amount of rotation needed to bring it into the standard alignment. The more interpolation is needed, the more distortion is added to the input image.

The amount of added distortion can be gauged by comparing the size of each output image with that of the input image. All input images had a standard size of 480 by 640 pixels, but the output images vary greatly in size because of the different amounts of interpolation performed on them. The images with the greatest variation in both height and width (cards 5, 7, 9, 10, and Q) were exactly the ones that were incorrectly identified.

We believe this problem can be fixed in later stages by making the corner extraction function more robust to added noise and distortion. As currently implemented, the corner extraction does a good but not optimal job of isolating the corner character by looking for the "corner edges" of that character. When a lot of noise is added to the image, these corner edges can be misidentified. A better way to isolate the corner character would be to look for the biggest "blob" in the binary image of the extracted corner. This blob would be the character we are trying to isolate, and it could be found by looking for the largest 8-connected set of pixels with value 1. With a little more time, this change could easily be added to the code.
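The proposed blob-based isolation can be sketched in C as below: a flood fill with an explicit stack labels each 8-connected component of non-zero pixels, and only the largest is kept. This is a minimal illustration of the suggested improvement, not code from the project, and the name largest_blob8 is hypothetical. The same routine also fits the card-segmentation step of Section 2.1, where the largest eight-connected blob is taken to be the card.

```c
#include <stdlib.h>

/* Hypothetical helper: mark the largest 8-connected component of non-zero
   pixels in a row-major h x w image. mask[p] is set to 1 for pixels of that
   component and 0 elsewhere. Returns its pixel count, or -1 if memory
   allocation fails. */
long largest_blob8(const unsigned char *img, int h, int w, unsigned char *mask) {
    long n = (long)h * w;
    int *label = calloc((size_t)n, sizeof(int));     /* 0 means unvisited */
    long *stack = malloc(sizeof(long) * (size_t)n);  /* each pixel pushed once */
    if (!label || !stack) { free(label); free(stack); return -1; }
    int cur = 0, best = 0;
    long best_size = 0;
    for (long s = 0; s < n; s++) {
        if (!img[s] || label[s]) continue;
        long size = 0, top = 0;
        label[s] = ++cur;                      /* start a new component */
        stack[top++] = s;
        while (top > 0) {
            long p = stack[--top];
            int r = (int)(p / w), c = (int)(p % w);
            size++;
            for (int dr = -1; dr <= 1; dr++)   /* visit all 8 neighbors */
                for (int dc = -1; dc <= 1; dc++) {
                    int rr = r + dr, cc = c + dc;
                    if (rr < 0 || rr >= h || cc < 0 || cc >= w) continue;
                    long q = (long)rr * w + cc;
                    if (img[q] && !label[q]) { label[q] = cur; stack[top++] = q; }
                }
        }
        if (size > best_size) { best_size = size; best = cur; }
    }
    for (long p = 0; p < n; p++)
        mask[p] = (unsigned char)(best != 0 && label[p] == best);
    free(label);
    free(stack);
    return best_size;
}
```

Labeling a pixel at the moment it is pushed guarantees that no pixel enters the stack twice, so a stack of h × w entries is always large enough and the fill cannot overflow the call stack the way a recursive version could on a large image.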
Other extensions to the project could be to pipeline the processing of the images, since card recognition is currently done on an image-by-image basis, and to add an actual video camera interface rather than processing stored images.

References

1. Aramini, M. J., "Efficient Image Magnification by Bicubic Spline Interpolation," http://www.ultranet.com/~aramini/design.html, 2002.
2. Ritter, G. X., and Wilson, J. N., Computer Vision Algorithms in Image Algebra, CRC Press, NY, 2001.