Matlab Computing for Binomial Response Models

advertisement

Matlab computing for Binomial response models

This handout provides a series of programs that can be used to fit Binomial response

models, with illustration using the beetle mortality data.

******************************Basic Functions***************************

The following three functions compute the probability vector, derivative of the link

function and starting values for Binomial response models. Each requires the user to

specify the model type, with choices 1 for the logit, 2 for the probit, and 3 for the

complementary log log links.

Function pt.m:

% probability function for 3 models: 1=LR; 2=Probit; 3=cloglog

% input: design matrix x, regression parameter beta and ty= model

function pe = pt(x,beta,ty);

eta = x*beta;

% linear predictor

if ty == 1

pe = exp(eta)./(1+exp(eta));

elseif ty == 2

pe = normcdf(eta);

else

pe = 1-exp(-exp(eta));

end

end

Function gp.m:

% derivative of link function for 3 models: 1=LR; 2=Probit; 3=cloglog

% input: probability vector p and ty= model

function g = gp(p,ty);

if ty == 1

g = 1./(p.*(1-p));

elseif ty == 2

g = 1./normpdf(norminv(p));

else

g = -1./( (1-p).*log(1-p) );

end

end

Function starts.m:

% simple starting values for 3 models: 1=LR; 2=Probit; 3=cloglog

% input: vector y of counts and m of sample sizes

%

dimension of reg coeff vector (r) and model type (ty)

%

assumes intercept is first value

function bs = starts(y,m,r,ty);

ptil = sum(y)./sum(m);

% overall proportion of successes

if ty == 1

bo = log( ptil./(1-ptil) );

elseif ty == 2

bo = norminv(ptil);

else

bo = log(-log(1-ptil));

end

bs = [bo ; zeros(r-1,1)];

end

Function loglike.m:

Computes the binomial log likelihood function from 3 input vectors: the counts, the

sample sizes and the probabilities. The function is arbitrary - works for all Binomial

models.

% binomial log likelihood function

% input: vectors: y = counts; m = sample sizes; p = probabilities

% output: log-likelihood l, a scalar

function l = loglike(y,m,p);

l = y'*log(p) + (m-y)'*log(1-p);

end

*********************Fisher Scoring with Line Search********************

Function lrmle2.m: Computes MLEs for Binomial response models via Fisher scoring

with line search. Model type is passed as a parameter to the function.

% lrmle2: Fisher scoring estimation of Binomial

%

Response model (with line search)

% input: x = n-by-a design matrix

%

y = n-by-1 vector of counts

%

m= n-by-1 vector of sample sizes

%

bs = a-by-1 vector of starting values for regression est

%

ty = link(1=LR;2=probit;3=cloglog)

% output:

%

%

%

%

%

bn

= a-by-1 vector of MLES for regression estimates

il

= number of iterations

zz

= SS of abs diff in estimates (no convergence if large)

likn = log-like at MLE

covm = covariance matrix of MLE

ithist = iteration history (il,zz,likn,bn)

function [bn,il,zz,likn,covm,ithist] = lrmle2(x,y,m,bs,ty);

eps1 = .000001;

eps2 = .0000001;

itmax = 50;

alp = -1.05:.1:2.05;

% absolute convergence criterion for beta

% absolute convergence criterion for log-like

% max number of iterations

% line search values

bn = bs;

% 1st guess

likn = loglike(y,m,pt(x,bn,ty)); % log-like at first guess

liko = likn + 100;

zz

= 5;

% dummy value

% set dummy for norm of diff between est

il = 1;

% start loop

while ( il < itmax ) & ( zz > eps1 ) & ( abs(likn - liko) > eps2 );

bo

liko

po

uo

wdo

wo

= bn;

% current beta, log-like, means

= likn;

% probs, w and v-star

= pt(x,bo,ty);

= m.*po;

= diag( 1./( gp(po,ty).*po.*(1-po) ) );

= diag( m./( po.*(1-po).*gp(po,ty).^2 ) );

ldo

del

= x'*wdo*(y- uo);

= ( x'*wo*x ) \ ldo;

% update score function

% Fisher increment

is = 1;

% line search for best multiplier

for is=1:length(alp);

btemp = bo + alp(is).*del;

ltem(is) = loglike(y,m,pt(x,btemp,ty));

end;

[likn,iwhere] = max(ltem);

bn = bo + alp(iwhere).*del;

likn = loglike(y,m,pt(x,bn,ty));

zz = sqrt( (bn-bo)'*(bn-bo) );

% locate max of log-like and location

% update beta, zz for check at next step

ithist(il,:) = [il likn alp(iwhere) zz bn' ]; % save iteration summaries

il = il+1;

% update counter

end

% bn and likn are final est of beta and log-like. get inv info at MLE

pn

= pt(x,bn,ty);

wn = diag( m./( pn.*(1-pn).*gp(pn,ty).^2 ) );

covm = inv( x'*wn*x );

end

***********************************Illustration*************************

The following script (beetanal3.m) fits a quadratic model to the beetle mortality data,

allowing user to select one of three link functions.

Script beetanal3.m:

% beetle mortality data analysis: combined reps

% using line search maximization for specified link

format short;

diary beet.out;

% data for quadratic fit

load beet;

n = size(beet,1);

y = beet(:,1);

m = beet(:,2);

% data matrix

% of cases

xs = beet(:,3)./10;

% scale concentration

x = [ ones(n,1) xs xs.^2]; % design matrix

r = size(x,2);

% no of reg vars

ty = input('enter model type: LR=1 Probit=2 Cloglog = 3');

bo = starts(y,m,r,ty);

% get starting values

[b,il,zz,likn,covm,ithist] = lrmle2(x,y,m,bo,ty);

% fit model

% save selected summaries

disp('

y

disp([y m x]);

disp('

iter

disp(ithist);

m

design matrix x' );

loglike

alpha

zz

est');

% compute observed proportions and fitted proportions, and save

phat = y./m;

pfit = pt(x,b,ty);

% observed proportions

% fitted proportions

disp('observed and fitted proportions ');

disp([(1:n)' phat pfit]);

% get fitted proportions on grid, then plot

xt = (min(xs):.01:max(xs))';

xu = [ones(length(xt),1) xt xt.*xt];

pu = pt(xu,b,ty);

plot( x(:,2),phat,'+',xu(:,2),pu);

% plot both at same time

title('Beetle mortality data with quadratic fit');

% compute and save estimates, std dev, z-stat and p-value

se = sqrt(diag(covm));

zstat = b./se;

pv = 2.*(1- normcdf(abs(zstat)));

disp('

est stddev

disp([b se zstat pv]);

diary off;

z-stat

pvalue');

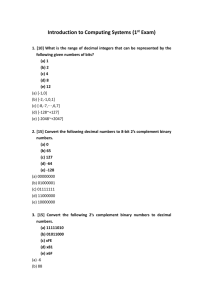

Illustrations: Fit for LR

Contents of beet.out:

enter model type: LR=1 Probit=2 Cloglog = 3 1

y

m

design matrix x

6.0000

13.0000

18.0000

28.0000

52.0000

53.0000

61.0000

60.0000

59.0000 1.0000 4.9060 24.0688

60.0000 1.0000 5.2990 28.0794

62.0000 1.0000 5.6910 32.3875

56.0000 1.0000 6.0840 37.0151

63.0000 1.0000 6.4760 41.9386

59.0000 1.0000 6.9690 48.5670

62.0000 1.0000 7.2610 52.7221

60.0000 1.0000 7.6540 58.5837

iter

loglike

1.0000 -189.7499

2.0000 -183.7300

3.0000 -182.8533

4.0000 -182.8483

5.0000 -182.8483

6.0000 -182.8483

alpha

zz

1.4500 25.7580

1.0500 26.6114

1.1500 3.5410

0.9500 0.7977

1.0500 0.0456

0.9500 0.0021

observed and fitted proportions

1.0000

2.0000

3.0000

4.0000

5.0000

6.0000

7.0000

8.0000

est

0.1017

0.2167

0.2903

0.5000

0.8254

0.8983

0.9839

1.0000

stddev

0.1126

0.1827

0.3166

0.5314

0.7655

0.9396

0.9779

0.9952

z-stat

pvalue

4.7130 10.3423 0.4557 0.6486

-3.9952 3.4781 -1.1487 0.2507

0.5328 0.2906 1.8333 0.0668

est

-24.6676

0.6495

3.9146

4.6717

4.7149

4.7130

5.8045 -0.2831

-2.3672 0.3759

-3.7310 0.5109

-3.9814 0.5316

-3.9959 0.5328

-3.9952 0.5328

Beetle mortality data with quadratic fit

1

0.9

0.8

0.7

0.6

0.5

0.4

0.3

0.2

0.1

4.5

5

5.5

6

6.5

7

7.5

8

Looking at the output we see that the routine converged in 7 iterations. At each step, the

log likelihood increased, and the norm of the difference between successive estimates

eventually decreased to zero. The estimates are 4.7130 for the constant term, -3.9952 for

the linear term and .5328 for the quadratic term. Also note that the observed and fitted

proportions are fairly close, which qualitatively suggests a reasonable model for the data.

For comparison, here is a SAS program to fit the same model, with selected output. SAS

reports a Wald statistic and p-value for each estimate. The Wald statistic is the square of

our z-statistic. Note the agreement between our program and SAS.

SAS program to fit logistic model to beetle mortality data:

data d1;

input y m x;

x = x/10;

x2 = x*x;

datalines;

6 59 49.06

13 60 52.99

18 62 56.91

28 56 60.84

52 63 64.76

53 59 69.69

61 62 72.61

60 60 76.54

;

proc logistic;

model y/m = x x2;

run;

The LOGISTIC Procedure

Analysis of Maximum Likelihood Estimates

Parameter

Standard

Wald

Error Chi-Square Pr > ChiSq

DF Estimate

Intercept 1

x

1

x2

1

4.7129

-3.9952

0.5328

10.3423

3.4781

0.2906

0.2077

1.3195

3.3609

0.6486

0.2507

0.0668

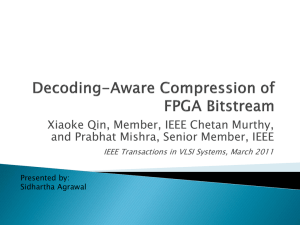

I also fit a probit and complementary log-log model. Here is the output, with a bit of

discussion.

Probit fit:

enter model type: LR=1 Probit=2 Cloglog = 3 2

y

m

design matrix x

(omitted)

iter

loglike

1.0000 -189.2735

2.0000 -183.6964

3.0000 -182.8968

4.0000 -182.8896

5.0000 -182.8896

6.0000 -182.8896

alpha

0.1017

0.2167

0.2903

0.5000

0.8254

est

1.3500 14.8841 -14.2340 3.3541

1.0500 12.7563 -2.0961 -0.5567

1.1500 1.8771 -0.3597 -1.2663

1.0500 0.5981 0.2080 -1.4541

0.9500 0.0185 0.1904 -1.4485

1.0500 0.0011 0.1893 -1.4482

observed and fitted proportions

1.0000

2.0000

3.0000

4.0000

5.0000

zz

0.1045

0.1888

0.3311

0.5326

0.7492

-0.1636

0.1511

0.2201

0.2356

0.2352

0.2351

6.0000

7.0000

8.0000

0.8983

0.9839

1.0000

0.9353

0.9808

0.9980

est

stddev

z-stat

pvalue

0.1893 5.4364 0.0348 0.9722

-1.4482 1.8173 -0.7969 0.4255

0.2351 0.1506 1.5614 0.1184

Beetle mortality data with quadratic fit

1

0.9

0.8

0.7

0.6

0.5

0.4

0.3

0.2

0.1

4.5

5

5.5

6

6.5

7

7.5

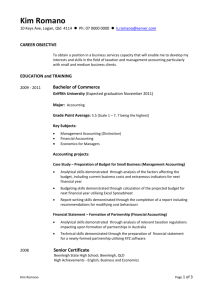

Cloglog fit:

enter model type: LR=1 Probit=2 Cloglog = 3 3

y

m

design matrix x

loglike

alpha

(omitted)

iter

1.0000 -183.0595

2.0000 -182.9447

zz

est

1.4500 16.7772 -16.4184

1.2500 2.4324 -14.1119

3.7807 -0.1844

3.0110 -0.1209

8

3.0000 -182.9446

4.0000 -182.9446

1.0500

1.0500

0.0711 -14.1793

0.0010 -14.1803

3.0333 -0.1227

3.0336 -0.1228

observed and fitted proportions

1.0000

2.0000

3.0000

4.0000

5.0000

6.0000

7.0000

8.0000

0.1017

0.2167

0.2903

0.5000

0.8254

0.8983

0.9839

1.0000

0.0999

0.1909

0.3363

0.5348

0.7468

0.9339

0.9808

0.9982

est

stddev

z-stat

pvalue

-14.1803 6.1698 -2.2983 0.0215

3.0336 1.9730 1.5376 0.1242

-0.1228 0.1566 -0.7840 0.4330

Beetle mortality data with quadratic fit

1

0.9

0.8

0.7

0.6

0.5

0.4

0.3

0.2

0.1

0

4.5

5

5.5

6

6.5

7

7.5

8

In all 3 cases, the iteration converged in a few steps. Further, the plots of the observed

and fitted proportions show that each model fits the data fairly well. It is difficult to

directly compare these models because they are not nested. One possible approach is to

use the AIC (Akaike information criterion), which is essentially minus twice the

maximized log likelihood plus a penalty for the number of parameters in the model. Each

model estimates 3 parameters, so the ordering of models based on AIC is exactly the

ordering obtained by comparing the maximized log-likelihood (with higher = better). For

the 3 models the maximized log likelihoods are (reading of the last line of the iteration

histories) -182.944 for the complementary log log, -182.89 for the probit, and -182.85 for

the logistic. Although the logistic has the highest value, the differences among the models

are tiny.