LANGUAGE AND READING: CONVERGENCES AND DIVERGENCES

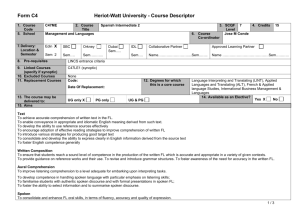

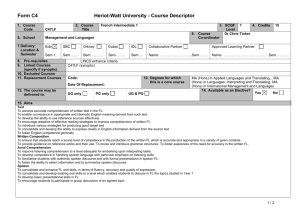

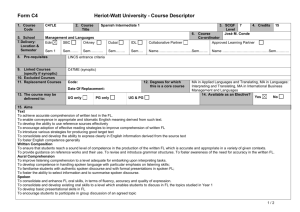
