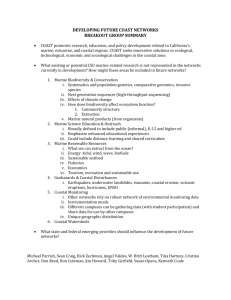

Center for Coastal Resources Management
