Does data warehousing herald the demise of the historical database


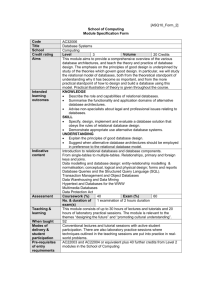

Data Warehousing: the New Knowledge Management Architecture for Humanities Research?

Dr Janet Delve
Department of Information Systems and Computing Applications
University of Portsmouth
1-8 Burnaby Terrace
PO1 3AE
Janet.Delve@port.ac.uk
Telephone 02392 846669
Fax 02392 846402

Keywords: data warehousing, humanities research, knowledge management

1. Introduction

Data warehousing is now a well-established part of the business world. Companies like Amazon, Wal*Mart and Opodo use data warehouses to house terabytes of data about their products. These data warehouses can be subjected to complex, large-scale queries that produce detailed results. The customer occasionally catches a glimpse of what is going on behind the scenes with companies such as Opodo when searching for a plane ticket: an online message displays the large number of databases the warehouse is interrogating to locate the best ticket prices, all in a matter of seconds. It was the desire for greater analysis of business and scientific data that brought about the introduction of data warehouses: huge data repositories housing multifarious data extracted from databases and from other relevant sources. As a by-product of this phenomenon, many improvements also occurred in data quality and integrity. The outcome of these developments is that there is now much greater flexibility when modelling data for a data warehouse (DW) via dimensional modelling, in marked contrast to the strict rules imposed on relational database modelling via normalization. However, until very recently, DWs were restricted to modelling essentially numerical data, such as sales figures in the business arena and astronomical data in scientific research.
Although some humanities research may be essentially numerical, much is not: memoirs and trade directories are examples. Recent innovations have opened up new possibilities for largely textual DWs, making them truly accessible to a wide variety of humanities research for the first time. Humanities research data is often difficult to model because of the irregular and complex nature of the data involved, and it can be very awkward to manipulate time shifts in a relational database. Fitting such data into a normalized data model has proved exceedingly unnatural and cumbersome for many researchers. This paper considers history and linguistics as two exemplars of humanities research and investigates the current difficulties of using relational databases in these fields. The paper sets out DWs in a business context, examines advances in DW modelling, and puts forward ways these can be applied to historical and linguistic research. The concluding suggestion is that data warehousing might provide a more suitable knowledge architecture for these two fields in particular, and for the humanities in general, than the relational databases they currently employ.

2. Computing in Historical Research

Overview

Historians have been harnessing computing power in their research for at least the last two decades. The Association for History and Computing[1] has held national and/or international conferences since the mid-1980s and has produced or inspired many publications (for example Denley et al., 1989; Mawdsley et al., 1990) and journal articles[2]. These and other scholarly works (for example Mawdsley and Munck, 1993) display the wide range of ways in which historians have used computing, encompassing database management systems (DBMSs), artificial intelligence and expert systems, quantitative analysis, XML and SGML.
In their dedicated book on historical databases, Harvey and Press (1996, xi) observed that historical database creation has been widespread in recent years, and that the popularity of such database-centred research appears to derive from its applicability to a broad spectrum of historical study. Hudson (2001, 240) also reported that 'the biggest recent growth areas of computer use in new sorts of historical research have involved relational database software...'. Historical databases have been created using different types of data from diverse countries over a range of time periods, and by various historical communities, from medievalists to economic historians. Some databases have been modest and stand-alone[3], others part of a larger conglomerate[4]; databases involving international collaboration on this scale require vast resources (Harvey and Press 1996, xi). One issue essential to good database creation is data modelling, which has been the subject of contentious debate in historical circles in recent years. Before embarking on this, a brief summary of the relational model is necessary, because perceptions of relational modelling in the business community contrast with those in academia.

The Relational Model

The relational model is currently the business standard (Begg and Connolly 1999, vii) and is based on relations (tables). Each relation represents a single 'entity' and contains attributes defining only that entity, and there is a formal system for linking these relations via relationships. The relational model is based upon mathematical relations (Date 2003; Elmasri and Navathe 2003) and is strictly controlled by normalization (Codd 1972 and 1974), which ensures that each relation contains a unique key that determines all the other attributes in that relation.
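To make normalization concrete, here is a minimal sketch using Python's built-in sqlite3 module. The schema, street names and people are hypothetical, loosely modelled on census returns: a household, which a historian would think of as one 'real world' unit, is split into two relations, each with its own unique key, so that viewing the household again requires a join.

```python
import sqlite3

# Hypothetical census fragment: one household normalized into two relations.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE household (
    household_id INTEGER PRIMARY KEY,   -- key determines every other attribute
    street       TEXT NOT NULL
);
CREATE TABLE person (
    person_id    INTEGER PRIMARY KEY,
    household_id INTEGER NOT NULL REFERENCES household(household_id),
    name         TEXT NOT NULL,
    relation     TEXT NOT NULL          -- relation to head of household
);
INSERT INTO household VALUES (1, 'High Street'), (2, 'Jewry Street');
INSERT INTO person VALUES
    (1, 1, 'John Smith', 'Head'),
    (2, 1, 'Mary Smith', 'Wife'),
    (3, 2, 'Ann Cole',   'Head');
""")


def household_members(conn, street):
    """Reassemble the household: because it was split up, a join is needed."""
    rows = conn.execute(
        """
        SELECT p.name, p.relation
        FROM household AS h
        JOIN person AS p ON p.household_id = h.household_id
        WHERE h.street = ?
        ORDER BY p.person_id
        """,
        (street,),
    )
    return rows.fetchall()


print(household_members(conn, "High Street"))
# [('John Smith', 'Head'), ('Mary Smith', 'Wife')]
```

Each relation is tidy on its own terms, but the household itself now exists only as the result of a join.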
Unfortunately, normalization is problematic even in the business world, as '(t)he process of normalization generally leads to the creation of relations that do not correspond to entities in the 'real world'. The fragmentation of a 'real world' entity into many relations, with a physical representation that reflects this structure, is inefficient, leading to many joins during query processing' (Begg and Connolly 1999, 732). These joins are very expensive in terms of computer processing. In addition to processing issues, there are other matters to take into account.

[1] http://grid.let.rug.nl/ahc/
[2] The associated journal of the AHC is History and Computing, Edinburgh University Press.
[3] For example, the Register of Music in London Newspapers, 1660-1800, as outlined in Harvey and Press, 40-47.
[4] The current North Atlantic Population Project (NAPP) entails integrating international census data.

In practice, integrity and enterprise constraints are not implemented as rigorously as relational theory demands. Also, the relational model is 'semantically overloaded' (Begg and Connolly 1999, 733), as it is unable to build semantics into the simple relation/relationship model. New object-oriented data models are part of third-generation DBMSs, and they do address the problem of semantics and 'real world' modelling, but they are not clearly defined (Begg and Connolly 1999, 752) and have not, as yet, replaced the relational model. As noted above by Hudson and by Harvey and Press, the relational model is the database model of choice for increasing numbers of historians.

Data Modelling in Historical Databases

When reviewing relational modelling as used for historical research, Bradley (1994) contrasted seemingly straightforward business data with historical data that is often incomplete, irregular, complex or semi-structured.
He noted that the relational model worked well for simply structured business data, but that it could be tortuous to use for historical data. Breure (1995) pointed out the advantages of inputting data into a model that matches it closely, something that is very hard to achieve, as Begg and Connolly note above. Burt (Delve) and James (1996) considered the relative freedom of source-oriented data modelling compared with relational modelling and its restrictions due to normalization, and drew attention to the forthcoming possibilities of data warehouses (DWs).

Source-Oriented Data Modelling

Dr Manfred Thaller[5] considered historical data modelling so important that he developed a dedicated DBMS, which he described as 'a semantic network tempered by hierarchical considerations' (Thaller 1991, 155). His principal aim was to carry out source-oriented data modelling, which entailed encapsulating an entire data source in a model, without having to split the data up into separate tables as occurs in normalization. He felt that it was imperative to keep each entire source for posterity, as it had been all too common for historians to create datasets by picking out just the parts of a source they were interested in at a particular time for a particular project. This could prove very wasteful should other historians later wish to analyse parts of that source that had not been included in the database. In terms of modelling, the system's flexible nature gives a 'rubber band data structures' facility (Denley 1994, 37), thus overcoming the problems of semantic modelling, and the fluid way in which a database is built with it marks it out as an 'organic' DBMS. It is also a sophisticated DBMS that handles image processing as well as geographical coordinates. There was much debate in historical circles about which approach to data modelling was superior, Thaller's source-oriented approach or the relational one (Denley 1994, 33-44).
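As a loose analogy only (this is not Thaller's system or its data model), the following Python sketch keeps a hypothetical probate inventory whole, as a nested structure in source order, and searches it for whatever indicators a researcher chooses later, rather than extracting a predetermined subset into tables.

```python
# Hypothetical transcription of one probate inventory, kept whole and in
# source order (room -> items as listed) instead of being split into tables.
inventory = {
    "source": "probate inventory (hypothetical example)",
    "rooms": [
        {"room": "Hall", "items": ["one table", "six joined stools", "a clock"]},
        {"room": "Parlour", "items": ["a looking glass", "two pictures"]},
        {"room": "Kitchen", "items": ["brass pots", "a spit"]},
    ],
}


def find_indicators(source, indicators):
    """Return (room, item) pairs whose text contains any chosen indicator.

    The indicator list is supplied at query time, so it can be revised as a
    project develops without re-modelling the stored source.
    """
    hits = []
    for room in source["rooms"]:
        for item in room["items"]:
            if any(term in item for term in indicators):
                hits.append((room["room"], item))
    return hits


print(find_indicators(inventory, ["clock", "looking glass"]))
# [('Hall', 'a clock'), ('Parlour', 'a looking glass')]
```

Because nothing was discarded when the source was stored, a new list of status indicators needs only a new query, not a new data model.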
However, despite its versatility and power, Thaller's DBMS never replaced relational databases as the main DBMS for historians, who continue to use relational modelling and normalization to prepare data for RDBMSs, despite the inherent difficulties of doing so.

[5] Max Planck Institute of Historical Studies, Göttingen

Examples of Difficulties Modelling Historical Data

Burt and James (1996, 160-168) discussed four historical projects that found the relational model very constraining, and outlined how using Thaller's DBMS had greatly improved their data modelling. The Educational Times project entailed the creation and querying of a database of mathematical problems found in the mathematical department of this 19th-century journal. The format of the data was irregular, and some sections within it appeared only erratically. The journal appeared monthly, and with a relational model it was impossible to preserve the hierarchical nature of the data, as normalization caused it to be split by subject and placed in 28 tables. A similar problem was encountered during the modelling stage of the Winchester Census Database, where normalization would move uninhabited properties into a separate table, making it impossible to retain the hierarchical nature of the data and greatly adding to the complexity of the data model. The Britmath database contained data pertaining to British mathematicians and institutions, acquired from a wide range of disparate archives. The data was not uniform, and it was not possible to predict in advance a model that could accurately represent all of it. For the Winchester Probate Database, the problem was more profound. Previous researchers had looked for evidence of personal status by searching through wills and inventories for objects conferring such status.
Crucially, these objects had been decided in advance, and the data was searched for a given list of objects. The aim in creating the Winchester Probate Database was to encapsulate several entire 17th-century wills and inventories, and then search them for a variety of status indicators. In this way it was possible to redefine queries as the project developed, instead of fixing a set of queries before the project even began or before the data was thoroughly known. All of these projects found that it would be a problem to model extraneous detail in a normalized data model.

Another difficulty with historical material arises from the wealth of different dating systems used in different countries over time, including the Western, Islamic, Revolutionary and Byzantine calendars. Historical data may refer to 'the first Sunday after Michaelmas', which requires a calculation before a date can be entered into a database. Even then, not all databases and spreadsheets have been able to cope with dates outside the 20th century. Similarly, researchers in historical geography may need to calculate dates based on the local introduction of the Gregorian calendar. These tasks can be time-consuming and arduous for researchers. They were addressed by Thaller's DBMS, but as this is not a widespread system, researchers using relational DBMSs still have to contend with them. To summarise, awkward and irregular data with abstruse dating systems do not fit easily into a relational model that does not lend itself to hierarchical data. Many of these concerns also arise in linguistic computing.

3. Linguistic Databases

Lawler and Aristar Dry (1998, 1) claimed that 'in the last decade computers have dramatically altered the professional life of the ordinary working linguist, altering the things we can do, the ways we can do them, and even the ways we can think about them', and went so far as to say that the technology 'is shaping the way we conceptualise both linguistics and language'. Linguistic databases have a well-established place in language research, with at least two dedicated conferences in the last decade: one in Groningen in 1995, with proceedings edited by Nerbonne (1998), and the IRCS Workshop on Linguistic Databases in Philadelphia in 2001, with its accompanying web-based proceedings (http://www.ldc.upenn.edu/annotation/database/proceedings.html). In his introduction, Nerbonne (1998) highlighted that 'linguistics is a data-rich study' and went on to delineate the multi-faceted nature of the discipline. He outlined the abundance of languages, with their myriad words and many possible word forms, and then alluded to the plethora of coding rules for phrases, words and sounds. In addition, many factors are studied for their effect on linguistic variation, for example sex, geography, and social and educational status. The sheer volume of data in linguistics has encouraged researchers to look to databases as natural tools for storing and analysing linguistic data and metadata. Nerbonne stated: 'Databases have long been standard repositories in phonetics... and psycholinguistics ... research, but they are finding increasing use not only in phonology, morphology, syntax, historical linguistics and dialectology but also in areas of applied linguistics such as lexicography and computer-assisted learning.'
He went on to argue that data integrity and consistency are of particular importance, and that deductive databases (providing logical power) and object-oriented databases (providing flexibility) both have their place in the field. He concluded his preliminary remarks by claiming that '(t)he most important issues are overwhelmingly practical' rather than conceptual. However, many conceptual issues appear in the IRCS 2001 proceedings, with linguistic data modelling playing a central role.

Linguistic Data Modelling

The benefits of using the relational model together with normalization are put forward by Hayashi and Hatton (2001 IRCS conference proceedings), who stated that '(b)ecause the repository of classes is normalized, the user can use the same class in a number of visual models. This is very helpful when modelling integrated linguistic data structures.' Brugman and Wittenburg (2001 IRCS conference proceedings) acknowledged the central role played by data models and object-oriented models from the very start of their work on linguistic corpora, annotation formats and software tools. They traced the evolution of various data models to date and concluded:

'The choice of what models to create has a profound influence on how a problem is attacked and how a solution is shaped. There is a close relation between a model on one side and a set of problems and their solution in the form of a software system on the other side. A different problem requires a different model. Over the years, user requirements changed, partly because new technology made new things possible, and partly because of the user's experience with our own tools. The models changed with the user requirements and will continue to do so.'

Bliss and Ritter (2001 IRCS conference proceedings) discussed the constraints imposed on them when using 'the rigid coding structure of the database' developed to house pronoun systems from 109 languages.
They observed that coding introduced interpretation of the data, and concluded that designing 'a typological database is not unlike trying to fit a square object into a round hole. Linguistic data is highly variable, database structures are highly rigid, and the two do not always "fit".' Brown (2001 IRCS conference proceedings) pointed out that different database structures may reflect particular linguistic theories, and also mentioned the trade-off between quality and quantity in terms of coverage.

Summary of Data Modelling Problems in Historical and Linguistic Research

The choice of data model has a profound effect on the problems that can be tackled and the data that can be interrogated. For both historical and linguistic research, relational data modelling and normalization often appear to impose data structures that do not fit naturally with the data and that constrain subsequent analysis. Coping with complicated dating systems can also be very problematic. Surprisingly, similar difficulties have already arisen in the business community, and have been addressed by data warehousing.

4. Data Warehousing in the Business Context

DWs came into being as a response to the problems caused by large, centralized databases (Inmon 2002, 6-14). Users in each department would resent the time taken to query the slow, unwieldy database and would write queries to siphon off a copy of the portion of the central database pertinent to their needs. They would store this compact 'extract' database locally and benefit from the quicker response when running their queries against it. The advantages to the department were obvious, as it now had control of its own database, which it could customize, but the problems for the company were legion when managers tried to get an overall picture of the state of the business.
A call would go out for reports from each department, which would respond by querying its own extract database. However, since each of these had been created without reference to any standard, head office would receive an eclectic set of often contradictory reports. (There was also the problem of copies being altered and no longer reflecting the originals they came from.) Inmon described this as the cabinet effect (1991) and the 'spider-web' problem (2002). The need was thus recognized for a single, integrated source of clean data to serve the analytical needs of a company.

DW Queries

Behind the business analyst's desire to search the company data is the theory that there is a single question (or a small suite of questions) that, if answered correctly, will give the company a competitive edge over its rivals. More fundamentally, a DW can answer a completely different range of queries from those aimed at a traditional database. Taking an estate agency as a typical business, the type of question its local databases should be able to answer might be 'How many three-bedroomed properties are there in the Botley area up to the value of £100,000?' The type of overarching question a business analyst (and company managers) would be interested in might be of the general form 'Which type of property sells for prices above the average selling price for properties in the main cities of Great Britain and how does this correlate to demographic data?' (Begg and Connolly, 1999, 917). Trawling through each local estate agency database and the corresponding local county council database, then correlating the results into a report, would take a long time and a lot of resources. The DW was created to answer this need, set in the wider context of data mining.
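A minimal sketch of the two kinds of query, using Python's sqlite3 module and a hypothetical single table of sales (the table name, columns, places and prices are invented for illustration). The first query is the operational kind a local database answers; the second compares each sale with its city's average price, the first half of the analyst's question. Correlating the result with demographic data would require loading external sources alongside it, which is the kind of integration a DW is meant to provide.

```python
import sqlite3

# Hypothetical sales table standing in for local estate agency data.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sale (
    sale_id   INTEGER PRIMARY KEY,
    city      TEXT NOT NULL,
    area      TEXT NOT NULL,
    prop_type TEXT NOT NULL,
    bedrooms  INTEGER NOT NULL,
    price     INTEGER NOT NULL              -- pounds sterling
);
INSERT INTO sale (city, area, prop_type, bedrooms, price) VALUES
    ('Southampton', 'Botley',   'semi-detached', 3,  95000),
    ('Southampton', 'Botley',   'detached',      3, 140000),
    ('Southampton', 'Shirley',  'terraced',      2,  70000),
    ('Portsmouth',  'Southsea', 'terraced',      3,  90000),
    ('Portsmouth',  'Cosham',   'detached',      4, 180000);
""")

# Operational question: a simple filtered count on one local table.
operational_count = conn.execute(
    "SELECT COUNT(*) FROM sale WHERE area = ? AND bedrooms = 3 AND price <= ?",
    ("Botley", 100000),
).fetchone()[0]

# Analytical question: which property types sell above their city's average?
above_average = conn.execute("""
    SELECT s.city, s.prop_type, COUNT(*) AS n_sales
    FROM sale AS s
    JOIN (SELECT city, AVG(price) AS avg_price FROM sale GROUP BY city) AS c
      ON c.city = s.city
    WHERE s.price > c.avg_price
    GROUP BY s.city, s.prop_type
    ORDER BY s.city, s.prop_type
""").fetchall()

print(operational_count)  # 1
print(above_average)      # [('Portsmouth', 'detached', 1), ('Southampton', 'detached', 1)]
```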
Data Mining

Somewhat confusingly, data mining is the name given to the whole field comprising data cleansing, data warehousing and data mining (Byte, 1995). Data cleansing is the process of painstakingly checking vast quantities (terabytes) of data for consistency and coherence. Data warehousing is the actual storing of the data, and data mining (DM) is the procedure carried out to search for meaningful patterns. Neural nets, machine learning, cluster analysis and decision trees are just some of the AI methods used in DM to try to model and classify the vast quantities of data typically stored in a DW. Traditional database querying tools such as SQL are also used. Having established the place of DW within the field of DM, it is still not obvious how precisely to define data warehousing.

The Philosophy of Data Warehousing

'Data warehousing is an architecture, not a technology. There is the architecture, and there is the underlying technology, and they are two very different things. Unquestionably there is a relationship between data warehousing and database technology, but they are most certainly not the same. Data warehousing requires the support of many different kinds of technology' (Inmon 2002, xv). A detailed survey of the hardware needed to run a DW is beyond the scope of this paper, but a separate DW server is advantageous to avoid draining the main database server. In addition, the processing power of parallel distributed data processors is needed to mine (query) the data. There is a plethora of supporting technology from vendors such as Oracle (Oracle Warehouse Builder, OWB, outlined in Begg and Connolly), Prism Solutions, IBM, SAP and others. Building a DW can be a complex task because it is difficult to find a vendor that provides an 'end-to-end' set of tools. Thus a data warehouse is often built using multiple products from different suppliers.
Ensuring the coordination and integration of these products can be a major challenge (Begg and Connolly 1999, 927). Data warehousing thus fits into the wider field of data mining.

Basic Components of a DW

Inmon (2002, 31), the father of data warehousing (Krivda, 1995), defined a DW as being subject-oriented, integrated, non-volatile and time-variant. Emphasis is placed on choosing the right subjects to model, as opposed to being constrained to model around applications. For example, an insurance company would have as its subjects customer, policy, premium and claim, whereas previously its data would have been modelled around the applications: car, health, life and accident. Here it is necessary to clarify the fundamental relationship between databases and DWs. DWs do not replace databases as such; they co-exist alongside them in a symbiotic fashion. Databases are needed both to serve the clerical community, who answer day-to-day queries such as 'what is A.R. Smith's current overdraft?', and to 'feed' a DW. To do this, snapshots of data are extracted from a database on a regular basis (daily, hourly and, in the case of some mobile phone companies, almost in real time). The data is then transformed (cleansed to ensure consistency) and loaded into a DW. In addition, a DW can cope with diverse data sources, including external data in a variety of formats and summarized data from a database. These myriad types of data of different provenance create an exceedingly rich and varied integrated data source, opening up possibilities not available in databases. Thus all the data in a DW is integrated. Crucially, data in a warehouse is not updated, only added to, which makes it non-volatile. This has a profound effect on data modelling, as the main function of normalization is to obviate update anomalies.
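The extract, transform and load cycle, and the non-volatile, append-only character of a DW, can be sketched in plain Python. Everything here is hypothetical: a dictionary stands in for the operational database, a list stands in for the warehouse, and the snapshot dates are arbitrary. The point is that a change in the source (A.R. Smith's overdraft) is recorded as a new dated snapshot row rather than an update, so the history survives.

```python
from datetime import date

# Hypothetical operational database: holds current values, updated in place.
operational = {"A.R. Smith": {"overdraft": " 250 "}}

# Hypothetical warehouse: an append-only list of dated snapshot rows.
warehouse = []


def extract(source):
    """Take a snapshot copy of the current operational rows."""
    return [(name, dict(row)) for name, row in source.items()]


def transform(rows):
    """Cleanse for consistency: trim stray whitespace and store integers."""
    return [(name.strip(), int(row["overdraft"].strip())) for name, row in rows]


def load(rows, snapshot_date, target):
    """Append only: existing warehouse rows are never updated or deleted."""
    for name, overdraft in rows:
        target.append((snapshot_date, name, overdraft))


load(transform(extract(operational)), date(2003, 1, 6), warehouse)
operational["A.R. Smith"]["overdraft"] = "400"  # a day-to-day update in place
load(transform(extract(operational)), date(2003, 1, 7), warehouse)

for row in warehouse:
    print(row)
```

The operational database now knows only the current figure, while the warehouse holds both snapshots; since nothing in it is ever updated, the update anomalies that normalization guards against cannot arise there.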
Finally, a DW has a time horizon (that is, it contains data covering a period) of five to ten years, whereas a database typically holds data that is current for two to three months. Data has thus taken on a new significance in the DM process, and meta data in particular is of vital importance.

Meta Data

Meta data is extremely important in a DW (Inmon 2002, 25-26, 113, 171-2). It is used to log the extraction and loading of data into the warehouse, to record how the data has been mapped during data cleansing and transformation, to locate the most appropriate data source as part of query management, to help end users build queries, and lastly to manage all the data in the DW. With the sheer quantity of data and the large number of indexes needed to ensure smooth querying in the DW, it is a matter of the highest priority that meta data is created and stored efficiently. The fact that data is held over such a long period adds to the urgency, especially as the data stewards responsible for putting the data into the DW may have long since moved on. If there is no accurate record of the data capturing all aspects of its meaning, the consequences for those using the DW are potentially dire. The DW contains a meta data manager to control this aspect of the DW. As with data cleansing, the tools now on the market specifically geared towards meta data management may be of interest to the linguistic and historical communities, with their vast quantities of data and meta data. Data modelling in a DW is of greater relevance, however.

4. Data Modelling in a Data Warehouse

There is a fundamental split in the DW community as to whether to construct a DW from scratch or to build it via data marts.

Data Marts

A data mart is essentially a cut-down DW that is restricted to one department or one business process.
Whilst acknowledging the pivotal role they play, the industry is divided about data marts. Inmon (2002, 142) recommended building the DW first, then extracting data from it to populate several data marts. The DW modelling expert Kimball (2002) advised building several data marts incrementally and then carefully integrating them into a DW. Whichever way is chosen, the data must first be modelled via dimensional modelling.

Dimensional Modelling

Dimensional models need to be linked to the company's corporate ERD (Entity Relationship Diagram), as the data is actually taken from this (and other) source(s). Dimensional models are somewhat different from ERDs, the typical star model having a central fact table surrounded by dimension tables.

Fact Tables

Kimball (2002, 16-18) defined a fact table as 'the primary table in a dimensional model where the numerical performance measurements of the business are stored…Since measurement data is overwhelmingly the largest part of any data mart, we avoid duplicating it in multiple places around the enterprise.' Thus the fact table contains dynamic numerical data such as sales quantities and sales and profit figures. It also contains keys linking it to the dimension tables.

Dimension Tables

Dimension tables contain the textual descriptors of the business process being modelled, and their depth and breadth define the analytical usefulness of the DW. As they contain descriptive data, it is assumed they will not change at the same rapid rate as the numerical data in the fact table, which will certainly change every time the DW is refreshed. Dimension tables can have 50-100 attributes, and these are not usually normalized. The data in these tables is often hierarchical and can be an accurate reflection of how the data actually appears in its raw state (Kimball 2002, 19-21).
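A minimal star schema in sqlite3, under stated assumptions: the table names (fact_sales, dim_store, dim_product) and all rows are hypothetical. The fact table holds only keys and numerical measures; the dimension tables hold descriptive text, with the store, city and region hierarchy kept denormalized in a single row, so a roll-up needs only one join per dimension.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables: descriptive text, deliberately denormalized, with the
-- hierarchy (store -> city -> region) kept together in one row.
CREATE TABLE dim_store (
    store_key INTEGER PRIMARY KEY,
    store TEXT, city TEXT, region TEXT
);
CREATE TABLE dim_product (
    product_key INTEGER PRIMARY KEY,
    product TEXT, category TEXT
);
-- Fact table: numerical measures plus a key to each dimension.
CREATE TABLE fact_sales (
    store_key   INTEGER REFERENCES dim_store(store_key),
    product_key INTEGER REFERENCES dim_product(product_key),
    quantity    INTEGER,
    revenue     REAL
);
INSERT INTO dim_store VALUES
    (1, 'Store 1', 'Portsmouth',  'South'),
    (2, 'Store 2', 'Southampton', 'South'),
    (3, 'Store 3', 'Leeds',       'North');
INSERT INTO dim_product VALUES (1, 'Tea', 'Grocery'), (2, 'Kettle', 'Hardware');
INSERT INTO fact_sales VALUES
    (1, 1, 10, 25.0), (2, 1, 5, 12.5), (2, 2, 1, 20.0), (3, 1, 8, 20.0);
""")

# Roll up revenue by region and category: one join per dimension, no chains.
rollup = conn.execute("""
    SELECT s.region, p.category, SUM(f.revenue)
    FROM fact_sales AS f
    JOIN dim_store   AS s ON s.store_key   = f.store_key
    JOIN dim_product AS p ON p.product_key = f.product_key
    GROUP BY s.region, p.category
    ORDER BY s.region, p.category
""").fetchall()

print(rollup)
# [('North', 'Grocery', 20.0), ('South', 'Grocery', 37.5), ('South', 'Hardware', 20.0)]
```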
There is no need to normalize, as data is not updated in the DW, although variations on the star model, such as the snowflake and starflake models, allow varying degrees of normalization in some or all of their dimension tables. Coding is discouraged, because over the long term the definitions of codes may be lost, and dimension tables should contain the fullest, most comprehensible descriptions possible (Kimball 2002, 21). The restriction of the fact table to numerical data has been a hindrance to academic computing. However, Kimball has recently developed 'factless' fact tables (Kimball 2002, 49), which need contain no numerical measures at all, only keys to the (often textual) dimension tables, thus opening the door to a much broader spectrum of possible DWs. The freedom to model data without heed to the strictures of normalization is a very attractive feature of DWs, and one which may appeal to those trying to construct linguistic or literary databases (or indeed any humanities database) with abstruse or awkward data.

5. Applying the Data Warehouse Architecture to Historical and Linguistic Research

One of the major advantages of data warehousing is the enormous flexibility in modelling data. Normalization is no longer an automatic straitjacket, and hierarchies can be represented in dimension tables. The expansive time dimension (Kimball 2002, 39) is a welcome by-product of this modelling freedom, allowing country-specific calendars, synchronization across multiple time zones and the inclusion of multifarious time periods. It is possible to add external data from diverse sources and summarised data from the source database(s). The DW is built for analysis, which immediately makes it attractive to humanities researchers. It is designed to receive huge volumes (terabytes) of data continuously, yet is sensitive enough to cope with the idiosyncrasies of geographic location dimensions within GISs (Kimball, 2002, 227).
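One way to picture an expansive time dimension for historical data is as a table of dates whose columns carry calendar-specific attributes. The Python sketch below assumes dates are stored in the proleptic Gregorian calendar that Python's datetime module uses, flags whether Britain was then using the Julian calendar (Britain changed over in September 1752, when 2 September was followed by 14 September), and derives a 'first Sunday after Michaelmas' attribute, reading 'after' as strictly after 29 September. The column names are hypothetical, and the sketch does not convert Julian-dated sources; that would be a separate step.

```python
from datetime import date, timedelta

# Britain's first Gregorian date: Wednesday 2 September 1752 (Julian)
# was followed by Thursday 14 September 1752 (Gregorian).
BRITISH_GREGORIAN_START = date(1752, 9, 14)


def first_sunday_after_michaelmas(year):
    """Return the first Sunday strictly after 29 September in `year`.

    Assumption: computed in the proleptic Gregorian calendar; for British
    years before 1752 the Julian date would need converting separately.
    """
    michaelmas = date(year, 9, 29)
    # weekday(): Monday == 0 ... Sunday == 6; 'or 7' skips Michaelmas itself
    days_ahead = (6 - michaelmas.weekday()) % 7 or 7
    return michaelmas + timedelta(days=days_ahead)


def time_dimension_row(d):
    """Build one row of a hypothetical time dimension table for date `d`."""
    return {
        "date": d.isoformat(),
        "year": d.year,
        "weekday": d.strftime("%A"),
        "british_calendar": "Gregorian" if d >= BRITISH_GREGORIAN_START else "Julian",
        "is_first_sunday_after_michaelmas": d == first_sunday_after_michaelmas(d.year),
    }


print(first_sunday_after_michaelmas(2003))  # 2003-10-05
print(time_dimension_row(date(1700, 1, 1))["british_calendar"])  # Julian
```

Precomputing such attributes once, in the dimension table, means that a query for 'the first Sunday after Michaelmas' becomes a simple filter rather than a calculation repeated by every researcher.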
Additionally, a DW has advanced indexing facilities that make it desirable for those controlling vast quantities of data. With a DW it is theoretically possible to publish the 'right data' that has been collected from a variety of sources and edited for quality and consistency. In a DW all data is collated, so a variety of different subsets can be analysed whenever required. It is comparatively easy to extend a DW and add material from a new source. The data cleansing techniques developed for data warehousing are of interest to researchers, as is the tracking facility afforded by the meta data manager (Begg and Connolly 1999, 931-933). In terms of using DWs 'off the shelf', some humanities research might fit into the 'numerical fact' topology, but some does not. The 'factless fact table' has been used in several American universities, but expertise in this area is not as widespread as it is for numerical fact tables. The whole area of data cleansing may perhaps be daunting for humanities researchers (as it is for those in industry). Ensuring that vast quantities of data are clean and consistent may be an unattainable goal for humanities researchers without recourse to expensive data cleansing software. DW technology is far from easy and depends on having existing databases to extract from, hence double the work. It is unlikely that researchers would take regular snapshots of their data, as occurs in industry, but data sets taken at different periods (e.g. the 1841 and 1861 censuses) could be treated as equivalent to DW snapshots. Whilst many DWs use familiar WYSIWYG interfaces and can be queried with SQL-type commands, there is undeniably a huge amount to learn in data warehousing. Nevertheless, there are many areas in linguistics where DWs may prove attractive.
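To illustrate how a factless fact table might serve census snapshots, here is a hypothetical sqlite3 sketch: each row of fact_enumeration records only that a person was enumerated at a place in a given census, with no numerical measure. It assumes that individuals have already been linked across censuses, which in practice is itself a substantial task; all names, parishes and rows are invented. Counting rows and testing for absences then answer typical questions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_person (person_key INTEGER PRIMARY KEY, name TEXT, occupation TEXT);
CREATE TABLE dim_census (census_key INTEGER PRIMARY KEY, census_year INTEGER);
CREATE TABLE dim_place  (place_key  INTEGER PRIMARY KEY, parish TEXT, county TEXT);
-- Factless fact table: keys only, no numerical measure. Each row records
-- the event 'this person was enumerated at this place in this census'.
CREATE TABLE fact_enumeration (
    person_key INTEGER REFERENCES dim_person(person_key),
    census_key INTEGER REFERENCES dim_census(census_key),
    place_key  INTEGER REFERENCES dim_place(place_key)
);
INSERT INTO dim_person VALUES (1, 'John Smith', 'cordwainer'), (2, 'Ann Cole', 'laundress');
INSERT INTO dim_census VALUES (1, 1841), (2, 1861);
INSERT INTO dim_place  VALUES (1, 'St Thomas', 'Hampshire'), (2, 'St Maurice', 'Hampshire');
INSERT INTO fact_enumeration VALUES (1, 1, 1), (2, 1, 2), (1, 2, 2);
""")

# Counting rows of a factless table answers 'how many' questions.
per_year = conn.execute("""
    SELECT c.census_year, COUNT(*)
    FROM fact_enumeration AS f
    JOIN dim_census AS c ON c.census_key = f.census_key
    GROUP BY c.census_year
    ORDER BY c.census_year
""").fetchall()

# People present in 1841 but absent from 1861 (e.g. died or moved away).
missing = conn.execute("""
    SELECT p.name FROM dim_person AS p
    WHERE EXISTS (SELECT 1 FROM fact_enumeration AS f JOIN dim_census AS c USING (census_key)
                  WHERE f.person_key = p.person_key AND c.census_year = 1841)
      AND NOT EXISTS (SELECT 1 FROM fact_enumeration AS f JOIN dim_census AS c USING (census_key)
                      WHERE f.person_key = p.person_key AND c.census_year = 1861)
""").fetchall()

print(per_year)  # [(1841, 2), (1861, 1)]
print(missing)   # [('Ann Cole',)]
```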
DWs and Linguistics Research
Brugman and Wittenburg (2001 IRCS conference proceedings) highlighted the importance of metadata for language resources since the launch of the EAGLES/ISLE metadata initiative. The problems described by Bliss and Ritter (2001 IRCS conference proceedings) concerning rigid relational data structures and pre-coding would be alleviated by data warehousing. Brown (2001 IRCS conference proceedings) outlined the dilemma arising from the alignment of database structures with particular linguistic theories, and also the conflict between quality and quantity of data. A DW has room for both vast quantities of data and a plethora of detail. No structure is forced onto the data, so several theoretical approaches can be investigated using the same DW. Dalli (2001 IRCS conference proceedings) observed that many linguistic databases are standalone with no hope of interoperability. His proposed solution, an interoperable database of Maltese linguistic data, involved an RDBMS and XML. Using DWs to store linguistic data should ensure interoperability. There is growing interest in corpus databases, as shown by the recent dedicated conference at Portsmouth in November 2003. Teich, Hansen and Fankhauser drew attention to the multi-layered nature of corpora and speculated as to how 'multi-layer corpora can be maintained, queried and analyzed in an integrated fashion.' A DW would be able to cope with this complexity. Nerbonne (1998) alluded to the 'importance of coordinating the overwhelming amount of work being done and yet to be done.' Kretzschmar (2001 IRCS conference proceedings) delineated 'the challenge of preservation and display for massive amounts of survey data.' There appear to be many linguistics databases containing data from a range of locations/countries.
Examples include ALAP, the American Linguistic Atlas Project; ANAE, the Atlas of North American English (part of the TELSUR Project); TDS, the Typological Database System, containing European data; and AMPER, the Multimedia Atlas of the Romance Languages. Future research may broaden its horizons: instead of the 'me and my database' mentality, with its emphasis on individual projects, an 'our integrated warehouse' approach may develop, with the emphasis on even larger-scale, collaborative projects. These could compare different languages or hold many different types of linguistic data for a particular language, allowing analysis on a new order of magnitude.
DWs and Historical Research
There are inklings of historical research involving data warehousing in Britain and Canada. A DW of current census data is underway at the University of Guelph, Canada, and the Canadian Century Research Infrastructure aims to house census data from the last 100 years in data marts constructed using IBM software at several sites across the country. At the University of Portsmouth, UK, a historical DW of American mining data is under construction using Oracle Warehouse Builder. These projects give some idea of the scale of project a DW can cope with: really large, country- or state-wide problems. Following these examples, it would be possible to create a DW to analyse all British censuses from 1841 to 1901 (approximately 10^8 bytes of data). Data for a city such as Winchester from a variety of sources over time (hearth tax, poor rates, trade directories, censuses, street directories, wills and inventories, GIS maps) could go into a city DW. Such a project is under active consideration for Oslo, Norway. Similarly, a Voting DW could contain voting data (poll book data and rate book data up to 1870) for the whole country, although it must be noted that some data is missing.
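A city DW of this kind rests on conformed dimensions: each source (census, trade directories and so on) is loaded into its own fact table, but all of them refer to one shared place dimension, so results from different sources can be lined up ('drilled across') on common attributes. The sketch below uses Python's built-in `sqlite3` module and two factless fact tables, in which the count of rows is the only 'fact'; the place hierarchy (parish, city, county) is kept in a single flattened dimension table. The table names and all rows, including the parish names, are invented for illustration.

```python
import sqlite3

SCHEMA = """
-- Conformed place dimension shared by every source; the parish-city-county
-- hierarchy is flattened into one denormalized table.
CREATE TABLE dim_place (place_key INTEGER PRIMARY KEY, parish TEXT, city TEXT, county TEXT);
-- Factless fact tables: each row records only that a person was enumerated
-- (census) or a trader was listed (directory) at a place in a given year.
CREATE TABLE fact_census_entry (place_key INTEGER REFERENCES dim_place, census_year INTEGER);
CREATE TABLE fact_directory_entry (place_key INTEGER REFERENCES dim_place, directory_year INTEGER);
"""

# Drill-across: summarise each fact table separately at the same grain
# (parish, year), then join the two result sets on the conformed attribute.
# The LEFT JOIN keeps parishes with census rows even if no directory entries exist.
DRILL_ACROSS = """
WITH c AS (SELECT p.parish, COUNT(*) AS persons
           FROM fact_census_entry f JOIN dim_place p USING (place_key)
           WHERE f.census_year = :year GROUP BY p.parish),
     d AS (SELECT p.parish, COUNT(*) AS traders
           FROM fact_directory_entry f JOIN dim_place p USING (place_key)
           WHERE f.directory_year = :year GROUP BY p.parish)
SELECT c.parish, c.persons, COALESCE(d.traders, 0)
FROM c LEFT JOIN d ON d.parish = c.parish
ORDER BY c.parish
"""


def build_city_warehouse() -> sqlite3.Connection:
    """Create an in-memory toy city DW populated with invented rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO dim_place VALUES (?, ?, ?, ?)",
        [(1, "Parish A", "Winchester", "Hampshire"),
         (2, "Parish B", "Winchester", "Hampshire")],
    )
    conn.executemany("INSERT INTO fact_census_entry VALUES (?, ?)",
                     [(1, 1851)] * 3 + [(2, 1851)] * 2)
    conn.executemany("INSERT INTO fact_directory_entry VALUES (?, ?)", [(1, 1851)])
    return conn


def drill_across(conn: sqlite3.Connection, year: int) -> list:
    """Return (parish, persons enumerated, traders listed) rows for one year."""
    return conn.execute(DRILL_ACROSS, {"year": year}).fetchall()
```

Because neither fact table holds a numerical measure, `COUNT(*)` supplies the figures. Adding another source, such as poor-rate assessments, would mean one more fact table keyed to the same `dim_place`, with no change to the existing tables; rolling up to city or county needs only a different `GROUP BY` column on the same flattened dimension.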
A Port DW could contain all data from the port books for all British ports, together with yearly trade figures. Similarly, a Street Directories DW would contain data from this rich source for the whole country for the last 100 years. Lastly, a Taxation DW could afford an overview of taxation of different types, areas or periods. Nineteenth-century British census data does not fit the typical DW model, as it lacks the numerical facts that go into a fact table, but with the advent of factless fact tables a DW could now be made to house this data. Since some institutions have Oracle site licenses, humanities researchers there with Oracle databases could use Oracle Warehouse Builder as part of the suite of programs available to them. These are practical project suggestions which would be impossible to construct using relational databases but which, if achieved, could grant new insights into our history. Comparisons could be made between counties and cities, and much broader analysis would be possible than hitherto.
6. Conclusions
The advances made in business data warehousing are directly applicable to many areas of historical and linguistics research. DW dimensional modelling would allow historians and linguists to model vast amounts of data on a countrywide (or larger) basis, incorporating data from existing databases and other external sources. Summary data could also be included, so a DW would contain more data than is currently possible; the data would also be richer than in current databases, because normalization is no longer obligatory. Whole data sources could be captured, allowing more post-hoc analysis. Dimension tables lend themselves particularly well to hierarchical modelling, so data need not be split across many tables, which would force joins at query time.
The time dimension lends itself particularly well to historical research, where significant difficulties have been encountered in the past. These suggestions for historical and linguistics research will undoubtedly resonate in other areas of humanities research. As feedback becomes available from the humanities DW projects outlined above, other researchers will be able to ascertain whether data warehousing is the new knowledge management architecture for humanities research.
7. References
Begg, C. and Connolly, T. (1999) Database Systems. Addison-Wesley, Harlow.
Bliss and Ritter (2001) IRCS (Institute for Research in Cognitive Science) Conference Proceedings. http://www.ldc.upenn.edu/annotation/database/proceedings.html
Bradley, J. (1994) Relational Database Design. History and Computing, 6.2, pp. 71-84.
Breure, L. (1995) Interactive Data Entry. History and Computing, 7.1, pp. 30-49.
Brown (2001) IRCS (Institute for Research in Cognitive Science) Conference Proceedings. http://www.ldc.upenn.edu/annotation/database/proceedings.html
Brugman and Wittenburg (2001) IRCS (Institute for Research in Cognitive Science) Conference Proceedings. http://www.ldc.upenn.edu/annotation/database/proceedings.html
Burt, J. and James, T. B. (1996) Source-Oriented Data Processing: The Triumph of the Micro over the Macro? History and Computing, 8.3, pp. 160-169.
Codd, E. F. (1972) Further Normalization of the Data Base Relational Model. In Rustin, R. (ed.) Data Base Systems. Englewood Cliffs, NJ.
Codd, E. F. (1974) Recent Investigations in Relational Data Base Systems. Proceedings IFIP Congress.
Dalli (2001) IRCS (Institute for Research in Cognitive Science) Conference Proceedings. http://www.ldc.upenn.edu/annotation/database/proceedings.html
Date, C. (2003) An Introduction to Database Systems. Addison-Wesley, Reading MA.
Elmasri, R. and Navathe, S. (2003) Fundamentals of Database Systems. Addison-Wesley, Reading MA.
Harvey, C. and Press, J. (1996) Databases in Historical Research. Basingstoke.
Hayashi and Hatton (2001) IRCS (Institute for Research in Cognitive Science) Conference Proceedings. http://www.ldc.upenn.edu/annotation/database/proceedings.html
Hudson, P. (2000) History by Numbers: An Introduction to Quantitative Approaches. London.
Inmon, W. H. (1991) The Cabinet Effect. Database Programming and Design, pp. 70-71.
Inmon, W. H. (2002) Building the Data Warehouse. Wiley, New York.
IRCS (Institute for Research in Cognitive Science) Conference Proceedings (2001). http://www.ldc.upenn.edu/annotation/database/proceedings.html
Kimball, R. and Ross, M. (2002) The Data Warehouse Toolkit. Wiley, New York.
Krivda, C. D. (1995) Data-Mining Dynamite. Byte, pp. 97-100.
Nerbonne, J. (ed.) (1998) Linguistic Databases. CSLI Publications, Stanford, California.
Stackpole, B. (2001) Wash Me. Data Management, CIO Magazine, 15 February.
Teich, Hansen and Fankhauser (2001) IRCS (Institute for Research in Cognitive Science) Conference Proceedings. http://www.ldc.upenn.edu/annotation/database/proceedings.html
Thaller, M. (1991) The Historical Workstation Project. Computers and the Humanities, 25, pp. 149-162.