Chapter 9 Signal Detection

9.1 Introduction

- So far, we have covered the basics of random processes. This chapter applies these basics to practical problems.

- One of the most common problems in automation is signal detection. A typical example is data communication: because of noise, a transmitted “1” may be received and interpreted as a “0”. Another example is voice communication: your friend’s voice at the other end of a cellular phone call may not sound the same as it used to.

- In general, the first type of problem is called signal detection while the second type is

referred to as signal estimation. In this chapter, we will study the signal detection

methods. The signal estimation methods will be discussed in the next chapter.

9.2 Binary Signal Detection

(1) Formulation of the problem

- The simplest signal detection problem is to decide between a “0” signal and a “1” signal. Note that at this stage we do not need random processes, as only a single observation, y, is available. Simple statistical analysis will do.

- To do this, we form two hypotheses:

H0: “0” was transmitted

H1: “1” was transmitted

- The decision between the hypotheses is made based on the received signal. Suppose the random signal Y is observed to take the value y; then

Choose H0 if P(H0 | y) > P(H1 | y) and

Choose H1 if P(H1 | y) > P(H0 | y)

The equivalent but concise notation is:

$$\frac{P(H_1 \mid y)}{P(H_0 \mid y)} \underset{H_0}{\overset{H_1}{\gtrless}} 1$$

which means choose H1 when the ratio is greater than 1 and choose H0 when the ratio is smaller than 1.

(2) Maximum a posteriori decision (MAP)

- The simplest and most commonly used signal detection method is the maximum a

posteriori decision (MAP) method.

- The MAP method is based on Bayes’ rule:

$$P(H_i \mid y) = \frac{f_{Y \mid H_i}(y \mid H_i)\, P(H_i)}{f_Y(y)}$$

where i = 0, 1.

- Substituting this into the decision rule above gives:

$$\frac{f_{Y \mid H_1}(y \mid H_1)\, P(H_1)}{f_{Y \mid H_0}(y \mid H_0)\, P(H_0)} \underset{H_0}{\overset{H_1}{\gtrless}} 1$$

or:

$$\frac{f_{Y \mid H_1}(y \mid H_1)}{f_{Y \mid H_0}(y \mid H_0)} \underset{H_0}{\overset{H_1}{\gtrless}} \frac{P(H_0)}{P(H_1)}$$

- Note that the ratio:

$$L(y) = \frac{f_{Y \mid H_1}(y \mid H_1)}{f_{Y \mid H_0}(y \mid H_0)}$$

is called the likelihood ratio. The MAP decision rule consists of comparing this ratio with the constant P(H0) / P(H1), which is called the decision threshold.

- If we have multiple observations, Y1, Y2, …, Yn, the likelihood ratio becomes:

$$L(y_1, y_2, \ldots, y_n) = \frac{f_{\mathbf{Y} \mid H_1}(y_1, y_2, \ldots, y_n \mid H_1)}{f_{\mathbf{Y} \mid H_0}(y_1, y_2, \ldots, y_n \mid H_0)}$$
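The likelihood-ratio test can be sketched in a few lines of Python. This is only an illustration: the function names, the Gaussian densities, and the numerical values are assumptions, and the joint density is assumed to factor into per-sample densities (independent observations), which the notes do not require in general.

```python
import math


def gaussian_pdf(y, mean, var):
    """Density of N(mean, var) evaluated at y."""
    return math.exp(-(y - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def likelihood_ratio(ys, pdf_h1, pdf_h0):
    """L(y1, ..., yn), assuming independent observations (product of ratios)."""
    ratio = 1.0
    for y in ys:
        ratio *= pdf_h1(y) / pdf_h0(y)
    return ratio


def map_decide(ys, pdf_h1, pdf_h0, p_h0, p_h1):
    """Return 1 (choose H1) if L(y) exceeds P(H0)/P(H1), otherwise 0 (choose H0)."""
    return 1 if likelihood_ratio(ys, pdf_h1, pdf_h0) > p_h0 / p_h1 else 0


# Hypothetical setup: signal levels 0 and 1 in unit-variance Gaussian noise.
pdf0 = lambda y: gaussian_pdf(y, 0.0, 1.0)
pdf1 = lambda y: gaussian_pdf(y, 1.0, 1.0)

decision_single = map_decide([0.9], pdf1, pdf0, 0.5, 0.5)
decision_multi = map_decide([0.2, 0.1, 0.4], pdf1, pdf0, 0.5, 0.5)
print(decision_single, decision_multi)
```

With equal priors the threshold is 1, so a single observation closer to 1 than to 0 leads to H1, while three observations that are all below 0.5 lead to H0.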

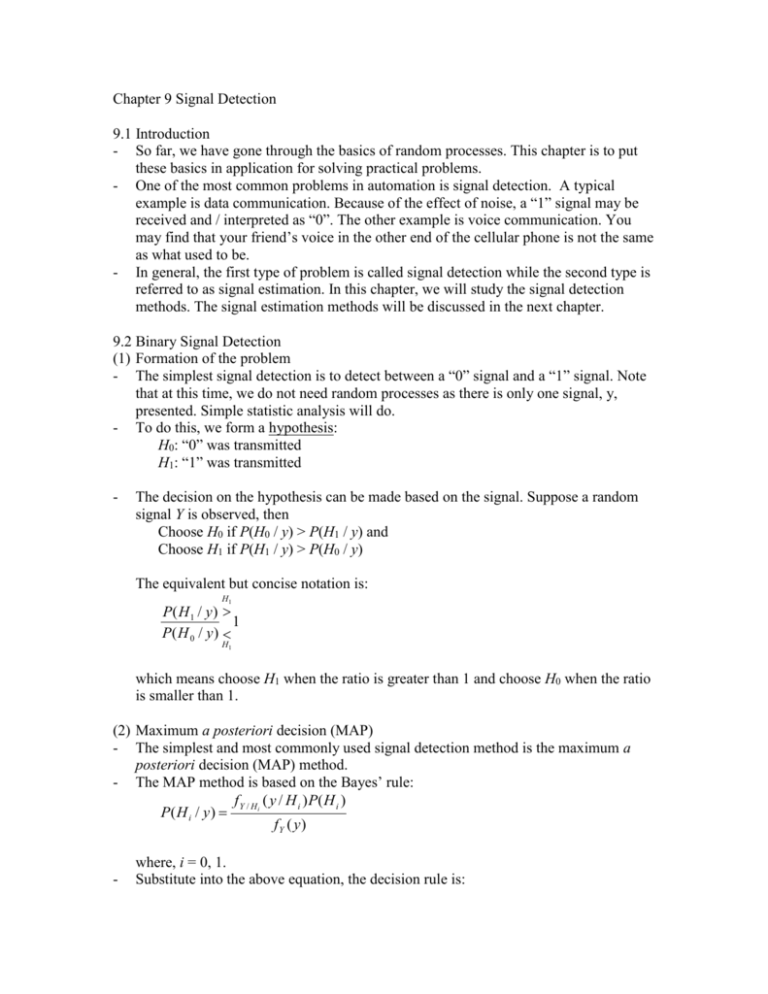

- When decisions are based on noisy observations, there is always a chance that some of the decisions will be incorrect. In the binary hypothesis testing problem, we have, as shown below, four possibilities:

(a) Decide in favor of H0 when H0 is true (correct decision)

(b) Decide in favor of H1 when H1 is true (correct decision)

(c) Decide in favor of H1 when H0 is true (incorrect decision, type I error)

(d) Decide in favor of H0 when H1 is true (incorrect decision, type II error)

The probability of error, with Di denoting the decision in favor of Hi, is:

Pe = P(H0)P(D1 | H0) + P(H1)P(D0 | H1)

[Figure: decision regions for H0 and H1 separated by the threshold, with the Type I and Type II error regions marked. Moving the threshold reduces the Type II error at the expense of the Type I error.]
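The trade-off between the two error types can be illustrated numerically. The sketch below assumes a Gaussian observation and a simple rule "decide H1 when y exceeds the threshold"; the signal levels, noise standard deviation, priors, and function names are hypothetical values chosen for illustration only.

```python
import math


def q_function(x):
    """Standard normal tail probability P[Z > x]."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def error_probabilities(threshold, mean0, mean1, sigma, p_h0):
    """Error probabilities for the rule 'decide H1 when y > threshold'.

    Assumes y is Gaussian with standard deviation sigma and mean mean0
    under H0 or mean1 under H1 (mean0 < mean1).
    """
    type1 = q_function((threshold - mean0) / sigma)  # P(D1 | H0)
    type2 = q_function((mean1 - threshold) / sigma)  # P(D0 | H1)
    pe = p_h0 * type1 + (1.0 - p_h0) * type2
    return type1, type2, pe


# Hypothetical setup: levels 0 and 1, sigma = 0.5, equal priors.
results = {t: error_probabilities(t, 0.0, 1.0, 0.5, 0.5) for t in (0.3, 0.5, 0.7)}
for t, (type1, type2, pe) in results.items():
    print(f"threshold={t:.1f}  type I={type1:.4f}  type II={type2:.4f}  Pe={pe:.4f}")
```

Lowering the threshold makes the type II error smaller and the type I error larger, and raising it does the opposite.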

- An example: In a binary communication system, the received signal is:

Y = X + N

where X = 0, 1 represents the signal and N ~ N(0, 1/9) is white Gaussian noise (zero mean, variance 1/9) independent of X. Given P(X = 0) = ¾ and P(X = 1) = ¼:

(a) Derive the MAP decision rule

(b) Calculate the error probability

Solution:

- Form the hypothesis:

H0: “0” was transmitted

H1: “1” was transmitted

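- Calculate the likelihood ratio:

$$f_{Y \mid H_0}(y \mid H_0) = f_{Y \mid X}(y \mid 0) = \sqrt{\frac{9}{2\pi}} \exp\left(-\frac{9}{2} y^2\right)$$

$$f_{Y \mid H_1}(y \mid H_1) = f_{Y \mid X}(y \mid 1) = \sqrt{\frac{9}{2\pi}} \exp\left(-\frac{9}{2} (y - 1)^2\right)$$

$$L(y) = \frac{\exp\left(-\frac{9}{2}(y - 1)^2\right)}{\exp\left(-\frac{9}{2} y^2\right)} = \exp\left(\frac{9}{2}(2y - 1)\right)$$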

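- Calculate the threshold. Since P(X = 0) = ¾ and P(X = 1) = ¼, the threshold is:

P(H0) / P(H1) = ¾ / ¼ = 3

- Therefore, the decision rule is:

$$\exp\left(\frac{9}{2}(2y - 1)\right) \underset{H_0}{\overset{H_1}{\gtrless}} 3$$

or, by taking the logarithm:

$$y \underset{H_0}{\overset{H_1}{\gtrless}} \frac{1}{2} + \frac{\ln 3}{9} \approx 0.622$$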

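- Based on the threshold, we can calculate the error probabilities, where Z is a standard normal random variable and 1/3 is the standard deviation of the noise:

P(D0 | H1) = P[Z > (1 - 0.622)/(1/3)] = 0.128

P(D1 | H0) = P[Z > (0.622 - 0)/(1/3)] = 0.031

Hence:

Pe = P(H0)P(D1 | H0) + P(H1)P(D0 | H1) = (3/4)(0.031) + (1/4)(0.128) ≈ 0.055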
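The numbers in this example can be checked with a short script. It is a sketch that uses only the values given in the problem (noise variance 1/9, priors ¾ and ¼); the variable names and the helper `q_function` are illustrative choices, not part of the original notes.

```python
import math


def q_function(x):
    """Standard normal tail probability P[Z > x]."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


sigma = 1.0 / 3.0          # noise standard deviation, variance 1/9
p_h0, p_h1 = 0.75, 0.25    # P(X = 0), P(X = 1)

# ln L(y) = (2y - 1) / (2 sigma^2) is compared with ln(P(H0)/P(H1)),
# which gives the threshold on y directly.
threshold = 0.5 + sigma ** 2 * math.log(p_h0 / p_h1)

p_d1_h0 = q_function((threshold - 0.0) / sigma)  # type I error
p_d0_h1 = q_function((1.0 - threshold) / sigma)  # type II error
pe = p_h0 * p_d1_h0 + p_h1 * p_d0_h1

print(f"threshold = {threshold:.3f}")
print(f"P(D1|H0) = {p_d1_h0:.3f}, P(D0|H1) = {p_d0_h1:.3f}, Pe = {pe:.3f}")
```

Each conditional error probability is weighted by the prior of the hypothesis it is conditioned on, so the rarer "1" contributes its larger miss probability with weight ¼.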

(3) Bayes’ decision

- The cost of a false alarm and the cost of a missed alarm may be different. Let

C00 – the cost of deciding H0 when H0 is true

C01 – the cost of deciding H0 when H1 is true (missed alarm)

C10 – the cost of deciding H1 when H0 is true (false alarm)

C11 – the cost of deciding H1 when H1 is true

Note that C00 and C11 are the costs of correct decisions and should be very small. The total cost of the decision is:

$$C = \sum_{i=0,1} \sum_{j=0,1} C_{ij}\, P(H_j)\, P(D_i \mid H_j)$$

- The Bayes’ decision is the one that minimizes the above cost and can be written as follows:

$$\frac{f_{Y \mid H_1}(y \mid H_1)}{f_{Y \mid H_0}(y \mid H_0)} \underset{H_0}{\overset{H_1}{\gtrless}} \frac{P(H_0)\,(C_{10} - C_{00})}{P(H_1)\,(C_{01} - C_{11})}$$

- Note that when C00 = C11 = 0 and C01 = C10 = 1, Bayes’ decision is the same as the MAP decision (some books treat the MAP decision as a special case of Bayes’ decision).

- In the above example, suppose that C00 = C11 = 0, C01 = 2 and C10 = 1 (a missed alarm causes twice as much damage as a false alarm); then the threshold is:

[(1)P(H0)] / [(2)P(H1)] = 3/2

Therefore, the decision rule is:

$$\exp\left(\frac{9}{2}(2y - 1)\right) \underset{H_0}{\overset{H_1}{\gtrless}} \frac{3}{2}$$
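To see how the costs shift the decision point, the following sketch computes the likelihood-ratio threshold for the MAP and Bayes cases of this example and converts each into a threshold on y. The function name `bayes_threshold` and the variable names are illustrative assumptions; the numerical values come from the example.

```python
import math


def bayes_threshold(p_h0, p_h1, c00, c01, c10, c11):
    """Likelihood-ratio threshold P(H0)(C10 - C00) / (P(H1)(C01 - C11))."""
    return p_h0 * (c10 - c00) / (p_h1 * (c01 - c11))


p_h0, p_h1 = 0.75, 0.25
sigma2 = 1.0 / 9.0  # noise variance from the example

eta_map = bayes_threshold(p_h0, p_h1, 0, 1, 1, 0)    # equal costs: MAP rule
eta_bayes = bayes_threshold(p_h0, p_h1, 0, 2, 1, 0)  # miss costs twice a false alarm

# For this example ln L(y) = (2y - 1) / (2 sigma^2), so L(y) > eta
# is equivalent to y > 1/2 + sigma^2 ln(eta).
y_map = 0.5 + sigma2 * math.log(eta_map)
y_bayes = 0.5 + sigma2 * math.log(eta_bayes)

print(f"MAP:   eta = {eta_map}, decide H1 when y > {y_map:.3f}")
print(f"Bayes: eta = {eta_bayes}, decide H1 when y > {y_bayes:.3f}")
```

Making a miss more expensive lowers the threshold on y, so the detector chooses H1 more readily.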

(4) Decision with multiple (continuous) observations

- When continuous (multiple) observations are available, we have to deal with random processes:

$$Y(t) = X(t) + N(t), \quad 0 \le t \le T$$

where

$$X(t) = \begin{cases} s_0(t) & \text{under hypothesis } H_0 \\ s_1(t) & \text{under hypothesis } H_1 \end{cases}$$

- Note that s0(t) and s1(t) are deterministic but may take many different forms. The simplest is s0(t) = -1 and s1(t) = 1, 0 ≤ t ≤ T (or s0(t) = 0 and s1(t) = 1, 0 ≤ t ≤ T).

- Assuming that we take m samples in the time interval (0, T), Yk = Y(tk), k = 1, 2, …, m, the detection hypotheses are:

yk = s0,k + nk, under H0

yk = s1,k + nk, under H1

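- This problem can be solved by the MAP method. Let $\mathbf{Y} = [Y_1, Y_2, \ldots, Y_m]^T$ and $\mathbf{y} = [y_1, y_2, \ldots, y_m]^T$; then:

$$L(\mathbf{y}) = \frac{f_{\mathbf{Y} \mid H_1}(\mathbf{y} \mid H_1)}{f_{\mathbf{Y} \mid H_0}(\mathbf{y} \mid H_0)} \underset{H_0}{\overset{H_1}{\gtrless}} \frac{P(H_0)}{P(H_1)}$$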

- The form of L(y) depends on the distribution of the noise. Let us consider two cases: N(t) is white noise and N(t) is “colored noise”.

(5) Signal detection under white noise

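- We have learned that the white noise, N(t), considered here has a Gaussian distribution with:

- $E\{N(t)\} = 0$

- $E\{N^2(t)\} = \sigma^2 = N_0 B$

- $R_{NN}(\tau) = N_0 B \, \dfrac{\sin 2\pi B \tau}{2\pi B \tau}$

- $S_{NN}(f) = \begin{cases} \dfrac{N_0}{2} & |f| < B \\ 0 & \text{elsewhere} \end{cases}$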

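- Hence, treating the noise samples as independent, the conditional distribution of Y is Gaussian with:

$$f_{\mathbf{Y} \mid H_i}(\mathbf{y} \mid H_i) = \prod_{k=1}^{m} \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(y_k - s_{i,k})^2}{2\sigma^2}\right)$$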

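- Taking the logarithm of the likelihood ratio gives $\ln L(\mathbf{y}) = \left[2\mathbf{y}^T(\mathbf{s}_1 - \mathbf{s}_0) - \mathbf{s}_1^T\mathbf{s}_1 + \mathbf{s}_0^T\mathbf{s}_0\right] / (2\sigma^2)$, so the detection rule is:

$$\mathbf{y}^T(\mathbf{s}_1 - \mathbf{s}_0) \underset{H_0}{\overset{H_1}{\gtrless}} \sigma^2 \ln\frac{P(H_0)}{P(H_1)} + \frac{1}{2}\left(\mathbf{s}_1^T\mathbf{s}_1 - \mathbf{s}_0^T\mathbf{s}_0\right)$$

where $\mathbf{s}_i = [s_{i,1}, s_{i,2}, \ldots, s_{i,m}]^T$, i = 0, 1.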
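A minimal sketch of this detector is shown below. It correlates the received samples with the difference of the two signal vectors and compares the result with the threshold from the rule above. The function names and the sample values are hypothetical; plain Python lists are used to keep the example self-contained.

```python
import math


def dot(a, b):
    """Inner product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def white_noise_detect(y, s0, s1, sigma2, p_h0, p_h1):
    """Decide H1 (return 1) when y^T (s1 - s0) exceeds the MAP threshold."""
    diff = [a - b for a, b in zip(s1, s0)]
    statistic = dot(y, diff)
    threshold = sigma2 * math.log(p_h0 / p_h1) + 0.5 * (dot(s1, s1) - dot(s0, s0))
    decision = 1 if statistic > threshold else 0
    return decision, statistic, threshold


# Hypothetical case: s0(t) = -1, s1(t) = 1, four samples, equal priors.
s0 = [-1.0] * 4
s1 = [1.0] * 4
y = [0.3, -0.2, 0.8, 0.1]

decision, statistic, threshold = white_noise_detect(y, s0, s1, 1.0, 0.5, 0.5)
print(decision, statistic, threshold)
```

For antipodal signals with equal priors the threshold is zero, so the rule reduces to checking the sign of the sum of the samples.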

- An example: A binary communication system sends an equiprobable sequence of 1’s and 2’s. However, we receive the signal together with a white noise that has the power spectral density:

$$S_{NN}(f) = \begin{cases} 10^{-7} & |f| < 10^{6} \\ 0 & \text{elsewhere} \end{cases}$$

Furthermore, suppose the receiver takes 10 samples for each bit. What are the detection rule and the average probability of error?

Solution:

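- Since the sequence has equiprobable 1’s and 2’s, P(H0) / P(H1) = 1. The detection rule becomes

$$\mathbf{y}^T(\mathbf{s}_1 - \mathbf{s}_0) \underset{H_0}{\overset{H_1}{\gtrless}} \frac{1}{2}\left(\mathbf{s}_1^T\mathbf{s}_1 - \mathbf{s}_0^T\mathbf{s}_0\right)$$

where $\mathbf{y} = [y_1, y_2, \ldots, y_m]^T$, $\mathbf{s}_1 = [2, 2, \ldots, 2]^T$ and $\mathbf{s}_0 = [1, 1, \ldots, 1]^T$. Hence, the detection rule is:

$$\sum_{i=1}^{m} y_i \underset{H_0}{\overset{H_1}{\gtrless}} \frac{3m}{2}$$

or:

$$\frac{1}{m}\sum_{i=1}^{m} y_i \underset{H_0}{\overset{H_1}{\gtrless}} 1.5$$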

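- The probability of error can be found by comparing the threshold 1.5 with the average value of the samples:

$$Z = \frac{1}{m}\sum_{i=1}^{m} Y_i$$

Note that Z has a Gaussian distribution with mean 1 under H0 and mean 2 under H1, and variance

$$\sigma_Z^2 = \operatorname{var}\{Z\} = \operatorname{var}\left\{\frac{1}{m}\sum_{i=1}^{m} N(t_i)\right\} = \frac{\sigma_N^2}{m}$$

where:

$$\sigma_N^2 = \int_{-\infty}^{\infty} S_{NN}(f)\,df = (10^{-7})(2 \times 10^{6}) = 0.2$$

Hence, with m = 10, $\sigma_Z^2 = 0.2/10 = 0.02$ and $\sigma_Z \approx 0.141$.

- Based on the definition, with U denoting a standard normal random variable:

Pe = P(H0)P(D1 | H0) + P(H1)P(D0 | H1)

= (½)P[U > (1.5 - 1)/(0.141)] + (½)P[U > (2 - 1.5)/(0.141)]

≈ 0.0002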
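The figures in this example can be reproduced with the sketch below. It assumes m = 10 samples per bit, as stated in the problem, and uncorrelated noise samples, so that the variance of the sample mean is the noise variance divided by m. The variable names are illustrative.

```python
import math


def q_function(x):
    """Standard normal tail probability P[U > x]."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


psd_level = 1e-7   # S_NN(f) for |f| < bandwidth
bandwidth = 1e6    # one-sided bandwidth in Hz
m = 10             # samples per bit (from the problem statement)

noise_var = psd_level * 2.0 * bandwidth  # integral of the PSD over (-B, B)
var_z = noise_var / m                    # variance of the sample mean
sigma_z = math.sqrt(var_z)

threshold = 1.5
p_d1_h0 = q_function((threshold - 1.0) / sigma_z)  # mean of Z is 1 under H0
p_d0_h1 = q_function((2.0 - threshold) / sigma_z)  # mean of Z is 2 under H1
pe = 0.5 * p_d1_h0 + 0.5 * p_d0_h1

print(f"noise variance = {noise_var:.3f}, var(Z) = {var_z:.3f}, sigma_Z = {sigma_z:.3f}")
print(f"Pe = {pe:.2e}")
```

Because the two signal levels are symmetric about the threshold and the priors are equal, the two conditional error probabilities are identical.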

(6) Signal detection under colored noise

- The difference between white noise and “colored noise” is the covariance. The latter has a covariance matrix:

$$\Sigma_N = [\sigma_{ij}] = \left[E\{N(t_i)\,N(t_j)\}\right]$$

which has nonzero off-diagonal terms.

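- Considering the covariance and taking the logarithm, we have:

$$\ln L(\mathbf{y}) = \mathbf{y}^T \Sigma_N^{-1} \mathbf{s}_1 - \mathbf{y}^T \Sigma_N^{-1} \mathbf{s}_0 + \frac{1}{2}\mathbf{s}_0^T \Sigma_N^{-1} \mathbf{s}_0 - \frac{1}{2}\mathbf{s}_1^T \Sigma_N^{-1} \mathbf{s}_1$$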

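Therefore, the detection rule is:

$$\mathbf{y}^T \Sigma_N^{-1} \mathbf{s}_1 - \mathbf{y}^T \Sigma_N^{-1} \mathbf{s}_0 + \frac{1}{2}\mathbf{s}_0^T \Sigma_N^{-1} \mathbf{s}_0 - \frac{1}{2}\mathbf{s}_1^T \Sigma_N^{-1} \mathbf{s}_1 \underset{H_0}{\overset{H_1}{\gtrless}} \ln\frac{P(H_0)}{P(H_1)}$$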
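As a rough sketch, the colored-noise rule can be evaluated without forming the inverse covariance explicitly, by solving a linear system for each signal vector. The solver below is a plain Gaussian elimination written for this illustration, and the two-sample covariance matrix and observation values are hypothetical.

```python
import math


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def dot(a, b):
    """Inner product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def colored_noise_detect(y, s0, s1, cov, p_h0, p_h1):
    """Return (decision, ln L(y)) for Gaussian noise with covariance cov."""
    w1 = solve(cov, s1)  # inverse covariance times s1
    w0 = solve(cov, s0)  # inverse covariance times s0
    log_lr = dot(y, w1) - dot(y, w0) + 0.5 * dot(s0, w0) - 0.5 * dot(s1, w1)
    decision = 1 if log_lr > math.log(p_h0 / p_h1) else 0
    return decision, log_lr


# Hypothetical two-sample case with signal levels 1 and 2.
y = [1.8, 1.9]
s0, s1 = [1.0, 1.0], [2.0, 2.0]
white = [[1.0, 0.0], [0.0, 1.0]]
colored = [[1.0, 0.5], [0.5, 1.0]]

decision_white, log_lr_white = colored_noise_detect(y, s0, s1, white, 0.5, 0.5)
decision_colored, log_lr_colored = colored_noise_detect(y, s0, s1, colored, 0.5, 0.5)
print(decision_white, log_lr_white, decision_colored, log_lr_colored)
```

With an identity covariance the statistic coincides with the white-noise rule for unit variance. The correlated case gives a smaller log-likelihood ratio for the same data, because positively correlated noise makes the two samples carry less independent evidence.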