
RoBach: A Case Study in the Use of Genetic Algorithms for Automatic Music Composition

Adam Aaron, Adam Bildersee, Rebecca Cooper, Malamo Countouris, Carlin Eng, Melissa Fritz, Tim Heath, Kyle Leiby, Yunxue Xu, Luke Zarko

Advisor: Peter Lee

TA: Joel Donovan

Abstract

For our team project, we attacked a problem that has eluded resolution for decades: automated music composition. Almost since the time the computer has been powerful enough to handle the task, people have tried to create programs that could compose good music, but most have failed. We attempted to solve the problem by using a genetic algorithm, a very powerful search tool that we hoped would lead us in the right direction. To improve our chances of success, we narrowed our search to Bach’s chorales. Using a series of building blocks, we defined billions of possible composers to write music, along with critics to evaluate them. By combining the same basic blocks in different sequences, different composers and critics could be formed which would follow different rules when writing or listening to music. Once we defined the composers and critics in terms of a chromosome, we ran them through the algorithm in a form of coevolution, to produce superior critics and composers. We hoped the music produced by the composers would gradually improve, and perhaps even become enjoyable, after long periods of computational time. The results showed that although the composers did improve, they never achieved the same level of excellence as Bach. To obtain better results in another experiment, we would increase population size, chromosome size, the number of building blocks, and evolution time.

I. Introduction

RoBach is a project designed to compose music artificially with a computer, through programs

which produce and sort through many generated music-making bit strings. The programs are

designed to use information about structure and harmony and to generate completely new music

based on these qualities.

Since music composition is such a broad area, we focused our

experiment on creating music in the general style of Bach’s chorales. These have a relatively

clear chord and melody structure that is easy to evaluate mathematically and reproduce

artificially. Basically, our goal was to produce music completely through a computer that would

be at least tolerable enough to listen to, if not exactly like Bach.

Page 2 Aaron, Bildersee, Cooper, Countouris, Eng, Fritz, Heath, Leiby, Xu, Zarko

To produce music, one approach is to take individual aspects, such as chord patterns or melody

structure, and mix and match various generators to create a composer that can produce an

infinite stream of music.

If we use a 32-bit representation for a composer, then there are 2^32 (roughly 4.3 billion)

possible composers. We must have a way of sorting through them, for most of them will

produce music that sounds nothing like Bach. We therefore also produce critics, which use the characteristics of Bach’s music that we have isolated and defined. The critics judge the music produced by the composers according to how closely its structure matches that of Bach’s chorales. In our experiment, we use a method similar to a chi-square test to compare the data from the Bach chorales to the data from the composers’ music. The closer the match, the more highly the critic will rate the composer.
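The comparison described above can be sketched as follows. This is only an illustration under stated assumptions: the function names, the use of relative-frequency histograms, and the mapping from distance to score are hypothetical and are not taken from the project's actual critics.

```python
from collections import Counter

def histogram(values):
    """Relative-frequency histogram of a sequence of discrete values."""
    counts = Counter(values)
    total = sum(counts.values())
    return {key: count / total for key, count in counts.items()}

def chi_square_distance(observed, expected, eps=1e-9):
    """Chi-square-style distance between two relative-frequency histograms.

    eps (an assumption of this sketch) keeps the division defined when the
    corpus histogram has no entry for a value the composer produced.
    """
    keys = set(observed) | set(expected)
    return sum(
        (observed.get(k, 0.0) - expected.get(k, 0.0)) ** 2
        / (expected.get(k, 0.0) + eps)
        for k in keys
    )

def critic_score(observed, expected):
    """Map the distance into (0, 1]; identical histograms score exactly 1.0."""
    return 1.0 / (1.0 + chi_square_distance(observed, expected))
```

A critic built this way rates a composer more highly the closer the composer's histogram lies to the corpus histogram for the same statistic.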

Composers are generated by genetic algorithms. In this process, the composers rated highest by

the critics will have a higher probability of being selected for a future generation of composers.

This future generation is formed by cross-breeding and sometimes slightly mutating these higher-rated composers. The process is repeated many times until optimization is reached: in our case, until we obtain a composer that produces good music, preferably like Bach. Our project implements this idea to produce and sort through the composers and critics to try to find at least one that makes music somewhat in the style of Bach’s chorales.

A. Music Generation

Automatic music composition is one of the unsolved problems of computer science. The more

generalized form of the problem, algorithmic music composition, actually pre-dates the computer.

Humans have long sought to compose music by executing a procedure with a finite number of

steps. Mozart, for example, took musical fragments and rolled dice to determine randomly the

order in which they were strung together to form new compositions. The advent of computers

greatly extended the possibilities for algorithmic music composition.

1. Introduction to Music Generation

In 1956, Lejaren Hiller and Leonard Isaacson became the first to create computer-generated

music when they released the Illiac Suite, a four-movement piece written for string quartet by the

Illiac computer at the University of Illinois. The machine began with some crude input it had

produced and altered that input by applying a series of operations. From the pool of resulting

outputs, it then chose the best works according to a set of criteria. This model of the composer as

a generator, modifier, and selector has been adopted a number of times since Hiller and

Isaacson.1

A central question of automatic music composition is how to balance the amount of structure and

novelty in the music. The dilemma has continually resisted solution. Placing more constraints on

the computer increases the likelihood that its compositions will follow musical conventions and be

agreeable to the human ear, but it decreases inventiveness and the capacity to surprise. On the

other hand, giving more freedom to the composer increases innovation but also increases the

Journal of the PGSS

RoBach: Automatic Music Composition

Page 3

chance of generating wild, poorly structured pieces. Different approaches to automatic music

composition yield results that fall along different regions of the structure/novelty continuum.

Optimal solutions would occur near the middle of the continuum; these solutions have yet to be

found.

2. Music Generation Strategies

After Hiller and Isaacson sparked serious interest in the problem of music generation, various

strategies to solve it have been developed and tested. A brief but good survey is found in

“Frankensteinian Methods for Evolutionary Music Composition,” an article by Peter M. Todd and

Gregory M. Werner that appears in the collection Musical Networks: Parallel Distributed Perception

and Performance.

Rule-based systems devise a set of laws, based on a statistical analysis of music or principles of

music theory, to govern the composition process. For instance, one such rule-based system

banned parallel fifths. The rules might include penalties for breaking them, and the system can be

designed to have a certain amount of tolerance for rule breaking, since human creativity often

transcends musical conventions. Perhaps the penalties must accrue to a certain amount before

the computer abandons its current path down the decision tree, returns to a prior step in the

process, and starts anew from that point.2

A purely rule-based composer, however, pays the price of novelty for structure. Besides, it is very

difficult to formulate a set of rules that would define how to generate good music in the first place.

Some people ask whether it is even possible to codify creativity in this manner. The system has

the additional disadvantage of not being able to learn and grow, in the sense of changing the

rules on its own or creating new ones to adapt to new situations. 3

A second approach attempts to develop a composer by training the machine on a corpus, or

repertoire of pre-selected “good” music, instead of imposing a series of rules. Early versions of

this approach calculated the probability of a particular note following another note in the

compositions of the corpus and then employed Markov chains to create new music by selecting

notes according to those probabilities. It performed well on a note-by-note level, but the

compositions possessed no meaningful larger-scale structure such as phrasing, themes, and so

forth.4
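A first-order Markov composer of the kind described above might look like the sketch below. The representation of a piece as a list of note names and the function names are assumptions made for illustration; they do not describe any particular historical system.

```python
import random
from collections import defaultdict

def train_transitions(corpus):
    """Count how often each note is followed by each other note in the corpus."""
    counts = defaultdict(lambda: defaultdict(int))
    for piece in corpus:
        for current, following in zip(piece, piece[1:]):
            counts[current][following] += 1
    return counts

def generate(counts, start, length, rng):
    """Walk the chain, sampling each next note in proportion to its count."""
    notes = [start]
    while len(notes) < length:
        successors = counts.get(notes[-1])
        if not successors:              # dead end: note never had a successor
            break
        choices, weights = zip(*successors.items())
        notes.append(rng.choices(choices, weights=weights)[0])
    return notes
```

Because each choice depends only on the previous note, the output respects note-to-note statistics but has no memory of phrases or themes, which is the weakness noted in the text.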

Researchers also tried a technique known as neural nets to train the composer, again with a

corpus, to acquire a sense of higher-level musical structures. Neural nets echo the physical

architecture of the human mind. If the brain’s anatomy is mimicked, one hopes that its behavior

can be simulated. A neural net is composed of layers that receive input, hidden layers that modify

the input, and layers that export output, each layer being a collection of neurons. One begins with

a set of inputs called the training set for which the correct outputs are known. For instance, the

inputs might be the starting measures of a piece in the corpus and the correct outputs the

subsequent measures. The system is trained so that when given the training set, it generates


outputs as close to the correct ones as possible. Once it has learned the patterns to mimic the

corpus, it may be able to take inputs outside of the training set and compose music like that of the

corpus. In experiments, however, neural networks still composed at a rather shallow level. A

reason might be that so far scientists have not been able to entirely duplicate the hugely parallel

neural structure of the brain.5

A more recent approach to automatic music composition looks in yet a new direction; it is based

on natural selection. This is the approach our team implemented to automatically compose Bach

chorales. Intuitively, the approach makes sense. Humans have acquired the ability to do

complicated tasks through evolution, in the course of which large advances develop through

small improvements each generation. Evolution is also capable of generating a considerable

amount of diversity, as it has in nature, while converging upon a desired set of features. The

evolutionary strategy of applying genetic algorithms has been proven mathematically to give a

very good compromise between efficiency and effectiveness.

In this approach, a population of composers generates a large quantity of music. A fitness

function, the critic, evaluates the music and assigns it a score. The composers that receive higher

scores have a better chance of propagating themselves and surviving to the next generation.

Actually, a single critic is not enough because composers might find some loophole in the fitness

function they can exploit, some trivial way of satisfying the critic and getting a high score without

really producing quality music. Thus, the team maintains a population of critics as well as

composers for its project. Furthermore, the two populations are co-evolved, so that critics will

acquire more discerning power as the composers generate better product across generations.

It is important to note that the different approaches described above are not mutually exclusive

and can be implemented together. This project uses genetic algorithms to carry out the search for

the best composer, but it also incorporates ideas from rule-based strategies and systems that are

trained on corpora to construct composers and critics.

B. Genetic Algorithms

Developed by John Holland and his colleagues at the University of Michigan, genetic algorithms

combine the ideas of modern computer science with the traditional biological ideas of genetics

and natural selection. In typical implementations, these algorithms use a special, case-specific

coding of parameter data and a scoring routine to drive various emulations of natural processes

(often reproduction, crossover, and mutation) on that coding. 6

Genetic algorithms are primarily concerned with the task of optimization. They are applied to some

function with output that can be evaluated objectively, and their goal is to constantly increase the

favorability of this evaluation. Any algorithm of this type ought to be both robust (able to work on

many different types of data) and efficient (even in very large search spaces). Robustness is

important because it encourages software reuse and decreases the cost of implementation 7;


efficiency is important to decrease the cost of actually running the software and to give users

more freedom for experimentation. Genetic algorithms have both of these traits.

Other optimization algorithms usually lack one of these two key features. Calculus-based

approaches, sometimes called ‘hill climbing’ algorithms, depend on the existence of continuity

and derivatives. This greatly limits the domain of any implementation of such an algorithm, as it

would be completely unsuited to the noise and probable discontinuity of real-world data. They are

also limited in search scope: as they seek to maximize by simply climbing hills in the parameter

space, they can be easily tricked into thinking that a local maximum is a global maximum 8.

Enumerative searches, wherein the parameter space is simply iterated over, are easy to

understand and are very robust, but they are terribly inefficient for search spaces of even

moderate size. Random searches are, on average, as inefficient as enumerative searches 9.

Genetic algorithms work so well because they need no auxiliary information: they work only with

the function and its fitness (calculated from the function’s output). The probability of misidentifying

local maxima as absolute maxima is minimized both because their mechanics allow them to

search from more than one place at once, and because they seek improvement rather than the

absolute best result10. They are efficient because, although part of the search is driven by

randomness, every step moves in a direction toward likely improvement; thus, only a small and

necessary part of the function’s landscape is observed 11.

Genetic algorithms operate on a coding of parameters for the function being maximized. This

coding is a string in some problem-specific alphabet, and could mean completely different (or

even contrary) things in different situations. One of the implementation specific requirements of a

genetic algorithm is a routine to interpret these strings and turn them into a new function. This

routine is treated as a black box for purposes of the optimization, and only the codings are

modified12. In order for a particular coding to be given a fitness value, another component is

required that objectively measures the output of the interpreted string. Strings are compared to

one another based on these values and not to some constant that lives outside the system.

The greatest difficulty in implementing a genetic algorithm usually is coming up with a fitness

function that is difficult to ‘cheat’; this could be compared to the tax code, in which a few

enterprising taxpayers always seem to find a handy loophole to exploit. In both a genetic

algorithm and real life, this kind of information spreads very quickly. One solution is to constantly

change the tax code or fitness function to either remove or devalue the benefits a loophole can

provide. It is important that these changes not be too drastic, though; otherwise, the target of

optimization would move too quickly for any satisfying result to develop.


[Figure 1 diagram labels: String, Fitness; Reproduction; Crossover & Mutation; New Population]

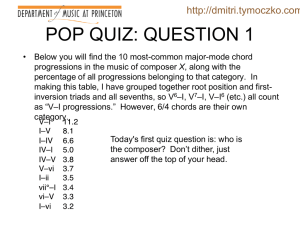

Figure 1: Genetic Algorithm Operation

The algorithm’s core operations are very simple; see Figure 1 for a graphical representation.

First, a large population of random strings is generated. This is necessary to give the rest of the

process a point from which to start. Next, the main generational loop begins. All of the strings in

the population are evaluated with the supplied fitness function. In the first stage, reproduction,

strings are copied into a new population based on their fitnesses. One can visualize this process

by imagining a roulette wheel combined with a pie chart, where each string is given a section of

the wheel. Strings with higher fitness values have larger sections, and are therefore more likely to

be picked for copying. It is still possible for strings with lower fitnesses to be selected, though,

because they might contain advantageous sequences of genes.13
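The roulette-wheel picture of reproduction can be sketched as below. This is a minimal illustration that assumes non-negative fitness values with a positive sum; the function names are hypothetical.

```python
import random

def roulette_select(population, fitnesses, rng):
    """Pick one string with probability proportional to its fitness."""
    total = sum(fitnesses)
    spin = rng.uniform(0, total)        # where the ball lands on the wheel
    running = 0.0
    for individual, fitness in zip(population, fitnesses):
        running += fitness              # advance to the end of this slice
        if spin <= running:
            return individual
    return population[-1]               # guard against floating-point round-off

def reproduce(population, fitnesses, rng):
    """Build a new population of the same size by spinning once per slot."""
    return [roulette_select(population, fitnesses, rng) for _ in population]
```

Strings with small slices can still be chosen, only less often, which matches the point that weaker strings may carry useful genes.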

In the next step, crossover, random pairs of strings from the new population are chosen to mate.

A point, the same in both strings, is picked; the data of the strings past that point are swapped.

This way, partial best solutions can combine to form a full solution. Strings that were not very

strong before crossover can be greatly enhanced by codings from their paired strings as well.

These first two operations form the heart of the genetic process; the average fitness of a

population grows by swapping information about best practices. 14
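Single-point crossover as described above can be sketched in a few lines. Representing strings as Python str values of '0' and '1' is an assumption made for readability.

```python
import random

def crossover(parent_a, parent_b, rng):
    """Swap the tails of two equal-length bit strings at a shared random point."""
    assert len(parent_a) == len(parent_b)
    point = rng.randrange(1, len(parent_a))   # cut strictly inside the string
    child_a = parent_a[:point] + parent_b[point:]
    child_b = parent_b[:point] + parent_a[point:]
    return child_a, child_b
```

Every bit of both parents survives in one child or the other, so crossover only recombines existing information; it cannot create a bit value that neither parent had at a given position.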

The last stage in the generational loop is mutation, usually taking the form of a bit flip in a random

string in the population. This step ensures that no good codings can be completely lost through

disadvantageous crossover or reproduction. Its probability must be kept low;

otherwise, the algorithm degenerates into an inefficient random search.15
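One common way to implement mutation is sketched below. Note the assumption: this version flips each bit independently with a small probability, which is a widely used variant rather than the single flip in one random string that the text describes. The integer encoding and parameter names are also illustrative.

```python
import random

def mutate(chromosome, rate, rng, width=32):
    """Flip each of the low `width` bits independently with probability `rate`."""
    for bit in range(width):
        if rng.random() < rate:
            chromosome ^= 1 << bit      # XOR with a one-bit mask flips that bit
    return chromosome
```

With rate near zero the string is almost always returned unchanged; as rate approaches one half, the output becomes independent of the input, which is the degeneration into random search mentioned above.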

Every iteration of the loop yields a ‘current best’ result—the string with the highest fitness value.

This must be passed back through the string interpreter to convert it into something useful to the

problem being solved. It is up to the implementer to decide when the generational loop should

terminate altogether. This could be based on human input, where an operator constantly monitors

the outputs from the best strings until one yields a satisfactory result. Alternatively,

implementations could choose to halt once a string achieves a certain target fitness value, or

even once the generational loop has been executed a certain number of times.
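Putting the three stages together, the generational loop and two of the stopping rules mentioned above (target fitness and generation budget) can be sketched as follows. Everything here is a toy under stated assumptions: chromosomes are small integers, fitness values are non-negative, the population size is even, and the parameter defaults are arbitrary.

```python
import random

def evolve(fitness, width=16, pop_size=30, mutation_rate=0.01,
           target=None, max_generations=200, seed=0):
    """Run the generational loop; return (best string seen, generations run)."""
    rng = random.Random(seed)
    population = [rng.getrandbits(width) for _ in range(pop_size)]
    best = max(population, key=fitness)
    for generation in range(max_generations):
        scores = [fitness(c) for c in population]
        best = max([best] + population, key=fitness)
        if target is not None and fitness(best) >= target:
            return best, generation            # stop: target fitness reached
        # Reproduction: fitness-proportional sampling (offset avoids all-zero weights).
        parents = rng.choices(population, weights=[s + 1e-9 for s in scores], k=pop_size)
        # Crossover: pair neighbours and swap the bits below a random cut point.
        children = []
        for a, b in zip(parents[0::2], parents[1::2]):
            low = (1 << rng.randrange(1, width)) - 1
            children += [(a & ~low) | (b & low), (b & ~low) | (a & low)]
        # Mutation: flip each bit with a small probability.
        population = [c ^ sum(1 << i for i in range(width) if rng.random() < mutation_rate)
                      for c in children]
    return best, max_generations               # stop: generation budget exhausted
```

A simple stand-in fitness function for experimenting with this loop is the number of 1 bits in the string, for example evolve(lambda c: bin(c).count("1"), target=16).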

C. The Corpus: Bach Chorales


In order for a critic function to assign a fitness value to a given composer, it must have a basis on

which it forms its evaluation. Since the goal of this case study is to develop a composer capable

of writing songs similar to the chorales of Johann Sebastian Bach, the basis used for evaluation

must effectively compare the works of composers against Bach’s actual chorales. This can be

done either by theoretical analysis, comparing the theoretical attributes of chorales and generated

works, or by statistical analysis, comparing quantitative measurements of chorales against those

of generated works. For the purpose of this case study, we chose to use statistical analysis for

the critic functions and for some composing functions. Some theoretical qualities of music are

considered by composer blocks but are insignificant when compared to the depth of theory

needed for critical analysis.

The works of composers are intended to emulate not one specific chorale but rather Bach’s whole

style of chorale composition. Therefore, a large sampling of his chorales needed to be compiled

into a corpus, or a “body” of works. Histograms were generated for all analyzed statistics, and

were used as a method of comparison against the artificially generated works.

The choice to use the chorales of J.S. Bach as a model was in part influenced by the methods of

analysis. For various reasons, these chorales make excellent candidates for both statistical and

theoretical analysis. First, musical composition in the Baroque period when Bach lived followed a

rigid set of rules dictating theory and structure. This allows our composers to make basic

assumptions about musical structure and format.

For example, in the Baroque period,

composers would have used only some of the many chords and harmonic patterns used today;

therefore, making basic assumptions, we allow our composers to use chords only from a

predefined set of six chords.

Under normal circumstances, six chord qualities would be

insufficient for representing the harmonic progressions of a piece, but the majority of chords in

these chorales can be categorized as one of those six types. One of the structural qualities of

chorales is that most tend to be short, often between eight and sixteen measures. This allows for

ease in the assembly of songs. Most importantly for the critics, a large corpus of these works

exists; each chorale in this body fits the format of chorales that the genetic algorithm attempts to

emulate. We obtained our corpus of chorales from www.jsbchorales.net and used 287 of the 371

available chorales for analysis.

The critics in this case study depended heavily upon statistics from the corpus, and none relied

solely on theoretical qualities. The functions responsible for composing, however, frequently

made use of both statistical data and theoretical “rules.”

For example, some of the chord-

sequencing functions used by the harmony composition functions make use of statistics from

histograms to determine the weights for random generators and chord changes.

The structural and stylistic similarity between songs in the corpus allows a standard interface

between critics and composers to be established. This interface, the Composition object,

essentially codifies the standard format of a Bach chorale. We made general assumptions about

the structure of chorales and applied them to the Composition object; the object can serve as

either an interface or as a template for composers to use.


Bach chorales are almost always composed as short songs for four voices: soprano, alto, tenor,

and bass. In addition, the combination of these voices often is organized into a sequence of even

chords connected with passing tones or simple runs. To codify this, we designed the

Composition object to accommodate not only the four individual voices but also a list of chords

that is used for analytical purposes only. The chord sequence is a list of Chord objects. These

objects are designed to describe the theoretical nature of chords.

Each Chord has three

attributes: a key, a quality (major, minor, seventh, minor seventh, diminished, suspended 4), and

an inversion. The quality of each chord defines a set of intervals that make up a given chord, so

a Chord object does not specify a certain set of notes; instead, a function is used to arbitrarily

translate chords from the list into notes for each of the four independent voices.
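The Chord description above can be sketched as a small record type. The project itself was written in Haskell; this Python version is only an illustration. The interval tables are standard music theory, but reading "seventh" as a dominant seventh, encoding the key as a pitch class from 0 to 11, and the method name are assumptions.

```python
from dataclasses import dataclass

# Semitone offsets from the root for the six qualities named in the text.
INTERVALS = {
    "major": (0, 4, 7),
    "minor": (0, 3, 7),
    "seventh": (0, 4, 7, 10),        # assumed to mean a dominant seventh
    "minor seventh": (0, 3, 7, 10),
    "diminished": (0, 3, 6),
    "suspended 4": (0, 5, 7),
}

@dataclass(frozen=True)
class Chord:
    key: int        # root pitch class, 0 = C ... 11 = B
    quality: str    # one of the INTERVALS keys
    inversion: int  # 0 = root position, 1 = first inversion, ...

    def pitch_classes(self):
        """Pitch classes of the chord, with the inversion's bass tone first."""
        tones = [(self.key + step) % 12 for step in INTERVALS[self.quality]]
        turn = self.inversion % len(tones)
        return tones[turn:] + tones[:turn]
```

A Chord therefore names a set of pitch classes rather than concrete notes; assigning octaves and distributing the tones among the four voices is left to a separate voicing function, as the text describes.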

D. Introduction to Haskore™16

Haskore is a programming environment designed for music composition, implemented in the

functional language Haskell™17. It provides simple access to basic music structures such as

notes, lists of notes, and chords. A note is defined by a pitch, duration, and tone attributes, while

lists and chords are ways of combining notes to be played sequentially and simultaneously,

respectively.

Haskell is useful for list processing and recursion, allowing music objects to be manipulated with

ease. Haskore also has the ability to convert its music types to and from MIDI files, a critical

functionality for our project. Conversion to MIDI files was convenient, as it allowed us to hear our

music as soon as it was created, and conversion from MIDI files to other music types was also

critical so that our program could learn “good” music by example.

These properties of Haskore and Haskell make this language and environment perfect for our

project. Instead of viewing the contents of the Bach chorales as a bunch of arbitrary notes, we

organized them into lists of voices and chords.

In this manner, careful selection of the

programming environment allowed us to invest more time in the fundamentals of the project

instead of tedious matters like conversion and interpretation.

II. Components of the Experiment

A. The Composition Object

In order to efficiently collect statistics from the corpus, we used a parser to translate the chorales

(in MIDI format) into Composition objects. However, since a Composition is only four lists of

notes and an un-played list of chords, simplification takes place in the translation. As far as our

case study is concerned, we are not considering dynamics, tempo, articulation, and other musical

expressions. Therefore, the Composition object need only be able to represent note values

and durations, and chord structure.

B. The Composers


In the everyday sense, a composer is a person who creates music. When applied to a computer,

however, the name takes on a new meaning. A composer, in computational terms, should be an

automated program that can create music by itself. For our purposes, we defined a composer as

a compilation of functions that can create an infinite string of values, which can each be evaluated

as musical compositions unique to that composer. Because they are assembled out of multiple

functions that are almost like fundamental building blocks, multiple composers can be created out

of the same few functions.

1. Composer Blocks

There are many ways to create a composer using a binary bit string. One such method involves

a decision tree in which each bit represents a certain tendency of the composer. For example, if

the first bit is a 1, the composer would prefer major chords to minor chords and vice versa if the

bit is a 0; or perhaps each bit could decide what genre of music the composer would compose

most often. If this were successfully implemented, a huge range of composers could potentially

be generated: everything from a classical composer who favors the key of A minor to a blues

composer who favors the key of G major. Unfortunately, undertaking a project this complicated

would require much more time than five weeks.

Constructing a group of composers who

compose in a single genre is already a difficult process. Instead, we decided to use a set of

music generating and modifying building blocks to define each composer, and for a single genre

only.

A composer building block is a function that controls various tendencies of a composer. Every

time a composer is asked to create a piece of music, it runs through each of these building block

functions in a specific order. For example, if we have a composer made of building blocks {a, b,

c}, the composer will first call upon building block ‘a.’ Building block ‘a’ will perform an action,

such as chord generation or melody creation. After block ‘a’ completes this task, it will call upon

block ‘b.’ Block ‘b’ will then perform whatever actions it was programmed to perform on the music

generated by block ‘a,’ and it will next call upon block ‘c.’ Since ‘c’ is the last building block in the

composer, after it has performed its actions, the music composition is complete and ready to be

played.

Changing the order of the building blocks within a composer can drastically modify its musical

style. If the composer mentioned above was rewritten as {a, c, b}, block ‘c’ would be modifying a

different piece of music than it previously did, since it sees the chords created by block ‘a’

unmodified by block ‘b.’ Consequently, block ‘b’ would also act differently after seeing music

modified by block ‘c’. Composer building blocks can be used more than once in each composer.

If we do not set a limit to the number of building blocks in each composer, it quickly becomes

clear that there is an infinite number of possibilities for combinations of building blocks, even if

only a few composer building blocks are defined.
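The chaining of building blocks, and the effect of reordering them, can be illustrated with a toy pipeline. The three blocks below are invented stand-ins, and a piece is reduced to a list of MIDI pitch numbers; none of this reflects the project's real blocks.

```python
from functools import reduce

def make_composer(blocks):
    """Chain building blocks so each one transforms the previous block's output."""
    def compose(seed_music):
        return reduce(lambda music, block: block(music), blocks, seed_music)
    return compose

# Toy stand-ins for real blocks; a "piece" here is just a list of MIDI pitches.
def block_a(music):            # generator: lay down a C major arpeggio
    return music + [60, 64, 67]

def block_b(music):            # modifier: transpose everything up a whole step
    return [pitch + 2 for pitch in music]

def block_c(music):            # modifier: append a fixed closing note (C5 = 72)
    return music + [72]
```

With these toys, the composer {a, b, c} yields [62, 66, 69, 72], while {a, c, b} yields [62, 66, 69, 74]: block c's closing note is transposed only when block b runs after it.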

Even though there are infinitely many composers who can theoretically generate an infinite

amount of music, the actual generation of the music is not the difficult part of automatic music


composition. The amount of music actually generated that could be considered pleasing to the

ear is infinitesimal. With a search space so large, it is virtually impossible to sift through the pool

of music and find a satisfactory composer; therefore, it is unrealistic to allow the composers to go

unrestricted.

The foundation for composer building blocks is the harmony generator. This block is composed

of two primary sections: the chord sequencer and the phrase assembler, each of which is

controlled by two bits in the genome. The chord sequencer receives a decimal number from 0 to

3 (00 to 11 in binary) and assembles a chosen number of quarter-note chords in one of four ways.

The sequencer randomly chooses chords, randomly chooses chords with weights from the

corpus, chooses chords based on common tones between chords, or uses a set of theoretically

determined weights to generate simple cadences linked by common-tone chord sequences. The

phrase assembler then uses the chosen method of chord production to assemble a set of

phrases within an eight-measure song. Depending on its two controlling bits, it can make an

eight-measure phrase, two four-measure phrases, four two-measure phrases, or a combination of

four- and two-measure phrases. This assembler always has a tendency to repeat phrases that

have the same progression, so that a listener might identify recurring themes in each chorale.

The end of each phrase has a very strong tendency to end in one of the following two-chord

cadences: Isus4 – I, V7 – I, or V – I.

2. Composer Chromosome

All of the composing blocks can be combined in many ways to create an extremely large number

of composers. But to be able to distinguish one composer from the next and call upon it whenever

we want, we need a standard way to represent the unique combination of composer blocks that

define a certain composer. In our design, a composer is a 32-bit string of binary digits, a

Word32 data type. This string acts as a chromosome that dictates what composer blocks are

used to create the entire composer.

In order to represent the multiple composer blocks, the 32 bits are divided up into regions that

govern a certain quality of music to be created. We refer to each of these qualities as a trait, and

our composers are thus described in terms of harmony, phrasing, melody, and rhythm. In an

actual chromosome for any one of our composers, the first two bits represent the harmony

generation; the second two, the phrasing; the next eighteen bits, the melody; and the following six

bits, the rhythm. (There are still four bits that are unaccounted for. This set of four bits, which we

call the order tweaker, plays a crucial role in the originality of the composer and will be

explained later.) See Figure 2.


Figure 2: Genetic Structure of a Composer

The traits are divided further into genes that correspond to a certain composer block. Each trait

consists of multiple genes, so that multiple blocks can define one trait. The number of possible

binary combinations, however, needs to be greater than or equal to the total number of composer

blocks that can possibly be represented by that gene; thus, the number of bits per gene is directly

dependent upon the number of composer blocks available for that trait. For example, we created

seven composer blocks for melody, so we needed a melody gene that could code for any of

these functions. We thus used a 3-bit length gene, because three digits (each either a 1 or 0) can

create eight different combinations of 1’s and 0’s. A gene of 001 would correspond to a different

composer block than a gene of 010, etc. The leftover eighth combination, which we defined to be

000, is used to code for no function, allowing the composer to use fewer than the full number of

composer blocks.

The genes, themselves, are what facilitate the use of multiple composer blocks (or even multiples

of the same composer block) within the composer. We were limited, again, in the number of

composer blocks that could make up a composer because only so many genes would fit into

each trait, and each trait was in turn limited in bit length by the total 32-bit string length. After

much deliberation, the harmony had one 2-bit gene; the phrasing, one 2-bit gene; the melody, six

3-bit genes; and the rhythm, three 2-bit genes. (See Figure 3). This meant that one harmony

block, one phrasing block, six melody blocks, and three rhythm blocks could be used.

Figure 3: Genetic Structure of a Composer: Bitwise Breakdown
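
The bit layout described above can be illustrated with a short decoding routine. This is a minimal Python sketch, not the project's own code: the names ComposerGenes and decode_composer are hypothetical, and it assumes that the "first" bits of the chromosome are the most significant bits of the 32-bit word.

```python
from typing import List, NamedTuple


class ComposerGenes(NamedTuple):
    harmony: int          # one 2-bit gene
    phrasing: int         # one 2-bit gene
    melody: List[int]     # six 3-bit genes; 0 codes for "no function"
    rhythm: List[int]     # three 2-bit genes
    order_tweaker: int    # final 4 bits


def decode_composer(chromosome: int) -> ComposerGenes:
    """Split a 32-bit composer chromosome into its genes.

    Assumption: bit order is most significant bit first.
    """
    if not 0 <= chromosome < 2 ** 32:
        raise ValueError("chromosome must fit in 32 bits")
    bits = format(chromosome, "032b")
    pos = 0

    def take(width: int) -> int:
        # Read the next `width` bits as an unsigned integer.
        nonlocal pos
        value = int(bits[pos:pos + width], 2)
        pos += width
        return value

    harmony = take(2)
    phrasing = take(2)
    melody = [take(3) for _ in range(6)]
    rhythm = [take(2) for _ in range(3)]
    order_tweaker = take(4)
    return ComposerGenes(harmony, phrasing, melody, rhythm, order_tweaker)
```

The widths add up to 2 + 2 + 18 + 6 + 4 = 32 bits, which matches the trait sizes given in the text.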

For the actual creation of music, the harmony and phrasing genes were purposefully positioned at

the beginning of the chromosome because together they generate the basis of our music; the rest

of the blocks cannot function without them. Beyond that, the melody and rhythm blocks can be

applied to basic music in any order. The design of the chromosome, though, does not allow the

melody genes and rhythm genes to intermix; therefore, we integrate the order that the composer

blocks are applied into the chromosome itself. This is where the last four bits, collectively called

the order tweaker, come into play. The order tweaker has sixteen possible binary combinations,

each of which is arbitrarily assigned to some pattern of rhythm and melody composers that are

applied but do not disturb the actual order of the genes. (See Figure 4). Finally, a translating

function, knowing all of this, is able to read a single composer’s chromosome and call the correct

composer blocks in the intended order.
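
One way to picture the order tweaker is as an index into a table of interleaving patterns. The Python sketch below is an assumption-laden illustration: the actual sixteen patterns were assigned arbitrarily and are not listed here, so ORDER_TABLE is a hypothetical stand-in (the first sixteen interleavings in lexicographic order), and application_order is a hypothetical name for the translating step.

```python
from itertools import combinations

MELODY_SLOTS, RHYTHM_SLOTS = 6, 3


def build_order_table():
    """Hypothetical table: 16 of the 84 ways to interleave 6 melody and 3 rhythm slots."""
    total = MELODY_SLOTS + RHYTHM_SLOTS
    table = []
    for rhythm_positions in combinations(range(total), RHYTHM_SLOTS):
        table.append("".join("R" if i in rhythm_positions else "M"
                             for i in range(total)))
        if len(table) == 16:
            break
    return table


ORDER_TABLE = build_order_table()


def application_order(melody_genes, rhythm_genes, order_tweaker):
    """Return (trait, gene) pairs in the order the blocks would be called.

    Genes keep their relative order within each trait; only the
    interleaving of melody and rhythm changes with the order tweaker.
    """
    pattern = ORDER_TABLE[order_tweaker]
    melody, rhythm = iter(melody_genes), iter(rhythm_genes)
    calls = []
    for slot in pattern:
        if slot == "M":
            gene = next(melody)
            if gene != 0:          # 000 codes for "no function"
                calls.append(("melody", gene))
        else:
            calls.append(("rhythm", next(rhythm)))
    return calls
```

The point of the design is that the genes themselves never move within the chromosome; only the order in which the translating function reads them changes.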

Figure 4: The Order Tweaker

C. The Critics

The composers now have a way to create music and alter it as they see fit. They even have a

way to change themselves, but how will they know when to do this? Genetic algorithms provide a

framework for alteration by using evaluations of each composer to create odds of reproduction in

a mock process of natural selection. By listening to each composer, a rating could be assigned to

each piece for our evaluation, but unfortunately, it would take days for a person to do this. Even

for a group of people this process would take too long.

One solution to this problem is to just pass the job onto the computer. The computer should be

able to rate the composers in a reasonable amount of time; however, it does have one major

disadvantage when compared to humans. The computer has no idea what a good song is.

Somehow the computer must be given a way to like one composer over another. Designing an

efficient, accurate method of evaluation is key to creating a useful genetic algorithm.

1. Competing Critics

In order to ensure that the composer functions evolve in a way that improves the sound of their

music, it is necessary to base their evolution on evaluations of their songs. The evaluation

process is crucial to the genetic algorithm’s ability to converge on a group of “good” composers.

The simplest way to do this is to have a function that evaluates the music on several attributes,

picking out what it finds to be desirable characteristics and rewarding the composer for their

inclusion.

There are, however, problems with using only one critic. Just as what makes a song good is

somewhat unclear, so is what makes a desirable characteristic. If a critic were just given a set of

criteria, it would eventually converge on what it thought was the best composer, but humans

would not necessarily agree with it. Since no one is interested in what a machine thinks is a good

song, it is important to find some way to make sure the criticism would match that of people.

An alternative approach to a single critic would be to have several of them. These, at first, would

be just as random. Each one would have its own concept of what a good piece is. It is likely that

with the right types of criteria, a critic could be made that agrees with humans. Trying to find a

critic best fitting the tastes of humans is like finding a good composer: genetic algorithms can be

used to solve the problem.

Assuming a genetic algorithm can be made to do this, the method should give the desired results.

However, genetic algorithms would require judging the critics themselves. Continually creating

set after set of critics to evaluate other sets of critics would still be random in the end unless at

some point the critics were judged against something that was known to result in good music.

This is where the critics turn to the corpus. It is already assumed that this corpus consists of

good music. If the critics were used to evaluate the music of the corpus, we could find how

accurately they assessed the songs. It is hoped that these critics like the music and give it a high

score, but the term “high score” is arbitrary unless examined in relative terms. Comparing the

scores of good music in the corpus to the “worse” music already being made by the current

composers should give a basis for the evolution of the critics such that, as a whole, they redefine

their criteria to a higher standard after each set of generations. Since this method allows the

computer, by itself, to continually improve its ability to rate music, it made the best choice for

creating critics in this experiment.

2. Critic Blocks

The critic is composed of a series of blocks, each looking for a specific feature in the composition

object that is desired. The blocks of the critic are like the organs of a human; each one has its own

function, perhaps an action to govern, and all are essential to the survival or success of the unit.

The features specifically examined by the blocks in this study include similarities between Bach’s

chord structure and melodic patterns, common Bach rhythms, and phrase structure found in most

of his chorales.

The first critic block created detects the frequency of all the intervals between notes through our

entire corpus. To gather data, two consecutive notes are stored, their numeric values are found,

and the second value is subtracted from the first. The interval between the notes is stored as a

value in a histogram. Using the methods explained in parts four and five of section C of this

paper, the histograms from our “good music” and our “bad music” are compared and the critics

give the composer a rating for its music.
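
The interval-counting step can be sketched in a few lines of Python. This is an illustrative sketch only: it assumes each voice is available as a list of numeric pitch values (for example MIDI note numbers), and interval_histogram is a hypothetical name.

```python
from collections import Counter


def interval_histogram(pitches):
    """Count the intervals between consecutive notes of one voice.

    Following the description in the text, the second value is
    subtracted from the first, so a rising step gives a negative interval.
    """
    histogram = Counter()
    for first, second in zip(pitches, pitches[1:]):
        histogram[first - second] += 1
    return histogram
```

For example, the pitch list [60, 62, 64, 62, 62] yields two intervals of -2, one of 2, and one of 0.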

Next we construct histograms from data on the chord progressions. We can simply convert the

note of a chord to a numeric value as we did with the pitches above. As for the chord type

transition, we use a base eight counting system that would relate each of the types (Major, Minor,

Suspended, Seventh, Minor Seventh, Diminished, None, or Unknown) to the next and assign a

number starting with “1 -> Major to Major, 2 -> Major to Minor, …” all the way up to “…63 ->

Unknown to None, 64 -> Unknown to Unknown.” Combining the data of the pitch change with the

chord type change accurately gives us a kind of chord progression that can be used as a

category in a histogram. Again the critic constructs a histogram based off the corpus to compare

to the music produced by the composers. This critic block is a better test than the other one

above because it tests not for one pitch but a number of pitches. This allows us to establish more

of a structure among all the voices rather than in just a single voice.
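
The base-eight numbering of chord type transitions can be written out explicitly. The Python sketch below is a hedged illustration: transition_code and progression_histogram are hypothetical names, chords are assumed to be (root pitch class, chord type) pairs, and pairing the root change (taken modulo 12) with the transition code is one possible way of combining the two pieces of data, not necessarily the one used in the project.

```python
from collections import Counter

CHORD_TYPES = ["Major", "Minor", "Suspended", "Seventh",
               "Minor Seventh", "Diminished", "None", "Unknown"]


def transition_code(from_type, to_type):
    """Number the 64 chord type transitions from 1 to 64, base-eight style."""
    return 8 * CHORD_TYPES.index(from_type) + CHORD_TYPES.index(to_type) + 1


def progression_histogram(chords):
    """Histogram of (root change, transition code) over consecutive chords.

    Assumption: roots are pitch classes 0..11 and the change is taken mod 12.
    """
    histogram = Counter()
    for (root1, type1), (root2, type2) in zip(chords, chords[1:]):
        category = ((root2 - root1) % 12, transition_code(type1, type2))
        histogram[category] += 1
    return histogram
```

With this numbering, Major to Major is 1, Major to Minor is 2, Unknown to None is 63, and Unknown to Unknown is 64, as in the text.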

With the two above methods, blocks were additionally formed with variations on their basic

concept. These variations included only seeking every other chord, seeing as they are the vital

chords that define the piece, and making comparisons among them. Another variation was to

seek similarities and differences between the first and last chords in a given piece and how they

compared to the composition objects.

Rhythms are a key component of music so it is only proper that there be a critic function

established to evaluate them. Our critic function takes two measure phrases, breaks them into

four half note sections and evaluates each down to a sixteenth note resolution. Comparisons are

then made between the structure of the first and third section and then between the second and

fourth sections. These, like the rest, are loaded into histograms and compared by the critics to

give a rating.

Phrasing was one of the most vital aspects for which to create a critic block. The phrasing critic

block is responsible for chopping the music into sections accurately and comparing their

similarities and differences to the data gathered from the corpus. If the separation of sections is

not properly executed, an inaccurate rating will be returned and the critic function will break down.

The phrase critic operated similarly to the critic above with regard to rating.

3. Feature Extraction

The purpose of a critic is to analyze the music mathematically in different aspects such as pitch,

chord progressions, rhythms, and phrasing. There are many ways to go about this, but we chose

to train the critics based on the corpus of Bach chorales and then rate our generated music. The

first step in this process is feature extraction.

Whether making calculations on a spreadsheet, drawing a graphic, or producing a stream of

music, computers truly recognize only one thing: numbers. Because of that, we need to establish

a means for the computer to take streams of music in the form of MIDI files and convert them into

five strings, one for each of the four voices, soprano through bass, and a fifth string for the chord

progressions. The strings are then analyzed to extract the desired features. For our study, we

extracted features from three aspects and another feature through human analysis of the music.

First, we analyzed chord progression in our corpus or collection of “good music.” The analysis of

these chords is broken down mainly into two aspects: a pitch and a chord type. The pitch can be

one of any of the 12 pitches in the standard chromatic scale. Basic arithmetic is performed to get

the difference in pitches. The chord type can be one of any chosen from a list of eight that we

defined as follows: Major, Minor, Suspended, Seventh, Minor Seventh, Diminished, None, or

Unknown. Because a transition can be made from any given chord type to another, we assign

numeric values to all possible transitions using a base 8 method. The data collected is converted

into histograms and compared to our composer’s works.

Next, a method is provided to detect common pitch variance in our corpus. This is solved with the

same method as with pitch above, but applied to each of the four voices as opposed to the chord

list. By doing this and finding the frequency of given intervals, we can program the composers to

rule out intervals Bach never used and select his common intervals at a much higher frequency.

Likewise, the critic blocks can measure how closely the composition objects approach Bach’s

pieces by comparing the frequencies of particular intervals.

Detecting rhythms, although thought to be elementary because it is solely based on numbers, is

really quite difficult because there are so many different combinations of note durations equal to a

measure. Even if one were to assume the shortest valued note to be an eighth note, there is still

a vast number of combinations of notes per measure, ranging from a single whole note to eight

eighth notes and everything in between. While the data structure for rhythms is easy to work with,

the combinations are endless.

Phrasing for music is a vital aspect that gives a feeling of progression through the composition

and eventually the feeling of an ending. Phrases are the hardest aspect of music to code for

because of their arbitrary nature. A piece, for example, may follow a simple a-b-a structure where

the beginning and ending are virtually the same, barring a pickup note and ending fermata, or

there might be an a-b-c structure where there is no direct similarity among phrases but there is an

overall idea to the piece. Bach wrote using two and four measure phrases, all of which had

distinct structure that could be easily extracted given the proper algorithms and features for which

to search.

4. Evaluation by Means of Histograms

In order to use a genetic algorithm, it has already been established that an evaluation method is

necessary. The way this evaluation is carried out is very important; otherwise the computer will

think the wrong composer is optimal. One key thing to keep in mind is that every block must be

evaluated in such a manner that no one block is naturally disproportionate in weighting compared

to the others (instead, the weighting should be taken care of by the 32-bit critic).

One way to make sure that each function starts on the same scale is to have it evaluate through

the same process, in this case, by means of histograms. Each function looks for certain patterns

in any assigned piece. The critic will listen to a song by a composer counting the occurrences of

each event it wants to find. When it has finished, it holds a total count for

each event.

This data is raw, though, and in its current state, of little value. One problem is that the more

notes a song has, the more likely it is to create a desired pattern. The critic would favor long

pieces full of 16th and 32nd notes. To take away this undeserved advantage, each total is taken in

terms of a ratio to the possible opportunities for this pattern to appear in the piece; that is, each

total is divided by the total number of notes in the composition.

The other major problem with this method is that not every pattern is a good one and even if it is,

it will probably not be wanted for each note of the piece. Once again, the corpus is useful.

Instead of just saying that a pattern is bad or good, the function can now decide how far off the

piece is from the desired amount. Using a histogram generated from the corpus, the desired

amount is simply the ratio of the occurrences of an event in all the pieces of the corpus to all the

times the event could have been presented. The variance between these two is a relative scoring

base that creates a notion of what a piece should really be like.
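
The normalization described above amounts to dividing raw counts by the number of opportunities. The following Python sketch shows the idea under stated assumptions: normalize and corpus_expected are hypothetical names, and the number of opportunities for each piece (for instance its note count) is assumed to be supplied by the caller.

```python
from collections import Counter


def normalize(counts, opportunities):
    """Turn raw event counts into ratios of the possible opportunities."""
    if opportunities <= 0:
        return {}
    return {event: n / opportunities for event, n in counts.items()}


def corpus_expected(piece_counts, piece_opportunities):
    """Expected ratios: all occurrences in the corpus over all opportunities."""
    total = Counter()
    for counts in piece_counts:
        total.update(counts)   # Counter.update adds counts together
    return normalize(total, sum(piece_opportunities))
```

Because both the observed piece and the corpus are expressed as ratios, a long piece full of short notes gains no advantage over a short one.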

5. Variance Through Chi-Square

Unfortunately, there is no exact way to determine variance between two histograms. There are

several methods for estimating such a value, but each has its weakness. One of the most

common methods for accomplishing this is the chi-square function:

score = \sum \frac{(obs - exp)^2}{exp}

where obs equals the observed values and exp the expected values.

In statistics, a chi-square test can, among other things, be used to determine whether a sample of

data fits a predetermined pattern, so one can see its potential applicability for comparing a

histogram of characteristics from the corpus of “good” music to a histogram of computer

generated music. Unfortunately, the variance in data collected from the computer-generated

music will not be well-represented by the chi-square function.

There was concern over the

consequences of having a very low expected value or, even worse, an expected value of 0. With

very low expected values, chi-square tests can become chaotic, and when there are expected

values of 0, chi-square tests are impossible to perform. Also, the chi-square distribution would

penalize the composition more if the observed values were much higher than the expected values

than if the expected values were higher than the observed values.
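
Both concerns can be seen in a direct implementation of the standard chi-square sum. This is a small Python sketch for illustration; chi_square is a hypothetical name and histograms are assumed to be dictionaries mapping events to normalized frequencies.

```python
def chi_square(observed, expected):
    """Standard chi-square sum over all events in either histogram."""
    score = 0.0
    for event in set(observed) | set(expected):
        obs = observed.get(event, 0.0)
        exp = expected.get(event, 0.0)
        if exp == 0:
            # The classic formula divides by exp, so it is undefined here.
            raise ZeroDivisionError(f"expected value is 0 for {event!r}")
        score += (obs - exp) ** 2 / exp
    return score
```

With an observed value of 0.4 against an expected 0.1 the score is about 0.9, while the mirror case (observed 0.1, expected 0.4) scores only about 0.225, even though the absolute difference is the same.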

The first proposed solution was a logarithmic factor that attempted to rectify this situation by

weighting high and low expected values differently to counterbalance the naturally inaccurate

weighting distribution of the chi-square function; however, when applied, the function created a

loophole for the genetic algorithm to exploit. Whenever an observed value was generated where

the expected value was equal to 0, as a consequence of the logarithmic factor, the score would

be set to 0. Since scores closer to 0 are better, the genetic algorithm would consistently generate

composers that would create compositions full of unexpected events.

Other ideas were considered, such as evaluating the integrals of power regressions of the

expected and observed histograms. This can be very deceptive. Just because one graph's

integral has the same numerical value as another's does not by any reasoning mean that the two

graphs are similar in shape.

There was another tempting concept considered where the computer would only consider certain

values of the histograms such as the most frequent or least frequent values. Better scores are

given when observed and expected histograms agree more in their lowest and highest values.

Although many of the functions would create useful distributions of values between the highest

and the lowest, it is possible that the genetic algorithm could exploit these ignored areas, creating

chaotic amounts within the needed range.

The final decision returned to the use of the chi-square function. The composition's score would

be calculated by:

score = \sum \frac{2(obs - exp)^2}{obs + exp}

where obs is the number of times a pattern was observed in the piece being evaluated and exp

was the number of times it was found in the corpus, i.e. the expected values. These values were

normalized as ratios in terms of how many possible occurrences of these events were in the

pieces that were being analyzed. This is done to ensure that no piece receives a better score just

because it is longer or because it uses shorter notes to fit more in.

In our variation of chi-square, the squared difference between observed and expected is divided

by the average of observed and expected rather than just the expected value. This makes the

score dependent on both the observed and expected values equally. If both the values are close

the function will be dividing by something similar to what was expected as their average will be

close to the expected, but when there is an extreme difference normally causing chaotic answers

in the chi-square function, the additional observed in the denominator brings the score to a more

reasonable representation of variance. This function is still unusable when obs and exp are both

zero, but since this is an optimal situation anyway, a perfect score of zero can be assigned. With

this scoring system in place, it can be applied to each individual critic block to come up with an

overall evaluation for the piece.
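
The modified scoring function, including the special case where both values are zero, can be sketched as follows. This is an illustrative Python version, not the project's code; symmetric_chi_square is a hypothetical name and histograms are assumed to be dictionaries of normalized frequencies.

```python
def symmetric_chi_square(observed, expected):
    """Chi-square variant that divides by the average of obs and exp.

    2 * (obs - exp)**2 / (obs + exp) equals the squared difference
    divided by the mean of the two values. Lower scores are better.
    """
    score = 0.0
    for event in set(observed) | set(expected):
        obs = observed.get(event, 0.0)
        exp = expected.get(event, 0.0)
        if obs + exp == 0:
            continue   # both zero: a perfect match, contributes 0
        score += 2 * (obs - exp) ** 2 / (obs + exp)
    return score
```

Unlike the standard form, this version gives the same score when the observed and expected values are swapped, and it stays finite when an event was never seen in the corpus.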

6. Critic Identity

The critic blocks give the critic a way to look at the music, but if the critics use all of the same

critic blocks and use them all in the exact same way, then there will be no variety in the

population of critics we create.

Knowing that this is an essential component of the genetic

algorithm, some way of creating diversity must be determined.

One way to make the critics unique would be to have them all only use certain critic blocks, but

simply limiting their view can only hurt each individual critic. Instead, the way the critics use these

blocks is changed. What differs between the critics is the weight they give to each block. The

genetic algorithm should continuously be moving towards the combination of weights that creates

the greatest disparity between the good music and the bad music. This constantly raises the bar

for the music, forcing it to evolve into better music.

Figure 5: 32-bit Critic String Model

With this concept in mind critics can be created as 32-bit string chromosomes.

These bits

represent specific ways to affect the weighting of the critic blocks. They are grouped in terms of

genes which are bits that work together to define a certain factor. By manipulating these factors,

it should be possible to create critics who accurately rate music.

The first 28 of these chromosome bits are split into fourteen bit pairs (labeled c1, c2, c3… c14 in

figure 5), each responsible for exactly one critic block. They range from zero to three in binary

and when fed into our weighting function, are the only values which vary from critic block to critic

block.

The next three bits after the first fourteen pairs are dedicated to our “scaling factor” (labeled d in

figure 5). The value of these three bits ranges from zero to seven. They are index values to an

array containing possible scaling factors. The scaling factors were chosen arbitrarily under the

assumption that the genetic algorithm would evolve towards the most desirable constant.

Now that the scaling factor has been chosen, it is not immediately clear how, in conjunction with

our weighting factors from the first fourteen bit pairs, a formula should be made. There were two

similar formulas being discussed for use, but rather than leaving this decision up to human

intuition, the final bit was added to our 32-bit string (labeled e in figure 5). This bit switches

between the two functions based on whether its value is a zero or a one. The formula for each

block’s overall weighting factor is then

w_i = a_d^{c_i} for e=0 and

w_i = c_i^{a_d} for e=1

where w is the overall weighting factor, i is the number of the block currently being evaluated, and

a is the array of scaling factors.
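
The critic chromosome can be decoded in the same way as the composer chromosome. The Python sketch below rests on several assumptions: bits are read most significant first; the two weighting formulas are read as a_d raised to the power c_i (for e = 0) and c_i raised to the power a_d (for e = 1); and SCALING_FACTORS holds placeholder values, since the actual array of scaling factors is not listed. The function names are hypothetical.

```python
# Hypothetical scaling factors; the real values were chosen arbitrarily
# by the authors and are not given in the text.
SCALING_FACTORS = [0.25, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 8.0]


def decode_critic(chromosome):
    """Split a 32-bit critic chromosome into (c, d, e).

    c: fourteen 2-bit values (0..3), one per critic block
    d: 3-bit index into the scaling factor array
    e: 1-bit switch between the two weighting formulas
    """
    if not 0 <= chromosome < 2 ** 32:
        raise ValueError("chromosome must fit in 32 bits")
    bits = format(chromosome, "032b")
    c = [int(bits[2 * i:2 * i + 2], 2) for i in range(14)]
    d = int(bits[28:31], 2)
    e = int(bits[31], 2)
    return c, d, e


def block_weights(chromosome):
    """Overall weighting factor w_i for each of the fourteen critic blocks."""
    c, d, e = decode_critic(chromosome)
    a = SCALING_FACTORS[d]
    if e == 0:
        return [a ** ci for ci in c]   # assumed reading: w_i = a_d ** c_i
    return [ci ** a for ci in c]       # assumed reading: w_i = c_i ** a_d
```

Under this reading, the switch bit decides whether the block's two-bit value acts as the exponent or as the base.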

The final score for each piece evaluated by the critic is then just the sum of the products of the

scores found by each critic block and the overall weighting factor for that block. The resulting

scores are only useful when used in a relative fashion, but this is all that will ever be necessary.

When being used to evaluate the corpus, it will be compared to the evaluation of the bad music,

and when used to evaluate the different composers it will be used to make relatively sized

portions of the roulette wheel. This effective scoring system is the key to using critics for evolving

our composer functions.
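
The weighted sum and the roulette wheel can be sketched together. This Python sketch is illustrative only: critic_score and roulette_pick are hypothetical names, and the conversion from a critic score (where lower is better) to a fitness value (where larger means a bigger slice of the wheel) is not specified in the text, so roulette_pick simply takes non-negative fitness values as given.

```python
import random


def critic_score(block_scores, weights):
    """Sum of each critic block's score times that block's weighting factor."""
    if len(block_scores) != len(weights):
        raise ValueError("need one weight per critic block")
    return sum(s * w for s, w in zip(block_scores, weights))


def roulette_pick(fitnesses, rng):
    """Pick an index with probability proportional to its fitness."""
    total = sum(fitnesses)
    spin = rng.uniform(0, total)
    running = 0.0
    for index, fitness in enumerate(fitnesses):
        running += fitness
        if spin <= running:
            return index
    return len(fitnesses) - 1   # guard against floating-point round-off


# Usage: an individual with three times the fitness is picked about
# three times as often.
rng = random.Random(0)
picks = [roulette_pick([1.0, 3.0], rng) for _ in range(1000)]
```

Only the relative sizes of the fitness values matter here, which is why scores that are meaningful only in comparison are sufficient.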

III. The Experiment

A. Experiment Setup

1. Experiment Elements

With designs for the composers and critics in place, the genetic algorithm could start to do its

work. The composers and critics could be represented adequately by bit strings of 32 digits,

making them ideal for manipulation by such an algorithm. In the case of our application, the set

of composer bit strings is the population we evolved, using the critic evaluations as the fitness

function. This way, better critic evaluations led composers to be deemed more fit, allowing them

to replicate and exchange genes with other successful composers. However, with only a single

set of critics, it is possible that the composers would find a loophole. For example, if the original

random critics really enjoyed compositions consisting of all the same note, we would get some

pretty boring results; if they liked compositions involving strange chords and intervals, the music

would sound very dissonant to us.

Therefore, both the composers and the critics must be dynamic. By evolving the critics as

well, we had a fitness function that changed continually so that the composers always had a

challenge to meet. This way the critics would refine their tastes to like the Bach chorales and

music with the same features, which in turn triggers the composers to evolve in a direction toward

the same. The critics already had a bit string chromosome to represent them, and their fitness

function was the relative difference between how they rate the songs in our “good” category – our

corpus – and the music in our “bad” category, which will be explained shortly. In this manner, the

critics were also run through the genetic algorithm to find high-quality evaluators.
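
A critic's fitness can be pictured as how well it separates the two categories. The exact formula for the "relative difference" is not given, so the Python sketch below uses one plausible choice as an assumption: the gap between the mean rating of the bad music and the mean rating of the corpus, divided by their sum. It also assumes that ratings are non-negative and that lower ratings are better. The name critic_fitness and the rate callback are hypothetical.

```python
from statistics import mean


def critic_fitness(rate, good_pieces, bad_pieces):
    """Relative gap between a critic's ratings of bad and good music.

    `rate` is a function mapping a piece to a non-negative score,
    where lower means closer to the corpus.
    """
    good = mean(rate(piece) for piece in good_pieces)
    bad = mean(rate(piece) for piece in bad_pieces)
    if good + bad == 0:
        return 0.0   # the critic cannot tell the two sets apart
    return (bad - good) / (bad + good)
```

A critic that rates both sets identically gets a fitness of 0, and the value approaches 1 as the critic rates the corpus far better than the current composers' output.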

In order to evolve the critics, we needed to know what “bad” music was. We defined bad music to

be the music produced by current composers. Since the critic blocks are designed to favor

resemblance to good music and punish features in common with bad music, this would

encourage composers to evolve their genes away from the current generation and toward the

ideal goal of a Bach composition. In other words, we hoped to move closer to the goal of

composing good music each time we redefined the set of bad music. Composers in the next

generation would rank highly if their music was closer to our corpus than their peers’, as desired.

These three elements constituted the basic components for co-evolution of critics and

composers, the foundation of the project. But it could only be a success if they were put together

in the correct way.

Once we had the initial randomly-generated critics and composers, we

followed a regular cycle of steps, collectively called an epoch. Each epoch had three steps:

evolve the composers for many generations, redefine the bad corpus, and evolve the critics for

many generations. Within the generations of evolution for both the composers and critics is

contained the core of the genetic algorithm: evaluation, replication, cross-over, and mutation.

This way, the composers and critics evolved for several generations in an alternating pattern,

improving gradually as the definitions of fitness slowly raised the standards of excellence.
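
The structure of one epoch can be sketched as follows. This is a minimal Python sketch under stated assumptions, not the project's implementation: chromosomes are plain 32-bit integers, crossover is single-point, the mutation rate is a placeholder, and composer_fitness, critic_fitness, and compose are hypothetical callbacks that must return non-negative fitness values and a piece of music, respectively.

```python
import random


def crossover(a, b, rng):
    """Single-point crossover of two 32-bit chromosomes."""
    point = rng.randint(1, 31)
    low = (1 << point) - 1
    high = 0xFFFFFFFF ^ low
    return (a & high) | (b & low), (b & high) | (a & low)


def mutate(chromosome, rate, rng):
    """Flip each bit independently with probability `rate`."""
    for bit in range(32):
        if rng.random() < rate:
            chromosome ^= 1 << bit
    return chromosome


def next_generation(population, fitness, rng, mutation_rate=0.01):
    """Evaluation, replication (roulette wheel), cross-over, and mutation."""
    weights = [fitness(individual) for individual in population]
    if sum(weights) <= 0:
        weights = [1.0] * len(population)
    parents = rng.choices(population, weights=weights, k=len(population))
    children = []
    for i in range(0, len(parents) - 1, 2):
        children.extend(crossover(parents[i], parents[i + 1], rng))
    if len(parents) % 2:
        children.append(parents[-1])
    return [mutate(child, mutation_rate, rng) for child in children]


def run_epoch(composers, critics, composer_fitness, critic_fitness,
              compose, generations, rng):
    """One epoch: evolve composers, redefine bad music, evolve critics."""
    for _ in range(generations):
        composers = next_generation(
            composers, lambda c: composer_fitness(c, critics), rng)
    bad_corpus = [compose(c) for c in composers]
    for _ in range(generations):
        critics = next_generation(
            critics, lambda k: critic_fitness(k, bad_corpus), rng)
    return composers, critics, bad_corpus
```

Running several epochs is then a matter of feeding the returned composers and critics back into run_epoch.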

2. Why It Works

Redefining the bad corpus every generation of composers would have been a bad idea, since

although populations of genetic algorithms tend to increase in fitness over long periods of time,

there is no guarantee that a particular generation is more fit than the preceding. The exact value

for the number of generations in each epoch, along with other values such as population size, is

somewhat guesswork, in an attempt to find a balance between computational time and

thoroughness.

By using this definition of an epoch, and running many of these epochs end on end, we hoped the

algorithm would converge on many of the highest-possible ranked composers and critics allowed

by our definitions. After a great number of epochs had passed, the fittest composer would be

extracted, and the infinite compositions generated by it would be considered the ultimate product

of our algorithm.

Before running our primary experiment, we set up two smaller experiments to test the

effectiveness of our genetic algorithm. First, we created an environment where the critics favored

composers who started compositions with a particular chord. There is no gene in the composer

that specifies initial chords, so as expected it was unable to consistently converge on the same

composer. Next, we created another environment where the critics favored composers that used

no sixteenth notes. Since there are specific composer blocks that insert sixteenth notes, we

expected these would be selected against, leaving a population that did not use them in

composition after some time. Once these exercises gave us the outputs we expected, we were

able to move on to the main experiment.

3. Expectations

There were essentially three possible results of our proposed experiment. If the compositions

bore no resemblance to the Bach chorales, we would have to examine our approach for possible

errors in conception, or loopholes in the algorithm, particularly the fitness functions of the critics

and corpus evaluations. This is often the major failing of a genetic algorithm: the inability to

define a precise fitness function. Although we knew we wanted to end up hearing Bach-like

strings of music, it was very difficult to explain this to the computer programmatically in such a

way that it could evaluate the results accordingly. A second possibility is that our compositions

could sound adequate, but far from perfect. In this case, the music would probably be interesting

or harmonious, but not both. There may have been faults in the way the composer chose to

phrase notes together, or selected sequences of chord progressions. This is approximately the

level of success we expected.

Ideally, and least likely, our compositions would be

indistinguishable from Bach’s true chorales. This is our target for the project. Unfortunately, we

have neither enough variety in the composer blocks nor enough time and computing power for

such a result to be probable. The historical fact that such a result has never been accomplished

before is also a major obstacle.

With our algorithm pieced together and our initial parameters ready, we let the system run

according to the rules laid out above for a long time, in order to evolve our composer hatchlings

into reputable musicians.

B. Experiment Results

1. Trial Runs

Before running the primary experiment, we ran a few tests to see if our setup would function as

expected. These tests did not involve co-evolution; rather, they used one critic and evolved

solely the composers. We ran these sub-experiments using 100 generations, population size 20,

and evaluating 2 songs per composer per generation.

Using a trivial critic that gave the same score to every composer, the algorithm sometimes

converged, but not on the same composer. Since there is no selection bias, one composer’s

genes aren’t favored over any other, so it is pure luck for one of them to end up dominating the

gene pool.

Another critic we created hated fast notes. It would give no points to any composer whose

composition contained even a single note faster than a quarter note. This actively selected

against some composer blocks, such as those that made runs consisting of eighth and sixteenth

notes. The algorithm converged to a composer which selected totally random chord progressions

organized into one phrase, with no rhythm or melody generation.

The third critic liked C Major chords, and would give points to any composition which contained

one of them, but none to compositions without any. There is no portion of the composer genome that

selects chord preference, so there was no gene to favor or discourage.

In this case, the

algorithm behaved similarly to the trivial critic, in that it did not consistently converge.

2. Primary Experiment Results

After running six epochs as defined above, we stopped the algorithm and extracted the results.

Each epoch converged upon a single composer or critic (depending upon which epoch was

being run), and after the algorithm had isolated an individual, the epoch was stopped before all

one hundred generations could be created. In the end, one composer survived. The results, as

we expected, were mixed. Although we were unable to develop composers that could generate

music indistinguishable from Bach’s, we converged on a composer each epoch, meaning that our

genetic algorithm had been successful.

C. Experiment Analysis

Because a composer was singled out from each epoch in which it was evolved, we were able to

analyze the music the fittest composer from each epoch produces.

The ratings of these

composers improved after each epoch (see figure 6).

Figure 6: Composer Fitness vs. Epoch

We also sampled the music generated by the composers that were eliminated after the first

epoch. Their music is most definitely less pleasing than the music produced by the surviving

composer.

Though the end composer created better music than the beginning composers, our final

results were not indistinguishable from Bach’s chorales. The music was mediocre, as

expected, sacrificing musical appeal for interesting characteristics. This is most likely because

the composer’s chromosome and building blocks as defined are incapable of producing a

composer that can mimic Bach with every composition. Similarly, the critics lack the ability to

distinguish Bach’s music from other pieces. The simplicity of our approach hurt its chance of

success; more elaborate preparation of the data objects would likely have produced better results.

Journal of the PGSS


IV. Conclusion

Our experiment had a mixed outcome overall. We learned a lot from observing the genetic

algorithm in action, and were fortunate enough to see it converge each time it ran. However, we

did not achieve the ultimate goal of creating a perfect music composer.

In the future, if we could run this experiment again, we would use more composer and critic

blocks, with larger and more detailed chromosomes.

The composers and critics would

communicate back and forth, and would make greater use of statistical data. Also, the population

size, epoch duration, and number of epochs would be increased, with hopes of enhancing the

diversity of the simulation.

V. Acknowledgements

We would like to thank Dr. Peter Lee and our TA, Joel Donovan, for putting much more of their

own time into this project than was healthy, and without whom we never would have been able to

finish—or start, for that matter.

1. John A. Maurer IV, “A Brief History of Algorithmic Composition,” http://ccrmawww.stanford.edu/~blackrse/algorithm.html (accessed 27 July 2004).

2. Bruce L. Jacob, “Algorithmic Composition as a Model of Creativity,” http://www.ee.umd.edu/~bljalgorithmic_composition/algorithimicmodel.html (accessed 27 July 2004).

3. Peter M. Todd and Gregory M. Werner, Musical Networks: Parallel Distributed Perception and Performance (MIT Press, Cambridge, MA, 1998), 3.

4. Todd and Werner, Musical Networks: Parallel Distributed Perception and Performance, 4.

5. “Neural Networks,” http://www.ramalila.net/Adventures/AI/neural_networks.html (accessed 27 July 2004).

6. David E. Goldberg, Genetic Algorithms in Search, Optimization, and Machine Learning (Addison-Wesley, 1989), 1.

7. Goldberg, Genetic Algorithms in Search, Optimization, and Machine Learning, 2.

8. Goldberg, Genetic Algorithms in Search, Optimization, and Machine Learning, 3-4.

9. Goldberg, Genetic Algorithms in Search, Optimization, and Machine Learning, 4.

10. Goldberg, Genetic Algorithms in Search, Optimization, and Machine Learning, 9.

11. Goldberg, Genetic Algorithms in Search, Optimization, and Machine Learning, 10.

12. Goldberg, Genetic Algorithms in Search, Optimization, and Machine Learning, 7-8.

13. Goldberg, Genetic Algorithms in Search, Optimization, and Machine Learning, 10-13.

14. Goldberg, Genetic Algorithms in Search, Optimization, and Machine Learning, 12-13.

15. Goldberg, Genetic Algorithms in Search, Optimization, and Machine Learning, 14.

16. Paul Hudak, “The Haskore Computer Music System,” http://www.haskell.org/haskore (accessed 30 July 2004).

17. John Peterson and Olaf Chitil, “Haskell: A Purely Functional Language,” http://www.haskell.org (accessed 30 July 2004).