Draft_ Ecological Benefit Quantification

advertisement

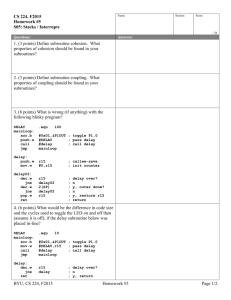

Draft Draft Draft Proposal for Data-Driven Hierarchical Decision Making I. Introduction The selection of alternative restoration plans and operational strategies for the highly complex system that is the Florida Everglades constitutes a multiobjective decision making problem of unprecedented dimension. From the perspective of the scientist, the system is characterized by uncertainty; of measurements, model representation and interconnectivity of the restoration objectives. For stakeholders, scientist or not, the evaluation of competing plans is impeded by myriad, often competing and likely overlapping criteria known as the Performance Measures (PMs). Decision making is further complicated by the lack of guidelines for weighting of the PMs, a subjective process subject to concerns regarding consistency, transparency and repeatability of results. Finally, the spatial extent of the system and spatial distribution of PMs, reliance on model results versus monitoring and uncertain sensitivity of ecological assets to perturbations of the PMs must be considering in restoration plan evaluations. This proposal will describe a two part process for evaluating restoration plan alternatives addressing the two major uncertainties that that impact selection. The first uncertainty relates to the information content of the PMs and data used to evaluate them. The second addresses the uncertainty associated with the weighting of the many PMs for use in scoring alternative plans. In accordance with this characterization of the decision problem, the proposal components are: Optimization of PM data information content in support of decision making. Methods to reduce uncertainty and characterize relationships, if they exist, between performance measures based on pattern recognition and noise reduction techniques, among others. Such techniques may be used to optimize the information content of the available data such that the subjective process of criteria weighting is based on true trade-offs. Analytical Hierarchical Process (AHP) incorporating the Delphi Method of decision making based on expert opinion elicitation. AHP systematizes the multi-objective decision making to enforce consistency while the Delphi Method uses an iterative process that engenders consensus and reduces uncertainty (spread) in judgments produced by diverse decision makers. The result is a multi-objective decision process specifically tailored for the complexities of the Florida Everglades restoration. -1- Draft Draft Draft II. Optimization of Information Content in Data Performance Measures and model output are used to evaluate the expected outcomes of different operational alternatives. Each has associated uncertainty and unrevealed connectivity that jeopardizes the meaningfulness of straightforward combinations and evaluations of resulting values. Furthermore, overlap and redundancy in measures, measures that are difficult to model, and obscuring of key causative processes due to a focus on endpoints may lead to decision making based on false tradeoffs and evaluation of inferior alternatives in a Pareto sense. For example, Performance Measures may appear nominatively independent as defined by stakeholders but in fact be highly correlated with PMs independently derived by separate stakeholders. Some PMs are distributed over space and time and it is not transparent that all locations or timeframes should be treated equally. Yet each PM is an indication of a particular impact that is important to at least one group of stakeholders and these evaluation points should not be discarded. For this reason a method is required that preserves the information content of each PM while accounting for these various sources of uncertainty. Tools are available that define, in a statistical sense, relationships between correlated and causally connected data, such as the PMs. One example of such a method is Principal Component Analysis (PCA). PCA is a mapping of a data set of n measures and m realizations from their original n x m dimensional space to a new n x m dimensional space that maximizes the modes of variability within the data set and is defined by orthogonal axes. Using PCA on the set of PMs, including spatially distributed PMs, will assign a weight to each PM objectively based on its contribution to each mode of variability. In addition, the use of PCA with a dataset with significant cross-correlations often yields the result that there are p modes that explain most of the variability of the measures and that p << n. This is a welcome result as it allows the subjective process of prioritizing PMs to be performed on a much smaller set of measures. The benefits of PCA as applied to the PMs extend beyond the reduction in dimension of performance measures. The nebulous interconnections between PMs defies identification of true tradeoffs. In that sense, it is not clear that any alternative is located on the Pareto frontier of non-inferior, feasible alternatives. At the Pareto frontier for a set of PMs, the PMs would be maximized to the extent that any further improvement in one via a decision variable necessitates a decrease in another. Identification of the Pareto frontier requires an orthogonal, or uncorrelated relationship between PMs. Figure 1 displays the hypothetical case of two orthogonal PMs and a hypothetical set of PM values for competing alternatives. In this case a lower value of the PM is better for each and thus the -2- Draft Draft Draft Pareto frontier is the subset of values that have no values located below and left of them. Realignment of the PMs along the orthogonal modes of maximum variability allows one to move from within the alternative space to the Pareto frontier, as depicted in the figure, although in actuality it is the axes that move. The PCA of PMs insures orthogonality of the resultant measures, which are linear combinations of the individual PM values. Any point along this frontier is optimal in the Pareto sense, although each point assigns a different priority or weight to each PM. Thus within this subset the optimal tradeoffs can be decided. The final benefit of the PCA of the PMs is the possible identification of key causal processes. Once the major modes of variability within the set of PMs are defined, one can analyze investigate these modes are linked to a key causal process, one that influences a large subset of PMs but may not have been identified as an important objective in and of itself. It is further possible that these key processes may be physically monitored and provide important feedback regarding the performance of selected alternatives and adaptively managed operation that would not be possible for a large set of spatially distributed PMs. A Bayesian network analysis will conducted to assess the causes of variability in the PM set and for identification of the key causal processes for each mode of variability. III. Criteria (Performance Measure) Weighting Once the available data has been processed to yield the transparent information content the second stage of the proposal can begin implemented. Here, again, there are two challenges: achieving consensus opinion for the ranking of the criteria, or PM, and quantification of that ranking. Returning the example of two orthogonal PM, one can envision this step as moving along the Pareto frontier to -3- Draft Draft Draft select the weightings, wi and wj that in turn reveal the optimal alternative. The Analytic Hierarchy Process will be used to quantify the subjective rankings. Within this process, the Delphi Method will be used for eliciting expert opinion. Each is described below. Analytic Hierarchy Process Analytic Hierarchy Process (AHP) is a quantitative technique of subjective judgments (Saaty, 1980; Saaty and Alexander, 1981). AHP has been successful in cases where multi-objectives (i.e., multiple criteria or multi-attribute decision making) have to be satisfied for decision making when there are several alternatives and a hierarchy of objectives does not exist. Let n again be the number of items (i.e., performance measures) being considered with the goal of providing and quantifying judgments on the relative weight, importance, and priority of each item with respect to the rest. In AHP, the problem is formulated in several layers, the first being the satisfaction of the objectives represents the top, followed by the criteria and finally the alternatives. The challenge that AHP solves is subjectively assigning ranks to rival criteria, i.e., the performance measures. While this subjective element can not be eliminated from multi-objective decision making, AHP systematizes the process to insure consistency and transparency in the rankings The weighting process is conducted as follows. Pair-wise comparisons are carried out by stakeholders between all measures with respect to their contribution toward the overarching objectives of the restoration. Returning to our previous example, stakeholders or decision makers need to decide which of the two PMs in figure 1 is more important. The preference strength is expressed on a ratio scale of 1-9 (Saaty, 1980). The resulting matrix of the pairwise comparison A R nn is necessarily reciprocal with aii 1 and aij 1 a ji . If judgments are completely consistent then by providing the first row of A one could deduce the rest of the elements due to the transitivity of the relative importance of the items. In practice, consistency is not assumed and the process of comparison for each column of the matrix is carried out. Suppose that at the end of the comparisons, we have filled the matrix A with the exact relative weights; if we multiply the matrix with the vector of preferences w ( w1 , w2 ,..., wn ) we obtain: (1). Aw nw or A nIw 0 To recover the overall score from the matrix of ratios the homogeneous linear equations from (1) must be solved. Since n is an eigenvalue of A thus we would have a nontrivial solution, unique to within a multiplicative constant, with all positive entries. Small perturbation in the coefficient implies perturbations in the resulting eigenvalues. From the nature of the matrix the maximum eigenvalue has to equal n and its corresponding eigenvector w gives a unique estimate of the underlying ratio scale between the elements of the problem. The consistency -4- Draft Draft Draft index could be easily measured to evaluate the degree of uniqueness in the solution and it measure the error due to inconsistency. The consistency ratio (CR) reflects the consistency of the pair-wise judgments and shows the degree to which various sets of importance relativities can be reconciled into a single set of weights a to verify the goodness of the judgments (Satty, 1981; Ahti and Raimo, 2001). In literature there are threshold CR’s that could be estimated and they provide guidelines for recommendations regarding the pair-wise comparison matrix. This process is described for only one level in the hierarchy. Hierarchical composition should be used to combine all the levels. The sum is taken over all weighted eigenvector entries corresponding to those in the lower level, and so on, resulting in a global priority vector for the lowest level of the hierarchy. Implementation of AHP is shown schematically in figure 2. Delphi Method Participatory decision-making is frequently advocated for resolving issues involving common pool resources that have been traditionally decided by governments or their agencies. Participatory approaches are still subject to social asymmetries in power and access and may fall prey to the usual inequities common to centralized approaches, where certain economic or social classes, ethnic groups or genders dominate. In addition, it is not clear that the results of participatory decision-making are better than the results that would be derived from a single informed decision maker. Results may, in fact, be worse. Still, involving stakeholders in the decision process for resources that affect their livelihoods is deemed beneficial it its own right. The decrease in the accuracy or quality of decision is less than the benefit gained by including stakeholders in the decision. We make the assumption that stakeholder decision-making is desirable and will be a factor in most future common pool resource decisions. The questions we would like to address are: what elements of group decisionmaking degrade the quality of the decision; and with these elements identified, can the process be designed to improve the quality of the decision. We are especially interested in decision-making under uncertainty regarding natural resources, and we examine the specific case of water resources. While the adage “two heads are better than one” is generally accepted as true and could be extended to the “n heads” case, the way in which the heads interact may detract from the benefit of the increased number of participants. The Delphi Method evolved from this observation. It was developed by the RAND Corporation as a method to maximize the benefit of opinions from groups of experts on complex and uncertain questions. The Delphi Method was designed to minimize detrimental elements of group decision-making, namely the biasing effects of dominant individuals, irrelevant communications (noise) and group pressure toward conformity. This was accomplished through soliciting opinions -5- Draft Draft Draft on questionnaires, providing selective feedback to the group based on the first round answers, then repeating the solicitation of opinions with a modified questionnaire. Their trials found that the resulting answers were often more accurate than those produced by face to face meetings of experts in controlled studies. Dinar et al. (2004) used this approach to estimate the probability of success for irrigation reforms in Pakistan. -6- Draft Draft Draft 2 1 3 Ecological benefit quantification Analyze rank reversal using ANOVA and F-test (Problem recognition) Perform pairwise comparison Run PCA for evaluation of the relationships between PMs and their importance Define the probability distribution and probability matrix Estimate the impact of variation Replicate the eigenvectors Select a set of PM for further analyses (Monte Carlo Simulation or Bayesian networks) Perform "what if" scenario using sensitivity charts Select a group of subject matter experts for preference assignments Yes Is the consistency index < threshold? No 4 Perform "confirmatory AHP Refine the preference assignment Use sensitivity analysis Define scope and boundaries of AHP Hierarchy decomposition and strategy analysis Benefits quantification and alternative selection Eliminate insensitive simulations Analysis of confidence intervals Figure 2: Integrated framework for ecological benefit quantification. -7- Draft Draft Draft References: Saaty, T.L.,1980. The Analytic Hierarchy Process. McGraw Hill, New York. Saaty, T.L., and J. M. Alexander, 1981. Thinking with Models. Pergamon Press. Banuelasy, R. and J. Antony, 2004. Modified analytic hierarchy process to incorporate uncertainty and managerial aspects. International Journal of Production Research, 42 (18), pp: 3851–3872. Ahti A. S. and P. H. Raimo, 2001. Preference Ratios in Multiattribute Evaluation (PRIME)—Elicitation and Decision Procedures Under Incomplete Information. IEEE Transactions on Systems, Man, and Cybernetics, 31 (6). Notes ulall: I have it as follows --> All PMs derive from water variables and are typically monotonic functions of them...hence we know that they will be correlated, so it makes sense to come up with a weighted measure that reduces them to an index. However, there may be a hierarchy of relations between them and some may be negatively correlated (e.g., flood reduction and ecological releases), so important to filter out how they are related as well and present it --- this corresponds to your data analysis step. Underlying is the thought that the importance variable or index needs to be connected back to simple observable and measurable hydrologic and ecologic state variables, not just model computed stuff. So, if we can compare model hydro variable (time series) with observed and characterize ulall: reliability/uncertainty in the primary variable, then we can propagate that into the PM and hence into the weighted PM based index, using Bayes nets, thus recognizing explicitly how we connected the many pieces. Even though they have 10 or so PMs now, these are defined at many spatial locations and over time, so aggregation into an index that can be logically disaggregated and maintaining the hierarchy of relations -- ie recovering where the index is coming from and how each measurable piece simultaneously impacts many PMs is important to reconstruct ulall: We can do the reduction via PCA on model time series of PMs. In this case we'll have +ve and -ve coeffs in each eigenvector and we focus on identifying simultaneous +ve and -ve impacts -- for obviously +ve and -vely correlated variables this makes sense, but the eigencoeffs can be + and - for even +vely correlated variables which would make subsequent interpretation difficult. ulall: So, at this stage we can use also Archetype Analysis which is like PCa except that it uses only +ve weights and then we can say that a particular PC is composed of x1, x2, xi % of each each of the PMs. Of course if we know that the PMs fall in 2 groups -- mutually +vely and mutually -vely correlated then we can just multiply one group by a -ve and then have archetype PCs that are meaningful. ulall: So, in the first stage, we use a dimension reduction step -- likely PCA or archetype but also explore BNs -8- Draft Draft Draft caseyobrown: got it so far. am thinking delphi method used to come to consensus for interpretation of pcs after our initial cut prior to AHP, make sure raters are using same info ulall: yes --!! however, we still need to deal with space and time to some extent -ulall: lets take time -- they will have one PM number from 1 run I think which will be the total time average for that PM over the model run for that spatial box or region caseyobrown: ok ulall: so, ideally if PM=PMbase or value for base conditions, it would be good to know the number of years in which PMalt >PMbase caseyobrown: ok ulall: so ideally I would want to compute PM as a time series for all alts including base and then use the diff from base to develop my aggregated PM score -- and then I also have its PDF instead of just mean value ulall: so the idea is that we could then convert the PC of the PMs (defined as this diff PM) into a pdf as well caseyobrown: ok i see this, but problem is pc < or> not clear which is better possibly ulall: Then we are still stuck with as many such pdfs for each PM as there are spatial locations caseyobrown: but can ignore this as detail for later ulall: well -- the simplest case is that the mean of the PC (of PM) time series is their equivalent score or index ulall: and we now have a pdf for that score -- I expect that the pdf is more for our benefit to design an internal algorithm than for overt discussion with stakeholders caseyobrown: ok, yep, remembering we're in time dimension only. ulall: yes space is messy ulall: so now space -- first thought is that one should average over space for each time step -- but how should such an average be computed caseyobrown: within single PM? ulall: 1) arith mean of the diff based PM at each location ? -- this gives me a time series for each PM averaged over space ulall: yes within single PM -- else hard to make any sense caseyobrown: PCA provides weight according to effect on variance-seems good ulall: however, we expect that there may be strong correlations across space within a PM -some areas are all +vely impacted while others are -vely impacted -9- Draft Draft Draft caseyobrown: and some pms are deadends, others key points that influence downstream ulall: yes u r right so 2) is to take PCA across space using time correlation for a given PM and that gives us our PM time series aggregated over space ulall: then we do pca across these spatial PCs for PMs -- fear is that if the leading PC or 2 does not pick up most of the variance we are starting to lose spatial detail and then pm detail ulall: but these are details to worry about when we work and not so much for proposal perhaps ulall: Now, if we can get stakeholders to see the degree of overlap between 2 alts in terms of leading pcpms, if one clearly dominates the other they may "vote" for that alt -- if it does not, then they will want to understand more, and perhaps revise even their weights on original PMs -where they can now be quickly shown how changing those weights impacts the PCPM structure. -- iek maintianing correspondence between aggregate and disaggregate representations while the hydro variable that is measured remains the same ulall: so this still sounds more like delphi than AHP in that we are trying to facilitate the process of their voting for alternatives rather than expressing prefs for PM weights or scores ulall: I think this is an important difference --since the AHp tryies to get weights for PMs which then are used to choose an optikmal solution and the stakeholder is asked to accept the blackbox caseyobrown: ok, i see. iterative with explanations of why choosing one over the other as effective of ranking pms caseyobrown: transparency and ability to iterate probably extremely important for acceptance ulall: whereas in Delphi with votes as to alternatives and discussion re intermediates and impacts of weights etc you proceed more directly towards participatory selection of the alternative rather than participatory slection of weights and then black box for solution caseyobrown: exactly ulall: so you agree -- good -- then we need to work this into the strategy in a coherent way ulall: and mention AHP and Delphi as possibly complementary but likely competing ways to address the problem -- complementary if weights pre-PCA are selected using AHP -- but not sure if that makes sense caseyobrown: maybe abed can answer that best. seems that still presents the problem of no consensus/negotiation on weighting if done prior to pca because meaning will not be clear -10-