Tutorial: Fundamentals of Remote Sensing Introduction What is

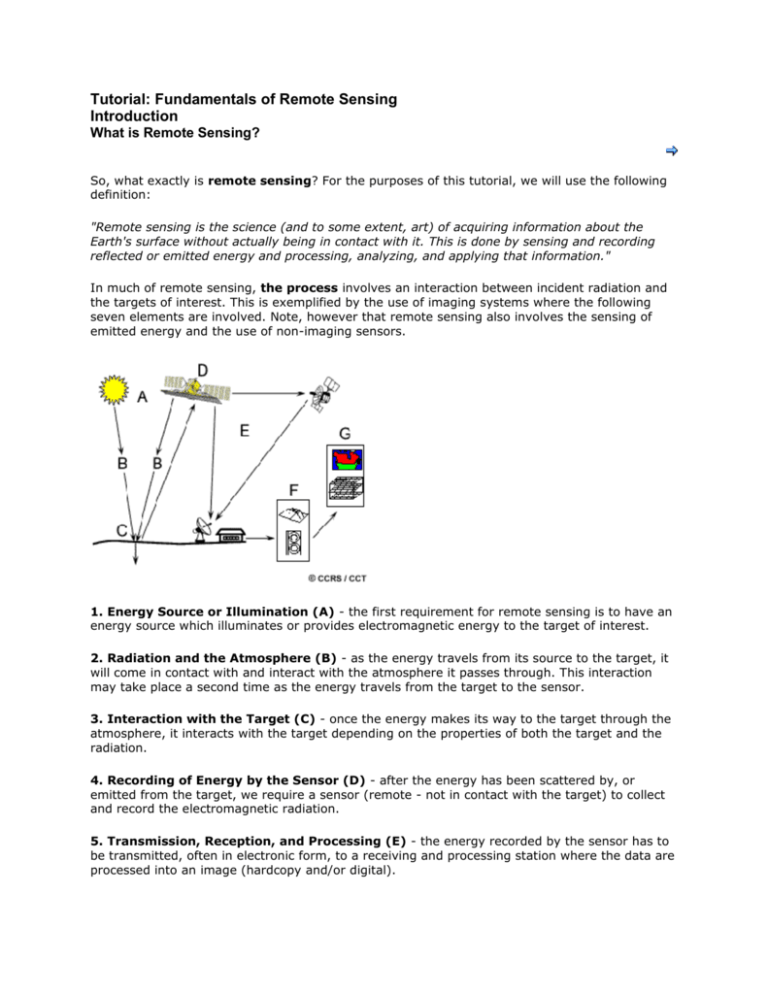
