1 - ITU

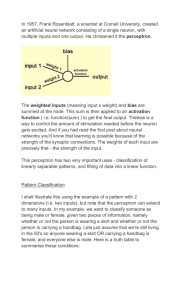

Perceptron

Claus Schneider, schneider@itu.dk
Mads Kofod, kofoden@itu.dk
Julian Ridley Schou, jars@itu.dk

1. We initialized our weights randomly to get different solutions for each new training session (we assume that we would introduce some kind of bias if we chose the initial weights ourselves). Depending on how the error varies with the weights, the initialization can be very important.

When we train the perceptron, the weights are adjusted using steepest descent. After each session (either incremental or batch), the weights are adjusted up or down using the delta rule:

Δw = ηx(t − y)

where Δw is the change applied to the weight, η is the learning rate, x is the input, t is the desired output and y is the actual output. The larger the error and the larger the input, the more the weight of that input is adjusted. We calculate the error of an output using the least mean square error:

E = ½(t − y)²

The difference between the desired and actual output is squared so that the size of the error is the same whether the output is too large or too small.

If we look at a simple perceptron with only one input, the size of the weight and the degree of the error it makes can be illustrated as shown below. In case A, the initialization of the weights does not seem to matter, since the perceptron will eventually adjust the weight towards the lowest error. In case B, training might end in a local minimum, and the optimal solution can only be found by chance.

In NoisyDamage, the values assigned to the weights turned out to be very important. In our implementation we reached a local minimum when we assigned low initial weights, which meant that our result was somewhat off from the desired result, and training ended there. In order to get a more desirable result, we set the initial weights to the local minimum from the first test run.
This resulted in the training reaching an almost perfect match.

Since our perceptron has several inputs, the illustration would need as many dimensions (which quickly becomes quite abstract). However, the idea is the same: instead of adjusting in one dimension we adjust in many. Therefore we might have the same problem as in case B, and we have no way of knowing which initial configuration of the weights will lead us to the best solution. As long as we choose to use steepest descent, our best chance is to try many different random configurations and see what solutions they come up with.

2: When using incremental learning, every input changes the weights (unless the output for that input is already correct). This means that a single, very wrong input might change the weights considerably and lead to large errors in the following outputs. Since batch learning does not update the weights until the entire set has been processed, the changes are based on the average error of the set, and a single input has less significance. Incremental learning also makes the perceptron better at adapting quickly to new input, which would take longer with batch learning.

4: When a dataset is relatively simple, it is possible to get an idea of how the input should be altered to get a small error rate. The data looks quite like a sine curve, but since sin(x) is always between −1 and 1, it is probably multiplied by a constant, and since all the values are above 0, a constant is probably added. We can also see that it has an offset on the x-axis, so another constant is probably added to x:

f(x) = A*sin(x + K) + B

In the illustration, perceptron A will probably make a good estimate but B will come closer. Instead of initializing the weights randomly, we could try to estimate them. The highest value seems to be around 90 and the lowest around 10, so an estimate could be B = 50 and A = 40. As we can see, f(1) is around 50, so K might be −1:
f(x) = 40*sin(x − 1) + 50

The line in the diagram above is drawn based on this estimate. Transferred to the weights it would look like this:

| Input | Weight |
|---|---|
| x1 | 1 |
| x2 | −1 |
| sin(x − 1) | 40 |
| 1 (bias) | 50 |

Initializing the weights with these values should find a rather good solution. However, we do realize that datasets are rarely this easy to figure out. Instead, it might be an idea to make a guess based on what we know, namely that the output is based on an agent's rotation. Therefore it might be a good idea to alter the data with sin(x) and cos(x). For the perceptron, we used sin(x − 1) as input, since we wanted to avoid back propagation. We do understand that using sin(x − 1) might be viewed as 'cheating', or at least as a very lucky guess.

The figure below shows the solutions our perceptron found for different altered inputs. The actual weights of the solutions have been left out for now. The error is the average error on the training set.

Weights found by the perceptron:

| Input | Linear | Second order | Third order | Sine |
|---|---|---|---|---|
| sin(x − 1) | - | - | - | 40 |
| x³ | - | - | 0.9 | - |
| x² | - | −6 | −15 | - |
| x | −7 | 31 | 54.5 | - |
| 1 (bias) | 70 | 33 | 21 | 47.7 |

3/8: The blue line is actually only the input x and a bias, and it represents the solution our perceptron would have found if the input was not altered. As we can clearly see, an agent with this perceptron would find that turning close to 0 degrees inflicts the most damage, while turning close to 360 degrees inflicts the least. This is a very bad representation of the data, and could possibly lead to an agent always turning counterclockwise.

The error shown at the side of the graph is the average error derived from the error function mentioned earlier. As seen in the graph, the solution that fits best is the sin(x − 1) + B solution. The actual error graph from that solution with the bias set to 48 can be seen below. The weights in the example above differ depending on which solution is chosen.
The linear solution has a very low weight, which gives an almost flat line as its solution. In our optimal solution, the weights of both the bias and the input are relatively high and approximately equal.

The problem with 'nonlinear' perceptrons is finding the best inputs to describe the data. If some inputs keep getting their weights set to zero for several different initializations, they probably don't describe the data very well, and leaving them out of the perceptron might give better results. So adding many different altered inputs, and then letting the perceptron decrease the weights on the inputs that don't fit, will give an idea of which inputs to remove. Unfortunately, the perceptron might also end up in a local minimum where none of the weights are close to zero and the error is low. In that case chances are that the test sample has been too small, and testing on new data might give large errors, which simpler perceptrons with fewer inputs might have predicted better.

The problem with a linear solution is that it might not represent the best result for every input. For some inputs the linear function will have an error of almost zero, but for other inputs the error will be very high. The function will then only resemble an average optimal solution. In the noisyXOR example, the optimal linear solution would always have an error of at least 25%. A non-linear function can be more precise; in the example above a non-linear function would be the optimal one. The average error also illustrates this. The reason the error is still this high is the noise in the outputs, as it is not possible to connect every point in an optimal solution.

7: After looking at the data we came up with a possible solution for the perceptron.
We figured that, since something negative times something positive is always negative, and something positive times something positive or something negative times something negative is always positive, it might be useful to center the inputs around the x and y axes. In this case, ½ should be subtracted from each of them. After that, if we multiply one input with the other, all the ones should be negative and the zeros positive, and we can use the activation function to separate these:

(x1 − 0.5)(x2 − 0.5) = x1x2 − 0.5x1 − 0.5x2 + 0.25

This suggests these inputs and weights:

| Input | Weight |
|---|---|
| x1x2 | 1 |
| x1 | −0.5 |
| x2 | −0.5 |
| 1 (bias) | 0.25 |

To illustrate one of the weaknesses of perceptrons, we also used our perceptron to find the best linear solution to the data, as well as a solution as described above. In both illustrations, x is simply the order of the inputs, y is the output before the activation function and the color is the correct result (i.e. white is 0, black is 1).

As expected, the linear perceptron could only find a very poor solution. With a suitably defined activation function, it could recognize all values below 0.5 or above −0.5 as either 0 or 1, but either way it will always have an error of at least about 25%, in this case probably more.

The result from the other perceptron seems a bit more promising. With either a step function or a very steep sigmoid as the activation function, all outputs above 0 could be recognized as 0 and all outputs below 0 as 1. The actual weights we found with our perceptron:

| Input | Perceptron weight |
|---|---|
| x1x2 | 0.99 |
| x1 | 0.5081 |
| x2 | 0.5102 |
| 1 (bias) | 0.2493 |

This shows that the XOR problem can be solved by a perceptron with suitably altered inputs, and that it is not necessary to use a neural network.

5: To study how the error develops, we mapped how large an error different weights produce with only one input. The input is sin(x − 1), and the bias (which has a constant weight) is 48.
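A minimal sketch of such a one-weight error sweep is given here in Python. It is not the implementation used for this report: the data are a hypothetical, noise-free stand-in generated from 40*sin(x − 1) + 48, and the names `mean_error`, `xs` and `ts` are assumptions made for illustration.

```python
import math

def mean_error(weight, xs, ts, bias=48.0):
    """Average of E = 0.5 * (t - y)^2 for y = weight * sin(x - 1) + bias."""
    total = 0.0
    for x, t in zip(xs, ts):
        y = weight * math.sin(x - 1.0) + bias
        total += 0.5 * (t - y) ** 2
    return total / len(xs)

# Hypothetical stand-in data (assumption): t = 40 * sin(x - 1) + 48, no noise.
xs = [i * 2.0 * math.pi / 50 for i in range(50)]
ts = [40.0 * math.sin(x - 1.0) + 48.0 for x in xs]

# Sweep the single weight from 0 to 100 in steps of 5, with the bias fixed at 48.
weights = list(range(0, 101, 5))
errors = [mean_error(w, xs, ts) for w in weights]
best_weight = weights[errors.index(min(errors))]
print(best_weight)
```

On this stand-in data the error is a parabola in the weight with its minimum at 40 by construction; with real, noisy data the curve keeps the same shape but its minimum no longer reaches zero.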
The illustration on the left shows how big the error is for different weights between 0 and 100, and the illustration on the right shows the graph for different weights. The illustrations show the development of the error and the graph after 40 to 240 iterations over the same dataset with incremental learning. As we can see, the graph is corrected less and less the closer it gets to the best solution.

[Figure: Learning Rate / Error, with curves for learning rates 0.03, 1 and 0.00003]

It is easy to see what the difference is. The most effective learning rate is 1, but it is also quite unstable at a later stage, whereas the learning rate of 0.03 gets to a good prediction fairly fast. The learning rate of 0.00003 learns quite slowly. It is hard to see, but it is actually learning. After around 1000 × 500 = 500,000 runs it has learned the weights quite well and the weights are very stable. A possible solution could also be to make the learning rate adjustable, so that it decreases as more iterations are made.
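An adjustable learning rate of this kind could be sketched as follows. This is only an illustration under stated assumptions, not the code behind the results above: the schedule η = η₀ / (1 + decay · epoch) is one common choice and not taken from the report, the data are a hypothetical stand-in generated from 40*sin(x − 1) + 48 with optional Gaussian noise, and `make_data` and `train` are made-up helper names.

```python
import math
import random

def make_data(n=50, noise=0.0, seed=0):
    """Hypothetical stand-in data: t = 40 * sin(x - 1) + 48 plus Gaussian noise."""
    rng = random.Random(seed)
    xs = [i * 2.0 * math.pi / n for i in range(n)]
    ts = [40.0 * math.sin(x - 1.0) + 48.0 + rng.gauss(0.0, noise) for x in xs]
    return xs, ts

def train(xs, ts, eta0, decay=0.0, epochs=100, bias=48.0, w0=0.0):
    """Incremental delta-rule training of a single weight on input sin(x - 1)."""
    w = w0
    for epoch in range(epochs):
        # Assumed schedule: the rate shrinks with each pass; decay = 0 keeps it constant.
        eta = eta0 / (1.0 + decay * epoch)
        for x, t in zip(xs, ts):
            inp = math.sin(x - 1.0)
            y = w * inp + bias          # fixed bias, one trainable weight
            w += eta * inp * (t - y)    # delta rule: dw = eta * input * (t - y)
    return w

xs, ts = make_data(noise=5.0)
print(train(xs, ts, eta0=1.0))              # constant learning rate
print(train(xs, ts, eta0=1.0, decay=0.5))   # learning rate decreases per epoch
```

With a constant rate of 1, every noisy sample keeps pulling the weight around, whereas the shrinking rate takes large steps early and small steps later, which is the behaviour the suggestion above is after.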