Avg. Doc Size - Columbia University


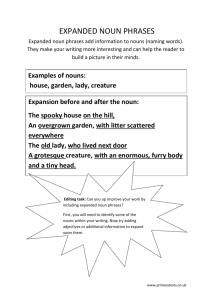

AUTOMATIC IDENTIFICATION OF INDEX TERMS FOR INTERACTIVE BROWSING

Nina Wacholder, David K. Evans, Judith L. Klavans
nina@cs.columbia.edu, devans@cs.columbia.edu

ABSTRACT
Indexes -- structured lists of terms that provide access to document content -- have been around since before the invention of printing [31]. But most text content in digital libraries cannot be accessed through indexes. The time and expense of manual preparation of standard indexes is prohibitive, and the quantity and variety of the data and the ambiguity of natural language make automatic generation of indexes a daunting task. Over the last decade, natural language processing techniques have improved enough to merit a reconsideration of automatic indexing. This paper addresses the problem of how to provide users of digital libraries with effective access to text content through automatically generated indexes consisting of terms identified by statistically and linguistically informed natural language processing techniques. We have developed LinkIT, a software tool for identifying significant topics in text [16]. LinkIT rapidly identifies noun phrases in full-text documents; each of these noun phrases is a candidate index term. These terms are then sorted and filtered using a significance metric called head sorting [39]. Wacholder et al. 2000 [41] show that these terms are perceived by users as useful index terms. We have also developed a prototype dynamic text browser, called Intell-Index, that supports user-driven navigation of index terms.
The focus of this paper is on the usefulness of the terms identified by LinkIT in dynamic text browsers like Intell-Index. We analyze the suitability of these terms for use in an index by examining LinkIT's output over a 250 MB text corpus in terms of coverage, quality and consistency. We also show how the usefulness of these terms is enhanced by automatically bringing together subsets of related index terms. Finally, we describe Intell-Index, a prototype dynamic text browser for user-driven browsing and navigation of index terms.

klavans@cs.columbia.edu

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Conference ’00, Month 1-2, 2000, City, State. Copyright 2000 ACM 1-58113-000-0/00/0000…$5.00.

Keywords
Indexing, phrases, natural language processing, browsing, genre.

1. OVERVIEW
Indexes are useful for information seekers because they:
- support browsing, a basic mode of human information seeking [32];
- provide information seekers with a valid list of terms, instead of requiring users to invent the terms on their own (identifying index terms has been shown to be one of the hardest parts of the search process, e.g., [17]);
- are organized in ways that bring related information together [31].

In spite of their well-established utility as an information access tool, expert indexes are not generally available for digital libraries. Human effort cannot keep up with the steady flow of content.
Indexes consisting of automatically identified terms have been legitimately criticized by information professionals such as Mulvany 1994 [31] on the grounds that they constitute indiscriminate lists, rather than synthesized and structured representations of content. ((results))

LinkIT implements a linguistically motivated technique for the recognition and grouping of simplex noun phrases (SNPs). LinkIT efficiently gathers minimal NPs, i.e., SNPs, and applies a refined set of post-processing rules to these SNPs to link them within a document. SNPs are identified by a finite state machine compiled from a regular expression grammar, and the candidate significant topics are ranked using frequency information that is gathered in a single pass through the document.

Our data consists of 250 megabytes of text taken from the Tipster 1994 CD-ROM [36]. The text comes from four different sources: the Wall Street Journal, the Associated Press, the Federal Register and Ziff-Davis Publications. We consider several questions related to the usability of the terms extracted by LinkIT from this data in an automatically generated index, as measured both by the quality of the index terms and by the number of index terms:
- Are automatically identified terms of sufficient quality and consistency to serve as useful access points to text content?
- What automatic techniques are available for organizing and sorting these terms in order to bring related terms together and eliminate terms not likely to be relevant for a particular information need?
- ((On average, how many useful index terms are identified for individual documents and corpora?))

The research approach that we take in this paper emphasizes fully automatic identification and organization of index terms that actually occur in the text. We have adopted this approach for three reasons:
1. Human indexers cannot keep up with the volume of text. For publications like daily newspapers, it is especially important to rapidly create indexes for large amounts of text.
2. New names and terms are constantly being invented and/or published. For example, new companies are formed (e.g., Verizon Communications Inc.); people’s names appear in the news for the first time (e.g., it is unlikely that Elian Gonzalez’ name was in a newspaper before November 25, 1999); and new product names are constantly being invented (e.g., Handspring’s Visor PDA). These terms frequently appear in print before they appear in reference sources. Therefore, indexing programs must be able to deal with new terms.
3. Techniques that rely on existing resources such as controlled vocabularies are usually not readily adopted for domains where these resources do not exist.

For these reasons, the question of how well state-of-the-art natural language processing techniques perform index term identification and organization without any manual intervention is of practical as well as theoretical importance. This paper also makes a contribution toward the development of methods for evaluating indexes and browsing applications. In addition, index terms are useful in applications such as information retrieval, document summarization and classification [45], [2].

The rest of this paper is organized as follows ((to add later)):

2. Automatically identified index terms
The promise of automatic text processing for automatic generation of indexes has been recognized for several decades, e.g., Edmundson and Wyllys 1961 [13]. However, the quantity of text and the ambiguity of natural language have made progress in this task more difficult than was originally expected. For example, ((need nice ambiguous example))

In the 1990’s, increased processing power and the decreasing cost of storage space made it feasible to process large quantities of text in a reasonable period of time ((need example)).
At the same time, advances in natural language processing have led to new techniques for extraction of information from text. Information identification is the automatic discovery of salient information, concepts, events, and relationships in full-text documents. Stimulated in part by the US government sponsored MUC (Message Understanding Conference) competitions [11],[12], natural language processing techniques for identifying coherent grammatical phrases, proper names, acronyms and other information in text have been developed. The lists of terms identified by information extraction techniques are not identical to those compiled by human beings. They inevitably contain errors and inconsistencies that look downright foolish to human eyes. It is nevertheless possible to identify coherent lists with precision in the 90% range for certain kinds of terms typically included in indexes. For example, Wacholder et al. 1997 [40] and Bikel et al. 1997 [5] achieve these rates for proper names using rule-based and statistical techniques respectively. Cowie and Lehnert 1996 [7] observe that 90% precision in information extraction is probably satisfactory for everyday use of results.

The most important development with respect to identification of indexing terms has been the development of efficient, relatively accurate techniques for identifying phrases in text. Most index terms are noun phrases, coherent linguistic units whose head (most important word) is a noun. It is no accident that expert indexes and controlled vocabularies consist primarily of noun phrases, as a scan of almost any back-of-the-book index will show. The widespread availability of part-of-speech taggers, systems that identify the grammatical category of each word, makes it possible to identify lists of noun phrases and other meaningful units in documents (e.g., [8], [37], [1], [15]). Jacquemin et al. 1997 [23] have used derivational morphology to achieve greater coverage of multiword terms for indexing and retrieval.
In addition, there has been a great deal of research on identifying subgroups of noun phrases such as proper names [5],[33],[38], technical terminology [10],[24],[6],[22] and acronyms [28],[44]. These are the types of expressions that are traditionally included in indexes.

There is also an emerging body of work on development of interactive systems to support phrase browsing (e.g., Anick and Vaithyanathan 1997 [2], Gutwin et al. 1999 [19], Nevill-Manning et al. 1997 [32], Godby and Reighart 1998 [18]). This work shares with the work described here the goal of figuring out how best to use phrases to improve information access and how to structure lists of phrases identified in documents.

But in spite of a long history of indexes as an information access tool, there has been relatively little research on indexing usability, an especially important topic with respect to automatically generated indexes [20][30]. Many issues remain to be explored: the difference between using a printed index and an electronic index, useful numbers of index terms for browsing, user issues… ((In the rest of this paper, we focus on noun phrases, and on techniques for automatically subclassifying, organizing and structuring them for presentation to users.))

3. Automatically identified noun phrases as index terms
In this section, we consider two issues in the automatic identification of noun phrases for indexing and browsing applications:
1. What is the right type of noun phrase to use?
2. What subtypes of noun phrases are available for sorting indexes?

The first problem that concerns us is what the optimal definition of noun phrase is for indexing purposes. This is more than a theoretical question because noun phrases as they occur in natural language are recursive, that is, noun phrases occur within noun phrases. For example, the complex noun phrase a form of cancer-causing asbestos actually includes two simplex noun phrases, a form and cancer-causing asbestos.
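As a rough illustration of how simplex noun phrases can be pulled out of tagged text, the sketch below runs a small regular-expression grammar over part-of-speech tags. It is not LinkIT's grammar, which is not given here: the Penn Treebank tag names, the one-letter tag classes, the pattern (optional determiner, any adjectives or nouns, final noun) and the function names `tag_class` and `simplex_noun_phrases` are all assumptions made for the example, and Python's `re` module is a backtracking matcher rather than a compiled finite state machine.

```python
import re


def tag_class(tag):
    """Map a Penn Treebank tag (assumed tag set) to a one-letter class."""
    if tag in ("DT", "PRP$"):
        return "D"  # determiner or possessive pronoun
    if tag.startswith("JJ") or tag == "CD":
        return "A"  # adjective or number
    if tag.startswith("NN"):
        return "N"  # common or proper noun
    return "O"      # anything else ends a simplex noun phrase


# Hypothetical SNP grammar: optional determiner, any modifiers, final noun.
SNP_PATTERN = re.compile(r"D?[AN]*N")


def simplex_noun_phrases(tagged):
    """Return simplex noun phrases from a list of (word, tag) pairs."""
    classes = "".join(tag_class(tag) for _, tag in tagged)
    phrases = []
    for match in SNP_PATTERN.finditer(classes):
        # Each character of `classes` stands for one token, so match
        # offsets index directly into the token list.
        words = [word for word, _ in tagged[match.start():match.end()]]
        phrases.append(" ".join(words))
    return phrases


# Hand-tagged example phrase (the tags are supplied by hand, not by a tagger).
tagged = [
    ("a", "DT"), ("form", "NN"), ("of", "IN"),
    ("cancer-causing", "JJ"), ("asbestos", "NN"),
]
print(simplex_noun_phrases(tagged))
```

On this hand-tagged phrase the sketch yields the two simplex noun phrases a form and cancer-causing asbestos; the preposition of breaks the match, so the complex phrase is never returned as a single unit.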
A system that lists only complex noun phrases would list only one term, a system that lists both simplex and complex noun phrases would list all three phrases, and a system that identifies only simplex noun phrases would list two. A human indexer would choose whichever type is appropriate for each term, but natural language processing systems cannot yet do this reliably. Because of the ambiguity of natural language, it is much easier for natural language processing systems to identify the boundaries of simplex noun phrases than of complex ones [39]. The decision to focus on simplex noun phrases rather than complex ones is therefore made for purely practical reasons.

4. Multiple views of index terms
Index terms are organized in ways that represent either the content of a single document or the content of multiple documents. For example, Table 1 shows the terms in the order in which they occur in the first sentence of the Wall Street Journal article WSJ0003 (Penn Treebank). The index terms in Table 1 were extracted automatically by the LinkIT system as part of the process of identification of all noun phrases in a document (Evans 1998 [15]; Evans et al. 2000 [16]). The first column is the sentence number; the second column represents the token span. (This information will be suppressed below.)

Table 1: Topics, in document order, extracted from first sentence of wsj0003

| Sentence | Tokens | Noun Phrase |
|---|---|---|
| S1 | 1-2 | A form |
| S1 | 4-4 | asbestos |
| S1 | 9-11 | Kent cigarette filters |
| S1 | 14-16 | a high percentage |
| S1 | 18-19 | cancer deaths |
| S1 | 21-22 | a group |
| S1 | 24-24 | workers |
| S1 | 30-31 | 30 years |
| S1 | 33-33 | researchers |

For most people, it is not difficult to guess that this list of terms has been extracted from a discussion about deaths from cancer in workers exposed to asbestos. One reason is that the list takes the form of noun phrases, a linguistically coherent unit that is meaningful to people. It is no coincidence that manually created indexes usually are composed of lists of noun phrases.
The information seeker is able to apply common sense and general knowledge of the world to interpret the terms and their possible relation to each other. At least for a short document, a complete list of terms extracted in document order can be browsed relatively easily to get a sense of the topics discussed in that document. But in general such ordered lists have limited utility, and other methods for organizing phrases are needed.

One way to reduce the number of terms is the Head Sorting method (Wacholder 1998 [39]), an automatic technique for approximating the identification of significant terms performed by humans in manual indexing. The candidate index terms in a document, i.e., the noun phrases identified by LinkIT, are sorted by head, the element that is linguistically recognized as semantically and syntactically the most important. The terms are then ranked by significance, based on the frequency of the head in the document. After filtering based on significance ranking and other linguistic information, the following topics are identified as most important in WSJ0003 (Wall Street Journal 1988, available from the Penn Treebank; heads of terms are italicized).

Table 2: Most significant terms in document, based on head frequency
asbestos workers cancer-causing asbestos cigarette filters researcher(s) asbestos fiber crocidolite paper factory

This list of phrases (which includes heads that occur above a frequency cutoff of 3 in this document, with content-bearing modifiers, if any) is a list of important concepts representative of the entire document.

Another view of the phrases identified by LinkIT is obtained by linking noun phrases in a document with the same head. A single word noun phrase can be quite ambiguous, especially if it is a frequently-occurring noun like worker, state, or act.
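The grouping and ranking steps of head sorting described above can be sketched in a few lines. This is a simplified illustration, not the LinkIT implementation: it assumes that determiners have already been stripped, that the head of a simplex noun phrase is its last word, and that significance is simply the number of phrase occurrences sharing a head. The names `head_of` and `head_sort`, the `min_freq` cutoff parameter and the sample phrase list are hypothetical.

```python
from collections import defaultdict


def head_of(phrase):
    """Assumption: the head of a simplex noun phrase is its last word."""
    return phrase.split()[-1].lower()


def head_sort(phrases, min_freq=1):
    """Group phrases by head and rank heads by how often they occur.

    Returns (head, frequency, distinct expansions) tuples, most frequent
    head first; ties are broken alphabetically by head.
    """
    groups = defaultdict(list)
    for phrase in phrases:
        groups[head_of(phrase)].append(phrase)
    ranked = sorted(groups.items(), key=lambda item: (-len(item[1]), item[0]))
    return [
        (head, len(members), sorted(set(members)))
        for head, members in ranked
        if len(members) >= min_freq
    ]


# Illustrative input only; these are not the actual counts for WSJ0003.
phrases = [
    "asbestos workers", "workers", "cancer-causing asbestos",
    "asbestos", "workers", "cigarette filters", "asbestos",
]
result = head_sort(phrases, min_freq=2)
for head, freq, expansions in result:
    print(head, freq, expansions)
```

With the cutoff set to 2, the single occurrence of cigarette filters is dropped, while the heads asbestos and workers each keep their list of distinct expansions. Listing the expansions under each head is also what makes the head-based view of a document possible.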
Noun phrases grouped by head are likely to refer to the same concept, if not always to the same entity (Yarowsky 1993 [43]), and therefore can convey the primary sense of the head as used in the text. For example, in the sentence “Those workers got a pay raise but the other workers did not”, the same sense of worker is used in both noun phrases even though two different sets of workers are referred to. Table 3 shows how the word workers is used as the head of a noun phrase in four different Wall Street Journal articles from the Penn Treebank; determiners such as a and some have been removed.

Table 3: Comparison of uses of worker as head of noun phrases across articles

| Article | Noun phrases with head workers |
|---|---|
| wsj 0003 | workers … asbestos workers |
| wsj 0319 | workers … private sector workers … private sector hospital workers … nonunion workers … private sector union workers |
| wsj 0592 | workers … private sector Steelworkers |
| wsj 0492 | workers … United workers … United Auto Workers … hourly production and maintenance workers |

This view distinguishes the type of worker referred to in the different articles, thereby providing information that helps rule in certain articles as possibilities and eliminate others. This is because the list of complete uses of the head worker provides explicit positive and implicit negative evidence about kinds of workers discussed in the article. For example, since the list for wsj_0003 includes only workers and asbestos workers, the user can infer that hospital workers or union workers are probably not referred to in this document.

The three tables above show just a few of the ways that automatically identified terms are organized and filtered in our system. We have developed a prototype Dynamic Text Browser, called Intell-Index, that allows users to interactively sort and browse terms that we have identified. Figure 1 on p.8 shows the Intell-Index opening screen.

The user first selects the collection to be browsed and then either browses the entire set of index terms identified by LinkIT or a subset. Figure 2 on p.8 shows the browsing screen, where the user may either click on a head, which will give them a list of all of the terms with that head, or on a particular term, which gives only the specific expansion of the head (or only the head). Alternatively, the user may enter a search string and specify criteria to select a subset of terms that will be returned. This gives the user better control over the search. For example, the user may specify whether or not the terms returned must match the case of the user-specified search string. This facility allows the user to view only proper names (with a capitalized last word), only common noun phrases, or both. This is an especially useful facility for controlling terms that the system returns. For example, specifying that the a in act be capitalized in a collection of social science or political articles is likely to return a list of laws with the word Act in their title; this is much more specific than an indiscriminate search for the string act, regardless of capitalization.

As the user browses the terms returned by Intell-Index, they may choose to view a list of the contexts in which the term is used; these contexts are sorted by document and ranked by normalized frequency in the document. This is a version of KWIC (keyword in context) that we call ITIC (index term in context). Finally, if the information seeker decides that the list of ITICs is promising, they may view the entire document.

5. QUALITY OF TERMS
The problem of how to identify what are good enough terms for browsing is a difficult one. Indexes are designed to serve diverse users and information needs, and by design they contain multiple terms, only a few of which will be of interest to a particular user. This contrasts with information retrieval systems, where the user typically specifies their information need through the query. Out of the context of an individual user’s information need, it is hard to determine what terms should be included. The standard evaluation metrics, precision and recall, are not helpful here because ((…)).

We therefore assess the quality of our terms by two different measurements. First we assess the quality of terms randomly extracted from our 257 MB corpus. 475 index terms (0.25% of the total) were randomly extracted from the corpus and alphabetized. Then we gave each term one of three ratings:

useful -- a term is arguably a coherent noun phrase. Therefore it makes sense as a distinct unit, even out of context. Examples of good terms are sudden current shifts, Governor Dukakis, and terminal-to-host connectivity.

useless -- a term is neither a noun phrase nor coherent. Examples of useless terms identified by the system are uncertainty is, x ix limit, and heated potato then shot. Most of these problems result from idiosyncratic or unexpected text formatting. Another source of problems is the part-of-speech tagger; for example, if it erroneously identifies a verb as a noun (as in the example uncertainty is), the resulting term is not a noun phrase.

intermediate (Moderate in Table 4) -- any term that does not clearly belong in the useful or useless categories. Typically such terms consist of one or more good noun phrases, along with some junk. In general, they are sufficiently noun-phrase-like that in some ways they fit the patterns of their component noun phrases. One example is up Microsoft Windows, which would be good if it didn’t include up; even so, it arguably deserves a place in a list of NPs referring to Windows or Microsoft. Another example is th newsroom, where th is presumably a typographical error for the. A third is Acceptance of responsibility 29, which would be fine if it didn’t include the 29. Many of these examples result from the difficulty in deciding where one proper name ends and the next one begins, as in General Electric Co. MUNICIPALS Forest Reserve District.

Table 4 shows the ratings by type of term and overall. Proper names includes all terms that look like proper nouns, as identified by a simple regular expression based on capitalization of the head. The acronym category contains all terms that might be acronyms, using a regular expression that selects terms with two or more capitalized letters. While both of these heuristics are crude, they are easily applied to the list of terms, and efficiently separate out the terms into general classes. The common category contains all other terms. (For this study, we eliminated terms that started with non-alphabetic characters.) ((the types need to be defined above)) ((Also, I wrote some explanatory text with the tables that I gave you earlier about the utility of breaking the terms into classes, which might fit well here – or a discussion of why we would want to do this.))

Table 4: Quality rating of terms, as measured by comprehensibility of terms.

| Type | Total | Useful | Moderate | Useless |
|---|---|---|---|---|
| Common | 392 | 344 (87.8%) | 18 (4.6%) | 30 (7.7%) |
| Proper | 147 | 105 (71.4%) | 36 (24.5%) | 6 (4.1%) |
| Acronym | 35 | 26 (74.3%) | 8 (22.9%) | 1 (2.9%) |
| Total | 574 | 475 (82.8%) | 62 (10.9%) | 37 (6.5%) |

The percentage of junk terms is well under 10%, which puts our results in the realm of being suitable for everyday use according to the metric of Cowie and Lehnert mentioned in Section 2.

Another way to get at this question is to give users a task and an index and find out how easy or hard it is to find information. We leave this possibility to future research.

6. STATISTICAL ANALYSIS OF TERMS
In this section, we consider the problem of quantity of text and quantity of terms as metrics of index usability.

Thoroughness of coverage is one of the standard criteria for index evaluation [20]. However, thoroughness is a double-edged sword when it comes to computer generated indexes. The ideal is thoroughness of coverage of important terms and no coverage of unimportant terms, though of course the term important is problematic in this context because we assume that different users will be consulting the index for a variety of different purposes and will have different levels of domain knowledge. We do not know of any research which evaluates user processing of lists; however, we assume that larger lists and more diverse lists are harder to process. Diverse lists are harder to process because the context provides less information about how to interpret the list. ((example)) We also need to establish a uniform standard for evaluation of browsing/indexing tools.

Our test corpus consisted of 257 MB of text from Disks 1 and 2 of the Tipster CD-ROMs prepared for the TREC conferences (TREC 1994 [11]). We used documents from four collections: Associated Press (AP), Federal Register (FR), Wall Street Journal 1990 and 1992 (WSJ) and Ziff Davis (ZD). To allow for differences across corpora, we report on overall statistics and per corpus statistics as appropriate. However, our focus in this paper is on the overall statistics.

The first question that we consider is how much the reduction of documents to noun phrases decreases the size of the data to be scanned. For each full-text corpus, we created one parallel version consisting only of all occurrences of all noun phrases (duplicates not removed) in the corpus, and another parallel version consisting only of heads (duplicates not removed), as shown in Table 5.

Table 5: Corpus size. The numbers in parentheses are the number of words per document and per corpus for the full-text columns, and the percentage of the full text size for the noun phrase (NP) and head columns.

| Corpus | Full Text | Non-unique NPs | Non-unique Heads |
|---|---|---|---|
| AP | 12.27 MB (2.0 million words) | 7.4 MB (60%) | 5.7 MB (47%) |
| FR | 33.88 MB (5.3 million words) | 20.7 MB (61%) | 13.7 MB (41%) |
| WSJ | 45.59 MB (7.0 million words) | 27.3 MB (60%) | 18.2 MB (40%) |
| ZIFF | 165.41 MB (26.3 million words) | 108.8 MB (66%) | 67.8 MB (41%) |

The number of non-unique NPs and non-unique heads reflects the number of occurrences (tokens) of NPs and heads of NPs. From the point of view of the index, however, this is only a first level reduction in the number of candidate index terms: for browsing and indexing, each term need be listed only once. After duplicates have been removed, approximately 1% of the full text remains for heads, and 22% for noun phrases. ((Should we put this in the table? Or maybe we don’t need this first table at all, but should just state this information.)) This suggests that we should use a hierarchical browsing strategy, using the shorter list of heads for initial browsing, and then using the more specific information in the SNPs when specification is requested. Interestingly, the percentages are relatively consistent across corpora.

Table 6 shows the relationship between document size in words and number of noun phrases per document. For example, for the AP corpus, an average document of 476 words will typically have about 127 ((unique or non-unique??)) NPs associated with it.

Table 6: SNPs per document ((Avg NPs/word in document?))

| Corpus | Avg. Doc Size | Avg NPs/doc |
|---|---|---|
| AP | 2.99K (476 words) | 127 |
| FR | 7.70K (1175 words) | 338 |
| WSJ | 3.23K (487 words) | 132 |
| ZIFF | 2.96K (461 words) | 129 |

Tolle and Chen 2000 [35] identified approximately 140 unique noun phrases per abstract for 10 medical abstracts. They do not report the average length in words of abstracts, but a reasonable guess is probably about 250 words per abstract. In contrast, LinkIT identifies about 130 SNPs for documents of just under 500 words. This is a much more manageable number, and we get the advantage of having an index for the full text, which by definition includes more information than an abstract. ((length issues: not considering here what happens to index terms as text gets longer, e.g., 20,000 word articles or _____ word book)) ((Dave do unique SNPs and heads maintain capitalization distinctions? what about plural?))

Table 7 shows another property of natural language: the number of unique noun phrases increases much faster than the number of unique heads. This can be seen in the fall in the ratio of unique heads to SNPs as the corpus size increases.

Table 7: Number of Unique SNPs and Heads

| Corpus | Unique NPs | Unique Heads | Ratio of Unique Heads to NPs |
|---|---|---|---|
| AP | 156798 | 38232 | 24% |
| FR | 281931 | 56555 | 20% |
| WSJ | 510194 | 77168 | 15% |
| ZIFF | 1731940 | 176639 | 10% |
| Total | 2490958 | 254724 | 10% |

Table 7 is interesting for a number of reasons: 1) the variation in the ratio of heads to SNPs per corpus, which may well reflect the diversity of AP and the FR relative to the WSJ and especially Ziff; 2) as one would expect, the ratio of heads to SNPs is smaller for the total than for the average of the individual corpora. This is because the heads are nouns. (No dictionary can list all nouns; this list is constantly growing, but at a slower rate than the possible number of noun phrases.)

In general, the vast majority of heads have two or fewer different possible expansions. There is a small number of heads, however, that contain a large number of expansions. For these heads, we could create a hierarchical index that is only displayed when the user requests further information on the particular head. In the data that we examined, on average the heads had about 6.5 expansions, with a standard deviation of 47.3.

Table 8: Average number of head expansions per corpus

| Corpus | Max | % <= 2 | 2 < % < 50 | % >= 50 | Avg | Std. Dev. |
|---|---|---|---|---|---|---|
| AP | 557 | 72.2% | 26.6% | 1.2% | 4.3 | 13.63 |
| FR | 1303 | 76.9% | 21.3% | 1.8% | 5.5 | 26.95 |
| WSJ | 5343 | 69.9% | 27.8% | 2.3% | 7.0 | 46.65 |
| ZIFF | 15877 | 75.9% | 21.6% | 2.5% | 10.5 | 102.38 |

((In this table, we’ve lost a number for the Ziff data. Also, is it really correct that the max for Ziff is 15877?? is this true?))

Additionally, these terms have not been filtered; we may be able to greatly narrow the search space if the user can provide us with further information about the type of terms they are interested in. For example, using simple regular expressions, we are able to roughly categorize the terms that we have found into four categories: regular SNPs, SNPs that look like proper nouns, SNPs that look like acronyms, and SNPs that start with non-alphabetic characters. It would be possible to narrow the index to one of these categories, or exclude some of them from the index.

Table 9: Number of SNPs by category

| Corpus | # of SNPs | # of Proper Nouns | # of Acronyms | # of non-alphabetic elements |
|---|---|---|---|---|
| AP | 156798 | 20787 (13.2%) | 2526 (1.61%) | 12238 (7.8%) |
| FR | 281931 | 22194 (7.8%) | 5082 (1.80%) | 44992 (15.95%) |
| WSJ | 510194 | 44035 (8.6%) | 6295 (1.23%) | 63686 (12.48%) |
| ZIFF | 1731940 | 102615 (5.9%) | 38460 (2.22%) | 193340 (11.16%) |
| Total | 2490958 | 189631 (7.6%) | 45966 (1.84%) | 300373 (12.06%) |

Over all of the corpora, about 10% of the SNPs start with a non-alphabetic character, which we can exclude if the user is searching for a general term. If we know that the user is searching specifically for a person, then we can use the list of proper nouns as index terms, further narrowing the search space (to approximately 10% of the possible terms).

7. CONCLUSION/DISCUSSION

8. ACKNOWLEDGMENTS
This work has been supported under NSF Grant IRI-97-12069, “Automatic Identification of Significant Topics in Domain Independent Full Text Analysis”, PI’s: Judith L. Klavans and Nina Wacholder, and NSF Grant CDA-97-53054, “Computationally Tractable Methods for Document Analysis”, PI: Nina Wacholder.

9. REFERENCES
[1] Aberdeen, J., J. Burger, D. Day, L. Hirschman, and M. Vilain (1995) “Description of the Alembic system used for MUC-6”. In Proceedings of MUC-6, Morgan Kaufmann. Also, Alembic Workbench, http://www.mitre.org/resources/centers/advanced_info/g04h/workbench.html.
[2] Anick, Peter and Shivakumar Vaithyanathan (1997) “Exploiting clustering and phrases for context-based information retrieval”, Proceedings of the 20th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR ’97), pp.314-323.
[3] Baeza-Yates, Ricardo and Berthier Ribeiro-Neto (1999) Modern Information Retrieval, ACM Press, New York.
[4] Bagga, Amit and Breck Baldwin (1998) “Entity-based cross-document coreferencing using the vector space model”, Proceedings of the 36th Annual Meeting of the Association for Computational Linguistics and the 17th International Conference on Computational Linguistics, pp.79-85.
[5] Bikel, D., S. Miller, R. Schwartz, and R. Weischedel (1997) “Nymble: a High-Performance Learning Name-finder”, Proceedings of the Fifth Conference on Applied Natural Language Processing, 1997.
[6] Boguraev, Branimir and Kennedy, Christopher (1998) “Applications of term identification technology: domain description and content characterisation”, Natural Language Engineering 1(1):1-28.
[7] Cowie, Jim and Wendy Lehnert (1996) “Information extraction”, Communications of the ACM, 39(1):80-91.
[8] Church, Kenneth W. (1988) “A stochastic parts program and noun phrase parser for unrestricted text”, Proceedings of the Second Conference on Applied Natural Language Processing, 136-143.
[9] Dagan, Ido and Ken Church (1994) “Termight: Identifying and translating technical terminology”, Proceedings of ANLP ’94, Applied Natural Language Processing Conference, Association for Computational Linguistics, 1994.
[10] Damerau, Fred J. (1993) “Generating and evaluating domain-oriented multi-word terms from texts”, Information Processing and Management 29(4):433-447.
[11] DARPA (1998) Proceedings of the Seventh Message Understanding Conference (MUC-7). Morgan Kaufmann, 1998.
[12] DARPA (1995) Proceedings of the Sixth Message Understanding Conference (MUC-6). Morgan Kaufmann, 1995.
[13] Edmundson, H.P. and Wyllys, W. (1961) “Automatic abstracting and indexing--survey and recommendations”, Communications of the ACM, 4:226-234.
[14] Evans, David A. and Chengxiang Zhai (1996) “Noun-phrase analysis in unrestricted text for information retrieval”, Proceedings of the 34th Annual Meeting of the Association for Computational Linguistics, pp.17-24. 24-27 June 1996, University of California, Santa Cruz, California, Morgan Kaufmann Publishers.
[15] Evans, David K. (1998) LinkIT Documentation, Columbia University Department of Computer Science Report. Available at <http://www.cs.columbia.edu/~devans/papers/LinkITTechDoc/>
[16] Evans, David K., Klavans, Judith, and Wacholder, Nina (2000) “Document processing with LinkIT”, Proceedings of the RIAO Conference, Paris, France.
[17] Furnas, George, Thomas K. Landauer, Louis Gomez and Susan Dumais (1987) “The vocabulary problem in human-system communication”, Communications of the ACM 30:964-971.
[18] Godby, Carol Jean and Ray Reighart (1998) “Using machine-readable text as a source of novel vocabulary to update the Dewey Decimal Classification”, presented at the SIG-CR Workshop, ASIS, <http://orc.rsch.oclc.org:5061/papers/sigcr98.html>.
[19] Gutwin, Carl, Gordon Paynter, Ian Witten, Craig Nevill-Manning and Eibe Frank (1999) “Improving browsing in digital libraries with keyphrase indexes”, Decision Support Systems 27(1-2):81-104.
[20] Hert, Carol A., Elin K. Jacob and Patrick Dawson (2000) “A usability assessment of online indexing structures in the networked environment”, Journal of the American Society for Information Science 51(11):971-988.
[21] Hatzivassiloglou, Vasileios, Luis Gravano, and Ankineedu Maganti (2000) “An investigation of linguistic features and clustering algorithms for topical document clustering”, Proceedings of Information Retrieval (SIGIR’00), pp.224-231. Athens, Greece, 2000.
[22] Hodges, Julia, Shiyun Yie, Ray Reighart and Lois Boggess (1996) “An automated system that assists in the generation of document indexes”, Natural Language Engineering 2(2):137-160.
[23] Jacquemin, Christian, Judith L. Klavans and Evelyne Tzoukermann (1997) “Expansion of multi-word terms for indexing and retrieval using morphology and syntax”, Proceedings of the 35th Annual Meeting of the Association for Computational Linguistics, (E)ACL’97, Barcelona, Spain, July 12, 1997.
[24] Justeson, John S. and Slava M. Katz (1995) “Technical terminology: some linguistic properties and an algorithm for identification in text”, Natural Language Engineering 1(1):9-27.
[25] Klavans, Judith, Nina Wacholder and David K. Evans (2000) “Evaluation of Computational Linguistic Techniques for Identifying Significant Topics for Browsing Applications”, Proceedings of LREC, Athens, Greece.
[26] Klavans, Judith and Philip Resnik (1996) The Balancing Act, MIT Press, Cambridge, Mass.
Large Corpora, Association for Computational Linguistics, June 22, 1993.
[38] Wacholder, Nina, Yael Ravin and Misook Choi (1997) “Disambiguating proper names in text”, Proceedings of the Applied Natural Language Processing Conference, March, 1997.
[39] Wacholder, Nina (1998) “Simplex noun phrases clustered by
[27] Klavans, Judith, Martin Chodorow and Nina Wacholder (1990) “From dictionary to text via taxonomy”, Electronic Text Research, University of Waterllo, Centre for the New OED and Text Research, Waterloo, Canada. [28] Larkey, Leah S., Paul Ogilvie, M. Andrew Price, Brenden Tamilio (2000) Acrophile: An Automated Acronym Extractor and Server In Digital Libraries 'Proceedings of the Fifth ACM Conference on Digital Libraries, pp. 205-214, San Antonio, TX, June, 2000. [29] Lawrence, Steve, C. Lee Giles and Kurt Bollacker (1999) “Digital libraries and autonomous citation indexing”, IEEE Computer 32(6):67-71. [30] Milstead, Jessica L. (1994) “Needs for research in indexing”, Journal of the American Society for Information Science. [31] Mulvany, Nancy (1993) Indexing Books, University of Chicago Press, Chicago, IL. [32] Nevill-Manning, Craig G., Ian H. Witten and Gordon W. Paynter (1997) “Browsing in digital libraries: a phrase based approach”, Proceedings of the DL97, Association of Computing Machinery Digital Libraries Conference, 230236. [33] Paik, Woojin, Elizabeth D. Liddy, Edmund Yu, and Mary McKenna (1996) “Categorizing and standardizing proper names for efficient information retrieval”,. In Boguraev and Pustejovsky, editors, Corpus Processing for Lexical Acquisition, MIT Press, Cambridge, MA. [34] Wall Street Journal (1988) Available from Penn Treebank, head: a method for identifying significant topics in a document”, Proceedings of Workshop on the Computational Treatment of Nominals, edited by Federica Busa, Inderjeet Mani and Patrick Saint-Dizier, pp.70-79. COLING-ACL, October 16, 1998, Montreal. [40] Wacholder, Nina, Yael Ravin and Misook Choi (1997) “Disambiguation of proper names in text”, Proceedings of the ANLP, ACL, Washington, DC., pp. 202-208. [41] Wacholder, Nina, David Kirk Evans, Judith L. 
Klavans (2000) “Evaluation of automatically identified index terms for browsing electronic documents”, Proceedings of the Applied Natural Language Processing and North American Chapter of the Association for Computational Linguistics (ANLP-NAACL) 2000. Seattle, Washington, pp. 302-307. [42] Wright, Lawrence W., Holly K. Grossetta Nardini, Alan Aronson and Thomas C. Rindflesch (1999) “Hierarchical concept indexing of full-text documents in the Unified Medical Language System Information Sources Map”. (()) [43] Yarowsky, David (1993) “One sense per collocation”, Proceedings of the ARPA Human Language Technology Workshop, Princeton, pp 266-271. [44] Yeates, Stuart. “Automatic extraction of acronyms from text”, Proceedings of the Third New Zealand Computer Science Research Students' Conference, pp.117-124, Stuart Yeates, editor. Hamilton, New Zealand, April 1999. [45] Zhou, Joe (1999) “Phrasal terms in real-world applications”. In Natural Language Information Retrieval, edited by Tomek Strazalowski, Kluwer Academic Publishers, Boston, pp.215-259. Linguistic Data Consortium, University of Pennsylvania, Philadelphia, PA. [35] Tolle, Kristin M. and Hsinchun Chen (2000) “Comparing noun phrasing techniques for use with medical digital library tools”, Journal of the American Society of Information Science 51(4):352-370. [36] Text Research Collection (TREC) Volume 2 (revised March 1994), available from the Linguistic Data Consortium. 
[37] Voutilainen, Atro (1993) “Noun phrasetool, a detector of English noun phrases”, Proceedings of Workshop on Very Figure 1 Intell-Index opening screen < http://www.cs.columbia.edu/~nina/IntellIndex/indexer.cgi> Figure 2: Browse term results Browse Term Results 6675 terms match your query ability political ability ABM abuses accommodation political accommodation accomplishment significant accomplishment human rights abuses Accord Trilateral Accord Accords Background De-Nuclearization Accords acceptance widespread acceptance broad acceptance accord access full access U.S. access accession quick accession accessions earlier accessions 12/19/2000 subsequent post-Soviet accord bilateral accord bilateral nuclear cooperation accord accords nuclear-weapon-free-zone accords accounting ... Wacholder 34
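The rough regular-expression categorization of SNPs discussed alongside Table 9 (regular SNPs, proper-noun-like SNPs, acronym-like SNPs, and SNPs that start with a non-alphabetic character) might be sketched as follows. This is only an illustration: the paper does not give the expressions it used, so the three patterns, the category labels, and the function names `categorize`, `count_by_category` and `narrow_index` are all assumptions introduced here.

```python
import re
from collections import Counter

# Hypothetical patterns; the paper does not specify its regular expressions.
# First character is anything other than an ASCII letter.
NONALPHA_START = re.compile(r"^[^A-Za-z]")
# Two or more capitals, optionally dotted, with an optional plural "s" (ABM, U.S., ABMs).
ACRONYM = re.compile(r"^(?:[A-Z]\.?){2,}s?$")
# Every whitespace-separated word begins with a capital letter.
PROPER = re.compile(r"^[A-Z][\w.'-]*(?:\s+[A-Z][\w.'-]*)*$")


def categorize(snp: str) -> str:
    """Assign one of four rough categories to a simplex noun phrase."""
    term = snp.strip()
    if NONALPHA_START.match(term):
        return "nonalphabetic"
    if ACRONYM.match(term):
        return "acronym"
    if PROPER.match(term):
        return "proper_noun"
    return "regular"


def count_by_category(snps):
    """Tally SNPs per category, in the spirit of the per-corpus counts of Table 9."""
    return Counter(categorize(s) for s in snps)


def narrow_index(snps, keep):
    """Keep only the SNPs whose category is in `keep`."""
    return [s for s in snps if categorize(s) in keep]


if __name__ == "__main__":
    terms = ["political ability", "ABM", "Trilateral Accord",
             "12/19/2000", "U.S. access"]
    print(count_by_category(terms))
    print(narrow_index(terms, {"proper_noun"}))
```

Checking the categories in a fixed order (non-alphabetic, then acronym, then proper noun) keeps them mutually exclusive, so each SNP is counted once. Patterns this simple will misclassify some terms, for example a sentence-initial capitalized common noun, which is consistent with the "roughly categorize" wording in the text.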