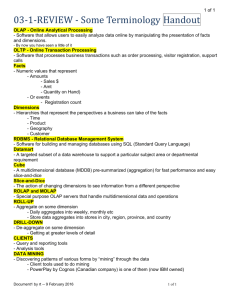

Data Warehousing And Data Mining

U7CSA33 Data Warehousing And Data Mining L T P C

3 1 0 4

UNIT I DATA WAREHOUSING 10

Data warehousing Components –Building a Data warehouse –- Mapping the DataWarehouse to a

Multiprocessor Architecture – DBMS Schemas for Decision Support – Data Extraction, Cleanup, and

Transformation Tools –Metadata.

UNIT II BUSINESS ANALYSIS 8

Reporting and Query tools and Applications – Tool Categories – The Need for Applications – Cognos

Impromptu – Online Analytical Processing (OLAP) – Need – Multidimensional Data Model – OLAP

Guidelines – Multidimensional versus Multirelational OLAP – Categories of Tools – OLAP Tools and the Internet.

UNIT III DATA MINING 8

Introduction – Data – Types of Data – Data Mining Functionalities – Interestingness of Patterns –

Classification of Data Mining Systems – Data Mining Task Primitives – Integration of a Data Mining

System with a Data Warehouse – Issues –Data Preprocessing.

UNIT IV ASSOCIATION RULE MINING AND CLASSIFICATION 11

Mining Frequent Patterns, Associations and Correlations – Mining Methods – Mining Various Kinds of

Association Rules – Correlation Analysis – Constraint Based Association Mining – Classification and

Prediction - Basic Concepts - Decision Tree Induction - Bayesian Classification – Rule Based

Classification – Classification by Backpropagation – Support Vector Machines – Associative

Classification – Lazy Learners – Other Classification Methods – Prediction

UNIT V CLUSTERING AND APPLICATIONS AND TRENDS IN DATA MINING 8

Cluster Analysis - Types of Data – Categorization of Major Clustering Methods – Kmeans – Partitioning

Methods – Hierarchical Methods - Density-Based Methods –Grid Based Methods – Model-Based

Clustering Methods – Clustering High Dimensional Data - Constraint – Based Cluster Analysis – Outlier

Analysis – Data Mining Applications.

TOTAL: 45 PERIODS

TEXT BOOKS:

1. Alex Berson and Stephen J. Smith, “ Data Warehousing, Data Mining & OLAP”, Tata McGraw – Hill

Edition, Tenth Reprint 2007.

2. Jiawei Han and Micheline Kamber, “Data Mining Concepts and Techniques”, Second Edition,

Elsevier, 2007.

REFERENCES:

1. Pang-Ning Tan, Michael Steinbach and Vipin Kumar, “ Introduction To Data Mining” , Person

Education, 2007.

2. K.P. Soman, Shyam Diwakar and V. Ajay “, Insight into Data mining Theory and Practice”, Easter

Economy Edition, Prentice Hall of India, 2006.

3. G. K. Gupta, “ Introduction to Data Mining with Case Studies”, Easter Economy Edition, Prentice Hall of India, 2006.

4. Daniel T.Larose, “Data Mining Methods and Models”, Wile-Interscience, 2006.

UNIT I DATA WAREHOUSING 10

Data warehousing Components –Building a Data warehouse –- Mapping the Data Warehouse to a Multiprocessor Architecture – DBMS Schemas for Decision Support – Data Extraction,

Cleanup, and Transformation Tools –Metadata.

DATA WAREHOUSE COMPONENTS & ARCHITECTURE

The data in a data warehouse comes from operational systems of the organization as well as from other external sources. These are collectively referred to as source systems . The data extracted from source systems is stored in a area called data staging area , where the data is cleaned, transformed , combined, deduplicated to prepare the data for us in the data warehouse. The data staging area is generally a collection of machines where simple activities like sorting and sequential processing takes place. The data staging area does not provide any query or presentation services. As soon as a system provides query or presentation services, it is categorized as a presentation server . A presentation server is the target machine on which the data is loaded from the data staging area organized and stored for direct querying by end users, report writers and other applications. The three different kinds of systems that are required for a data warehouse are:

1.

Source Systems

2.

Data Staging Area

3.

Presentation servers

The data travels from source systems to presentation servers via the data staging area. The entire process is popularly known as ETL (extract, transform, and load) or ETT (extract, transform, and transfer). Oracle’s ETL tool is called Oracle Warehouse Builder (OWB) and MS SQL Server’s

ETL tool is called Data Transformation Services (DTS).

A typical architecture of a data warehouse is shown below:

META

DAT A

HIGHLY

SUMMERIZED DATA

LIGHTLY

SUMMERIZED DATA END USER ACCESS

TOOLS

DETAILED DATA

WAREHOUSE MANAGER

Each component and the tasks performed by them are explained below:

1.

OPERATIONAL DATA

The sources of data for the data warehouse is supplied from:

(i) The data from the mainframe systems in the traditional network and hierarchical format.

(ii) Data can also come from the relational DBMS like Oracle, Informix.

(iii) In addition to these internal data, operational data also includes external data obtained from commercial databases and databases associated with supplier and customers.

2.

LOAD MANAGER

The load manager performs all the operations associated with extraction and loading data into the data warehouse. These operations include simple transformations of the data to prepare the data for entry into the warehouse. The size and complexity of this component will vary between data warehouses and may be constructed using a combination of vendor data loading tools and custom built programs.

3.

WAREHOUSE MANAGER

The warehouse manager performs all the operations associated with the management of data in the warehouse. This component is built using vendor data management tools and custom built programs. The operations performed by warehouse manager include:

(i) Analysis of data to ensure consistency

(ii) Transformation and merging the source data from temporary storage into data warehouse tables

(iii) Create indexes and views on the base table.

(iv) Denormalization

(v) Generation of aggregation

(vi) Backing up and archiving of data

In certain situations, the warehouse manager also generates query profiles to determine which indexes ands aggregations are appropriate.

4.

QUERY MANAGER

The query manager performs all operations associated with management of user queries. This component is usually constructed using vendor end-user access tools, data warehousing monitoring tools, database facilities and custom built programs. The complexity of a query manager is determined by facilities provided by the end-user access tools and database.

5.

DETAILED DATA

This area of the warehouse stores all the detailed data in the database schema. In most cases detailed data is not stored online but aggregated to the next level of details. However the detailed data is added regularly to the warehouse to supplement the aggregated data.

6.

LIGHTLY AND HIGHLY SUMMERIZED DATA

The area of the data warehouse stores all the predefined lightly and highly summarized

(aggregated) data generated by the warehouse manager. This area of the warehouse is transient as it will be subject to change on an ongoing basis in order to respond to the changing query profiles. The purpose of the summarized information is to speed up the query performance. The summarized data is updated continuously as new data is loaded into the warehouse.

7.

ARCHIVE AND BACK UP DATA

This area of the warehouse stores detailed and summarized data for the purpose of archiving and back up. The data is transferred to storage archives such as magnetic tapes or optical disks.

8.

META DATA

The data warehouse also stores all the Meta data (data about data) definitions used by all processes in the warehouse. It is used for variety of purposed including:

(i) The extraction and loading process – Meta data is used to map data sources to a common view of information within the warehouse.

(ii) The warehouse management process – Meta data is used to automate the production of summary tables.

(iii) As part of Query Management process Meta data is used to direct a query to the most appropriate data source.

The structure of Meta data will differ in each process, because the purpose is different. More about Meta data will be discussed in the later Lecture Notes.

9.

END-USER ACCESS TOOLS

The principal purpose of data warehouse is to provide information to the business managers for strategic decision-making. These users interact with the warehouse using end user access tools.

The examples of some of the end user access tools can be:

(i) Reporting and Query Tools

(ii) Application Development Tools

(iii) Executive Information Systems Tools

(iv) Online Analytical Processing Tools

(v) Data Mining Tools

Three-tier Data warehouse architecture

The bottom tier is a ware-house database server which is almost always a relational database system. The middle tier is an OLAP server which is typically implemented using either

(1) a Relational OLAP (ROLAP) model, (2) a Multidimensional OLAP (MOLAP) model. The top tier is a client, which contains query and reporting tools, analysis tools, and/or data mining tools (e.g., trend analysis, prediction, and so on).

From the architecture point of view, there are three data warehouse models: the enterprise warehouse, the data mart, and the virtual warehouse.

Enterprise warehouse : An enterprise warehouse collects all of the information about subjects spanning the entire organization. It provides corporate-wide data integration, usually from one or more operational systems or external information providers, and is cross-functional in scope. It typically contains detailed data as well as summarized data, and can range in size from a few gigabytes to hundreds of gigabytes, terabytes, or beyond.

Data mart: A data mart contains a subset of corporate-wide data that is of value to a specific group of users. The scope is connected to specific, selected subjects. For example, a marketing data mart may connect its subjects to customer, item, and sales.

The data contained in data marts tend to be summarized. Depending on the source of data, data marts can be categorized into the following two classes:

(i).Independent data marts are sourced from data captured from one or more operational systems or external information providers, or from data generated locally within a particular department or geographic area.

(ii).Dependent data marts are sourced directly from enterprise data warehouses.

Virtual warehouse : A virtual warehouse is a set of views over operational databases. For efficient query processing, only some of the possible summary views may be materialized. A virtual warehouse is easy to build but requires excess capacity on operational database servers.

Figure: A recommended approach for data warehouse development.

Building a Data warehouse

The ETL (Extract Transformation Load) process

In this section we will discussed about the 4 major process of the data warehouse. They are extract (data from the operational systems and bring it to the data warehouse), transform (the data into internal format and structure of the data warehouse), cleanse (to make sure it is of sufficient quality to be used for decision making) and load (cleanse data is put into the data warehouse).

The four processes from extraction through loading often referred collectively as Data Staging .

EXTRACT

Some of the data elements in the operational database can be reasonably be expected to be useful in the decision making, but others are of less value for that purpose. For this reason, it is necessary to extract the relevant data from the operational database before bringing into the data warehouse. Many commercial tools are available to help with the extraction process. Data

Junction is one of the commercial products. The user of one of these tools typically has an easyto-use windowed interface by which to specify the following:

(i) Which files and tables are to be accessed in the source database?

(ii) Which fields are to be extracted from them? This is often done internally by

SQL Select statement.

(iii) What are those to be called in the resulting database?

(iv) What is the target machine and database format of the output?

(v) On what schedule should the extraction process be repeated?

TRANSFORM

The operational databases developed can be based on any set of priorities, which keeps changing with the requirements. Therefore those who develop data warehouse based on these databases are typically faced with inconsistency among their data sources. Transformation process deals with rectifying any inconsistency (if any).

One of the most common transformation issues is ‘Attribute Naming Inconsistency’. It is common for the given data element to be referred to by different data names in different databases. Employee Name may be EMP_NAME in one database, ENAME in the other. Thus one set of Data Names are picked and used consistently in the data warehouse. Once all the data elements have right names, they must be converted to common formats. The conversion may encompass the following:

(i) Characters must be converted ASCII to EBCDIC or vise versa.

(ii) Mixed Text may be converted to all uppercase for consistency.

(iii) Numerical data must be converted in to a common format.

(iv) Data Format has to be standardized.

(v) Measurement may have to convert. (Rs/ $)

(vi) Coded data (Male/ Female, M/F) must be converted into a common format.

All these transformation activities are automated and many commercial products are available to perform the tasks. DataMAPPER from Applied Database Technologies is one such comprehensive tool.

CLEANSING

Information quality is the key consideration in determining the value of the information. The developer of the data warehouse is not usually in a position to change the quality of its underlying historic data, though a data warehousing project can put spotlight on the data quality

issues and lead to improvements for the future. It is, therefore, usually necessary to go through the data entered into the data warehouse and make it as error free as possible. This process is known as Data Cleansing .

Data Cleansing must deal with many types of possible errors. These include missing data and incorrect data at one source; inconsistent data and conflicting data when two or more source are involved. There are several algorithms followed to clean the data, which will be discussed in the coming lecture notes.

LOADING

Loading often implies physical movement of the data from the computer(s) storing the source database(s) to that which will store the data warehouse database, assuming it is different. This takes place immediately after the extraction phase. The most common channel for data movement is a high-speed communication link. Ex: Oracle Warehouse Builder is the API from

Oracle, which provides the features to perform the ETL task on Oracle Data Warehouse.

Data cleaning problems

This section classifies the major data quality problems to be solved by data cleaning and data transformation. As we will see, these problems are closely related and should thus be treated in a uniform way. Data transformations [26] are needed to support any changes in the structure, representation or content of data. These transformations become necessary in many situations, e.g., to deal with schema evolution, migrating a legacy system to a new information system, or when multiple data sources are to be integrated. As shown in Fig. 2 we roughly distinguish between single-source and multi-source problems and between schema- and instance-related problems. Schema-level problems of course are also reflected in the instances; they can be addressed at the schema level by an improved schema design

(schema evolution), schema translation and schema integration. Instance-level problems, on the other hand, refer to errors and inconsistencies in the actual data contents which are not visible at the schema level. They are the primary focus of data cleaning. Fig. 2 also indicates some typical problems for the various cases. While not shown in Fig. 2, the single-source problems occur (with increased likelihood) in the multi-source case, too, besides specific multi-source problems.

Single-source problems

The data quality of a source largely depends on the degree to which it is governed by schema and integrity constraints controlling permissable data values. For sources without schema, such as files, there are few restrictions on what data can be entered and stored, giving rise to a high probability of errors and inconsistencies. Database systems, on the other hand, enforce restrictions of a specific data model (e.g., the relational approach requires simple attribute values, referential integrity, etc.) as well as applicationspecific integrity constraints. Schema-related data quality problems thus occur because of the lack of appropriate model-specific or application-specific integrity constraints, e.g., due to data model limitations or poor schema design, or because only a few integrity constraints were defined to limit the overhead for integrity control. Instance-specific problems relate to errors and inconsistencies that cannot be prevented at the schema level (e.g., misspellings).

For both schema- and instance-level problems we can differentiate different problem scopes: attribute

(field), record, record type and source; examples for the various cases are shown in Tables 1 and 2. Note that uniqueness constraints specified at the schema level do not prevent duplicated instances, e.g., if information on the same real world entity is entered twice with different attribute values (see example in

Table 2).

Multi-source problems

The problems present in single sources are aggravated when multiple sources need to be integrated. Each source may contain dirty data and the data in the sources may be represented differently, overlap or contradict. This is because the sources are typically developed, deployed and maintained independently to serve specific needs. This results in a large degree of heterogeneity w.r.t. data management systems, data models, schema designs and the actual data.

At the schema level, data model and schema design differences are to be addressed by the steps of schema translation and schema integration, respectively. The main problems w.r.t. schema design are naming and structural conflicts. Naming conflicts arise when the same name is used for different objects

(homonyms) or different names are used for the same object (synonyms). Structural conflicts occur in many variations and refer to different representations of the same object in different sources, e.g., attribute vs. table representation, different component structure, different data types, different integrity constraints, etc. In addition to schema-level conflicts, many conflicts appear only at the instance level

(data conflicts). All problems from the single-source case can occur with different representations in different sources (e.g., duplicated records, contradicting records,…). Furthermore, even when there are the same attribute names and data types, there may be different value representations (e.g., for marital status) or different interpretation of the values (e.g., measurement units Dollar vs. Euro) across sources.

Moreover, information in the sources may be provided at different aggregation levels (e.g., sales per product vs. sales per product group) or refer to different points in time (e.g. current sales as of yesterday for source 1 vs. as of last week for source 2).

A main problem for cleaning data from multiple sources is to identify overlapping data, in particular matching records referring to the same real-world entity (e.g., customer). This problem is also referred to as the object identity problem, duplicate elimination or the merge/purge problem. Frequently, the information is only partially redundant and the sources may complement each other by providing additional information about an entity. Thus duplicate information should be purged out and complementing information should be consolidated and merged in order to achieve a consistent view of real world entities.

The two sources in the example of Fig. 3 are both in relational format but exhibit schema and data conflicts. At the schema level, there are name conflicts (synonyms Customer / Client , Cid / Cno ,

Sex / Gender ) and structural conflicts (different representations for names and addresses). At the instance level, we note that there are different gender representations (“0”/”1” vs. “F”/”M”) and presumably a duplicate record (Kristen Smith). The latter observation also reveals that while Cid / Cno are both sourcespecific identifiers, their contents are not comparable between the sources; different numbers (11/493) may refer to the same person while different persons can have the same number (24). Solving these problems requires both schema integration and data cleaning; the third table shows a possible solution.

Note that the schema conflicts should be resolved first to allow data cleaning, in particular detection of duplicates based on a uniform representation of names and addresses, and matching of the Gender / Sex values.

Data cleaning approaches

In general, data cleaning involves several phases

Data analysis: In order to detect which kinds of errors and inconsistencies are to be removed, a detailed data analysis is required. In addition to a manual inspection of the data or data samples, analysisprograms should be used to gain metadata about the data properties and detect data quality problems.

Definition of transformation workflow and mapping rules: Depending on the number of data sources, their degree of heterogeneity and the “dirtyness” of the data, a large number of data transformation and cleaning steps may have to be executed. Sometime, a schema translation is used to map sources to a common data model; for data warehouses, typically a relational representation is used. Early datacleaning steps can correct single-source instance problems and prepare the data for integration. Latersteps deal with schema/data integration and cleaning multi-source instance problems, e.g., duplicates.

For data warehousing, the control and data flow for these transformation and cleaning steps should be specified within a workflow that defines the ETL process (Fig. 1).

The schema-related data transformations as well as the cleaning steps should be specified by a declarative query and mapping language as far as possible, to enable automatic generation of the transformation code. In addition, it should be possible to invoke user-written cleaning code and specialpurpose tools during a data transformation workflow. The transformation steps may request user feedback on data instances for which they have no built-in cleaning logic.

Verification: The correctness and effectiveness of a transformation workflow and the transformation definitions should be tested and evaluated, e.g., on a sample or copy of the source data, to improve the definitions if necessary. Multiple iterations of the analysis, design and verification steps may be needed, e.g., since some errors only become apparent after applying some transformations.

Transformation: Execution of the transformation steps either by running the ETL workflow for loading and refreshing a data warehouse or during answering queries on multiple sources.

Backflow of cleaned data: After (single-source) errors are removed, the cleaned data should also replace the dirty data in the original sources in order to give legacy applications the improved data too and to avoid redoing the cleaning work for future data extractions. For data warehousing, the cleaned data is available from the data staging area (Fig. 1).

Data analysis

Metadata reflected in schemas is typically insufficient to assess the data quality of a source, especially if only a few integrity constraints are enforced. It is thus important to analyse the actual instances to obtain real (reengineered) metadata on data characteristics or unusual value patterns. This metadata helps finding data quality problems. Moreover, it can effectively contribute to identify attribute correspondences between source schemas (schema matching), based on which automatic data transformations can be derived.

There are two related approaches for data analysis, data profiling and data mining. Data profiling focuses on the instance analysis of individual attributes. It derives information such as the data type, length, value range, discrete values and their frequency, variance, uniqueness, occurrence of null values, typical string pattern (e.g., for phone numbers), etc., providing an exact view of various quality aspects of the attribute.

Table 3 shows examples of how this metadata can help detecting data quality problems.

Data mining helps discover specific data patterns in large data sets, e.g., relationships holding between several attributes. This is the focus of so-called descriptive data mining models including clustering, summarization, association discovery and sequence discovery [10]. As shown in [28], integrity constraints among attributes such as functional dependencies or application-specific “business rules” can be derived, which can be used to complete missing values, correct illegal values and identify duplicate records across data sources. For example, an association rule with high confidence can hint to data quality problems in instances violating this rule. So a confidence of 99% for rule “ total=quantity*unit price ” indicates that 1% of the records do not comply and may require closer examination.

Defining data transformations

The data transformation process typically consists of multiple steps where each step may perform schema and instance-related transformations (mappings). To allow a data transformation and cleaning system to generate transformation code and thus to reduce the amount of self-programming it is necessary to specify the required transformations in an appropriate language, e.g., supported by a graphical user interface.

Various ETL tools (see Section 4) offer this functionality by supporting proprietary rule languages. A more general and flexible approach is the use of the standard query language SQL to perform the data transformations and utilize the possibility of application-specific language extensions, in particular userdefined functions (UDFs) .UDFs can be implemented in SQL or a general purpose programming language with embedded SQL statements. They allow implementing a wide range of data transformations

and support easy reuse for different transformation and query processing tasks. Furthermore, their execution by the DBMS can reduce data access cost and thus improve performance. necessary data transformations to be applied to the first source. The transformation defines a view on which further mappings can be performed. The transformation performs a schema restructuring with additional attributes in the view obtained by splitting the name and address attributes of the source. The required data extractions are achieved by UDFs (shown in boldface). The UDF implementations can contain cleaning logic, e.g., to remove misspellings in city names or provide missing zip codes.

UDFs may still imply a substantial implementation effort and do not support all necessary schema transformations. In particular, simple and frequently needed functions such as attribute splitting or merging are not generically supported but need often to be re-implemented in application-specific variations (see specific extract functions in Fig. 4).

Conflict resolution

A set of transformation steps has to be specified and executed to resolve the various schema- and instancelevel data quality problems that are reflected in the data sources at hand. Several types of transformations are to be performed on the individual data sources in order to deal with single-source problems and to prepare for integration with other sources. In addition to a possible schema translation, these preparatory steps typically include:

Extracting values from free-form attributes (attribute split): Free-form attributes often capture multiple individual values that should be extracted to achieve a more precise representation and support further cleaning steps such as instance matching and duplicate elimination. Typical examples are name and address fields (Table 2, Fig. 3, Fig. 4). Required transformations in this step are reordering of values within a field to deal with word transpositions, and value extraction for attribute splitting.

Validation and correction: This step examines each source instance for data entry errors and tries to correct them automatically as far as possible. Spell checking based on dictionary lookup is useful for identifying and correcting misspellings. Furthermore, dictionaries on geographic names and zip codes help to correct address data. Attribute dependencies (birthdate – age, total price – unit price / quantity, city – phone area code,…) can be utilized to detect problems and substitute missing values or correct wrong values.

Standardization: To facilitate instance matching and integration, attribute values should be converted to a consistent and uniform format. For example, date and time entries should be brought into a specific format; names and other string data should be converted to either upper or lower case, etc. Text data may be condensed and unified by performing stemming, removing prefixes, suffixes, and stop words.

Furthermore, abbreviations and encoding schemes should consistently be resolved by consulting special synonym dictionaries or applying predefined conversion rules. Dealing with multi-source problems requires restructuring of schemas to achieve a schema integration, including steps such as splitting, merging, folding and unfolding of attributes and tables. At the instance level, conflicting representations need to be resolved and overlapping data must to be dealt with. The duplicate elimination task is typically performed after most other transformation and cleaning steps, especially after having cleaned singlesource errors and conflicting representations. It is performed either on two cleaned sources at a time or on a single already integrated data set. Duplicate elimination requires to first identify (i.e. match) similar records concerning the same real world entity. In a second step, similar records are merged into one record containing all relevant attributes without redundancy. Furthermore, redundant records are purged.

Tool support

ETL tools

A large number of commercial tools support the ETL process for data warehouses in a comprehensive way, e.g., C OPY M ANAGER (InformationBuilders), D ATA S TAGE (Informix/Ardent), E XTRACT (ETI),

P OWER M ART (Informatica), D ECISION B ASE (CA/Platinum), D ATA T RANSFORMATION S ERVICE

(Microsoft), M ETA S UITE (Minerva/Carleton), S AGENT S OLUTION P LATFORM (Sagent), and

W AREHOUSE A DMINISTRATOR (SAS). They use a repository built on a DBMS to manage all metadata about the data sources, target schemas, mappings, script programs, etc., in a uniform way. Schemas and data are extracted from operational data sources via both native file and DBMS gateways as well as standard interfaces such as ODBC and EDA. Data transformations are defined with an easy-to-use graphical interface. To specify individual mapping steps, a proprietary rule language and a comprehensive library of predefined conversion functions are typically provided. The tools also support reusing existing transformation solutions, such as external C/C++ routines, by providing an interface to integrate them into the internal transformation library. Transformation processing is carried out either by an engine that interprets the specified transformations at runtime, or by compiled code. All engine-based tools (e.g.,

C OPY M ANAGER , D ECISION B ASE , P OWER M ART , D ATA S TAGE , W AREHOUSE A DMINISTRATOR ), possess a scheduler and support workflows with complex execution dependencies among mapping jobs. A workflow may also invoke external tools, e.g., for specialized cleaning tasks such as name/address cleaning or duplicate elimination. ETL tools typically have little built-in data cleaning capabilities but allow the user to specify cleaning functionality via a proprietary API. There is usually no data analysis support to automatically detect data errors and inconsistencies. However, users can implement such logic with the metadata maintained and by determining content characteristics with the help of aggregation functions (sum, count, min, max, median, variance, deviation,…). The provided transformation library covers many data transformation and cleaning needs, such as data type conversions (e.g., date reformatting), string functions (e.g., split, merge, replace, sub-string search), arithmetic, scientific and statistical functions, etc. Extraction of values from free-form attributes is not completely automatic but the user has to specify the delimiters separating sub-values. The rule languages typically cover if-then and case constructs that help handling exceptions in data values, such as misspellings, abbreviations, missing or cryptic values, and values outside of range. These problems can also be addressed by using a table lookup construct and join functionality. Support for instance matching is typically restricted to the use of the join construct and some simple string matching functions, e.g., exact or wildcard matching and soundex. However, user-defined field matching functions as well as functions for correlating field similarities can be programmed and added to the internal transformation library.

Metadata repository

Metadata are data about data. When used in a data warehouse, metadata are the data that define warehouseobjects. Metadata are created for the data names and definitions of the given warehouse. Additional metadata are created and captured for time stamping any extracted data,

the source of the extracted data, and missing fields that have been added by data cleaning or integration processes. A metadata repository should contain:

A description of the structure of the data warehouse. This includes the warehouse schema, view, dimensions, hierarchies, and derived data definitions, as well as data mart locations and contents;

Operational metadata, which include data lineage (history of migrated data and the sequence of transformations applied to it), currency of data (active, archived, or purged), and monitoring information (warehouse usage statistics, error reports, and audit trails);

the algorithms used for summarization, which include measure and dimension definition algorithms, data on granularity, partitions, subject areas, aggregation, summarization, and predefined queries and reports;

The mapping from the operational environment to the data warehouse, which includes source databases and their contents, gateway descriptions, data partitions, data extraction, cleaning, transformation rules and defaults, data refresh and purging rules, and security

(user authorization and access control).

Data related to system performance, which include indices and profiles that improve data access and retrieval performance, in addition to rules for the timing and scheduling of refresh, update, and replication cycles; and

Business metadata, which include business terms and definitions, data ownership information, and charging policies.

UNIT II BUSINESS ANALYSIS 8

Reporting and Query tools and Applications – Tool Categories – The Need for

Applications – Cognos Impromptu – Online Analytical Processing (OLAP) – Need –

Multidimensional Data Model – OLAP Guidelines – Multidimensional versus

Multirelational OLAP – Categories of Tools – OLAP Tools and the Internet.

Features of OLTP and OLAP

The major distinguishing features between OLTP and OLAP are summarized as follows.

1. Users and system orientation : An OLTP system is customer-oriented and is used for transaction and query processing by clerks, clients, and information technology professionals. An

OLAP system is market-oriented and is used for data analysis by knowledge workers, including managers, executives, and analysts.

2. Data contents : An OLTP system manages current data that, typically, are too detailed to be easily used for decision making. An OLAP system manages large amounts of historical data, provides facilities for summarization and aggregation, and stores and manages information at different levels of granularity. These features make the data easier for use in informed decision making.

3. Database design : An OLTP system usually adopts an entity-relationship (ER) data model and an application oriented database design. An OLAP system typically adopts either a star or snowflake model and a subject-oriented database design.

4. View : An OLTP system focuses mainly on the current data within an enterprise or department, without referring to historical data or data in different organizations. In contrast, an OLAP system often spans multiple versions of a database schema. OLAP systems also deal with information that originates from different organizations, integrating information from many data stores. Because of their huge volume, OLAP data are stored on multiple storage media.

5. Access patterns : The access patterns of an OLTP system consist mainly of short, atomic transactions. Such a system requires concurrency control and recovery mechanisms. However, accesses to OLAP systems are mostly read-only operations although many could be complex queries.

Comparison between OLTP and OLAP systems.

Multidimensional DataModel .

The most popular data model for data warehouses is a multidimensional model. This model can exist in the form of a star schema, a snowflake schema, or a fact constellation schema.

Let's have a look at each of these schema types.

Star schema : The star schema is a modeling paradigm in which the data warehouse contains (1) a large central table (fact table), and (2) a set of smaller attendant tables

(dimension tables), one for each dimension. The schema graph resembles a starburst, with the dimension tables displayed in a radial pattern around the central fact table.

Figure Star schema of a data warehouse for sales.

Snowflake schema : The snowflake schema is a variant of the star schema model, where some dimension tables are normalized, thereby further splitting the data into additional tables. The resulting schema graph forms a shape similar to a snowflake. The major difference between the snowflake and star schema models is that the dimension tables of the snowflake model may be kept in normalized form. Such a table is easy to maintain and also saves storage space because a large dimension table can be extremely large when the dimensional structure is included as columns.

Figure Snowflake schema of a data warehouse for sales.

Fact constellation : Sophisticated applications may require multiple fact tables to share dimension tables. This kind of schema can be viewed as a collection of stars, and hence is called a galaxy schema or a fact constellation.

Figure Fact constellation schema of a data warehouse for sales and shipping.

A Data Mining Query Language, DMQL: Language Primitives

Cube Definition (Fact Table) define cube <cube_name> [<dimension_list>]: <measure_list>

Dimension Definition (Dimension Table) define dimension <dimension_name> as (<attribute_or_subdimension_list>)

Special Case (Shared Dimension Tables)

First time as “cube definition”

define dimension <dimension_name> as <dimension_name_first_time> in cube

<cube_name_first_time>

Defining a Star Schema in DMQL define cube sales_star [time, item, branch, location]: dollars_sold = sum(sales_in_dollars), avg_sales = avg(sales_in_dollars), units_sold = count(*) define dimension time as (time_key, day, day_of_week, month, quarter, year) define dimension item as (item_key, item_name, brand, type, supplier_type) define dimension branch as (branch_key, branch_name, branch_type) define dimension location as (location_key, street, city, province_or_state, country)

Defining a Snowflake Schema in DMQL define cube sales_snowflake [time, item, branch, location]: dollars_sold = sum(sales_in_dollars), avg_sales = avg(sales_in_dollars), units_sold = count(*) define dimension time as (time_key, day, day_of_week, month, quarter, year) define dimension item as (item_key, item_name, brand, type, supplier(supplier_key, supplier_type)) define dimension branch as (branch_key, branch_name, branch_type) define dimension location as (location_key, street, city(city_key, province_or_state, country))

Defining a Fact Constellation in DMQL define cube sales [time, item, branch, location]: dollars_sold = sum(sales_in_dollars), avg_sales = avg(sales_in_dollars), units_sold = count(*) define dimension time as (time_key, day, day_of_week, month, quarter, year) define dimension item as (item_key, item_name, brand, type, supplier_type) define dimension branch as (branch_key, branch_name, branch_type) define dimension location as (location_key, street, city, province_or_state, country) define cube shipping [time, item, shipper, from_location, to_location]: dollar_cost = sum(cost_in_dollars), unit_shipped = count(*) define dimension time as time in cube sales define dimension item as item in cube sales define dimension shipper as (shipper_key, shipper_name, location as location in cube sales, shipper_type) define dimension from_location as location in cube sales define dimension to_location as location in cube sales

Measures: Three Categories

Measure: a function evaluated on aggregated data corresponding to given dimension-value pairs.

Measures can be:

distributive: if the measure can be calculated in a distributive manner.

E.g., count(), sum(), min(), max().

algebraic: if it can be computed from arguments obtained by applying distributive aggregate functions.

E.g., avg()=sum()/count(), min_N(), standard_deviation().

holistic: if it is not algebraic.

E.g., median(), mode(), rank().

A Concept Hierarchy

Concept hierarchies allow data to be handled at varying levels of abstraction

OLAP operations on multidimensional data.

1. Roll-up : The roll-up operation performs aggregation on a data cube, either by climbing-up a concept hierarchy for a dimension or by dimension reduction. Figure shows the result of a roll-up operation performed on the central cube by climbing up the concept hierarchy for location. This hierarchy was defined as the total order street < city < province or state <country.

2. Drill-down : Drill-down is the reverse of roll-up. It navigates from less detailed data to more detailed data. Drill-down can be realized by either stepping-down a concept hierarchy for a dimension or introducing additional dimensions. Figure shows the result of a drill-down

operation performed on the central cube by stepping down a concept hierarchy for time defined as day < month < quarter < year. Drill-down occurs by descending the time hierarchy from the level of quarter to the more detailed level of month.

3. Slice and dice: The slice operation performs a selection on one dimension of the given cube, resulting in a subcube. Figure shows a slice operation where the sales data are selected from the central cube for the dimension time using the criteria time=”Q2". The dice operation defines a subcube by performing a selection on two or more dimensions.

4. Pivot (rotate): Pivot is a visualization operation which rotates the data axes in view in order to provide an alternative presentation of the data. Figure shows a pivot operation where the item and location axes in a 2-D slice are rotated.

Figure : Examples of typical OLAP operations on multidimensional data.

From on-line analytical processing to on-line analytical mining .

On-Line Analytical Mining (OLAM) (also called OLAP mining), which integrates online analytical processing (OLAP) with data mining and mining knowledge in multidimensional databases, is particularly important for the following reasons.

1. High quality of data in data warehouses.

Most data mining tools need to work on integrated, consistent, and cleaned data, which requires costly data cleaning, data transformation and data integration as preprocessing steps. A data warehouse constructed by such preprocessing serves as a valuable source of high quality data for OLAP as well as for data mining.

2.

Available information processing infrastructure surrounding data warehouses .

Comprehensive information processing and data analysis infrastructures have been or will be systematically constructed surrounding data warehouses, which include accessing, integration, consolidation, and transformation of multiple, heterogeneous databases,

ODBC/OLEDB connections, Web-accessing and service facilities, reporting and OLAP analysis tools.

3.

OLAP-based exploratory data analysis .

Effective data mining needs exploratory data analysis. A user will often want to traverse through a database, select portions of relevant data, analyze them at different granularities, and present knowledge/results in different forms. On-line analytical mining provides facilities for data mining on different subsets of data and at different levels of abstraction, by drilling, pivoting, filtering, dicing and slicing on a data cube and on some intermediate data mining results.

4. On-line selection of data mining functions.

By integrating OLAP with multiple data mining functions, on-line analytical mining provides users with the exibility to select desired data mining functions and swap data mining tasks dynamically.

Architecture for on-line analytical mining

An OLAM engine performs analytical mining in data cubes in a similar manner as an

OLAP engine performs on-line analytical processing. An integrated OLAM and OLAP architecture is shown in Figure, where the OLAM and OLAP engines both accept users' on-line queries via a User GUI API and work with the data cube in the data analysis via a Cube API.

A metadata directory is used to guide the access of the data cube. The data cube can be constructed by accessing and/or integrating multiple databases and/or by filtering a data warehouse via a Database API which may support OLEDB or ODBC connections. Since an

OLAM engine may perform multiple data mining tasks, such as concept description, association,

classification, prediction, clustering, time-series analysis,etc., it usually consists of multiple, integrated data mining modules and is more sophisticated than an OLAP engine.

Figure: An integrated OLAM and OLAP architecture.

Data Cube Computation.

Data cube can be viewed as a lattice of cuboids

The bottom-most cuboid is the base cuboid

The top-most cuboid (apex) contains only one cell

How many cuboids in an n-dimensional cube with L levels?

Materialization of data cube

Materialize every (cuboid) (full materialization), none (no materialization), or some

(partial materialization)

Selection of which cuboids to materialize

Based on size, sharing, access frequency, etc.

Cube Operation

Cube definition and computation in DMQL

define cube sales[item, city, year]: sum(sales_in_dollars) compute cube sales

Transform it into a SQL-like language (with a new operator cube by, introduced by Gray et al.’96)

SELECT item, city, year, SUM (amount)

FROM SALES

CUBE BY item, city, year

Need compute the following Group-Bys

(date, product, customer),

(date,product),(date, customer), (product, customer),

(date), (product), (customer)

()

Cube Computation: ROLAP-Based Method

Efficient cube computation methods o ROLAP-based cubing algorithms (Agarwal et al’96) o Array-based cubing algorithm (Zhao et al’97) o Bottom-up computation method (Bayer & Ramarkrishnan’99)

ROLAP-based cubing algorithms

o Sorting, hashing, and grouping operations are applied to the dimension attributes in order to reorder and cluster related tuples

Grouping is performed on some subaggregates as a “partial grouping step”

Aggregates may be computed from previously computed aggregates, rather than from the base fact table

Multi-way Array Aggregation for Cube

Computation

Partition arrays into chunks (a small subcube which fits in memory).

Compressed sparse array addressing: (chunk_id, offset)

Compute aggregates in “multiway” by visiting cube cells in the order which minimizes the # of times to visit each cell, and reduces memory access and storage cost.

Indexing OLAP data

The bitmap indexing method is popular in OLAP products because it allows quick searching in data cubes.

The bitmap index is an alternative representation of the record ID (RID) list. In the bitmap index for a given attribute, there is a distinct bit vector, By, for each value v in the domain of the attribute. If the domain of a given attribute consists of n values, then n bits are needed for each entry in the bitmap index

The join indexing method gained popularity from its use in relational database query processing. Traditional indexing maps the value in a given column to a list of rows having that value. In contrast, join indexing registers the joinable rows of two relations from a relational database. For example, if two relations R(RID;A) and S(B; SID) join on the attributes A and B, then the join index record contains the pair (RID; SID), where RID and SID are record identifiers from the R and S relations, respectively.

Efficient processing of OLAP queries

1. Determine which operations should be performed on the available cuboids. This involves transforming any selection, projection, roll-up (group-by) and drill-down operations specified in the query into corresponding SQL and/or OLAP operations. For example, slicing and dicing of a data cube may correspond to selection and/or projection operations on a materialized cuboid.

2. Determine to which materialized cuboid(s) the relevant operations should be applied. This involves identifying all of the materialized cuboids that may potentially be used to answer the query, pruning the

Metadata repository

Metadata are data about data. When used in a data warehouse, metadata are the data that define warehouseobjects. Metadata are created for the data names and definitions of the given warehouse. Additional metadata are created and captured for time stamping any extracted data, the source of the extracted data, and missing fields that have been added by data cleaning or integration processes. A metadata repository should contain:

A description of the structure of the data warehouse. This includes the warehouse schema, view, dimensions, hierarchies, and derived data definitions, as well as data mart locations and contents;

Operational metadata, which include data lineage (history of migrated data and the sequence of transformations applied to it), currency of data (active, archived, or purged), and monitoring information (warehouse usage statistics, error reports, and audit trails);

the algorithms used for summarization, which include measure and dimension definition algorithms, data on granularity, partitions, subject areas, aggregation, summarization, and predefined queries and reports;

The mapping from the operational environment to the data warehouse, which includes source databases and their contents, gateway descriptions, data partitions, data extraction, cleaning, transformation rules and defaults, data refresh and purging rules, and security

(user authorization and access control).

Data related to system performance, which include indices and profiles that improve data access and retrieval performance, in addition to rules for the timing and scheduling of refresh, update, and replication cycles; and

Business metadata, which include business terms and definitions, data ownership information, and charging policies.

Data warehouse back-end tools and utilities

Data warehouse systems use back-end tools and utilities to populate and refresh their data. These tools and facilities include the following functions:

1.

Data extraction, which typically gathers data from multiple, heterogeneous, and external sources;

2.

Data cleaning, which detects errors in the data and recti_es them when possible;

3.

Data transformation, which converts data from legacy or host format to warehouse format;

4.

Load, which sorts, summarizes, consolidates, computes views, checks integrity, and builds indices and partitions;

5.

Refresh, which propagates the updates from the data sources to the warehouse.

6.

Besides cleaning, loading, refreshing, and metadata defnition tools, data warehouse systems usually provide a good set of data warehouse management tools.

OLAP Server Architectures.

Relational OLAP (ROLAP) servers : These are the intermediate servers that stand in between a relational back-end server and client front-end tools. They use a relational or extended-relational

DBMS to store and manage warehouse data, and OLAP middleware to support missing pieces.

ROLAP servers include optimization for each DBMS back-end, implementation of aggregation navigation logic, and additional tools and services. ROLAP technology tends to have greater scalability than MOLAP technology.

Multidimensional OLAP (MOLAP) servers : These servers support multidimensional views of data through array-based multidimensional storage engines. They map multidimensional views directly to data cube array structures.Many OLAP servers adopt a two-level storage representation to handle sparse and dense data sets: the dense subcubes are identified and stored as array structures, while the sparse subcubes employ compression technology for efficient storage utilization.

Hybrid OLAP (HOLAP) servers: The hybrid OLAP approach combines ROLAP and MOLAP technology, benefitting from the greater scalability of ROLAP and the faster computation of

MOLAP. For example, a HOLAP server may allow large volumes of detail data to be stored in a relational database, while aggregations are kept in a separate MOLAP store.

Specialized SQL servers: To meet the growing demand of OLAP processing in relational databases, some relational and data warehousing forms (e.g., Redbrick) implement specialized

SQL servers which provide advanced query language and query processing support for SQL queries over star and snowflake schemas in a read-only environment.

Comparison between MDDBs and RDBMSs

MDDB RDBMS

Data is stored in multidimensional arrays Data is stored in relations

Direct inspection of an array gives a great deal of information

Not so

Can handle limited size databases (< 100GB) Proven track record for handling VLDBs

Takes long to load and update Highly volatile data are better handled

Support aggregations better

New investments need to be made and new skill sets need to be developed

RDBMSs are catching up-Aggregate

Navigators

Most enterprises already made significant investments in RDBMS technology and skill sets

Adds complexity to the overall system architecture

No additional complexity

Limited no. of facts an dimensional tables No such restriction

Examples Examples

Arbor-Essbase

Brio Query-Enterprise

Dimensional Insight-DI Diver

Oracle-Express Server

IBM-DB2

Microsoft-SQL Server

Oracle-Oracle RDBMS

Red Brick Systems-Red Brick

Warehouse

UNIT III

DATA MINING

Introduction – Data – Types of Data – Data Mining Functionalities – Interestingness of

Patterns – Classification of Data Mining Systems – Data Mining Task Primitives –

Integration of a Data Mining System with a Data Warehouse – Issues –Data

Preprocessing.

PART A

What is Data?

•

Collection of data objects and their attributes

• An attribute is a property or characteristic of an object

–

Examples: eye color of a person, temperature, etc.

–

Attribute is also known as variable, field, characteristic, or feature

• A collection of attributes describe an object

–

Object is also known as record, point, case, sample, entity, or instance

Attributes

Tid Refund Marital

Status

Taxable

Income Cheat

Objects

1

2

3

4

Yes

No

No

Yes

Single

Married 100K

Single

125K

70K

Married 120K

No

No

No

No

5 No Divorced 95K Yes

6

7

8

9

No

Yes

No

No

10

10 No

Married 60K

Divorced 220K

Single 85K

Married 75K

Single 90K

No

No

Yes

No

Yes

Attribute Values

•

Attribute values are numbers or symbols assigned to an attribute

• Distinction between attributes and attribute values

–

Same attribute can be mapped to different attribute values

•

Example: height can be measured in feet or meters

–

Different attributes can be mapped to the same set of values

•

Example: Attribute values for ID and age are integers

• But properties of attribute values can be different

– ID has no limit but age has a maximum and minimum value

Types of Attributes

•

There are different types of attributes

–

Nominal

•

Examples: ID numbers, eye color, zip codes

– Ordinal

•

Examples: rankings (e.g., taste of potato chips on a scale from 1-10), grades, height in {tall, medium, short}

– Interval

•

Examples: calendar dates, temperatures in Celsius or Fahrenheit.

–

Ratio

Examples: temperature in Kelvin, length, time, counts

Evolution of Database Technology

Data mining primitives.

A data mining query is defined in terms of the following primitives

1. Task-relevant data: This is the database portion to be investigated. For example, suppose that you are a manager of All Electronics in charge of sales in the United States and Canada. In particular, you would like to study the buying trends of customers in Canada. Rather than mining on the entire database. These are referred to as relevant attributes

2. The kinds of knowledge to be mined: This specifies the data mining functions to be performed, such as characterization, discrimination, association, classification, clustering, or evolution analysis. For instance, if studying the buying habits of customers in Canada, you may choose to mine associations between customer profiles and the items that these customers like to buy

3. Background knowledge: Users can specify background knowledge, or knowledge about the domain to be mined. This knowledge is useful for guiding the knowledge discovery process, and for evaluating the patterns found. There are several kinds of background knowledge.

4. Interestingness measures: These functions are used to separate uninteresting patterns from knowledge. They may be used to guide the mining process, or after discovery, to evaluate the discovered patterns. Different kinds of knowledge may have different interestingness measures.

5. Presentation and visualization of discovered patterns: This refers to the form in which discovered patterns are to be displayed. Users can choose from different forms for knowledge presentation, such as rules, tables, charts, graphs, decision trees, and cubes.

Figure : Primitives for specifying a data mining task.

Ent ( S )

E ( T , S )

Knowledge Discovery in Databases or KDD

Knowledge discovery as a process is depicted and consists of an iterative sequence of the following steps:

Data cleaning (to remove noise or irrelevant data),

Data integration (where multiple data sources may be combined)

Data selection (where data relevant to the analysis task are retrieved from the database)

Data transformation (where data are transformed or consolidated into forms appropriate for mining by performing summary or aggregation operations, for instance),

Data mining (an essential process where intelligent methods are applied in order to extract data patterns),

Pattern evaluation (to identify the truly interesting patterns representing knowledge based on some interestingness measures;), and

Knowledge presentation (where visualization and knowledge representation techniques are used to present the mined knowledge to the user).

Figure: Data mining as a process of knowledge discovery.

Architecture of a typical data mining system.

The architecture of a typical data mining system may have the following major components

1. Database, data warehouse, or other information repository.

This is one or a set of databases, data warehouses, spread sheets, or other kinds of information repositories. Data cleaning and data integration techniques may be performed on the data.

2. Database or data warehouse server.

The database or data warehouse server is responsible for fetching the relevant data, based on the user's data mining request.

3. Knowledge base.

This is the domain knowledge that is used to guide the search, or evaluate the interestingness of resulting patterns. Such knowledge can include concept hierarchies, used to organize attributes or attribute values into different levels of abstraction. Knowledge such as user beliefs, which can be used to assess a pattern's interestingness based on its unexpectedness, may also be included.

4. Data mining engine . This is essential to the data mining system and ideally consists of a set of functional modules for tasks such as characterization, association analysis, classification, evolution and deviation analysis.

5.

Pattern evaluation module . This component typically employs interestingness measures and interacts with the data mining modules so as to focus the search towards interesting patterns. It may access interestingness thresholds stored in the knowledge base. Alternatively, the pattern evaluation module may be integrated with the mining module, depending on the implementation of the data mining method used.

6.

Graphical user interface.

This module communicates between users and the data mining system, allowing the user to interact with the system by specifying a data mining query or task, providing information to help focus the search, and performing exploratory data mining based on the intermediate data mining results.

Figure: Architecture of a typical data mining system

Data mining functionalities

Data mining functionalities are used to specify the kind of patterns to be found in data mining tasks. In general, data mining tasks can be classified into two categories

Descriptive and Predictive.

Descriptive mining tasks characterize the general properties of the data in the database.

Predictive mining tasks perform inference on the current data in order to make predictions.

In some cases, users may have no idea of which kinds of patterns in their data may be interesting, and hence may like to search for several different kinds of patterns in parallel. Thus it is important to have a data mining system that can mine multiple kinds of patterns to accommodate di_erent user expectations or applications. Furthermore, data mining systems should be able to discover patterns at various granularities. To encourage interactive and exploratory mining, users should be able to easily \play" with the output patterns, such as by mouse clicking. Operations that can be speci_ed by simple mouse clicks include adding or dropping a dimension (or an attribute), swapping rows and columns (pivoting, or axis rotation), changing dimension representations (e.g., from a 3-D cube to a sequence of 2-D cross tabulations, or crosstabs), or using OLAP roll-up or drill-down operations along dimensions. Such operations allow data patterns to be expressed from different angles of view and at multiple levels of abstraction.

Data mining systems should also allow users to specify hints to guide or focus the search for interesting patterns. Since some patterns may not hold for all of the data in the database, a measure of certainty or \trustworthiness" is usually associated with each discovered pattern.

Data mining functionalities, and the kinds of patterns they can discover, are described below.

Concept/class description: characterization and discrimination

Data can be associated with classes or concepts. For example, in the AllElectronics store, classes of items for sale include computers and printers, and concepts of customers include bigSpenders and budgetSpenders. It can be useful to describe individual classes and concepts in summarized, concise, and yet precise terms. Such descriptions of a class or a concept are called class/concept descriptions. These descriptions can be derived via (1) data characterization, by summarizing the data of the class under study (often called the target class) in general terms, or (2) data discrimination, by comparison of the target class with one or a set of comparative classes (often called the contrasting classes), or (3) both data characterization and discrimination.

Data characterization is a summarization of the general characteristics or features of a target class of data. The data corresponding to the user-specified class are typically collected by a database query. For example, to study the characteristics of software products whose sales increased by 10% in the last year, one can collect the data related to such products by executing an SQL query. There are several methods for e_ective data summarization and characterization.

For instance, the data cube- based OLAP roll-up operation can be used to perform usercontrolled data summarization along a specified dimension. This process is further detailed in

Chapter 2 which discusses data warehousing. An attribute- oriented induction technique can be used to perform data generalization and characterization without step-by-step user interaction.

The output of data characterization can be presented in various forms. Examples include pie charts, bar charts, curves, multidimensional data cubes, and multidimensional tables, including crosstabs. The resulting descriptions can also be presented as generalized relations, or in rule form (called characteristic rules).

Association analysis

Association analysis is the discovery of association rules showing attribute-value conditions that occur frequently together in a given set of data. Association analysis is widely used for market basket or transaction data analysis. More formally, association rules are of the form X ) Y , i.e.,

\A1 ^ _ _ _ ^Am !B1 ^ _ _ _^Bn", where Ai (for i 2 f1; : : :;mg) and Bj (for j 2 f1; : : :; ng) are attribute-value pairs. The association rule X ) Y is interpreted as \database tuples that satisfy the conditions in X are also likely to satisfy the conditions in Y ".

An association between more than one attribute, or predicate (i.e., age, income, and buys).

Adopting the terminology used in multidimensional databases, where each attribute is referred to as a dimension,the above rule can be referred to as a multidimensional association rule. Suppose, as a marketing manager of AllElectronics, you would like to determine which items are frequently purchased together within the same transactions. An example of such a rule is

Contains

(T; \computer") ) contains(T; \software") [support = 1%; confidence = 50%] meaning that if a transaction T contains \computer", there is a 50% chance that it contains

\software" as well, and 1% of all of the transactions contain both. This association rule involves a single attribute or predicate (i.e., contains) which repeats. Association rules that contain a single predicate are referred to as single-dimensional association rules. Dropping the predicate notation, the above rule can be written simply as \computer ) software [1%, 50%]".

Classification and prediction

Classification is the processing of finding a set of models (or functions) which describe and distinguish data classes or concepts, for the purposes of being able to use the model to predict the class of objects whose class label is unknown. The derived model is based on the analysis of a set of training data (i.e., data objects whose class label is known). The derived model may be represented in various forms, such as classi_cation (IF-THEN) rules, decision trees, mathematical formulae, or neural networks. A decision tree is a ow-chart-like tree structure, where each node denotes a test on an attribute value, each branch represents an outcome of the test, and tree leaves represent classes or class distributions. Decision trees can be easily converted to classi_cation rules. A neural network is a collection of linear threshold units that can be trained to distinguish objects of different classes.Classification can be used for predicting the class label of data objects. However, in many applications, one may like to predict some missing or unavailable data values rather than class labels. This is usually the case when the predicted values are numerical data, and is often specifically referred to as prediction. Although prediction may refer to both data value prediction and class label prediction, it is usually con_ned to data value prediction and thus is distinct from classification. Prediction also encompasses the identification of distribution trends based on the available data. Classification and prediction may need to be preceded by relevance analysis which attempts to identify at tributes that do not contribute to the classification or prediction process.

Clustering analysis

Clustering analyzes data objects without consulting a known class label. In general, the class labels are not present in the training data simply because they are not known to begin with.

Clustering can be used to generate such labels. The objects are clustered or grouped based on the

principle of maximizing the intraclass similarity and minimizing the interclass similarity. That is, clusters of objects are formed so that objects within a cluster have high similarity in comparison to one another, but are very dissimilar to objects in other clusters. Each cluster that is formed can be viewed as a class of objects, from which rules can be derived.

Evolution and deviation analysis

Data evolution analysis describes and models regularities or trends for objects whose behavior changes over time. Although this may include characterization, discrimination, association, classi_cation, or clustering of time-related data, distinct features of such an analysis include time-series data analysis, sequence or periodicity pattern matching, and similarity-based data analysis.

Interestingness Patterns

A data mining system has the potential to generate thousands or even millions of patterns, or rules. This raises some serious questions for data mining:

A pattern is interesting if (1) it is easily understood by humans, (2) valid on new or test data with some degree of certainty, (3) potentially useful, and (4) novel. A pattern is also interesting if it validates a hypothesis that the user sought to con_rm. An interesting pattern represents knowledge. Several objective measures of pattern interestingness exist. These are based on the structure of discovered patterns and the statistics underlying them. An objective measure for association rules of the form XU Y is rule support, representing the percentage of data samples that the given rule satisfies. Another objective measure for association rules is confidence, which assesses the degree of certainty of the detected association. It is defined as the conditional probability that a pattern Y is true given that X is true. More formally, support and confidence aredefined as support(X ) Y) = Prob{XUY}g confidence (X ) Y) = Prob{Y |X}g

A classification of data mining systems

Data mining is an interdisciplinary field, the conuence of a set of disciplines including database systems, statistics, machine learning, visualization, and information science. Moreover, depending on the data mining approach used, techniques from other disciplines may be applied, such as neural networks, fuzzy and/or rough set theory, knowledge representation, inductive logic programming, or high performance computing. Depending on the kinds of data to be mined or on the given data mining application, the data mining system may also integrate techniques from spatial data analysis, information retrieval, pattern recognition, image analysis, signal processing, computer graphics, Web technology, economics, or psychology. Because of the diversity of disciplines contributing to data mining, data mining research is expected to generate a large variety of data mining systems. Therefore, it is necessary to provide a clear classi_cation of data mining systems. Such a classi_cation may help potential users distinguish data mining systems and identify those that best match their needs. Data mining systems can be categorized according to various criteria, as follows._ Classi_cation according to the kinds of databases mined. A data mining system can be classi_ed according to the kinds of databases mined.

Database systems themselves can be classi_ed according to di_erent criteria (such as data

models, or the types of data or applications involved), each of which may require its own data mining technique. Data mining systems can therefore be classi_ed accordingly.

For instance, if classifying according to data models, we may have a relational, transactional, object-oriented, object-relational, or data warehouse mining system. If classifying according to the special types of data handled, we may have a spatial, time-series, text, or multimedia data mining system, or a World-Wide Web mining system. Other system types include heterogeneous data mining systems, and legacy data mining systems.

Classification according to the kinds of knowledge mined . Data mining systems can be categorized according to the kinds of knowledge they mine, i.e., based on data mining functionalities, such as characterization, discrimination, association, classi_cation, clustering, trend and evolution analysis, deviation analysis, similarity analysis, etc. A comprehensive data mining system usually provides multiple and/or integrated data mining functionalities. Moreover, data mining systems can also be distinguished based on the granularity or levels of abstraction of the knowledge mined, including generalized knowledge (at a high level of abstraction), primitive-level knowledge (at a raw data level), or knowledge at multiple levels (considering several levels of abstraction). An advanced data mining system should facilitate the discovery of knowledge at multiple levels of abstraction.

Classification according to the kinds of knowledge mined.

Data mining systems can be categorized according to the kinds of knowledge they mine, i.e., based on data mining functionalities, such as characterization, discrimination, association, classi_cation, clustering, trend and evolution analysis, deviation analysis, similarity analysis, etc.

A comprehensive data mining system usually provides multiple and/or integrated data mining functionalities.Moreover, data mining systems can also be distinguished based on the granularity or levels of abstraction of the knowledge mined, including generalized knowledge (at a high level of abstraction), primitive-level knowledge (at a raw data level), or knowledge at multiple levels (considering several levels of abstraction). An advanced data mining system should facilitate the discovery of knowledge at multiple levels of abstraction.

Classification according to the kinds of techniques utilized

Data mining systems can also be categorized according to the underlying data mining techniques employed. These techniques can be described according to the degree of user interaction involved (e.g., autonomous systems, interactive exploratory systems, query-driven systems), or the methods of data analysis employed (e.g., database-oriented or data warehouse-oriented techniques, machine learning, statistics, visualization, pattern recognition, neural networks, and so on). A sophisticated data mining system will often adopt multiple data mining techniques or work out an e_ective, integrated technique which combines the merits of a few individual approaches.

Major issues in data mining

The scope of this book addresses major issues in data mining regarding mining methodology, user interaction, performance, and diverse data types. These issues are introduced below:

1. Mining methodology and user-interaction issues.

These reect the kinds of knowledge mined, the ability to mine knowledge at multiple granularities, the use of domain knowledge, adhoc mining, and knowledge visualization.

Mining different kinds of knowledge in databases.

Since different users can be interested in different kinds of knowledge, data mining should cover a wide spectrum of data analysis and knowledge discovery tasks, including data characterization, discrimination, association, classification, clustering, trend and deviation analysis, and similarity analysis. These tasks may use the same database in different ways and require the development of numerous data mining techniques.

Interactive mining of knowledge at multiple levels of abstraction.