Change Rate and Complexity in Software Evolution

advertisement

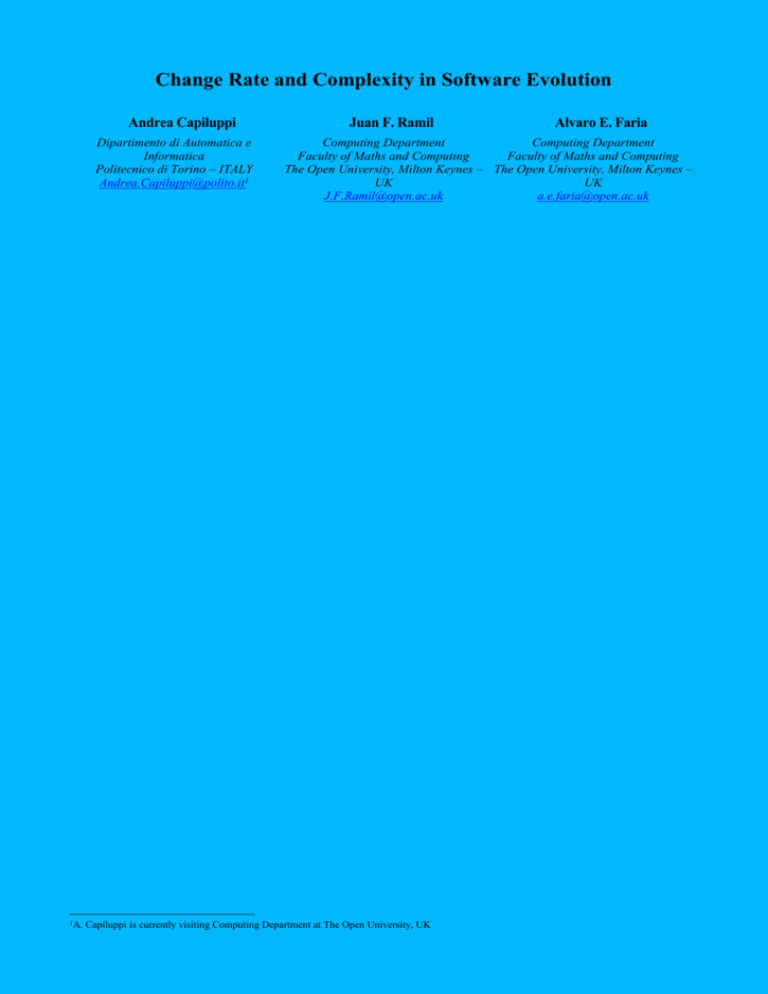

Change Rate and Complexity in Software Evolution Andrea Capiluppi Dipartimento di Automatica e Informatica Politecnico di Torino – ITALY Andrea.Capiluppi@polito.it1 1A. Juan F. Ramil Alvaro E. Faria Computing Department Computing Department Faculty of Maths and Computing Faculty of Maths and Computing The Open University, Milton Keynes – The Open University, Milton Keynes – UK UK J.F.Ramil@open.ac.uk a.e.faria@open.ac.uk Capiluppi is currently visiting Computing Department at The Open University, UK Abstract This paper deals with the problem of identifying which parts of the source code will benefit most from refactoring, that is, complexity reduction, and how this work should be prioritised for maximum impact on the evolvability of a system. Our approach ranks the files based on the value of a metric called release-touches, which simply counts the number of releases at which a given file has been changed. The metric enables us to split the code into two parts: the more stable and the less stable. The paper presents empirical data derived from the distributed file system Arla, an evolving Open Source System, with 62 public releases since 1998. The results indicate that the source files which are subject to the higher change rate include a large portion of highly complex functions. It is argued that refactoring of this less stable, more complex functions, is likely to have an impact on evolvability. Keywords: Cyclomatic Complexity, Maintenance, Metrics, Open Source, Refactoring, Software Evolution 1. Introduction and rationale The results from empirical studies of software maintenance and evolution can provide the basis for improved processes, methods and tools. In our recent work we have been studying the long-term evolution of Open Source Software (OSS). In several recent studies we have been looking at the relationship between functional growth, software structure and change rate in OSS systems [Capiluppi et al 2004a,b]. The present paper explores relationship between change rate and complexity during the evolution of an OSS software system. The results presented in this paper complement the previous studies by identifying which parts of the software system should be refactored first, so that the maximum impact on evolvability is achieved. Anti-regressive work [Lehman 1974], that is, complexity control, is an essential task in order to sustain software evolution (hypothesis 4a). Peaks of anti-regressive work are likely to be associated to discontinuities in the trends and to restructuring releases, releases in which the architectural complexity is reduced by means, for example, of re-organising modules in sub-systems (hypothesis 4b) In the early seventies, Lehman observed that as change upon change are implemented in a software system, its complexity is likely to increase, unless effort is allocated to complexity control [Lehman 1974]. Lehman used the term anti-regressive in order to refer to such complexity control and to distinguish it from progressive work. The former does not add immediate value to the system. The latter does. Re-writing code so that it implements the same function but in a less complex way is an example of antiregressive work. It adds long-term value to the system by making easier the implementation of future changes to the code. Parnas recognised the presence of software aging effects as a consequence of the accumulation of change [Parnas 1994]. Lehman and Parnas views justify the development of metricsbased methods and tools which related to anti-regressive work. In the recent years, the term refactoring, a term closely related to Lehman's antitegressive work, has become popular [refactoring]. Empirical evidence suggests that anti-regressive work is an integral part of the work undertaken during software evolution[Capiluppi & Ramil 2004]. The question is whether such work can be directed to the parts of the system in which it would provide the highest benefit in terms of improving productivity. In order to develop a metrics-based model or a tool to guide refactoring efforts, one can start, for example, by identifying the relationship between change rate and complexity using empirical data from actively evolved systems. One then can identify what parts of the system should be refactored first in order to achieve a maximum benefit. One possible approach consists of refactoring the most complex functions in the less stable [Bevan & Whitehead 2003] part of the system, the part that historically has been shown as more likely to undergo change. In this paper we explore this idea through a metrics-based approach which identifies the less stable part of a system, that is, the part of the system that has been subject to the higher evolution rate. Then, we observe the tails of the complexity distribution for the files or function belonging to this part of the system. The tails contain the subset of the most complex functions in the system that are most likely to change in the future. One can argue that refactoring these functions is likely to provide the higher gains. This paper presents initial results based on empirical data from Arla, an Open Source software which implements distributed file systems, and briefly indicates plans for our further work. 2. Approach and application to a case study In this section we introduce the sequential steps used to obtain the results presented so that other researchers may be able to replicate this study. The steps are as follows: 1. Project selection: in principle, any system, either proprietary or OSS can be studied with this approach. Access to source code is necessary to perform the analysis. Ideally one wish to have access to the source code at each one of all the past releases of the software. But it could be sufficient with having access to the code at the most recent release2 if there is also access to sufficiently complete and well-formatted change log records which can make it possible the extraction of the metrics of interest. 2. Metrics extraction: The attributes which are being examined in this study are: the number of releases for which a particular file has been touched, called release-touches, for each file in the source code, on the one hand, and the complexity of each function measured by the McCabe cyclomatic complexity index [McCabe 1976], on the other. The number of release-touches is simply the number of different releases during which a file has been manipulated, via addition of code to it, removal of existing code or modification. When the number of release-touches is one, it indicates that the file has been touched (created or modified), during one single release interval, and never touched again during the evolution of the system. The maximum possible number of release-touches for a file is the total number of releases. A number of release-touches equal to the number of releases indicates that a file has been created and then touched at every release. In the majority of the cases the investigators can only access a subset of all the releases of a software system, or the software system has inherited code from an existing software system. If this is the case, a number of release-touches equal to zero for a particular file indicates that the file was already present at the first available release and never modified again. The release-touches metric provides a way to track the number of releases during which a file has been modified, and it can also be expressed as a percentage of the total number of releases available for study in the evolution history of the system. One way of calculating the metric is to compare each distinct source file with the same file at the subsequent (or 2 In general, a software release is an executable snapshot of the evolving software which is delivered to users. We use the term release to refer to the source code, as it was at the moment in time in which it is delivered. 1.1. System studied: Arla Arla is an Open Source implementation of the AFS file system: AFS was first created by IBM, and Arla is an OSS clone emulating AFS on various configurations (Windows NT, Linux, Solaris, and so on). This system made available its first public release in February 1998 (labeled 0.0), and its most recent release is labeled 0.35.12 (considered as a minor release, February 2003). 35 major releases and 27 minor releases have been generated so far, that is, a total of 62 public releases are downloadable from the Internet for study. 1.2. Source files identification The Arla system is written mainly in C (*.c files), which together with header files (*.h) and macros (*.m4), form the basic core of the system. Figure 1 displays Arla's growth trend in total number of files: From around 330 files at its first available release, the system evolves up to 760 source files in its latest available release. By examining all the releases of the system, we identified a total of 1055 distinct source files. About 300 of them have been deleted during the evolution of the system, which explains why the latest available release consists of 760 source files only. All the Arla results in this and the next sections are obtained through PERL scripts that we developed, which can be made available to other researchers who may wish to replicate the study. Arla - evolution of source files number of source files 800 750 700 650 600 550 500 450 400 350 57 60 54 51 45 48 42 39 36 30 33 27 24 21 15 18 12 1 3 5 7 9 300 releases Figure 1 - Arla system: growth of the number of source files 1.3. Metrics extraction per file we evaluated each For each pair of Release-touches subsequent releases, distinct source file, in order to capture any delta (i.e., addition, deletion or modification). The results were summarised for each file using the release-touches metric with each of the files being assigned a fsn according to the release-touches value and then ranked. Figure 2 presents the value of the release-touches metric expressed as a percentage of the total number of releases. A small subset of the files was changed frequently (left part of Figure 2), while most of them were never changed, or changed onlyfile once (right part and tail of Figure sequence number 09 10 32 4 36 4 40 4 44 4 48 4 52 4 56 4 60 4 64 4 68 5 72 5 76 5 80 5 84 5 88 5 92 5 96 5 28 5 24 6 7 20 7 12 7 16 91 1 80% 75% 70% 65% 60% 55% 50% 45% 40% 35% 30% 25% 20% 15% 10% 5% 0% 28 60 release touches previous) release by using UNIX tools (e.g. diff). One releasetouch is counted for each release interval at which at least an addition, deletion or modification of source lines is reported by the tool. This indicator provides a measure of evolution change rate that can be applied to software repositories. 3. Identification of the less stable part of the software: our approach ranks all the files according to its number of releasetouches. One possible way of ranking the files is by assigning to each file a file sequence number (fsn), say, fsn 1 to the file that has the highest number of release-touches, and increasing the assigned fsn as the number of release-touches decreases. Files with the same release-touches number can be ranked alphabetically according to their name or following other rule. This way, the higher fsn is assigned to one the files which has the lowest number of release-touches. Then, we can use the fsn in order to split the set of files into two groups of equal size in terms of number of files. These two sets are as follows: one contains the 50 percent of the files or so with the higher number of release-touches, what we call the unstable part of the system, and the remainder 50 percent of the files which were subject to the lower change rate, are called the stable part of the system. 4. Complexity measurement for stable and unstable parts of the system: we wish to check whether the files which are subject to more frequent change (unstable part) represent the more complex part of the system, as implied independently by Lehman and Parnas. In order to do this, we use a tool [metrics] that evaluates the McCabe index for the functions belonging to the files in each of the two sets identified during step 3 above. The McCabe index is evaluated by UNIX utilities [metrics]. One issue that emerges here is that McCabe index is defined for functions (or procedures), which are contained within files, but there is no equivalent measure of complexity at file level to the McCabe index. The issue is then how to compare the measures at two different levels of granularity: the change rate at the file level (releases touches) with the complexity measure at the function level (McCabe). Possible alternatives include: calculating the median or average McCabe index of the functions in a file, evaluating the sum of the McCabe indexes per file, calculating a weighted average McCabe index for each file, which takes into account the portion of the size file which is allocated to a particular function, or alternatively, if possible, making the analysis of release-touches at the function level, instead of files. Further work will need to consider the merits of each of those. For the moment we have applied the first two: the sum and the average of the McCabe index, which are the simplest to obtain. 5. Identifying candidates for refactoring: this is done by analysing the files in the unstable part of the system and identifying those which have the highest complexity values. In general, the McCabe index is considered as an indicator of overly complex functions when its value is larger than 20 [McCabe & Butler 1989]. All the functions above that limit can become candidates for refactoring. As a final step, in order to assess whether the most complex functions have already been subject to antiregressive work in the past, we then consider, and display the evolution of their McCabe index over release sequence numbers (rsn). Figure 2 - Distribution of release-touches per file, as a percentage of total number of releases. The line and circle indicate the point that splits files into two sets of similar size. 2). deleted files does not affect the overall qualitative results of the study. Next, since we are interested in understanding whether larger files correspond to heavily changed ones, we plotted the size of the corresponding files at the latest available release. Figure 3 shows the count of lines of code when the corresponding files are ordered using the fsn (same rank order as in Figure 2). The horizontal axis shows the same files as above, while the vertical axis displays their individual size in LOCs: from this figure it's clearly visible that heavily changed files are also the larger ones. One concludes that the parts of the system subject to more frequent change are also the parts that become larger. Figure 3 - Size in lines of code for source files ranked in the same order as in Figure 2 Size of files at latest available release Lines of code (LOC) 5000 4500 4000 3500 3000 2500 2000 1500 1000 500 2 5 7 0 3 9 99 94 88 82 77 6 8 1 4 6 9 5 7 2 file sequence number 71 65 59 54 48 42 36 31 25 0 19 14 1 42 88 0 We performed a similar visualisation of Figure 3 but now for complexity. We obtained the McCabe index for complexity at the function level. Then, we obtained an indicator for complexity at the file level in two different ways: by using the sum of all complexity indexes of the functions in the same file, and by using the average of complexity indexes of all the functions in the same file. McCabe - sum of indexes per file 200 180 Sum of McCabe indexes per file Figure 160 4 - Sum of complexity index of all functions in a file 140 120 100 80 60 40 20 20 10 24 4 29 9 35 4 40 9 46 4 51 9 57 4 62 9 68 4 73 9 79 4 84 9 90 4 95 9 3 9 18 13 1 40 84 0 file sequence number The first visualisation (Figure 4) shows on the horizontal axis the files in the same rank order imposed by the number of releasetouches as in (Figure 2), while the vertical axis displays the sum of the complexities of functions inside each file. This visualisation displays the data at the latest available release of the system studied. A similar pattern than that found investigating LOCs is found here but with respect to complexity of files: the more 50 80 45 70 40 60 35 30 50 25 40 113 430 118 987 224 534 29 318 1 35 363 8 40 428 46 5 482 51 2 537 57 9 592 62 6 657 68 3 712 73 0 77 7967 82 8424 87 9081 2 9 9538 7 99 10 5 18 20 30 15 20 10 10 5 0 0 11 440 2 884 8 McCabe index Number of functions McCabe - average of indexes per file Number of functions per file Figure 5 - Number of available functions per file, at latest available 60 (latest release) Figure release 55 6 - Average complexity index of all functions per file 90 file sequence number file sequence number complex files are the ones that underwent the higher number of release-touches. The second visualization (Figure 5) displays the complexity of files when calculating the average of the complexities of their functions: the results are different from both Figure 3 and Figure 4, since complexity of files appears to be more uniformly distributed over files which have been subject to different amount of changes. This result could be a consequence of the average calculations. In order to understand better the issue, we calculate the number of functions per file and presented it as a function of the fsn in Figure 6. This shows that, when considering the latest available release, the files which have been subject to more changes are also the ones that contain a larger number of functions. The higher the number functions, the more likely that the file will contain a mixture of small and large functions with dissimilar complexity. Then the average complexity index becomes less meaningful as an indicator for the purpose of identifying candidates for refactoring. Ideally the analysis should be done at the function level. 1.4. Identification of more stable and less stable parts Observing Figure 2, there's a clear distinction between files which were heavily changed, and files which are subject of less evolution work. In order to compare the complexity of the unstable and stable parts of the systems, we split the sequence of files in Figure 2 in two halves, each containing 50% of the distinct source files. Splitting the horizontal axis of Figure 2 in two, we are interested in isolating files that were never or once changed from the others. We refer to these two subsets as the less stable part of the system and the most stable part, respectively. As presented in Figure 7, we selected the functions pertaining to the highly modified files, and displayed the distribution of complexity measures, as a McCabe number (horizontal axis). The vertical axis presents the number of functions having a particular McCabe index. A large number of small and minimally complex functions are represented by the first two bars at the left of the plot. However, a small number of functions display the highest complexity values in the tails of the distribution, with a maximum number of 136 as the McCabe index for the most complex function in the Arla system. This histogram, again, reflects the files and functions of Arla at its latest available release. We used the same approach to draw the histogram for the most stable subset of files: the results are displayed in Figure 8. At least two observations can be made here. The first observation relates to the length of tails. The tail in Figure 7 is longer than in Figure 8, which demonstrates that more frequently touched files contain the most complex functions. The second observation relates to the total sum of functions composing this subset of files: in the most stable part we can sum up to around 800 functions composing the distribution (Figure 8), while the less stable part consists of (around) 2700 functions composing the distribution (Figure 7). This indicates that the less frequently touched files are also less complex with respect of the number of functions contained. Less part stable half More stable half -McCabe - file file level level Figure 8 - Less stable of Arla: index for functions in the1000 files belonging to the less stable half of the files 900 800 Procedures functions 700 700 600 600 500 500 400 400 300 300 1. However, we have found a transformation which applied to the data have approximately make those associations linear. Figure 2 shows the plots of the associations after natural logarithmic transformations have been applied to the data. The resulting relationships are approximately linear. L 2 0 4 6 8 1 M 0 2 4 8 1 0 2 3 4 5 6 M 0 e 0c s0 C s a S b t e a b I n l e d e F x u n c t i o n s 200 200 100 100 4192 6 5123 4 4161 8 4131 0 41002 617 82 3 10 29 133 5 1641 4 19 7 53 22 59 256 5 2871 7 31 7 83 348 9 3795 00 25 411 0 0 McCabe McCabe index index Figure 7 - Most stable part of Arla: McCabe index for functions in the files belonging to the more stable half of the files set 3. Comparison between the McCabe Score function for the more and the less stable phases of an open source code development To compare the functional association of the McCabe indexes for less stable functions with the McCabe indexes for more stable functions, we make use of the statistical theory of linear models. We will be fitting the best linear regression model (in the maximum likelihood sense) to the data relative to each of the two associations and perform the appropriate hypothesis tests for the differences between them. A reason of using linear models is that the theory behind them is (apart from being coherent and robust) easy to communicate to a nonstatistical audience. Certainly, there is scope for adopting more complex (but also more refined) non-linear models in a future development such as a generalised linear model (GLM) for Poisson distributed data with logarithmic link function and possibly learning (from the data) on the Poisson’s mean. 2. 1. Testing for differences between two linear regression models To fit a linear regression model to represent the association between two variables we first must make sure the association is at least approximately linear. This is not the case in our problem where the two associations are both exponential as can be seen in the plots of Figure 0c o C r e a S b e t a I b n l d e e F x u n c t i o n s Figure 1 – The scatter plots of the original data 6 7 0 1 2 3 4 5 L 5 6 7 0 1 2 3 4 L . . 0n 0n ( 0 0 ( L M M e c o c s C C r s e a a S S b b t t a e e a b ) ) b l l e e y1x ) ) Figure 2 – the scatter plots of the transformed data. In order to verify whether there is a significant difference between the two functional relationships of the transformed data, we have adopted an approach consisting in fitting (i.e. estimating from the data) two linear regression models for each association. Note that here we are using the regression lines estimated from the data as descriptors of the associations between the variables. In this sense, the term ‘significant’ means that when comparing the two regression lines the variability of the data in each case is being taken into account. That is, the statistics used for the comparisons are normalized by measures of the data variability such that they are made appropriate to compare with the tabled value of a standard probability distribution function (that represents the ideal or normally expected value). Now, we can represent each linear regression model, in the following way. Let y = ln(McCabe), x1 = ln(LessStable), x2 = ln(MoreStable), the α’s be the intercepts and the β’s the slopes of the respective curves, and, the ε’s be the error terms (independent normally distributed zero mean errors), then we can write (1) ˆd ˆ1 ˆ2 are the estimates of αd = α1 – α2, 2 ˆ and ˆ are the data variances of each of the y2x where 2 1 2 intercepts, and n1 and n2 are the sizes of each respective data set. Zp/2 is the value of the cumulative standard normal distribution corresponding to the significance level p. Without loss, the test for the difference in the slopes is similar to the above test by substituting the α’s by the β’s accordingly. We now apply this result to each of the estimated curves. The intercept and the slope for the regression of the ln(McCabe) on the ln(LessStable) estimated by the MLE (maximum likelihood estimation) method produced the following results. 3. 2. Regressing Functions McCabe on Less Stable Coefficientsa Model 1 Unstandardized Coefficients B Std. Error 3.550 .086 -.458 .036 (Constant) lnLess Standardized Coefficients Beta -.895 a. Dependent Variable: lnCompl Note that not only the estimations for the intercept and the slope are provided in column ‘B’ of ‘Unstandardized Coefficients’ but also the corresponding standard deviations of each estimator under the ‘Std. Error’ column. They are all significant as ‘Sig’. are 0.000 for both estimates. That is, relating to our model (1) we have that (2) Comparing the two relationships represented by the equations above corresponds to comparing the intercepts and the slopes of the two linear regression models. This in its turn corresponds to testing the hypothesis: ˆ ˆ ˆ ˆ 3 . 5 5 , 0 . 4 5 8 , 0 . 0 8 6 a n d 0 . 0 3 6 . 1 1 1 1 (4) H 0 : d d 0 H a : d , d 0 Model Summary where αd = α1 – α2 and βd = β1 – β2 are the differences between the intercepts and the slopes respectively. It is a standard result in statistics that an appropriate test for the hypothesis above is to reject H0 if Model 1 R R Square .895 a .801 Adjusted R Square .796 Std. Error of the Estimate .46635 a. Predictors: (Constant), lnLess z ˆ d ˆ1 2 n1 ˆ2 2 n2 The R Square is a measure of the variability in the data explained by the estimated regression. In this case, the model is explaining about 80% of the data Z pestimated /2 variability. This means this model produces a very good fit to the data. The estimated line is represented below: (3) 0 1 2 3 4 5 6 7 O L L n b 0n i. n e( a ( es 0 L Mr r e v c e C s sd a S t b e a ) b l e ) 4 5 -L R 3 2 1 0 D S 2 1 -4 R 3 2 1 0 D S 2 1 . 0n ec e ec e 0 ( g a p g a p M r t e et r t e et c en s en s C r ds ap i e b o l no n e t t ) S V t a a r ds p i e o l no n t t A S V t d a a j rn i d a a b rn u i d s a t a b e r l d e i : z r l d d e i : ( z L e P L e r d n d n ( e R M ( s R M e s e ) c s c s P C i d C i d r a u b a e l a eu b d a e il ) c ) t e d V a l u e Before we proceed to estimating the other regression line, we visually verify whether the errors look to be independent and normally distributed with zero mean, by examining the histogram and the Normal P-P plot of the errors as well the plot of standardised errors against both the dependent variable ln(McCabe) and the predicted values. The histogram should have an approximately bell shape of a normal distribution, the P-P plot should follow the diagonal line and the standardised errors against the dependent variable and the predicted values should look scattered along the zero axis. R 3 1 8 6 4 2 0 F M S N D H 2 1 0 t er d e= ie ea s . g 4 pn D q t 2 r =e o e u e -n v g e. s 6 = . dn rs 1 a0 1 c i e .o E m 9 y n - 8n 1 8 t S 6 V t a a rn i d a a b r l d e i : le z n d C o R e m s i p l d u e a x l i t y The regression above seems to have produced approximately zero mean normally distributed errors. The standardised errors seem to have some scatter around the zero axes although there is scope for improvement on that. However, in general this model seems a good one. 4. 3. Regressing McCabe on More Stable Functions Now, the second regression (2) is estimated as: O 0 1 E D N . 2 4 6 8 0 x bo e p sp r em e e c r n av t e d e l d ed P n C - C P t u u V m P m a l oP r P i tr a r o o o b b b f l e R : e l n g C r e o s m s i p o l n e x S i t t y a n d a r d i z e d R e s i d u a l Coefficientsa Model 1 (Constant) lnMore Unstandardized Coefficients B Std. Error 3.165 .091 -.418 .032 Standardized Coefficients Beta -.940 a. Dependent Variable: lnCompl ˆ ˆ ˆ ˆ 3 . 1 6 5 , 0 . 4 1 8 , 0 . 0 9 1 a n d 0 . 0 3 2 . 2 2 (5) 2 2 R 3 2 1 0 D S 1 . 0 5 ec e g a p r t e et en s r ds p i e o l no n t t A S V t d a a j rn u i d s a t a b e r l d d e i : ( z P L e r d n e ( s R M s e ) c s P C i d r a eu b d a e il c ) t e d V a l u e Model Summary Model 1 R R Square .940 a .884 Adjusted R Square .878 Std. Error of the Estimate .32760 a. Predictors: (Constant), lnMore 0 1 2 3 4 5 6 7 L lLO R F 7 6 5 4 3 2 1 0 M S N D H n 2 1 C se 0 bn 0n i. t er d e= ie ea s . ( g 2 pn D q r or M e a t r 4 =e o e v u e e -n v om g e. s 5 ep r d = . dn rs a0 1 3 l S c i e .o E m 9 y n e - 7n ax t 1 8 t S 6 b i V t l a a t y e ) rn i d a a b l r d e i : le z n C d o R m e s i p d l u e x a l i t This model is also a good model. It explains 88.4% of the data variability and does not present any serious departures for the normality and independence assumptions of the linear regression models. y 5. 4. Testing for differences Now, substituting the estimated values shown in (4) and (5) on the test statistics (3) we can test for difference between the intercepts: ˆ ˆ ˆ ˆ 3 . 5 5 , 0 . 4 5 8 , 0 . 0 8 6 a n d 0 . 0 3 6 . 1 O 0 1 E D N . 2 4 6 8 0 x bo e p sp r em e e c n r av t e d e l d ed P n C - P C t u V u m P m a l oP r P i tr a r o o b o b b f l e R : e l n g C r e o s m s i p o l n e x S i t t y a n d a r d i z e d R e s i d u a l 1 z 2 ˆ1 ˆ 2 ˆ21 ˆ2 2 n1 1 . 0n ec e 0 ( g a p M r t e et c en s C r ds ap i e b o l no n e t t ) S V t a a rn i d a a b r l d e i : z L e d n ( R M e c s C i d a u b a e l 1 ˆ ˆ ˆ ˆ 3 . 1 6 5 , 0 . 4 1 8 , 0 . 0 9 1 a n d 0 . 0 3 2 . 2 4 -L R 3 2 1 0 D S 1 n2 2 2 3.55 3.165 2 2 ; 0.385 ; 16 0.02283 ; 0.04 8.5746 103 (0.086) (0.091) 42 24 ) z ˆ1 ˆ2 ˆ 21 ˆ 2 2 n1 n2 0.458 0.418 2 2 (0.036) (0.032) 42 24 Now, at 1% significance level (p = 0.01) we have from the table of values for the standard normal distribution that Zp/2 = Z0.005 = 2.56. and= Therefore, z 2.56 for both and we can conclude that there is evidence from the data to support the rejection of H0, i.e. the two regression lines are different. 4. Identification functions of most complex An histogram of the McCabe index for the most complex functions, at the latest available release, is drawn in Figure 9. Basically, we observe a subset of 25 functions that are part of the tail of the distribution in Figure 7, with a peak around a value of 135 for the McCabe index, which denotes a highly complex and critical function, together with other functions which should be also considered as highly complex. Based on these observations, one may wish to investigate how the complexity of these functions evolved, whether they were touched frequently, and if yes, how many times. Figure 10 and Figure 11 show two subsets of these functions, and in only one case (evidenced in Figure 10) the final complexity of the function is lower than in its initial state. All the other functions depicted Figure 9 - Most complex functions at latest available release Most complex functions - latest available release 140 130 McCabe index 120 110 100 90 80 70 60 50 40 30 20 10 0 functions show an increase value of the McCabe index. This suggest that within this system a small portion of functions is highly complex, and the several times these functions were handled, (the steps depicted in both Figure 10 and Figure 11) the outcome was an increasing complexity. Some cases of anti-regressive maintenance are visible when considering descending steps, but in general, when comparing the values for the first and the last release available, the overall complexity trend for each plotted function is increasing. Given that the distribution of the number of functions per file is not uniform, with the highest number of functions present in the most frequently touched files, we decided to split the system in two halves based on the number of release-touches as in Figure 2, but selecting the two halves, such as the two contain the same number of functions. We have found that the splitting point in Figure 2 will be now fsn 210. In order words, the first 210 files ranked by the number of release-touches, have 50 percent of the functions. We have also found that 20 of the 25 most complex functions are present in the less stable part of Arla and only 5 of 25 in the most stable part, providing further evidence between the link between change and complexity. In a different paper we have presented evidence that there have been complexity control work in the Arla system with between 1 and 2 percent of the files being subject of some refactoring [Capiluppi & Ramil 2004]. The results presented here suggests that focusing this relative small refactoring effort on the less stable, highly complex part of the system will be an advantage for the long term evolvability of this system. Note: Figure 2 displays not only the files present in the latest release but also those files that have been deleted from the Arla code repository. But it was not possible to obtain the size in LOCs and McCabe indexes for deleted files. For the sake of simplicity of data manipulation, in Figure 3,4,5 and 6, the deleted files have been ranked, plotted and given a zero value for their LOC and McCabe indexes. The presence of the deleted files with zero McCabe value has biased the histograms in Figure 3,4,5 and 6 but, we believe, not to the point of changing the overall conclusions of this analysis, which are mainly qualitative. In fact the authors are have performed statistical analysis of the histograms removing the deleted files from the dataset and the analysis confirms the conclusions stated here. The authors plan to submit for publication the results of the statistical analysis in the near future. 5. Conclusions and further work The study of the relationship between change rate and complexity is important given the lack of approaches that can provide objective guidance to developers as to which parts of the system should be refactored first than others. Requirements evolve and generally it is difficult to predict the detailed future requirements for an evolving system. Given this limitation, we use historical data reflecting the past evolution of the system. We can use the patterns we observe in historical behaviour in order to guide the future evolution of the system. In this paper we have analyzed Arla, a system released as Open Source Software, from the point of view of the number of changes and corresponding complexity of its components. We have used a metric, release-touches, which represents the number of releases where a change to a particular file was detected. We used this metric to rank the files according to their stability over releases. The corresponding size of files at the latest available release was evaluated, and we found that heavily changed files correspond in general to larger files. In addition, heavily changed files contain more functions than less changed files: this is also reflected when depicting the sum of McCabe indexes per file, which tend to follow a similar pattern to the distribution of sizes. Based on the distribution of release changes, we divided the corresponding area in two halves, each containing 50 percent of the files, containing two different profiles of files changed. We modified the granularity level of our analysis, and observed the functions rather than files. The half having the most frequent changes (the less stable part) revealed to be both the more complex in the sense of highest McCabe index, and the bigger in terms the total number of functions. We finally focused the analysis on the highly complex functions, and observed the evolution of their complexity in the application life-cycle: several steps of increasing complexity are recognizable, while efforts for reducing it (descending steps) are not enough to avoid a latest state which is more complex than the initial state. The corelation observed between high complexity and low statibility suggests that focusing refactoring efforts on the less stable parts of the system can be of benefit in order to avoid system decay and stagnation and for maximum impact on productivity. This paper presents results which we plan to further investigate as part of our research programme into Open Source Software evolution. The analysis presented in this paper is visual and mainly qualitative. We plan, however, to refine our approach. We have already started to apply statistical modeling techniques in order to encapsulate the patterns observed. Further work could involve the adaptation of our approach for object-oriented systems and the exploration of different criteria for the splitting of the system into more stable and less stable parts. We intend to perform dependency analysis between each of the most complex functions and the rest of the system in order to asses whether refactoring can be performed in a localised, function by function basis, or whether it will require modification of large parts of the system. This will enable us to estimate the cost of the refactoring and prioritize the possible refactorings in an objective manner. We also plan to replicate this initial study in other 20 Open Source software or so from which we have collected an empirical dataset. Using this dataset we plan to explore approaches to determine which parts of an evolving system will benefit most from complexity control work such as refactoring. 6. References [Bevan & Whitehead 2003] Bevan J. & Whitehead E.J., Identification of Software Instabilities, WCRE 2003, 13 - 16 November 2003, Victoria, Canada [Capiluppi & Ramil 2004] Capiluppi A., Ramil J.F.; Studying the Evolution of Open Source Systems at Different Levels of Granularity: Two Case Studies, Proc. Intl. Workshop on the Principles of Software Evolution, IWPSE 2004, 5 – 6 Sept. 2004, Kyoto, Japan [Capiluppi et al 2004a] Capiluppi A., Morisio M., Ramil J.F.; Structural evolution of an Open Source system: a case study, Proc. of the 12th International Workshop on Program Comprehension, 24 - 26 June 2004, Bari, Italy, [Capiluppi et al 2004b] Capiluppi A., Morisio M., Ramil J.F.; 2004, “The Evolution of Source Folder Structure in actively evolved Open Source Systems”, Proc. Metrics 2004, 14 - 16 September, 2004, Chicago, Illinois, USA [Lehman 1974] Lehman M.M.; Programs, Cities, Students, Limits to Growth?, Inaugural Lecture, in Imperial College of Science and Technology Inaugural Lecture Series, v. 9, 1970, 1974: 211 - 229. Also in Programming Methodology, Gries D (ed.), Springer Verlag, 1978: 42 - 62 [metrics] Metrics, a tool for extracting various software metrics, at http://barba.dat.escet.urjc.es/index.php?menu=Tools <as of May 2004> [McCabe 1976] McCabe T.J., “A Complexity Measure”, IEEE Trans. on Softw. Eng. 2(4), 1976, pp 308 – 320 [McCabe & Butler 1989] McCabe T.J. and Butler C.W.; "Design Complexity Measurement and Testing." Communications of the ACM 32, 12, 1989, pp 1415 – 1425 [Parnas 1994] Parnas D.L.; “Software Aging”, Proc. ICSE 16, May 16-21, 1994, Sorrento, Italy, pp 279 – 287 [refactoring] Refactoring, a web-site with links to sources in the topic, at http://www.refactoring.com/ <as of Sept 2004> Figure 10 - Evolution of the McCabe index for the of the 12 most complex functions in the Arla system. Note that only in one Evolution of subset first 12 most complex functions case (Function-7) the McCabe index at latest available stage is lower than the first available release 140 130 120 110 Funct-1 Funct-2 Funct-3 Funct-4 Funct-5 Funct-7 Funct-9 Funct-11 Funct-12 90 80 70 60 50 40 30 20 10 releases 62 59 56 53 50 47 44 41 38 35 32 29 26 23 20 17 14 9 11 5 7 0 1 3 McCabe index 100 Figure 11 - Evolution of the McCabe index for the functions in the Arla system ranked 13 th to 24th from higher to lower complexity. Evolution of second half of 24 most complex functions 40 35 Funct-13 Funct-14 Funct-15 Funct-19 Funct-20 Funct-21 Funct-22 Funct-23 Funct-24 25 20 15 10 5 releases 57 60 51 54 48 45 42 39 36 33 30 27 24 21 18 15 12 0 1 3 5 7 9 McCabe index 30