Encoded Octets - Intel® Developer Zone


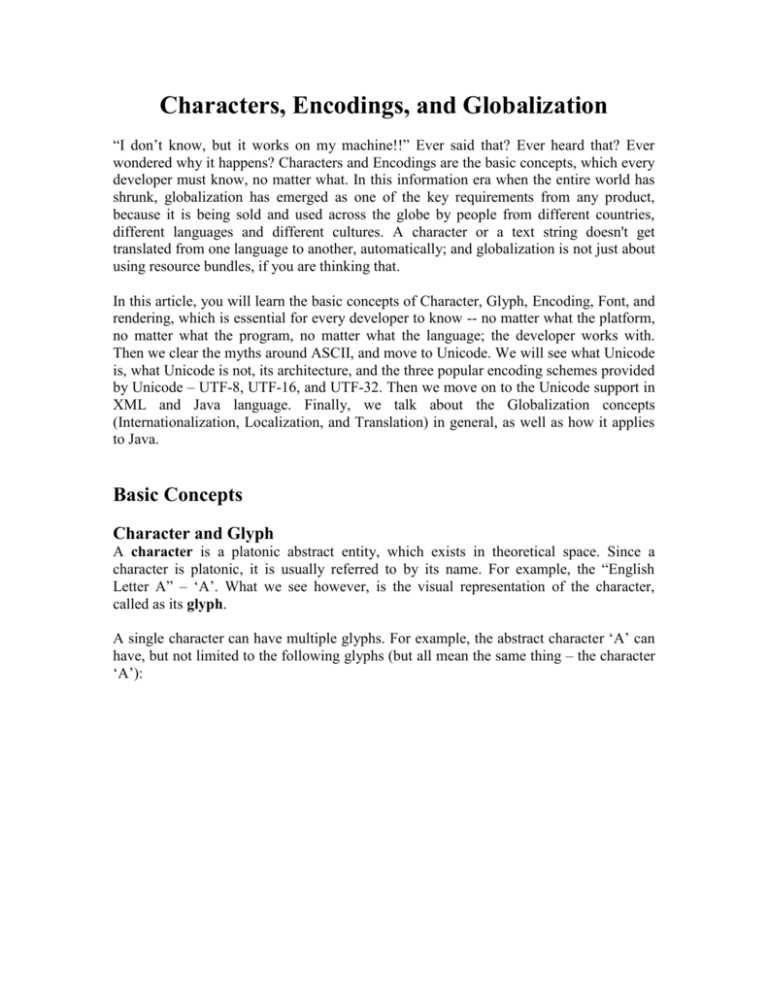

Characters, Encodings, and Globalization

“I don’t know, but it works on my machine!!” Ever said that? Ever heard that? Ever wondered why it happens? Characters and encodings are basic concepts that every developer must know, no matter what. In this information era, when the entire world has shrunk, globalization has emerged as a key requirement for any product, because products are sold and used across the globe by people from different countries, languages, and cultures. A character or a text string is not automatically translated from one language to another, and globalization is not just about using resource bundles, if that is what you are thinking.

In this article, you will learn the basic concepts of character, glyph, encoding, font, and rendering, which every developer needs to know, no matter what platform, program, or language the developer works with. Then we clear up the myths around ASCII and move on to Unicode. We will see what Unicode is, what it is not, its architecture, and the three popular encoding schemes it provides: UTF-8, UTF-16, and UTF-32. Then we move on to Unicode support in XML and the Java language. Finally, we talk about globalization concepts (internationalization, localization, and translation) in general, and how they apply to Java.

Basic Concepts

Character and Glyph

A character is a platonic, abstract entity that exists in a theoretical space. Because a character is abstract, it is usually referred to by its name, for example, the “English Letter A” (‘A’). What we actually see, however, is a visual representation of the character, called its glyph.

A single character can have multiple glyphs. For example, the abstract character ‘A’ can have the following glyphs, among others, all of which mean the same thing (the character ‘A’):

(Figure: An abstract character in the Abstract Character Space maps to many glyphs in the Character Glyph Space)

The Abstract Character Space is the set of all characters in the world. The set of all possible glyphs for every abstract character is called the Character Glyph Space, and every abstract character in the Abstract Character Space maps to one or more glyphs in it. Let’s look at some characteristics of characters and glyphs.

Upper and lower case characters

The English language (and some other languages, for example Latin) has the concept of upper case and lower case characters, but not every writing system in the world has this concept (e.g. Devanagari, or the Chinese and Japanese scripts). Even though the abstract character ‘A’ (upper case) semantically means the same thing as the abstract character ‘a’ (lower case), for the sake of simplicity the two are treated as two different characters in the machine world.
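The machine-level view is easy to see in Java. The following minimal sketch (the class name CaseDemo and its helpers are illustrative, not from any library) uses only java.lang.String and java.lang.Character to show that ‘A’ and ‘a’ have different code points, and that a Devanagari letter has no case at all.

```java
public class CaseDemo {
    // Returns the Unicode code point of the first character of s.
    static int codePoint(String s) {
        return s.codePointAt(0);
    }

    // True if the character is classified as upper case or lower case.
    static boolean hasCase(int cp) {
        return Character.isUpperCase(cp) || Character.isLowerCase(cp);
    }

    public static void main(String[] args) {
        System.out.println(codePoint("A"));            // 65 (U+0041)
        System.out.println(codePoint("a"));            // 97 (U+0061)
        System.out.println("A".equals("a"));           // false: two different characters
        System.out.println("A".equalsIgnoreCase("a")); // true: only when we ask for it
        // U+0915 DEVANAGARI LETTER KA has no upper/lower case distinction.
        System.out.println(hasCase(0x0915));           // false
        System.out.println(hasCase('A'));              // true
    }
}
```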

Ligatures

We saw that one character can have multiple glyphs, but it is also true that some glyphs represent multiple characters. For example, the glyph ‘æ’ is a combination of two different glyphs, ‘a’ and ‘e’, corresponding to the characters ‘a’ and ‘e’ respectively. Such glyphs, whose parts combine and change shape based on adjacent characters, are called ligatures. Other examples of ligatures are ‘fi’, ‘fl’, ‘ff’, etc.

(Figure: A ligature broken into individual characters)
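Unicode keeps some typographic ligatures only for compatibility, and compatibility normalization splits them back into their component letters. The sketch below assumes Java's java.text.Normalizer with the NFKD form; the class name is hypothetical. It also shows that Unicode encodes ‘æ’ (U+00E6) as a letter in its own right, so normalization leaves it intact.

```java
import java.text.Normalizer;

public class LigatureDemo {
    // Compatibility decomposition (NFKD) splits presentation ligatures
    // such as U+FB01 into their component letters.
    static String decompose(String s) {
        return Normalizer.normalize(s, Normalizer.Form.NFKD);
    }

    public static void main(String[] args) {
        String fiLigature = "\uFB01";              // U+FB01 LATIN SMALL LIGATURE FI
        System.out.println(fiLigature.length());   // 1 character
        System.out.println(decompose(fiLigature)); // fi (2 characters)
        // U+00E6 has no decomposition mapping, so it is returned unchanged.
        System.out.println(decompose("\u00E6").equals("\u00E6")); // true
    }
}
```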

Decomposable characters

Decomposable characters (aka composite or precomposed characters) are those that can be decomposed into multiple smaller characters. For example, the French letter ‘e with the acute accent’ (é) is one such character, which can be broken down into the character ‘e’ and the acute accent character.

(Figure: A decomposable character broken into individual characters)
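Java's java.text.Normalizer can perform this decomposition (canonical form NFD) and the reverse composition (NFC). A minimal sketch, with a hypothetical class name and helper methods:

```java
import java.text.Normalizer;

public class DecomposeDemo {
    // Canonical decomposition (NFD): precomposed character -> base + combining marks.
    static String nfd(String s) {
        return Normalizer.normalize(s, Normalizer.Form.NFD);
    }

    // Canonical composition (NFC): the reverse direction.
    static String nfc(String s) {
        return Normalizer.normalize(s, Normalizer.Form.NFC);
    }

    // Lists the code points of s in U+XXXX notation, separated by spaces.
    static String codes(String s) {
        StringBuilder sb = new StringBuilder();
        s.codePoints().forEach(cp -> sb.append(String.format("U+%04X ", cp)));
        return sb.toString().trim();
    }

    public static void main(String[] args) {
        String precomposed = "\u00E9";           // U+00E9 LATIN SMALL LETTER E WITH ACUTE
        String decomposed = nfd(precomposed);
        System.out.println(codes(precomposed));  // U+00E9
        System.out.println(codes(decomposed));   // U+0065 U+0301
        System.out.println(precomposed.equals(decomposed));      // false
        System.out.println(nfc(decomposed).equals(precomposed)); // true
    }
}
```

The false result in the middle is the practical reason to normalize text before comparing strings: both forms display as é, but they are different code point sequences.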

The acute accent and other such marks, like the circumflex (^), grave accent (`), cedilla (¸), macron (¯), diaeresis (¨), etc., are called diacritical marks. When more than one diacritical mark is used on a single base character, they usually either stack up above the base character or stack down below it, in the order in which they appear relative to the base character.

Technically, a ligature can also be called a composite character, because it too can be decomposed into individual characters, but there is a subtle difference between the two. The individual characters in a ligature are complete, independent characters that can also exist on their own, but the same is not true for decomposable characters. For example, the acute accent (like other diacritical marks) that is part of a decomposable character does not mean anything on its own, only in the context of a complete character (aka the base character).

Character Set and Coded Character Set

A set of abstract characters is called a character set. A set, by definition, has no order, so we must not assume any. A character set is just a concept, often used in discussions to refer to a set or family of characters, for example the Latin character set, the Devanagari character set, the Japanese character set, the universal character set, and so on.

Most of these characters have names, but names are not sufficient to identify them uniquely. Moreover, in the machine world everything is represented as a number, so every character in the character set is assigned a number that identifies it uniquely. Such a character set, in which every character is assigned a unique number (an integer, to be specific), is called a coded character set (aka code page, character repertoire, or even simply character set), and the unique number assigned to a character is called its character code (aka code point or code value). A coded character set is independent of any platform, operating system, or program. Some popular coded character sets are Unicode, Shift_JIS, and ASCII.
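A coded character set is, at its simplest, a mapping from characters to integers. In Java, String.codePoints() exposes those integers and Character.getName() returns the Unicode name of each one. The class below is only an illustration:

```java
public class CodePointDemo {
    // Returns the character code (code point) of each character in s.
    static int[] codePoints(String s) {
        return s.codePoints().toArray();
    }

    public static void main(String[] args) {
        // 'A', e-acute (U+00E9), and DEVANAGARI LETTER KA (U+0915)
        for (int cp : codePoints("A\u00E9\u0915")) {
            System.out.printf("%d (U+%04X) %s%n", cp, cp, Character.getName(cp));
        }
    }
}
```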

Character Encoding and Decoding

A named algorithm that converts a character code to a sequence of code units is called a character encoding (aka character encoding scheme), where a code unit is a block of bits whose size is always a multiple of an octet (8 bits, casually referred to as a byte). In other words, a character encoding is an algorithm that converts a character code to octets. For example, UTF-16 is a character encoding algorithm that uses a code unit of 2 octets.

Note that a character, when encoded, may result in one or more octets, depending on its character code and the encoding algorithm used. An encoding algorithm that generates a variable number of octets for different character codes is called a variable-length encoding scheme. For example, UTF-16 is a variable-length encoding scheme, which encodes character codes in 16 or 32 bits. Encoding algorithms that always generate a fixed number of octets for every character code are called fixed-length encoding schemes. For example, US-ASCII is a fixed-length encoding scheme, which always encodes a character code in 7 bits (stored in a single octet).
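The difference between fixed and variable length shows up directly in the number of octets produced. This sketch (hypothetical class name, standard java.nio.charset API) encodes four characters with UTF-8 and UTF-16BE:

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

public class EncodedLengthDemo {
    // Number of octets produced when s is encoded with the given charset.
    static int octets(String s, Charset cs) {
        return s.getBytes(cs).length;
    }

    public static void main(String[] args) {
        // 'A', e-acute (U+00E9), the euro sign (U+20AC), and the
        // supplementary character U+10385.
        int[] samples = {0x0041, 0x00E9, 0x20AC, 0x10385};
        for (int cp : samples) {
            String s = new String(Character.toChars(cp));
            System.out.printf("U+%04X  UTF-8: %d octets  UTF-16BE: %d octets%n",
                    cp, octets(s, StandardCharsets.UTF_8), octets(s, StandardCharsets.UTF_16BE));
        }
    }
}
```

It reports 1, 2, 3, and 4 octets under UTF-8, but 2, 2, 2, and 4 under UTF-16BE: UTF-8 varies in one-octet steps, while UTF-16 uses either one or two 16-bit code units.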

A character code cannot exist on its own, but only as part of a coded character set. An encoding algorithm must know the range of valid character codes, and which characters are illegal, so that it can encode a character code correctly. Therefore, an encoding algorithm is always associated with a coded character set.

Mostly, one coded character set (or code page) is associated with one encoding algorithm. For example, the US-ASCII code page is associated with the US-ASCII encoding algorithm. But there is no hard and fast rule. For example, the Unicode code page is associated with many encoding algorithms, like UTF-8, UTF-16, and UTF-32.

The RFC for the Multipurpose Internet Mail Extensions (MIME), as well as many other specifications, officially refers to the character encoding as the charset, which has caused some confusion with the character set. Note that a “charset” is not a “character set”: confusing as it is, a charset means a character encoding.

The mechanism of converting a sequence of octets back to a valid character code is called character decoding.
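Java follows the MIME terminology: its java.nio.charset.Charset class represents an encoding, not a character set. A minimal sketch of encoding and decoding with it (the class and method names are illustrative):

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

public class CharsetDemo {
    // Encoding: characters -> octets.
    static byte[] encode(String text, Charset charset) {
        return text.getBytes(charset);
    }

    // Decoding: octets -> characters.
    static String decode(byte[] octets, Charset charset) {
        return new String(octets, charset);
    }

    public static void main(String[] args) {
        // In Java, a "charset" is an encoding, looked up by name.
        Charset utf8 = Charset.forName("UTF-8");
        System.out.println(utf8.name());                 // UTF-8
        System.out.println(utf8.equals(StandardCharsets.UTF_8)); // true

        String original = "caf\u00E9";
        byte[] octets = encode(original, utf8);
        System.out.println(octets.length);               // 5: e-acute takes two octets
        System.out.println(decode(octets, utf8).equals(original)); // true
    }
}
```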

Character Rendering and Fonts

The process of displaying the glyph of a character on the screen is called character rendering, and the software that does this is called rendering software. For example, browsers and editors are all, at their core, rendering software.

Three things are required to render a character: a) the rendering software, b) the decoder, which is usually part of the rendering software, and c) the required fonts.

Apart from the font, the rendering software may use other parameters, like size, colour, style and effects (bold, italic, underline, strikethrough, emboss, etc.), orientation, etc., depending on how sophisticated it is. But again, the primary thing required for rendering a character is the font.

Fonts

Things were different in earlier days. When there was no concept of fonts, rendering software turned bits on and off within the area of the display screen allocated to a character in order to render it. Nowadays we use fonts. Fonts are representations of glyphs, either as bitmaps or, in modern scalable fonts, as outlines, and the rendering software draws this representation on the display screen to render a character. As a result, we now have much fancier and more sophisticated representations of characters, with fancy strokes and all, than we used to have.

There are various types of fonts, for example serif, sans serif, script, etc. But that’s a huge topic in itself and out of the scope of this article. For now, it’s enough to understand that a font provides the visual representation of the glyph of a character. Typically, a font file contains the glyphs of all the characters of a given character set, mapped to their respective character codes.

From a broader perspective, let’s take a small example to understand the complete end-to-end story of how a text file is displayed:

1. User: Launches the rendering software (say, an editor or a browser).

2. User: Specifies the file to render, i.e. its directory location and filename. (Most often this is the File > Open task.)

3. User: Specifies the encoding that was used to create the file. (Usually in the Open dialog box itself.)

Note: This is a very important input for the rendering software, but most of us ignore it, and the rendering software then has to fall back on the system default encoding, which might not always work. Therefore, always make sure you specify the right encoding.

4. Rendering software: Reads the file from the user-specified location using the user-specified encoding.

5. Decoder: While reading, the decoder converts the octets in the file to character codes as per the encoding algorithm, and returns them to the rendering software, where they are accumulated as a sequence of character codes.

6. Rendering software: Loads the user-specified or the default font map.

7. Rendering software: Iterates over the accumulated character codes, looks up every character code in the loaded font map, and uses the corresponding information there to render the character.

8. Rendering software: While rendering, applies any user-specified or default style and effects, depending on how sophisticated it is.
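Steps 4 and 5 above can be sketched in Java as follows. The class and method names are hypothetical, and steps 6 to 8 (font lookup and drawing) are left out because they depend entirely on the rendering toolkit.

```java
import java.io.IOException;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

public class ReadWithEncoding {
    // Step 5: the decoder turns octets into a sequence of character codes.
    static int[] decode(byte[] octets, Charset encoding) {
        return new String(octets, encoding).codePoints().toArray();
    }

    // Step 4: read the file's octets, then decode them with the
    // user-specified encoding rather than the platform default.
    static int[] readCharacterCodes(Path file, Charset encoding) throws IOException {
        return decode(Files.readAllBytes(file), encoding);
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("sample", ".txt");
        try {
            // Create a test file whose encoding we know: UTF-8.
            Files.write(file, "caf\u00E9".getBytes(StandardCharsets.UTF_8));
            // A renderer would now look each code up in a font map;
            // here we only print the codes.
            for (int code : readCharacterCodes(file, StandardCharsets.UTF_8)) {
                System.out.printf("U+%04X%n", code);
            }
        } finally {
            Files.deleteIfExists(file);
        }
    }
}
```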

So we see that what gets rendered depends entirely on the font file, i.e. on which glyph the code point is associated with. For example, the string “Hello World” looks different when rendered in two different fonts.

This raises an important point related to data exchange. When a receiving system gets a text file from some other machine, it might not be able to display the file properly:

1. If it doesn’t know which font to use for rendering, or

2. If it doesn’t have the correct fonts installed, or

3. If somebody has modified the font file just for kicks.

It would also be a problem if the rendering software reads the file using an encoding algorithm different from the one used to create it. Since encoding algorithms are always associated with code pages, the same character code may map to two different characters in two different code pages, leading to garbage output. Any given computer (especially a server) needs to support many different encodings, yet whenever data is passed between different encodings or platforms, that data always runs the risk of corruption.
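The effect of a mismatched encoding is easy to reproduce. In this sketch (hypothetical class name), text is written as UTF-8 and read back as ISO-8859-1:

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

public class MismatchDemo {
    // Encodes with one charset and decodes with another, as happens when
    // a reader assumes the wrong encoding for a file.
    static String roundTrip(String text, Charset writer, Charset reader) {
        return new String(text.getBytes(writer), reader);
    }

    public static void main(String[] args) {
        String text = "caf\u00E9";
        // Matching encodings: the text survives.
        System.out.println(roundTrip(text, StandardCharsets.UTF_8, StandardCharsets.UTF_8).equals(text)); // true
        // UTF-8 writes e-acute as the octets C3 A9; ISO-8859-1 reads those
        // as two separate characters, U+00C3 and U+00A9.
        String garbled = roundTrip(text, StandardCharsets.UTF_8, StandardCharsets.ISO_8859_1);
        System.out.println(garbled.equals("caf\u00C3\u00A9")); // true
    }
}
```

Garbage such as ‘Ã©’ in place of ‘é’ is a telltale sign of UTF-8 data decoded as ISO-8859-1.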

Therefore, make sure that:

1. You always use or specify the right encoding when reading a file.

2. You have the necessary fonts installed on your machine.

Directionality

But that’s not all. Rendering software also has to take care of the direction of display. Most scripts have characters that run from left to right, but that is not true for all scripts. For example, the Arabic script runs from right to left, and some Japanese text runs from top to bottom. The algorithm the rendering software uses for display is orthogonal to the way the octets are stored and decoded to character codes. Characters are always stored in logical order, i.e. the order in which they are read, regardless of display direction; it is therefore the rendering software that is responsible for rendering the characters with the right orientation and direction.

(Figure: Different directionality of different languages)
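Java exposes the Unicode bidirectional algorithm through java.text.Bidi. The sketch below (class and method names are illustrative) reports whether a string's base direction is right-to-left, and shows that Arabic text is still stored first-character-first:

```java
import java.text.Bidi;

public class DirectionDemo {
    // True if the bidirectional algorithm finds the text to be entirely
    // right-to-left, with the base direction taken from the first strong character.
    static boolean isRightToLeft(String text) {
        Bidi bidi = new Bidi(text, Bidi.DIRECTION_DEFAULT_LEFT_TO_RIGHT);
        return bidi.isRightToLeft();
    }

    public static void main(String[] args) {
        String english = "abc";
        String arabic = "\u0633\u0644\u0627\u0645"; // Arabic "salam", in logical order
        System.out.println(isRightToLeft(english)); // false
        System.out.println(isRightToLeft(arabic));  // true
        // Storage order is unaffected: the first stored character is the
        // first one read (U+0633), even though it is displayed rightmost.
        System.out.printf("U+%04X%n", arabic.codePointAt(0)); // U+0633
    }
}
```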

ASCII – 7 bits or 8 bits?

ASCII is one of the most popular code pages and encodings, so it is worth discussing here. Between roughly 1963 and 1967, the American Standards Association (now the American National Standards Institute, ANSI) released the American Standard Code for Information Interchange (ASCII) code page, with the intention of standardizing information interchange. ASCII defined only 128 characters: 33 non-printable control characters, 52 English alphabet characters (26 upper case and 26 lower case), 10 numeric characters (0-9), and the remaining 33 characters (the space, symbols, and punctuation).
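The category counts above can be checked mechanically. This sketch walks the 128 ASCII code points and classifies them with java.lang.Character; treating "not control, not letter, not digit" as symbols and punctuation is an assumption of the sketch, and that bucket includes the space character. The class name is hypothetical.

```java
public class AsciiCensus {
    // Returns {control, letters, digits, others} for code points 0-127.
    static int[] census() {
        int control = 0, letters = 0, digits = 0, others = 0;
        for (int cp = 0; cp < 128; cp++) {
            if (Character.isISOControl(cp)) {
                control++;           // 0-31 and 127
            } else if (Character.isLetter(cp)) {
                letters++;           // A-Z and a-z
            } else if (Character.isDigit(cp)) {
                digits++;            // 0-9
            } else {
                others++;            // space, symbols, and punctuation
            }
        }
        return new int[] {control, letters, digits, others};
    }

    public static void main(String[] args) {
        int[] c = census();
        System.out.printf("control=%d letters=%d digits=%d other=%d%n", c[0], c[1], c[2], c[3]);
    }
}
```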

Now, 128 characters can be accommodated within 7 bits. Most computers (then, and even now) store data in 8-bit bytes, which means 1 bit was left unused; using it would allow an additional 128 characters. This did not go unnoticed, and computer manufacturers in various countries started using the remaining bit to accommodate characters from their native languages. Thus there was a wave of national variants of ASCII, each defining its own characters using the unused bit, which defeated the entire purpose of information interchange.

Officially, ASCII was, and still is, 7 bits. The 8-bit variants that emerged by extending the ASCII character set are unofficially called Extended ASCII, and are still incorrectly referred to as ASCII. The best-known standardized family of these so-called extended ASCII character sets is ISO-8859 (ISO-8859-1 through ISO-8859-16; there is no part 12). This confusion around 7-bit vs. 8-bit may persist because ASCII itself is now largely obsolete, and the original ASCII specification is not freely available for the average person to check the truths and rumors about ASCII. Those interested can still purchase the original ASCII specification from ANSI for $18.
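The 7-bit vs. 8-bit distinction shows up directly in Java's built-in charsets. In this sketch (hypothetical class name), US-ASCII refuses ‘é’, while ISO-8859-1, the first member of the ISO-8859 family, stores it in a single octet with the high bit set:

```java
import java.nio.charset.StandardCharsets;

public class SevenVersusEight {
    // US-ASCII defines only code points 0-127 (7 bits).
    static boolean inAscii(char c) {
        return StandardCharsets.US_ASCII.newEncoder().canEncode(c);
    }

    // ISO-8859-1 uses the whole octet, so code points 128-255 also fit.
    static int latin1Octet(char c) {
        byte[] b = String.valueOf(c).getBytes(StandardCharsets.ISO_8859_1);
        return b[0] & 0xFF; // read the byte as unsigned
    }

    public static void main(String[] args) {
        char eAcute = '\u00E9';
        System.out.println(inAscii('A'));    // true
        System.out.println(inAscii(eAcute)); // false: it needs the 8th bit
        System.out.printf("0x%02X%n", latin1Octet(eAcute)); // 0xE9
    }
}
```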

(Figure: Complete ASCII code page with 128 chars)

Because of the huge popularity of ASCII, people started referring to an ASCII-encoded text file simply as a plain text file. This was not correct even then, and it is very wrong now. A text file cannot exist without an encoding, and in this globalized world ASCII is more or less obsolete. By the way, the preferred MIME name for ASCII is US-ASCII.

Unicode

Now that we understand the basic concepts of characters, character codes, code pages, and encodings, let’s talk about the popular Unicode standard.

What is Unicode?

Unicode is a standard, a consortium, and a non-profit organization, started in 1988, whose objective is similar to that of ISO/IEC 10646: to have a single standard universal character set that covers all the characters in the world. In the words of Unicode’s official site, “Unicode provides a unique number for every character, no matter what the platform, no matter what the program, no matter what the language”. It is not very clear why there are two standards bodies doing the same thing with the same objectives, but what is clear is that the two bodies collaborate with each other rather than compete.

The primary job of Unicode is to collect all the characters from all the languages in the world and assign a unique number to every character. In short, Unicode is a coded character set. But it is not as simple as it sounds; the Unicode Consortium does a great deal of work. To give you an idea, here are some of the things Unicode addresses:

1. Collecting all the letters, punctuation marks, etc. from all the languages in the world.

2. Assigning each character in the character set a unique code point.

3. Deciding what qualifies as an independent character and what does not. For example, should “e with an acute accent” be treated as an independent character, or as a composite sequence of e and the acute accent?

4. Deciding the shape of a character in the context of other characters. For example, whether ‘a’ next to ‘e’ should be displayed as the ligature ‘æ’.

5. Deciding the order of characters for sorting when characters from different languages come together.

6. And many other things. For details, see: http://www.unicode.org

As mentioned earlier, an encoding is always associated with a code page. The Unicode code page is associated not with one but with many encoding algorithms (character encoding schemes). The popular ones are UTF-8, UTF-16 (LE/BE), and UTF-32 (LE/BE). Less popular encoding algorithms associated with Unicode are UTF-7, UTF-EBCDIC, and the upcoming CESU.

Let us also see what Unicode is not:

1. Unicode is not a fixed-length 16-bit encoding scheme.

2. Unicode is a code page, not an encoding scheme. There are encoding schemes associated with Unicode.

Note: Look at the “Save As” dialog box of the MS Windows Notepad application. The “Encoding” drop-down there misleads you by showing the option “Unicode”; it should have been UTF-16 (little-endian).

3. Unicode is not a font or a repository of glyphs.

4. Unicode is not rendering software, or any other kind of software.

5. Unicode does not specify the size, shape, or style of on-screen characters.

6. Unicode is not magic.

Officially, Unicode uses the U+NNNN[N]* notation to refer to code points in the Unicode character set, where each N is a hexadecimal digit. For example, the “English Letter A”, whose code point is 65, is written U+0041; and the “Ugaritic Letter Ho”, whose code point is 66437 (greater than FFFF), is written U+10385.

You can look up the character for a given Unicode character code at: http://www.unicode.org/charts/

The latest version of Unicode at the time of writing is v4.0, which defines code points in the range U+0000 to U+10FFFF, so 21 bits are needed to represent a Unicode code point in memory, as of now.
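The notation and the 21-bit figure can both be checked in a few lines of Java. The class and method names are illustrative; the %04X format specifier produces at least four uppercase hexadecimal digits, and more when the value needs them.

```java
public class CodePointNotation {
    // Formats a code point in U+NNNN notation (four or more hex digits).
    static String notation(int codePoint) {
        return String.format("U+%04X", codePoint);
    }

    public static void main(String[] args) {
        System.out.println(notation(65));    // U+0041
        System.out.println(notation(66437)); // U+10385
        // The highest code point, U+10FFFF, needs 21 bits.
        int max = Character.MAX_CODE_POINT;
        System.out.println(notation(max));                           // U+10FFFF
        System.out.println(32 - Integer.numberOfLeadingZeros(max));  // 21
    }
}
```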

Combining Characters

As mentioned earlier, when a combining mark (e.g. a diacritical mark) comes next to an independent character, it has an affinity to combine with that character. For example, when the acute accent comes next to the independent character ‘e’, the two combine to become é.

For the complete list of Unicode combining diacritical marks, see: http://www.unicode.org/charts/PDF/U0300.pdf

What if such a combining mark comes between two independent characters and has an affinity to combine with either of them? In such a case, we can use a special character called Zero Width Non Joiner (ZWNJ, U+200C) to indicate which independent character the combining mark should not join.

For example, to resolve a situation like:

C1 CM C2

where C1 and C2 are two independent characters, and CM is a combining mark that has an affinity towards both C1 and C2, we can do the following:

C1 ZWNJ CM C2

Or

C1 CM ZWNJ C2

In the former case, CM gets joined to C2, and in the latter case, CM gets joined with C1.

Now, if we want to combine two independent characters, provided they are combinable, we can use another special character called Zero Width Joiner (ZWJ, U+200D). For example, when the character ‘a’ comes next to the character ‘e’, both remain independent characters and are not joined automatically to form the ligature ‘æ’. To join such independent characters, we can do the following:

C1 ZWJ C2

On the storage media, everything is just bits and bytes. Therefore, it is the job of Unicode-conformant rendering software to ensure that the appropriate characters are joined and displayed properly.

Apart from the ZWJ special character, many scripts have their own special characters to facilitate the joining of two characters. In the Devanagari script, for instance, the special character called Halant (U+094D) is used to combine such independent characters:

(Figure: Combining two independent characters using Halant)
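Unicode classifies these characters by general category: combining marks such as the acute accent and the Halant are non-spacing marks, while ZWNJ and ZWJ are invisible format characters. This sketch (hypothetical class name) asks java.lang.Character for those categories; it does not do any joining itself, which remains the rendering software's job.

```java
public class CharacterKinds {
    // Maps the Unicode general category reported by Java to a short label.
    static String kind(int cp) {
        switch (Character.getType(cp)) {
            case Character.NON_SPACING_MARK: return "combining mark";
            case Character.FORMAT:           return "format control";
            case Character.LOWERCASE_LETTER:
            case Character.UPPERCASE_LETTER:
            case Character.OTHER_LETTER:     return "letter";
            default:                         return "other";
        }
    }

    public static void main(String[] args) {
        // e, COMBINING ACUTE ACCENT, ZWNJ, ZWJ, DEVANAGARI KA, DEVANAGARI VIRAMA (Halant)
        int[] samples = {'e', 0x0301, 0x200C, 0x200D, 0x0915, 0x094D};
        for (int cp : samples) {
            System.out.printf("U+%04X %-14s %s%n", cp, kind(cp), Character.getName(cp));
        }
    }
}
```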

Unicode Architecture

Let’s look at the architecture of Unicode in more detail. There are 17 planes in Unicode. The primary plane is called the Basic Multilingual Plane (BMP), and the remaining 16 are called supplementary planes. These planes are simply categories that group ranges of code points. For example, Plane 2 (aka the Supplementary Ideographic Plane) contains code points in the range U+20000 to U+2FFFF, which are used for rare East Asian characters.

Plane #          | Range of code points  | Plane Name
Plane 0          | U+0000 – U+FFFF       | Basic Multilingual Plane
Plane 1          | U+10000 – U+1FFFF     | Supplementary Multilingual Plane
Plane 2          | U+20000 – U+2FFFF     | Supplementary Ideographic Plane
Plane 3 to 13    | U+30000 – U+DFFFF     | Reserved Planes
Plane 14         | U+E0000 – U+EFFFF     | Supplementary Special-Purpose Plane
Plane 15         | U+F0000 – U+FFFFF     | Supplementary Private Use Area-A
Plane 16         | U+100000 – U+10FFFF   | Supplementary Private Use Area-B
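Since every plane spans exactly 0x10000 code points, a code point's plane number is simply its value shifted right by 16 bits. A minimal sketch with illustrative names, which also uses Character.getType to spot private use code points (such as those in planes 15 and 16):

```java
public class PlaneDemo {
    // Plane number (0-16): each plane holds 0x10000 code points.
    static int plane(int codePoint) {
        if (codePoint < 0 || codePoint > Character.MAX_CODE_POINT) {
            throw new IllegalArgumentException("not a Unicode code point: " + codePoint);
        }
        return codePoint >>> 16;
    }

    // True for code points whose general category is Private Use (Co).
    static boolean isPrivateUse(int codePoint) {
        return Character.getType(codePoint) == Character.PRIVATE_USE;
    }

    public static void main(String[] args) {
        int[] samples = {0x0041, 0x10385, 0x20000, 0xE0001, 0xF0000, 0x10FFFD};
        for (int cp : samples) {
            System.out.printf("U+%04X -> plane %d, private use: %b%n",
                    cp, plane(cp), isPrivateUse(cp));
        }
    }
}
```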

The idea behind this division into so-called planes is that each plane has a special meaning and contains a particular kind of character.

Plane Name                           | Purpose
Basic Multilingual Plane             | The most commonly used characters; covers almost all modern languages, such as English, Hindi, CJK, etc.
Supplementary Multilingual Plane     | Historic scripts
Supplementary Ideographic Plane      | Rare East Asian characters
Reserved Planes                      | Unassigned; reserved by Unicode for future use
Supplementary Special-Purpose Plane  | Language tag characters and some variation selector characters
Supplementary Private Use Area-A     | Reserved for applications that want to use characters not specified by Unicode
Supplementary Private Use Area-B     | Reserved for applications that want to use characters not specified by Unicode

One can define one’s own characters and assign them to code points in the private use areas. But to display those characters, one needs to create a new font file, or update an existing one, to map the visual representation of those characters to the appropriate character codes. Applications mainly use these private use areas to capture newly defined characters, particularly, but not only, for the CJK languages. For example, it is quite common in Japan for people to have names that cannot always be written using existing characters and need new characters. The private use areas provided by Unicode are very helpful for such applications.

Let’s move on and see the three popular Unicode encoding schemes in detail.

Unicode Character Encoding Schemes

UTF-8, UTF-16, and UTF-32 are the three popular encodings defined by Unicode. They encode character codes from the Unicode code page to octets, and decode encoded octets back to valid character codes in the Unicode code page. Other, less popular encoding schemes from Unicode are CESU, UTF-EBCDIC, and UTF-7.

UTF-8

UTF-8 is a variable-length encoding algorithm with an 8-bit code unit, and has the following properties:

1. Every character in the Unicode code page can be encoded in UTF-8.

2. UTF-8 is completely backward compatible with ASCII: the first 128 characters in Unicode are exactly the same as those defined in the ASCII code page, and are encoded simply as the bytes 0x00 to 0x7F, just as ASCII does.

3. All characters beyond U+007F are encoded as a sequence of several bytes, each of which has the most significant bit set. Therefore, no ASCII byte (0x00-0x7F) can appear as part of any other character.

4. The first byte of a multibyte sequence that represents a non-ASCII character is always in the range 0xC0 to 0xFD (0xC2 to 0xF4 for well-formed sequences of code points up to U+10FFFF), and it indicates how many bytes follow for this character. All further bytes in a multibyte sequence are in the range 0x80 to 0xBF (see the table below). This allows easy resynchronization and makes the encoding stateless and robust against missing bytes.

5. UTF-8 encoded characters may theoretically be up to six bytes long; however, since Unicode code points stop at U+10FFFF, no character actually needs more than four bytes, and 16-bit BMP characters are at most three bytes long.

6. The optional initial Byte Order Mark (BOM) for UTF-8 is: EF BB BF

7. The bytes 0xFE and 0xFF are never used in the UTF-8 encoding.

The algorithm (simplified)

Code Points               | Encoded Octets
U+00000000 – U+0000007F   | 0xxxxxxx
U+00000080 – U+000007FF   | 110xxxxx 10xxxxxx
U+00000800 – U+0000FFFF   | 1110xxxx 10xxxxxx 10xxxxxx
U+00010000 – U+001FFFFF   | 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx
U+00200000 – U+03FFFFFF   | 111110xx 10xxxxxx 10xxxxxx 10xxxxxx 10xxxxxx
U+04000000 – U+7FFFFFFF   | 1111110x 10xxxxxx 10xxxxxx 10xxxxxx 10xxxxxx 10xxxxxx

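For example, U+00E9 (é) falls in the second row of the table. Its 11-bit value 00011101001 is split into 00011 and 101001, which fill the patterns 110xxxxx and 10xxxxxx to give the octets 11000011 10101001, i.e. C3 A9 in UTF-8.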
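The table translates almost line for line into code. This sketch (hypothetical class and method names) implements only the first four rows, since Unicode code points stop at U+10FFFF, and cross-checks the result against the JDK's own UTF-8 encoder. It does not reject the surrogate range U+D800 to U+DFFF, which a production encoder must.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

public class Utf8Sketch {
    // Encodes one code point using the bit patterns from the table.
    static byte[] encode(int cp) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        if (cp < 0 || cp > 0x10FFFF) {
            throw new IllegalArgumentException("not a Unicode code point: " + cp);
        } else if (cp < 0x80) {          // 0xxxxxxx
            out.write(cp);
        } else if (cp < 0x800) {         // 110xxxxx 10xxxxxx
            out.write(0xC0 | (cp >>> 6));
            out.write(0x80 | (cp & 0x3F));
        } else if (cp < 0x10000) {       // 1110xxxx 10xxxxxx 10xxxxxx
            out.write(0xE0 | (cp >>> 12));
            out.write(0x80 | ((cp >>> 6) & 0x3F));
            out.write(0x80 | (cp & 0x3F));
        } else {                         // 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx
            out.write(0xF0 | (cp >>> 18));
            out.write(0x80 | ((cp >>> 12) & 0x3F));
            out.write(0x80 | ((cp >>> 6) & 0x3F));
            out.write(0x80 | (cp & 0x3F));
        }
        return out.toByteArray();
    }

    // Formats bytes as space-separated uppercase hex, e.g. "C3 A9".
    static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            if (sb.length() > 0) sb.append(' ');
            sb.append(String.format("%02X", b & 0xFF));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(hex(encode(0x00E9))); // C3 A9
        // Cross-check a supplementary character against the JDK encoder.
        String ho = new String(Character.toChars(0x10385));
        System.out.println(hex(encode(0x10385)).equals(hex(ho.getBytes(StandardCharsets.UTF_8)))); // true
    }
}
```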

UTF-16

UTF-16 is a variable-length encoding algorithm with a 16-bit code unit, and has the following properties:

1. It encodes each character from the BMP (U+0000 to U+FFFF) in a single 16-bit code unit.

2. It uses surrogate pairs to encode characters from the supplementary planes (i.e. characters beyond U+FFFF), where each surrogate is 16 bits, so characters from the supplementary planes consume 32 bits.

This is because Unicode was originally designed as a 16-bit, fixed-width encoding scheme that could only encode characters up to U+FFFF. As the Unicode character set grew, this design had to be extended with the surrogate pair mechanism to accommodate characters from the supplementary planes.

Surrogate Pairs

Surrogate pairs (commonly referred to simply as surrogates) are pairs of 16-bit values taken from two reserved ranges of the Basic Multilingual Plane, used together to represent a single character from a supplementary plane. In a pair, the first value is a high surrogate and the second is a low surrogate. A high surrogate is a value in the range U+D800 through U+DBFF, and a low surrogate is a value in the range U+DC00 through U+DFFF. These two ranges of code points in the BMP are reserved for surrogate pairs only, and do not represent any individual character.

For example, the character Ugaritic Letter Ho, whose code point is U+10385 (greater than U+FFFF), is represented as the surrogate pair “U+D800 U+DF85” in UTF-16: subtracting 0x10000 leaves 0x00385, whose top 10 bits (0x000) are added to 0xD800 and whose bottom 10 bits (0x385) are added to 0xDC00. The character English Letter A, whose code point is U+0041 (less than U+FFFF), is simply represented as U+0041 in UTF-16.
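The same arithmetic can be sketched in Java and checked against Character.highSurrogate and Character.lowSurrogate; the class and method names here are hypothetical:

```java
public class SurrogateDemo {
    // Splits a supplementary code point (U+10000..U+10FFFF) into a
    // UTF-16 high/low surrogate pair.
    static char[] toSurrogates(int cp) {
        if (cp < 0x10000 || cp > 0x10FFFF) {
            throw new IllegalArgumentException("not a supplementary code point");
        }
        int v = cp - 0x10000;                     // a 20-bit value
        char high = (char) (0xD800 + (v >>> 10)); // top 10 bits
        char low = (char) (0xDC00 + (v & 0x3FF)); // bottom 10 bits
        return new char[] {high, low};
    }

    public static void main(String[] args) {
        char[] pair = toSurrogates(0x10385);
        System.out.printf("U+%04X U+%04X%n", (int) pair[0], (int) pair[1]); // U+D800 U+DF85
        // The JDK computes the same pair.
        System.out.println(pair[0] == Character.highSurrogate(0x10385)
                && pair[1] == Character.lowSurrogate(0x10385));            // true
        // A Java String counts UTF-16 code units, not characters.
        String ho = new String(pair);
        System.out.println(ho.length());                       // 2
        System.out.println(ho.codePointCount(0, ho.length())); // 1
    }
}
```

The last two lines illustrate why Unicode is not a fixed-length 16-bit scheme: one Ugaritic character occupies two UTF-16 code units.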

Endian – Big or Little

Values that are one octet long have a Most Significant Bit (MSb) and a Least Significant Bit (LSb), whereas values longer than one octet (one byte) have a Most Significant Byte (MSB) and a Least Significant Byte (LSB). Depending on the computer architecture, the MSB of a value might be stored first at a given memory address (called big-endian), or the LSB might be stored first (called little-endian). Neither has a significant advantage over the other; it is all up to the computer architecture. For example, SPARC machines store values in memory in big-endian order, whereas Intel machines use little-endian order.

In the context of UTF-16, which has a 2-byte code unit, endianness matters; for UTF-8, which has a 1-byte code unit, it does not. Therefore, the UTF-16 character encoding scheme is available in two flavours: UTF-16 BE (big-endian) and UTF-16 LE (little-endian). UTF-16 without any endianness specified is assumed to be BE.

Take the code point for the English Letter A, U+0041, whose MSB is 00 and LSB is 41. This value is stored as “00 41” in UTF-16 BE (MSB first), and as “41 00” in UTF-16 LE (LSB first). Note that the bits within each byte are never reversed.
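Java's standard charsets make the byte order visible. This sketch (illustrative class name) prints the octets for U+0041 in each UTF-16 flavour, plus the byte order of the machine running it:

```java
import java.nio.ByteOrder;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

public class EndianDemo {
    // Encodes s and returns its octets as space-separated hex, e.g. "00 41".
    static String encodeHex(String s, Charset cs) {
        StringBuilder sb = new StringBuilder();
        for (byte b : s.getBytes(cs)) {
            if (sb.length() > 0) sb.append(' ');
            sb.append(String.format("%02X", b & 0xFF));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(encodeHex("A", StandardCharsets.UTF_16BE)); // 00 41
        System.out.println(encodeHex("A", StandardCharsets.UTF_16LE)); // 41 00
        // Java's plain "UTF-16" encoder writes a big-endian BOM, then BE data.
        System.out.println(encodeHex("A", StandardCharsets.UTF_16));   // FE FF 00 41
        // Native byte order of this machine (LITTLE_ENDIAN on Intel x86, for example).
        System.out.println(ByteOrder.nativeOrder());
    }
}
```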

UTF-32

Any Unicode character can be represented as a single 32-bit unit using UTF-32. This single 32-bit code unit corresponds to the Unicode scalar value, which is the code point of the abstract character in the Unicode code page. Because every character occupies exactly one code unit, encoding and decoding in UTF-32 can be simpler and faster than in UTF-16 or UTF-8. The downside of UTF-32 is that it uses 32 bits for every character, when at most 21 bits are ever needed, and the most common characters, those from the BMP, can be encoded in only 16 bits. Therefore, an application must choose its encoding algorithm wisely.
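UTF-32 needs no algorithm beyond writing each code point as a 32-bit integer. This sketch of UTF-32 BE uses java.nio.ByteBuffer, whose default byte order is big-endian; the class name is hypothetical, and UTF-32 LE would only need the buffer's order switched to ByteOrder.LITTLE_ENDIAN.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

public class Utf32Sketch {
    // UTF-32 BE: every code point becomes one 32-bit big-endian unit.
    static byte[] encodeBE(String s) {
        int[] cps = s.codePoints().toArray();
        ByteBuffer buf = ByteBuffer.allocate(4 * cps.length); // big-endian by default
        for (int cp : cps) {
            buf.putInt(cp);
        }
        return buf.array();
    }

    public static void main(String[] args) {
        String text = "A" + new String(Character.toChars(0x10385));
        System.out.println(encodeBE(text).length);                          // 8: 4 octets per character
        System.out.println(text.getBytes(StandardCharsets.UTF_16BE).length); // 6
        System.out.println(text.getBytes(StandardCharsets.UTF_8).length);     // 5
    }
}
```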

BOM

Unicode defines an optional signature at the beginning of a data stream (or file), so that when no encoding is specified for reading the stream, the decoder can detect from the stream itself which encoding was used to produce it, and use that encoding to correctly read the rest of the stream. This signature at the beginning of the data stream is called the initial Byte Order Mark (BOM). Unicode uses U+FEFF as the BOM, which is transformed as follows when encoded using the three encoding schemes above:

Bytes         | Encoding Form
00 00 FE FF   | UTF-32 BE
FF FE 00 00   | UTF-32 LE
FE FF         | UTF-16 BE
FF FE         | UTF-16 LE
EF BB BF      | UTF-8

(Table: initial BOM in various encoding forms)
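A decoder can sniff these signatures before choosing an encoding. A minimal sketch (the class and method names are illustrative); note that the UTF-32 LE mark has to be tested before the UTF-16 LE mark, because FF FE 00 00 also begins with FF FE:

```java
import java.nio.charset.StandardCharsets;

public class BomSniffer {
    // Returns the encoding form implied by an initial BOM, or null if none.
    static String detect(byte[] b) {
        if (startsWith(b, 0x00, 0x00, 0xFE, 0xFF)) return "UTF-32BE";
        if (startsWith(b, 0xFF, 0xFE, 0x00, 0x00)) return "UTF-32LE"; // before UTF-16LE
        if (startsWith(b, 0xEF, 0xBB, 0xBF)) return "UTF-8";
        if (startsWith(b, 0xFE, 0xFF)) return "UTF-16BE";
        if (startsWith(b, 0xFF, 0xFE)) return "UTF-16LE";
        return null;
    }

    // Compares the leading bytes (read as unsigned) with the given prefix.
    private static boolean startsWith(byte[] data, int... prefix) {
        if (data.length < prefix.length) return false;
        for (int i = 0; i < prefix.length; i++) {
            if ((data[i] & 0xFF) != prefix[i]) return false;
        }
        return true;
    }

    public static void main(String[] args) {
        // Java's "UTF-16" encoder emits the big-endian BOM FE FF.
        byte[] sample = "hi".getBytes(StandardCharsets.UTF_16);
        System.out.println(detect(sample)); // UTF-16BE
    }
}
```

The check is a heuristic: a UTF-16 LE stream with a BOM whose first character is U+0000 would be misread as UTF-32 LE, and a stream without a BOM yields null, leaving the decision to the caller.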

Unicode and XML

XML supports Unicode inherently. The first edition of the W3C XML 1.0 specification, published in early 1998, was based on Unicode 2.0, the then-latest specification from the Unicode Consortium. The Unicode Consortium releases a new version of the Unicode specification every other year (or whenever it has accumulated and studied enough new characters), so specifications that depend on Unicode also need to be updated. In late 2000, the second edition of W3C XML 1.0 was released, based on Unicode 3.0. More recently, in early 2004, the third edition of W3C XML 1.0 was released, based on Unicode 3.2. Surprisingly, on the same day the W3C also released XML 1.1. Why release two different versions of XML, and on the same day?

Updating the XML specification every time a new version of Unicode is released is a tedious and cumbersome task. Therefore, XML 1.1 was released primarily to be both backward and forward compatible with Unicode characters. This compatibility with Unicode comes at the cost of XML 1.1 being backward incompatible with XML 1.0. Therefore, a third edition of XML 1.0 was released to accommodate the new characters from Unicode 4.0 while remaining backward compatible with the second edition of XML 1.0.

Okay, here is what’s new in XML 1.1:

1. Full backward and forward compatibility with Unicode.

2. Two end-of-line characters, NEL (U+0085) and the Unicode line separator character (U+2028), have been added to the list of characters that mark the end of a line. NEL is the end-of-line character found on mainframes, but XML 1.0 does not recognize it.

3. Control characters in the range 0x1 to 0x1F (other than tab, line feed, and carriage return, which XML 1.0 already allowed), which were not allowed in XML 1.0, are now allowed as character references. For example, &#x1; is valid in XML 1.1 but invalid in XML 1.0.

4. Character normalization. Characters that can be represented in more than one way should be normalized so that string operations (like comparison) work correctly. For example, a decomposable character that also exists as an independent (precomposed) character should be normalized when used in XML 1.1.

When we say XML supports Unicode, we mean that element names, attribute names, attribute values, and character data can all contain Unicode characters, as long as they don’t break the production rules defined in the XML specification.
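As an illustration, the sketch below parses a small document with the JDK's built-in DOM parser and assumes Java 8 or later. The element name, attribute name, attribute value, and character data all contain non-ASCII letters. The class name and the sample document are made up for this example. The non-ASCII letters are written as \u escapes, so the source compiles identically regardless of the source file's own encoding.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;

final class UnicodeXmlNames {

    // Element "prénom", attribute "société" with value "Crédit", character data "Zoë",
    // written with Unicode escape sequences.
    static final String XML =
            "<?xml version=\"1.0\" encoding=\"UTF-8\"?>"
            + "<pr\u00E9nom soci\u00E9t\u00E9=\"Cr\u00E9dit\">Zo\u00EB</pr\u00E9nom>";

    /** Parses the document and returns its root element. */
    static Element parseRoot(String xml) {
        try {
            // Encode with the same encoding the XML declaration prolog announces.
            byte[] bytes = xml.getBytes(StandardCharsets.UTF_8);
            return DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(bytes))
                    .getDocumentElement();
        } catch (Exception e) {
            throw new IllegalStateException("could not parse XML", e);
        }
    }

    public static void main(String[] args) {
        Element root = parseRoot(XML);
        System.out.println(root.getTagName());
        System.out.println(root.getAttribute("soci\u00E9t\u00E9"));
        System.out.println(root.getTextContent());
    }
}
```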

Encoding of an XML document

An XML document is a text file, so it too is associated with an encoding. That encoding must be used whenever the document is read or written. The encoding of an XML document is specified in the XML declaration prolog. When present, the declaration must appear at the very beginning of the document:

<?xml version="1.0" encoding="UTF-8" standalone="yes" ?>

The encoding attribute, however, is optional. If it is not specified, the document is assumed to be UTF-8, or UTF-16 if the document begins with a UTF-16 byte order mark.

The encoding specified in the XML declaration prolog must be used to parse the XML document. But to fetch that encoding, we first need to parse the document with the appropriate encoding, that is, the very encoding specified in the XML declaration prolog. No, I am not kidding, and although it may look like one, this is not a catch-22. The position and content of the XML declaration prolog are restricted, and every parser supports a finite number of encodings. So the encoding can be determined deterministically by analyzing the first few bytes of the document. Once the encoding is found, it is used to read the rest of the document. For more details on auto-detecting the encoding of an XML document, see: http://www.w3.org/TR/REC-xml/#sec-guessing
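A simplified sketch of that detection follows, assuming Java 7 or later. It first looks for a BOM, then for "<?" encoded in UTF-16. Otherwise it reads the declaration as ASCII and extracts the encoding pseudo-attribute with a regular expression. XmlEncodingSniffer is a hypothetical name, and the 256-byte scan limit is an arbitrary assumption. UTF-32 and EBCDIC, which the W3C appendix also covers, are deliberately left out.

```java
import java.nio.charset.StandardCharsets;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class XmlEncodingSniffer {
    // encoding="..." or encoding='...', with a name shaped like the EncName production
    private static final Pattern ENCODING =
            Pattern.compile("encoding\\s*=\\s*[\"']([A-Za-z][A-Za-z0-9._-]*)[\"']");
    private static final int SCAN_LIMIT = 256; // assumption: the declaration fits in 256 bytes

    static String detect(byte[] b) {
        // 1. A byte order mark decides the question on its own.
        if (startsWith(b, 0xEF, 0xBB, 0xBF)) return "UTF-8";
        if (startsWith(b, 0xFE, 0xFF)) return "UTF-16BE";
        if (startsWith(b, 0xFF, 0xFE)) return "UTF-16LE";
        // 2. No BOM, but "<?" written with 16-bit code units.
        if (startsWith(b, 0x00, 0x3C, 0x00, 0x3F)) return "UTF-16BE";
        if (startsWith(b, 0x3C, 0x00, 0x3F, 0x00)) return "UTF-16LE";
        // 3. "<?xm" in an ASCII-compatible encoding: the declaration itself is
        //    plain ASCII, so it can be read as ASCII to find the encoding name.
        if (startsWith(b, 0x3C, 0x3F, 0x78, 0x6D)) {
            String head = new String(b, 0, Math.min(b.length, SCAN_LIMIT), StandardCharsets.US_ASCII);
            int end = head.indexOf("?>");
            Matcher m = ENCODING.matcher(end >= 0 ? head.substring(0, end) : head);
            if (m.find()) return m.group(1);
        }
        // 4. Nothing declared: fall back to XML's default.
        return "UTF-8";
    }

    // Compares the first bytes of b with the given unsigned byte values.
    private static boolean startsWith(byte[] b, int... prefix) {
        if (b.length < prefix.length) return false;
        for (int i = 0; i < prefix.length; i++) {
            if ((b[i] & 0xFF) != prefix[i]) return false;
        }
        return true;
    }
}
```

For example, detect on the ASCII bytes of `<?xml version="1.0" encoding="ISO-8859-1"?>` returns "ISO-8859-1". A document that starts with a plain `<root>` falls through to "UTF-8".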

Note:

1. It is an error if the encoding the XML document is stored in differs from the encoding specified in the XML declaration prolog.

2. Not every XML parser can correctly auto-detect every encoding that may be specified for an XML document.

3. This auto-detection mechanism cannot be used to read non-XML documents, because they have nothing like the XML declaration prolog.

Most people use an editor to create XML documents, but when saving them, they don’t check which encoding their favourite editor actually used. This can cause XML parsing errors if the encoding the document was saved in differs from the encoding specified in its XML declaration prolog. When no encoding is specified, the same problem arises if the document was saved in anything other than UTF-8, the default encoding of XML.

For example, on a Windows machine, suppose you type the letter e with an acute accent (é) into an XML document and save it. The editor may well store the document in ISO-8859-1 (Latin-1) or a close relative. If no encoding is specified in the XML declaration prolog, the parser will try to read the document as UTF-8. It will then report an error for the invalid byte sequence, because é is stored differently in UTF-8 than in ISO-8859-1.
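The difference is easy to see at the byte level. The sketch below assumes Java 7 or later, and LatinVsUtf8 is a hypothetical name. It prints the bytes of é in both encodings. It then uses a strict CharsetDecoder, configured to report errors rather than replace them, to show that the single ISO-8859-1 byte is not valid UTF-8.

```java
import java.nio.ByteBuffer;
import java.nio.charset.CharacterCodingException;
import java.nio.charset.CharsetDecoder;
import java.nio.charset.CodingErrorAction;
import java.nio.charset.StandardCharsets;

final class LatinVsUtf8 {
    /** Formats bytes as space-separated hex, e.g. "C3 A9". */
    static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            if (sb.length() > 0) sb.append(' ');
            sb.append(String.format("%02X", b & 0xFF));
        }
        return sb.toString();
    }

    /** True if the bytes are well-formed UTF-8; the decoder reports errors instead of replacing them. */
    static boolean isValidUtf8(byte[] bytes) {
        CharsetDecoder decoder = StandardCharsets.UTF_8.newDecoder()
                .onMalformedInput(CodingErrorAction.REPORT)
                .onUnmappableCharacter(CodingErrorAction.REPORT);
        try {
            decoder.decode(ByteBuffer.wrap(bytes));
            return true;
        } catch (CharacterCodingException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String eAcute = "\u00E9";
        byte[] latin1 = eAcute.getBytes(StandardCharsets.ISO_8859_1);
        byte[] utf8 = eAcute.getBytes(StandardCharsets.UTF_8);
        // prints: ISO-8859-1: E9, valid UTF-8: false
        System.out.println("ISO-8859-1: " + hex(latin1) + ", valid UTF-8: " + isValidUtf8(latin1));
        // prints: UTF-8: C3 A9, valid UTF-8: true
        System.out.println("UTF-8: " + hex(utf8) + ", valid UTF-8: " + isValidUtf8(utf8));
    }
}
```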

Now, instead of complaining that “XML doesn’t recognize my character” or “XML doesn’t support my character”, you can fix the problem. Make sure that your XML document is saved in the encoding specified in its XML declaration prolog. Always specify the encoding of the document you are saving, whether it is an XML document or not, in the “Save” dialog box (or its equivalent). If your favourite editor doesn’t let you do that, switch to one that does.

If you cannot type the character you want directly on the keyboard, you can use a character reference instead. A character reference takes the form &#D; or &#xH;, where D is a decimal number and H is a hexadecimal number. For example, the character A, whose Unicode code point is 65 (0x41), can be written as either &#65; or &#x41;. Both mean exactly the same thing.
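If you generate XML from a program, the same trick can be applied automatically. The sketch below assumes Java 8 or later and replaces every non-ASCII character with a hexadecimal character reference. CharRefs is a hypothetical helper. It assumes the markup-significant characters (&, <, and quotes in attribute values) have already been escaped separately. Because it iterates over code points rather than chars, a supplementary character becomes one reference, not two.

```java
import java.util.Locale;

final class CharRefs {
    /** Replaces every non-ASCII character with a hexadecimal character reference. */
    static String escapeNonAscii(String text) {
        StringBuilder out = new StringBuilder(text.length());
        text.codePoints().forEach(cp -> {
            if (cp < 0x80) {
                out.appendCodePoint(cp); // plain ASCII stays as it is
            } else {
                out.append("&#x")
                   .append(Integer.toHexString(cp).toUpperCase(Locale.ROOT))
                   .append(';');         // e.g. &#xE9;
            }
        });
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(escapeNonAscii("h\u00E9llo"));   // h&#xE9;llo
        System.out.println(escapeNonAscii("\uD800\uDF85")); // &#x10385;
    }
}
```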

Best Practices

1. When creating XML documents, always specify the encoding explicitly in the XML declaration prolog.

2. Make sure the document is actually stored in the encoding specified in the XML declaration prolog, or in UTF-8 if no encoding is specified.

Globalization

Globalization is the art of making software that runs on different platforms and can be used by people from different geographical locations, cultural backgrounds, and languages. In a nutshell, it’s the art of making software for a global market.

(Figure: Globalization = Internationalization + Localization + Translation)

Internationalization

The art of making software independent of the underlying system defaults is called Internationalization (aka I18N). Every system has defaults, such as its encoding and its locale. Software that unknowingly relies on these defaults may not work correctly when ported from one system to another, because the defaults vary from system to system. Such software is not internationalized.

A Scenario

Hattori Hanso uses an editor to create a text file on a Japanese operating system and saves it to disk. Most of the time, people are not aware of, or simply don’t care about, the encoding their editor used to save the file. That’s a bad habit: just as you know the name of a file, you should always know the encoding of a text file. Anyway, let’s say the file was saved in Shift_JIS, which also happens to be the default encoding of that Japanese operating system.

He then writes a program to read that text file and display its contents. When writing the program, he doesn’t specify any encoding for reading the file. This is extremely dangerous, and such a program is a time bomb. Never, ever do this; we’ll see why in a moment. He runs the program on the same machine, and everything works perfectly, just as expected.

Now he FTPs the text file and the same program over to his friend Antonio Tourino’s machine, which runs an English operating system. He runs the program again there. The program runs, but boom: this time he sees garbage. Where did those Japanese characters go? And what does he say? “I don’t know, but it works perfectly fine on my machine!”

The Problem

When writing the program, the programmer relied on the source operating system’s default encoding (Shift_JIS). That turned out to be different from the target operating system’s default encoding (ISO-8859-1). In practice, the default encodings of two systems can easily differ, especially when they are set up for different languages. On the English OS, the program used that system’s default, ISO-8859-1, and reading a Shift_JIS-encoded file as ISO-8859-1 produced absolute garbage.
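The effect is easy to reproduce without moving files between machines. The sketch below assumes Java 7 or later and a JDK that includes the extended Shift_JIS charset, as full JDK installations normally do. WrongDefaultDemo and readWith are hypothetical names. It encodes three Japanese characters in Shift_JIS, then decodes the same bytes once as Shift_JIS and once as ISO-8859-1, standing in for the two default encodings.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

final class WrongDefaultDemo {
    // The word for "Japanese language", written with Unicode escapes.
    static final String JAPANESE = "\u65E5\u672C\u8A9E";

    /** Decodes the bytes of a "file" the way a program relying on a default would. */
    static String readWith(byte[] fileBytes, Charset assumedDefault) {
        return new String(fileBytes, assumedDefault);
    }

    public static void main(String[] args) {
        Charset shiftJis = Charset.forName("Shift_JIS");
        byte[] fileBytes = JAPANESE.getBytes(shiftJis); // what the editor saved

        String onJapaneseOs = readWith(fileBytes, shiftJis);
        String onEnglishOs = readWith(fileBytes, StandardCharsets.ISO_8859_1);

        System.out.println(onJapaneseOs.equals(JAPANESE)); // true
        System.out.println(onEnglishOs.equals(JAPANESE));  // false
        // Every byte becomes one Latin-1 character: 3 characters turn into 6 of garbage.
        System.out.println(onEnglishOs.length());          // 6
    }
}
```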

The Solution

Just as the program takes the name of the file to read as input, it must also take the encoding the file was created in as input. With this fix, the program becomes internationalized. You may still not see the Japanese characters when running it on the English OS, because that OS might not have the fonts needed to display them. But that’s a different story, which we will look into shortly.
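A minimal sketch of the fixed program follows, assuming Java 8 or later. The encoding is a required command-line argument next to the file name, so nothing depends on the platform default. ShowFile, decode, and read are hypothetical names. Note that System.out still encodes its own output with a platform-dependent encoding, which is part of the display problem mentioned above.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.Charset;
import java.nio.file.Files;
import java.nio.file.Paths;

final class ShowFile {
    /** Decodes raw file bytes with the encoding the file was actually saved in. */
    static String decode(byte[] bytes, String encodingName) {
        return new String(bytes, Charset.forName(encodingName));
    }

    /** Reads a whole text file; the encoding is an input, just like the file name. */
    static String read(String fileName, String encodingName) {
        try {
            return decode(Files.readAllBytes(Paths.get(fileName)), encodingName);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        if (args.length != 2) {
            System.err.println("usage: java ShowFile <file> <encoding>   e.g. notes.txt Shift_JIS");
            System.exit(1);
        }
        System.out.println(read(args[0], args[1]));
    }
}
```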

Localization

The art of making software adapt to the underlying locale is called Localization (aka L10N). Software should rely on the locale default to display text messages, error messages, and so on in the locale it is running in, so that they make sense to the person using it.

What’s a locale?

A locale represents a language. Adapting to a locale therefore means the software should be able to display output and accept input in a language-specific manner. For example, French is a locale. But French as spoken in France differs from French as spoken in Canada. So we refine our definition: a locale represents a specific language of a specific country. Again, a language used in a specific country can have variations. For example, Chinese can be written with traditional characters or with simplified characters. So we refine our definition once more: a locale represents a specific language of a specific country, with optional variants.
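In Java, these three levels map directly onto java.util.Locale. The sketch below assumes Java 7 or later. On Java 19 and later, these constructors are deprecated in favour of Locale.of, but they still work. LocaleLevels is a hypothetical name, and the variant value “POSIX” is just an arbitrary example. Java models traditional versus simplified Chinese through the country rather than a variant, as the constants Locale.TRADITIONAL_CHINESE (zh_TW) and Locale.SIMPLIFIED_CHINESE (zh_CN) show.

```java
import java.util.Locale;

final class LocaleLevels {
    static final Locale FRENCH = new Locale("fr");                       // language only
    static final Locale FRENCH_CANADA = new Locale("fr", "CA");          // language + country
    static final Locale WITH_VARIANT = new Locale("de", "DE", "POSIX");  // + variant

    public static void main(String[] args) {
        Locale[] locales = {FRENCH, FRENCH_CANADA, WITH_VARIANT,
                            Locale.TRADITIONAL_CHINESE, Locale.SIMPLIFIED_CHINESE};
        for (Locale locale : locales) {
            // toString() joins the parts with underscores, e.g. fr_CA
            System.out.println(locale + " -> " + locale.getDisplayName(Locale.ENGLISH));
        }
    }
}
```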

Writing software that adapts to the underlying locale really means addressing the community that is going to use it. Locale-specific items such as text messages, exception messages, dates and times, and currency should be displayed in a way the user of the software understands. Localization targets that community of users so that we don’t have to rewrite the entire software for every language and every flavour of that language.

This involves writing the program once, in such a way that every time it runs, it uses the default locale of the system it runs on to display locale-sensitive information.

Translation

Translation means translating all the text messages, error messages, and so on that are displayed to the user. They are translated from the language they were written in into the various languages the software is meant to support.

For example, when software is written in English, all the user-visible messages are first identified and moved out of the code into a separate file. Each message is assigned a unique key, which the software uses to display that message. Language experts then translate all these messages manually into the other languages the software wants to support. Separating the messages from the code makes them easy to manage. It also lets language experts translate the files independently of the software code.

Note that sentences cannot be translated automatically from one language (or, to be specific, locale) to another, because sentences are governed by grammar. Even if we try to automate it, the translated sentences might not be grammatically correct, and at times might not make any sense at all.

Therefore, somebody has to sit down and translate all these messages into the different locales. In the IT industry this field is called Native Language Support (NLS), and it consists primarily of language experts.

Unicode and Java

The Java programming language has supported Unicode from the beginning. This means all the characters and strings used in a Java program are Unicode. The primitive data type char, used to represent a character in Java, is an unsigned 16-bit integer. It can represent any Unicode code point in the range U+0000 to U+FFFF. When Unicode 1.0 was released, it did not have nearly that many characters, but Java had this support from the beginning. Even years later, J2SE 1.4 (code-named Merlin) supports Unicode 3.0 and can fit every character defined by Unicode 3.0 in the 16 bits of the Java char data type.

In Java, a character (or should I say, a Unicode character) can be represented as:

char ch = 'A';

OR by directly using the Unicode code point as:

char ch = 65;

…and both mean the same thing. A third way of representing characters in Java is the “\u” escape sequence. Why have a third way at all? Mainly for convenience: it lets you put Unicode characters directly into Java string literals, rather than always building a String from a char[].

The “\u” escape sequence has the form \uNNNN, where each N is a hexadecimal digit. Therefore, the character ‘A’, whose Unicode code point is 65 (0x41), can be represented in Java using the “\u” escape sequence as:

char ch = '\u0041';

This escape sequence is very useful when creating Strings. For example:

String str = "h\u00E9llo world";

which is equivalent to:

String str = "héllo world";
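The equivalences described above can be checked directly. In the sketch below, which works with any Java version, the three char forms compare equal, and the escaped string is identical to one built from a char[]. CharForms is a hypothetical name.

```java
final class CharForms {
    static final char LITERAL = 'A';
    static final char NUMBER = 65;        // the code point as an integer
    static final char ESCAPE = '\u0041';  // hexadecimal Unicode escape

    static final String ESCAPED = "h\u00E9llo world";
    static final String FROM_CHARS = new String(
            new char[] {'h', (char) 0xE9, 'l', 'l', 'o', ' ', 'w', 'o', 'r', 'l', 'd'});

    public static void main(String[] args) {
        System.out.println(LITERAL == NUMBER && NUMBER == ESCAPE); // true
        System.out.println(ESCAPED.equals(FROM_CHARS));            // true
    }
}
```

One caveat: \u escapes are translated before the compiler tokenizes the source. As a result, writing '\u000A' for a line feed is a compile error, because it turns into a real line break inside the literal. Use '\n' instead.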

Unicode keeps growing, and its code space extends far beyond U+FFFF, up to U+10FFFF. Java would therefore be in trouble once characters were assigned beyond U+FFFF, because the char data type in Java is only 16 bits wide and cannot hold values beyond U+FFFF.

Here is the interesting part. Unicode 4.0 is now available, and it defines characters in the range U+0000 to U+10FFFF. Java must do something if it is to support the additional characters beyond U+FFFF, which are called supplementary characters. JSR-204 was therefore filed to support supplementary characters in J2SE, and J2SE 1.5 (code-named Tiger) supports Unicode 4.0 using the mechanism defined in JSR-204. In a nutshell, Java 1.5 supports supplementary characters using the surrogate pair mechanism of UTF-16. Read the article “Supplementary Characters in the Java Platform” for more details.

To represent characters from the supplementary planes, i.e. code points beyond U+FFFF, a pair of escape sequences, “\uXXXX\uYYYY”, is used. This pair is called a surrogate pair. Refer to the Unicode UTF-16 section earlier in this article for details on surrogate pairs.

For example, the character Ugaritic Letter Ho has the code point U+10385, which is greater than U+FFFF. To represent it in Java, use the surrogate pair “\uD800\uDF85”. The escape sequence “\u10385” is incorrect in Java: a \u escape always takes exactly four hex digits, so it is read as the character U+1038 followed by the digit 5.
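For the curious, the surrogate pair can also be computed by hand. The sketch below assumes Java 5 or later for Character.toChars and codePointCount; Surrogates is a hypothetical name. It subtracts 0x10000 from the code point, which leaves a 20-bit value. The top 10 bits go into the high surrogate (0xD800 + …) and the bottom 10 bits into the low surrogate (0xDC00 + …). For U+10385 this reproduces \uD800\uDF85.

```java
import java.util.Arrays;

final class Surrogates {
    /** Splits a supplementary code point (U+10000..U+10FFFF) into a UTF-16 surrogate pair. */
    static char[] toSurrogatePair(int codePoint) {
        if (codePoint < 0x10000 || codePoint > 0x10FFFF) {
            throw new IllegalArgumentException(
                    String.format("not a supplementary code point: U+%X", codePoint));
        }
        int offset = codePoint - 0x10000;              // leaves a 20-bit value
        char high = (char) (0xD800 + (offset >> 10));  // top 10 bits
        char low = (char) (0xDC00 + (offset & 0x3FF)); // bottom 10 bits
        return new char[] {high, low};
    }

    public static void main(String[] args) {
        char[] pair = toSurrogatePair(0x10385); // Ugaritic Letter Ho
        System.out.printf("U+%04X U+%04X%n", (int) pair[0], (int) pair[1]); // U+D800 U+DF85
        System.out.println(Arrays.equals(pair, Character.toChars(0x10385))); // true

        String ho = new String(pair);
        // two chars in the String, but only one code point (one character)
        System.out.println(ho.length() + " " + ho.codePointCount(0, ho.length())); // 2 1
    }
}
```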

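Java 1.5 provides APIs to get the surrogate pair for a given code point, so developers don’t have to worry about how it is generated. In this snippet, the code point is passed as a hexadecimal command-line argument (e.g. 10385):

int unicodeCodePoint = Integer.parseInt(args[0], 16); // e.g. "10385" -> 0x10385
char[] surrogates = Character.toChars(unicodeCodePoint);
if (surrogates.length > 1) {
    // supplementary character: a high (lead) and a low (trail) surrogate
    System.out.println("High=U+" + Integer.toHexString(surrogates[0]).toUpperCase());
    System.out.println("Low =U+" + Integer.toHexString(surrogates[1]).toUpperCase());
}
else {
    // BMP character: fits in a single char
    System.out.println("Value=" + surrogates[0]);
}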

I completely agree that this is not a great way to handle supplementary characters in Java. It would have been great for developers had supplementary characters been supported with something like “\u{20000}”. That would have made life much easier, but not much can be done about it now. Anyway, this is not too bad either, or is it?

Internationalization in Java

Bytes and Strings

Whenever we convert a String to bytes, or create a String from bytes, an encoding is involved. Without an encoding, this conversion is not possible at all. Yes, Java provides APIs that do this conversion without a user-specified encoding:

public String(byte[] bytes)

public byte[] getBytes()

Java shouldn’t have done this, because it gives the impression that no encoding is required for these conversions. In fact, the system default (or JVM default) encoding is used. Many of us use these APIs to convert Strings to bytes and vice versa without specifying an encoding, and without realizing the impact. The impact is huge: the code is no longer portable. You might get different, incorrect results when the program runs on different machines, because the default encoding varies from one machine to another.

Never do this. Instead, use the overloaded APIs that let you specify the encoding explicitly:

public String(byte[] bytes, String charsetName)

public byte[] getBytes(String charsetName)

Remember that once the conversion is done, no encoding information is associated with either the bytes or the characters. Bytes are bytes, and characters are characters. Period. To convert between them, you must supply a third piece of information: the encoding.
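A short sketch of the point follows, assuming Java 7 or later for StandardCharsets. The Charset-typed overloads of the String constructor and getBytes, added in Java 6, also avoid the checked UnsupportedEncodingException that the String-name versions throw. BytesAndStrings and roundTrip are hypothetical names.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

final class BytesAndStrings {
    static final String TEXT = "h\u00E9llo"; // "hello" with e-acute

    /** Encodes with one charset and decodes with another. */
    static String roundTrip(String text, Charset encodeWith, Charset decodeWith) {
        byte[] bytes = text.getBytes(encodeWith);
        return new String(bytes, decodeWith);
    }

    public static void main(String[] args) {
        // e-acute needs two bytes in UTF-8 but one in ISO-8859-1
        System.out.println(TEXT.getBytes(StandardCharsets.UTF_8).length);      // 6
        System.out.println(TEXT.getBytes(StandardCharsets.ISO_8859_1).length); // 5

        // Same charset both ways: the text survives
        System.out.println(roundTrip(TEXT, StandardCharsets.UTF_8, StandardCharsets.UTF_8).equals(TEXT)); // true
        // Different charsets: e-acute turns into two unrelated characters
        String garbled = roundTrip(TEXT, StandardCharsets.UTF_8, StandardCharsets.ISO_8859_1);
        System.out.println(garbled.length()); // 6
    }
}
```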

Input and Output (I/O)

Java lets you read and write streaming data from and to external (as well as internal) data sources using the APIs in the java.io package. Broadly, java.io lets you access data either as a stream of bytes or as a stream of characters. java.io.InputStream and java.io.OutputStream, and all their subclasses, read and write data as bytes. java.io.Reader and java.io.Writer, and all their subclasses, read and write data as characters. Choose the appropriate class for the I/O based on the type of data source and the processing required.

Byte Streams

No encoding is involved when using byte streams for I/O. A file, for example, is stored as a sequence of bits on the file system. When we read a file with a byte stream (e.g. java.io.FileInputStream), the bytes (octets) are read and returned to the application as they are. The I/O classes supporting byte streams do not look into, or process, the bytes in any way. It is up to the application to process the raw bytes however it wants.

Char Streams

Character streams are byte streams plus an encoding; a character stream cannot function without one. I/O classes supporting character streams first read the appropriate number of bytes from the underlying byte stream, depending on the encoding. They then decode those bytes, again according to the encoding, into a code point. This code point represents a Unicode character and is returned to the application as one char, or as a surrogate pair of two chars for a supplementary character.

From the characters alone, we cannot always tell what the exact sequence of bytes in the input stream was. Given an encoding, we can convert the characters back to bytes, but the result may not always match the original byte sequence exactly.
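Here is a small demonstration, assuming Java 7 or later; LossyRoundTrip and decodeThenEncode are hypothetical names. The byte 0xFF can never occur in UTF-8, so decoding replaces it with U+FFFD, the replacement character. Encoding the result back produces EF BF BD in its place, and the original byte is gone.

```java
import java.nio.charset.StandardCharsets;

final class LossyRoundTrip {
    /** Formats bytes as space-separated hex, e.g. "41 FF 42". */
    static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            if (sb.length() > 0) sb.append(' ');
            sb.append(String.format("%02X", b & 0xFF));
        }
        return sb.toString();
    }

    /** Decodes bytes as UTF-8 (replacing malformed input) and encodes the result again. */
    static byte[] decodeThenEncode(byte[] original) {
        String text = new String(original, StandardCharsets.UTF_8); // 0xFF -> U+FFFD
        return text.getBytes(StandardCharsets.UTF_8);                // U+FFFD -> EF BF BD
    }

    public static void main(String[] args) {
        byte[] original = {0x41, (byte) 0xFF, 0x42}; // "A", an invalid byte, "B"
        System.out.println(hex(original) + " -> " + hex(decodeThenEncode(original)));
        // 41 FF 42 -> 41 EF BF BD 42
    }
}
```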

Even though Java allows it, we must never do this:

public InputStreamReader(InputStream in)

This constructor uses the system default (or JVM default) encoding to read the stream. If you move the program to another machine with a different default encoding, the program might not work at all. Instead, always use:

public InputStreamReader(InputStream in, String charsetName)

Below are two flavours of a small program. Each reads a file using the appropriate encoding (i.e. the encoding that was used to create the file) and then writes it back using any user-specified encoding.

Listing 1:

import java.io.*;
...
//create a reader to read the input file, specifying its correct encoding,
//and a writer to write the output file in any encoding of choice;
//try-with-resources (Java 7+) closes both, which also flushes the writer
try (BufferedReader reader = new BufferedReader(new InputStreamReader(
         new FileInputStream(file_in), enc_in));
     BufferedWriter writer = new BufferedWriter(new OutputStreamWriter(
         new FileOutputStream(file_out), enc_out))) {
    //read from the input file and write to the output file
    char[] buffer = new char[BUFFER_SIZE];
    int charsRead = -1;
    while ((charsRead = reader.read(buffer)) != -1) {
        writer.write(buffer, 0, charsRead);
    }
}

The above program doesn’t have to do anything except create the readers and writers correctly, and close them so that the buffered output is flushed. The encoding conversion is done automatically by the I/O classes.

Listing 2:

import java.io.*;
...
//create a reader to read the input file, specifying its correct encoding,
//and an output stream to write raw bytes to the output file
try (BufferedReader reader = new BufferedReader(new InputStreamReader(
         new FileInputStream(file_in), enc_in));
     FileOutputStream fos = new FileOutputStream(file_out)) {
    //read from the input file and write to the output file
    char[] in_buffer = new char[BUFFER_SIZE];
    StringBuilder pending = new StringBuilder();
    int charsRead = -1;
    while ((charsRead = reader.read(in_buffer)) != -1) {
        pending.append(in_buffer, 0, charsRead);
        //don't split a surrogate pair across two conversions
        int end = pending.length();
        if (Character.isHighSurrogate(pending.charAt(end - 1))) {
            end--;
        }
        //do the conversion; each getBytes() call is independent, so an encoding
        //that writes a BOM (e.g. "UTF-16") would emit one BOM per chunk
        fos.write(pending.substring(0, end).getBytes(enc_out));
        pending.delete(0, end);
    }
    if (pending.length() > 0) {
        fos.write(pending.toString().getBytes(enc_out));
    }
}

The above program explicitly does the conversion using the encoding before writing the bytes to the output file.

Note: The default encoding of the JVM is the same as the default encoding of the system, unless it is changed explicitly as follows:

java -Dfile.encoding=UTF-8 mypack.myapp
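To see which defaults a given JVM actually picked up, a sketch like the following can help. JvmDefaults is a hypothetical name, and the code assumes Java 5 or later. Newer JDKs behave differently: as far as I know, from Java 18 onwards (JEP 400) the default charset is UTF-8 on every platform, and -Dfile.encoding is only documented for the values UTF-8 and COMPAT.

```java
import java.nio.charset.Charset;
import java.util.Locale;

final class JvmDefaults {
    /** Summarizes the defaults that encoding- and locale-less APIs silently fall back to. */
    static String describe() {
        return "file.encoding   = " + System.getProperty("file.encoding") + "\n"
             + "default charset = " + Charset.defaultCharset().name() + "\n"
             + "default locale  = " + Locale.getDefault();
    }

    public static void main(String[] args) {
        System.out.println(describe());
    }
}
```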

Java vs. IANA encoding names

For whatever reason, Java defines its own names for many of the encodings supported by the java.lang and java.io packages. It does not always use the standard names registered with the Internet Assigned Numbers Authority (IANA). The list of IANA-registered encoding names and their aliases is maintained in the IANA character-sets registry. Some of the encoding names supported by Java are also registered with IANA, but not all of them. For example, “UTF8” (without the hyphen) is an encoding name supported by Java that is not registered with IANA. The complete list of encoding names supported by Java can be found in the JDK’s supported-encodings documentation.

It is always better to use standard encoding names rather than Java encoding names, for interoperability. For example, a Java program that generates an XML file should not put a Java-specific encoding name in the XML declaration prolog of its output. The XML declaration prolog is meant to use encoding names registered with IANA. It would help developers if Java provided a mapping from Java encoding names to IANA encoding names, and vice versa, for such interoperability reasons.
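Meanwhile, java.nio.charset.Charset (available since J2SE 1.4) already goes a long way toward such a mapping. Charset.forName accepts a charset's canonical name or any of its aliases, including the historical java.io names. Its name() method returns the canonical name, which for the common charsets is the IANA-registered one. The sketch below assumes Java 1.4 or later. CharsetNames and canonical are hypothetical names, and the third name in main is included only to show an alias lookup.

```java
import java.nio.charset.Charset;

final class CharsetNames {
    /** Maps any name or alias the JDK accepts to the charset's canonical name. */
    static String canonical(String anyName) {
        return Charset.forName(anyName).name();
    }

    public static void main(String[] args) {
        // "UTF8" and "ISO8859_1" are historical java.io names; "Cp1252" is another alias
        for (String name : new String[] {"UTF8", "ISO8859_1", "Cp1252"}) {
            Charset charset = Charset.forName(name);
            System.out.println(name + " -> " + charset.name() + "  aliases: " + charset.aliases());
        }
    }
}
```

For instance, canonical("UTF8") returns "UTF-8", which is the form to write into an XML declaration prolog.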

Java modified UTF-8

Talking about UTF-8: the charset you get from names such as "UTF-8" or "UTF8" is standard UTF-8. In addition, Java uses a slightly modified version of it, called modified UTF-8, in a few specific places: DataOutputStream.writeUTF and DataInputStream.readUTF, class files, and JNI. Modified UTF-8 differs from standard UTF-8 as follows:

1. The null character (U+0000) is encoded with two bytes instead of one, specifically as 11000000 10000000. This ensures that there are no embedded nulls in the encoded string.

2. Characters outside the BMP are encoded differently. Standard UTF-8 makes no distinction between characters from the BMP and from the supplementary planes, and encodes the latter in four bytes. In modified UTF-8, these characters are first represented as surrogate pairs (as in UTF-16). Each surrogate is then encoded individually, three bytes each, for backward compatibility reasons.

A related quirk affects Java’s standard UTF-8 decoder. When decoding a UTF-8 stream with an initial BOM, the Java implementation returns the BOM as just another character (U+FEFF). The decoder is supposed to verify the BOM and skip it before reading the actual data. A bug (#4508058) was filed for this at http://bugs.sun.com and was slated to be fixed in Java 1.6 (code-named Mustang). However, Java’s UTF-8 decoder still does not skip the BOM, so applications have to do it themselves.
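The differences are easy to observe. The sketch below assumes Java 7 or later; ModifiedUtf8Demo, modifiedUtf8, and stripBom are hypothetical names. It compares DataOutputStream.writeUTF, which emits modified UTF-8 after a two-byte length prefix, with the standard UTF-8 charset. It also shows the leading U+FEFF that the UTF-8 decoder leaves in place.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

final class ModifiedUtf8Demo {
    /** The bytes DataOutputStream.writeUTF produces for s, minus its 2-byte length prefix. */
    static byte[] modifiedUtf8(String s) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.writeUTF(s);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        byte[] all = buffer.toByteArray();
        return Arrays.copyOfRange(all, 2, all.length);
    }

    /** Removes a leading U+FEFF, which Java's UTF-8 decoder leaves in the decoded text. */
    static String stripBom(String decoded) {
        return decoded.startsWith("\uFEFF") ? decoded.substring(1) : decoded;
    }

    public static void main(String[] args) {
        String nul = String.valueOf((char) 0);
        String ho = new String(Character.toChars(0x10385)); // Ugaritic Letter Ho
        // NUL: 2 bytes (C0 80) in modified UTF-8, 1 byte (00) in standard UTF-8
        System.out.println(modifiedUtf8(nul).length + " vs " + nul.getBytes(StandardCharsets.UTF_8).length);
        // U+10385: 6 bytes (two 3-byte surrogates) vs 4 bytes
        System.out.println(modifiedUtf8(ho).length + " vs " + ho.getBytes(StandardCharsets.UTF_8).length);

        byte[] withBom = {(byte) 0xEF, (byte) 0xBB, (byte) 0xBF, 0x41}; // BOM + "A"
        String decoded = new String(withBom, StandardCharsets.UTF_8);
        System.out.println(decoded.length() + " -> " + stripBom(decoded).length()); // 2 -> 1
    }
}
```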

Localization in Java

Locale

As per the Javadoc, “A Locale object represents a specific geographical, political, or cultural region. An operation that requires a Locale to perform its task is called locale-sensitive and uses the Locale to tailor information for the user. For example, displaying a number is a locale-sensitive operation--the number should be formatted according to the customs/conventions of the user's native country, region, or culture”.

To perform a locale-sensitive operation, you first need a Locale object. Java provides predefined Locale constants, such as Locale.US and Locale.JAPAN, for ease of use. You can always create a locale explicitly by specifying a language code, or a language code and a country code. See the Javadoc for java.util.Locale for more details.

Listing 3: This listing displays the current system date/time in a specific locale using a

pre-defined date format.

import java.util.*;
import java.text.*;
...
//current date
Date date = new Date();
System.out.println("Using DateFormat with pre-defined format to format date/time...");
DateFormat df_jp = DateFormat.getDateTimeInstance(DateFormat.FULL, DateFormat.FULL, Locale.JAPAN);
DateFormat df_de = DateFormat.getDateTimeInstance(DateFormat.FULL, DateFormat.FULL, Locale.GERMANY);
DateFormat df_en = DateFormat.getDateTimeInstance(DateFormat.FULL, DateFormat.FULL, Locale.US);
//format and print the localized date/time
System.out.println("Date/Time in Japan   = " + df_jp.format(date));
System.out.println("Date/Time in Germany = " + df_de.format(date));
System.out.println("Date/Time in US      = " + df_en.format(date));

Output:

Using DateFormat with pre-defined format to format date/time...

Listing 4: This listing displays the current system date/time in a specific locale using a

custom date format.

import java.util.*;
import java.text.*;
...
//current date
Date date = new Date();
System.out.println("Using SimpleDateFormat with custom format to format date/time...");
//a custom pattern; day and month names are still localized for each locale
String pattern = "EEE, d MMM yyyy HH:mm:ss zzzz";
SimpleDateFormat sdf_jp = new SimpleDateFormat(pattern, Locale.JAPAN);
SimpleDateFormat sdf_de = new SimpleDateFormat(pattern, Locale.GERMANY);
SimpleDateFormat sdf_en = new SimpleDateFormat(pattern, Locale.US);
//format and print the localized date/time
System.out.println("Date/Time in Japan   = " + sdf_jp.format(date));
System.out.println("Date/Time in Germany = " + sdf_de.format(date));
System.out.println("Date/Time in US      = " + sdf_en.format(date));

Output:

Using SimpleDateFormat with custom format to format date/time...

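Listing 5: This listing parses a locale-specific string representing a date/time back into a java.util.Date object.

import java.util.*;
import java.text.*;

//current date
Date date = new Date();
DateFormat df_jp = DateFormat.getDateTimeInstance(DateFormat.FULL, DateFormat.FULL, Locale.JAPAN);
String localeSpecificDateStr = df_jp.format(date);
try {
    //the FULL format has no milliseconds, so newDate matches date only to the second
    Date newDate = df_jp.parse(localeSpecificDateStr);
} catch (ParseException e) {
    //the string did not match the expected locale-specific format
    e.printStackTrace();
}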

Listing 6: Displays a currency value in different locales.

import java.util.*;
import java.text.*;
...
//formatting numbers and currencies
long value = 123456789;
//Formatting currency using NumberFormat
System.out.println("Using NumberFormat to format Currency...");
NumberFormat nf_curr_jp = NumberFormat.getCurrencyInstance(Locale.JAPAN);
NumberFormat nf_curr_de = NumberFormat.getCurrencyInstance(Locale.GERMANY);
NumberFormat nf_curr_en = NumberFormat.getCurrencyInstance(Locale.US);
System.out.println("Currency in Japan   = " + nf_curr_jp.format(value));
System.out.println("Currency in Germany = " + nf_curr_de.format(value));
System.out.println("Currency in US      = " + nf_curr_en.format(value));

Output (note the position of the commas and dots):

Using NumberFormat to format Currency...

Listing 7: Displays a numeric value in different locales.

import java.util.*;
import java.text.*;
...
//formatting numbers and currencies
long value = 123456789;
//NumberFormat
System.out.println("Using NumberFormat to format Numbers...");
NumberFormat nf_jp = NumberFormat.getNumberInstance(Locale.JAPAN);
NumberFormat nf_de = NumberFormat.getNumberInstance(Locale.GERMANY);
NumberFormat nf_en = NumberFormat.getNumberInstance(Locale.US);
System.out.println("Number in Japan   = " + nf_jp.format(value));
System.out.println("Number in Germany = " + nf_de.format(value));
System.out.println("Number in US      = " + nf_en.format(value));

Output (note the position of the commas and dots):

Using NumberFormat to format Numbers...
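The grouping separator is the visible difference here, so the sketch below turns Listing 7 into a self-contained program with the expected strings in comments. It assumes Java 7 or later; GroupingDemo is a hypothetical name. The US and Japanese locales group with commas, while the German locale groups with dots. Currency is left out because currency symbols and spacing vary between JDK locale-data versions.

```java
import java.text.NumberFormat;
import java.util.Locale;

final class GroupingDemo {
    /** Formats a number with the grouping conventions of the given locale. */
    static String format(long value, Locale locale) {
        return NumberFormat.getNumberInstance(locale).format(value);
    }

    public static void main(String[] args) {
        long value = 123456789;
        System.out.println("Number in Japan   = " + format(value, Locale.JAPAN));   // 123,456,789
        System.out.println("Number in Germany = " + format(value, Locale.GERMANY)); // 123.456.789
        System.out.println("Number in US      = " + format(value, Locale.US));      // 123,456,789
    }
}
```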

Resource Bundles

So far we have seen that dates, times, currencies, and numeric values can be formatted automatically for a specific locale.

But what about the following piece of code, when it is executed in different locales?

String str = "Hello";
JLabel label = new JLabel();
label.setText(str);

OR

throw new Exception("run dude, run");

At first glance, the code looks fine, because we rarely think about what happens when a program runs in a different locale. For example, what would be displayed when the above program runs in a Japanese locale? Shouldn’t the string “Hello” be displayed in Japanese? Yes, it should. But would it be displayed in Japanese? Did you answer yes? The answer is no. As mentioned earlier, sentences cannot be translated automatically. When translating a sentence into a specific locale, one needs to ensure that the grammar of the translated sentence is correct.

A resource bundle is a collection of resource files. Each resource file contains resources (e.g. display messages, exception messages, etc.) in one native language, and every resource in the file is identified by a unique key. A message may be either static or parameterized. Resource bundles are used to display static or parameterized messages in various languages.

The way a resource bundle works is simple. Here are the steps to follow to use resource bundles:

Design Time

1. First, identify all the displayable string literals in your code that you want localized. Move them into a text file and identify each one by a unique key. For example:

MSG_1 = Hello.

2. Parameterize the messages as required. For example:

MSG_2 = May I speak to {0}.

3. The NLS group then translates this single file into the various other languages. The convention used to name these resource files is <file name>_<lang>_<country>.properties, for example MyMessages_fr_FR.properties. This makes it easy to tell which language a resource file represents just by looking at its file name.

4. The developers then use the keys from the resource files in their code to fetch the locale-sensitive messages.
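The sketch below runs the design-time steps end to end without creating files. It loads a properties-format bundle from a string and fills in a parameterized message with MessageFormat. It assumes Java 8 or later; PropertyResourceBundle(Reader) itself dates from Java 6. The French text stands in for a hypothetical MyMessages_fr_FR.properties produced by the NLS group, and BundleSketch is a made-up name. In a real application you would call ResourceBundle.getBundle instead, as in Listing 8.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.text.MessageFormat;
import java.util.Locale;
import java.util.PropertyResourceBundle;
import java.util.ResourceBundle;

final class BundleSketch {
    // Stand-in for a hypothetical MyMessages_fr_FR.properties file.
    static final String FRENCH_PROPERTIES =
            "MSG_1 = Bonjour.\n"
            + "MSG_2 = Puis-je parler \u00E0 {0} ?\n";

    /** Loads a properties-format bundle from text instead of a file. */
    static ResourceBundle load(String properties) {
        try {
            return new PropertyResourceBundle(new StringReader(properties));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Looks up a message by key and substitutes its {0}, {1}, ... parameters. */
    static String message(ResourceBundle bundle, String key, Locale locale, Object... args) {
        String pattern = bundle.getString(key);
        return new MessageFormat(pattern, locale).format(args);
    }

    public static void main(String[] args) {
        ResourceBundle french = load(FRENCH_PROPERTIES);
        System.out.println(message(french, "MSG_1", Locale.FRANCE));
        System.out.println(message(french, "MSG_2", Locale.FRANCE, "Rahul"));
    }
}
```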

Runtime

1. At runtime, the program loads the appropriate resource file based on the locale of the JVM. It then uses the key to fetch the correct localized message.

2. If the message is parameterized, the parameters must be replaced with the appropriate values before the message is used.

3. The message is now ready to be displayed in the UI, in an exception message, or anywhere else.

Listing 8: Using resource bundle

import java.util.*;

import java.text.*;

...

//resource bundle file name

String resourceBundleBaseFileName = "MyMessages";

//locale to use

Locale myLocale = Locale.GERMANY;

//get the correct resource bundle using the appropriate locale

//See the javadoc of getBundle(), for a complete description

//of the search and instantiation strategy.

ResourceBundle localizedMessages =

ResourceBundle.getBundle(resourceBundleBaseFileName, myLocale);

//get the localized message

String localizedMessage = localizedMessages.getString("MyMessage");

//create parameter values for the localized message

Object[] messageArguments = { "Rahul",

new Date(),

"Pizza Hut",

new Date()

};

//substitute the parameters, if any, in the localized message

//with the parameter values created above

MessageFormat formatter = new MessageFormat(localizedMessage,

myLocale);

String finalMessage = formatter.format(messageArguments);

//This final localized string now can be used wherever required.

//For example as exception messages or messages in UI, etc.

System.out.println(finalMessage);

MyMessages.properties

MyMessage = Mary is going out on a date with {0} on {1, date} meeting at {2} at {3, time}.

Output:

Mary is going out on a date with Rahul on 15.01.2006 meeting at Pizza Hut at 16:47:03.

For details on parameterized messages, see:

http://java.sun.com/j2se/1.4.2/docs/api/java/text/MessageFormat.html

Note: When the JVM starts, its default locale is the same as the default locale of the system, unless it is changed explicitly as follows:

java -Duser.language=ja -Duser.country=JP mypak.myapp

Conclusion

The basic concepts of characters and encodings are essential for every developer. Globalization is the art of writing programs for a global audience. And Unicode is the future.