MeetingRunner-CIKM-liu.2

advertisement

Robust Identification and Extraction of Meeting Requests from Email

Using a Collocational Semantic Grammar

Hugo Liu

MIT Media Laboratory

20 Ames St., Bldg. E15

Cambridge, MA 02139 USA

hugo@media.mit.edu

Abstract

Meeting Runner is a software agent that acts as a

user’s personal secretary by observing the user’s

incoming emails, identifying requests for meetings, and interacting with the person making the

request to schedule the meeting into the user’s

calendar, on behalf of the user. Two important

subtasks are being able to robustly identify

emails containing meeting requests, and being

able to extract relevant meeting details. Statistical approaches to meeting request identification

are inappropriate because they generate a large

number of false positive classifications (fallout)

than can be annoying to users. A full parsing approach has low fallout, and can assist in the extraction of relevant meeting details, but exhibits

poor recall because deep parsing methods break

over very noisy emails.

In this paper, we demonstrate how a broadcoverage partial parsing approach using a collocational semantic grammar can be combined

with lightweight semantic recognition and information extraction techniques to robustly identify and extract meeting requests from emails.

Using a relatively small collocation-based semantic grammar, we are able to demonstrate a

good 74.5% recall with a low 0.4% fallout,

yielding a precision of 93.8%. We situate these

processes in the context of our overall software

agent architecture and the email meeting scheduling task domain.

1

The Task: Email Meeting Schedu ling

via Natural Language 1

When we think of computers of the future, what comes to

mind for many are personal software agents that help us

to manage our daily lives, taking on responsibilities such

as booking dinner reservations, and ordering groceries to

restock the refrigerator. One of the more useful tasks

Hassan Alam, Rachmat Hartono, Timotius Tjahjadi

Natural Language R&D Division

990 Linden Dr., Suite 203

Santa Clara, CA 95050

{hassana, rachmat, timmyt}@bcltechnologies.com

that a personal software agent might do for us is to help

manage our schedules – booking an appointment requested by a client, or arranging a movie date with a friend.

Because today we rely on email to accomplish much of

our social and work-related communication, and because

emails are in some senses less invasive to a busy person

than a phone call, people generally prefer to request

meetings and get-togethers with co-workers and friends

by sending them an email message. The sender might

then receive a response confirming, declining, or rescheduling the meeting. This back-and-forth interaction

may continue many times over until something is agreed

upon. Such a task model is referred to as asynchronous

email meeting scheduling.

Previous approaches to software-assisted asynchronous

email meeting scheduling either require all involved parties to possess common software, such as with Microsoft

Outlook, and Lotus Notes, or require explicit user action,

such as with web-based meeting invitation systems like

evite.com and meetingwizard.com.

In the former approach taken by Microsoft Outlook

and Lotus Notes, users can directly add meeting items to

the calendars of other users, and the software can automatically identify times when all parties are available.

This is very effective, and can be very useful within

companies where all workers have common software;

however, such an approach is inadequate as a universal

solution to email meeting scheduling because a user of

the software cannot use the system to automatically

schedule meetings with non-users of the software, and

vice versa.

The latter approach exemplified by evite.com and

meetingwizard.com moves the meeting scheduling task to

a centralized web server, and all involved parties communicate with that server to schedule meetings. Because

all that is required is a web browser, the second approach

circumvents the software-dependency limitations of the

former. However, a drawback is that this system for

meeting scheduling is not automated; it requires users to

read the email with the invite, open the URL to the meeting item, and check some boxes. If the meeting details

were to change, the whole process would have to repeat

itself. It is evident that this approach is not amenable to

automation.

1.1 Why via Natural Language?

The approach we have taken is to build a personal software agent called Meeting Runner that can automatically

interact with co-workers, clients, and friends to schedule

meetings through emails by having the interaction take

place in plain and common everyday language, or natural

language as it is generally called. Figure 1 depicts how

the system automatically recognizes a meeting request

from incoming email and extracts the relevant meeting

details.

1.2 Identif ying Meeting Requests and

Extracting Meeting D etails

The task model of the software agent has the following

steps: 1) observe the user’s incoming emails and from

them, identify emails containing meeting requests; 2)

from meeting request emails, extract partial meeting details; 3) through natural language dialog over email, interact with the person making the request to negotiate the

details of the meeting; 4) schedule the meeting in the

user’s calendar.

In this paper, we focus on the first two steps, which are

themselves very challenging. In section 2, we motivate

our approach by first reviewing how two very common

identification and extraction strategies fail to address the

needs of our task model. In section 3, we discuss how

shallow partial parsing with a collocational semantic

grammar is applied to the task of meeting request identification. Section 4 presents how lightweight semantic

recognition agents and information extraction techniques

extract relevant meeting details from identified emails.

Section 5 gives an evaluation of the performance of the

identification and extraction tasks. We conclude by discussing some of the methodological gains of our approach, and give directions for future work.

2

Existing Strategies

Two existing strategies to the identification of meeting

requests and the extraction of meeting details are considered in this section. First, statistical model-based classification can be coupled with statistical extraction of

meeting details. Second, full parsing can be combined

with extraction of meeting details from parse trees. In

the following subsections we argue how neither strategies

address the needs of the application’s task model requirements.

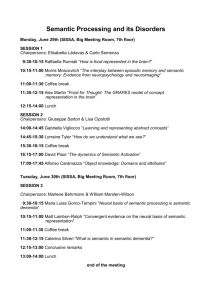

Figure 1. Screenshot of the Meeting Runner agent recognizing a meeting request in an incoming email. Email is not a

typical example and is simple for illustrative purposes.

In contrast to existing approaches to meeting scheduling,

we have chosen natural language as the communication

“protocol”. Natural language is arguably the most common format a software program can communicate in,

because humans are already proficient in this. By specifying natural language as the format of emails that can be

understood and generated by our software agent, we can

overcome the problem of required common software (a

person who has installed our agent can automatically receive meeting requests and schedule meetings with

someone who does not have our agent installed), and the

problem of required user action (our agent can interact

with the person who requested the meeting by further

emails, never requiring the intervention of the user).

2.1 Strategy #1: St atist ical

Statistical machine learning approaches are popular in the

information filtering literature, especially with regards to

the task of email classification. Finn et al. (2002) applied statistical machine learning approaches to genre

classification of emails based on handcrafted rules, partof-speech, and bag-of-words features. In one of their

experiments, they sought to classify an email as either

subjective or fact, within a single domain of football,

politics, or finance. They reported accuracy from 8588%. While these results might at first glance suggest

that a similar approach is promising in the identification

of meeting request emails, there are problems in the details.

First, error (12-15%) was equally attributable to false

positives and false negatives (the distribution of false

positives versus false negatives is hard to control in statistical classifiers). This would imply a false positive

(fallout) rate of 6-7%. In our meeting request scheduling

application, the system would take an action (e.g. reply to

the sender, or notify the user) each time it detected a

meeting. User attention is very expensive. While the

system can tolerate missing some true meeting request

emails, since the user can still discover the meeting request manually, the system cannot tolerate many false

meeting request identifications, as they waste the user’s

attention. Therefore, our task model requires a very low

fallout rate, and statistical classification would seem inappropriate.

Second, there are further reasons to believe that meeting request classification is a far harder problem for statistical classifiers than genre classification. In genre

classification, vocabulary and word choice are surface

features that are fairly evenly spread across the large input. However, email meeting requests can be as short as

“let’s do lunch”, with no hints that can be gleaned from

the rest of the email. In this sense, statistical classifiers

would have trouble because they are semantically weaker

methods that require large input with cues scattered

throughout. Though we have not explicitly experimented

with statistical classifiers for our task, we anticipate that

such characteristics of the input would make machine

learning and classification very difficult.

Third, even if we assumed that a statistical classifier

could do a fair job of identifying emails containing meeting requests, it still would not be able to identify the salient sentence(s) in the email explicitly containing the request. Explicit identification of salient sentences would

provide valuable and necessary cues to the meeting detail

extraction mechanism. Without this information, the

extraction of such details would prove difficult, especially if the email contains multiple dates, times, people,

places, and occasions. Also, in such a case, statistical

extraction of meeting details would prove nearly impossible.

2.2 Strategy #2: Full Parsing

Now that we have examined some of the reasons why

statistical methods might not be appropriate to our task,

we examine the possibility of applying a full parsing approach. In this approach, we perform a full syntactic

constituent parse of each email, and from the resulting

parse trees, we perform semantic interpretation into thematic role frames. We could then use rule-based heuristics to determine which semantic interpretations are

meeting requests and which are not. Similarly, we can

extract meeting details from our semantic interpretations.

On some levels this method is more appropriate to our

task than statistical methods. It is much easier to prevent

false positives using rule-based heuristics over a parse

tree than via statistical methods, which are less amenable

to this kind of control. Also, the full parsing approach

would yield the exact location of salient sentences and

therefore, facilitate the extraction of meeting details in

the close proximity of the salient meeting request sentences.

However, from pilot work, we found this approach to

be extremely brittle and impractical. Using the constituent output from the Link Grammar Parser of English

(Sleator and Temperley, 1993) bundled with some rulebased heuristics for semantic interpretation, we parsed a

test corpus of email. While fallout was held low, recall

was extremely poor (< 30%). Upon closer examination

of the reasons for the poor performance, we found that

the email domain was too noisy for the syntactic parser to

handle. Sources of noise in our corpus included improper capitalization, improper or lack of punctuation, misspellings, run-on sentences, short sentence fragments,

and disfluencies resulting from English as a Second Language authors. And the problem is not limited to our

Link Grammar Parser, as most chart parsers are also generally not very tolerant of noise. Such poor performance

was disappointing, but it helped to inspire another approach—one which exhibits characteristics of parsing,

without its brittleness.

3 Robust Meeting Request Identification

Unlike the relative “clean” text found in the Wall Street

Journal corpus, text found in emails can be notoriously

“dirty”. As previously mentioned, email texts often lack

proper punctuation, capitalization, tend to have sentence

fragments, omit words with little semantic content, use

abbreviations and shorthand, and sometimes contain

mildly ill-formed grammar. Therefore, many of the full

parsers that can parse clean text well would have a tough

time with a dirty text, and are generally not robust

enough for this type of input. Thankfully, we do not

need such a deep level of understanding for meeting request extraction. In fact, this is purely an information

extraction task. As with most information extraction

problems, the desired knowledge, which in our case is the

meeting request details, can be described by a semantic

frame with the slots similar to the following:

Meeting Request Type: (new meeting request, cancellation, rescheduling, confirmation, irrelevant)

Date/Time interval proposed: (i.e.: next week, next

month)

Location/Duration/Attendees

Activity/occasion: (i.e.: birthday party, conference

call)

As previously defined, the task of identifying and extracting meeting request details from emails can be decomposed into 1) classifying the request type of the email

as shown in the frame above, and 2) filling in the remaining slots in the frame. In our system, the second task can

be solved with help from the solution to the first problem. We approach the classification of email into request

type classes in the following manner: Each request type

class is treated as a language described by a grammar.

Membership in a language determines the classification.

Membership in multiple languages requires disambiguation by a decision tree. If an email is not a member of

any of the languages, then it is deemed an irrelevant

email not containing a meeting request. We will now describe the properties of the grammar.

3.1 A Collocational S emantic Grammar

Semantic grammars were originally developed for the

domains of question answering and intelligent tutoring

(Brown and Burton, 1975). A property of these grammars is that the constituents of the grammar correspond

to concepts specific to the domain being discussed. An

example of a semantic grammar rule is as follows:

MeetingRequest

Can we get together DateType for ActivityType

In the above example, DateType and ActivityType can be

satisfied by any word or phrase that falls under that semantic category. Semantic grammars are a practical approach to parsing emails for request type because they

allow information to be extracted in stages. That is, semantic recognizers first label words and phrases with the

semantic types they belong to, then rules are applied to

sentences to test for membership in the language. Semantic grammars also have advantage of being very intuitive, and so extending the grammar is simple to understand. Examples of successful applications of semantic

grammars in information extraction can be found in entrants to the U.S. government sponsored MUC conferences, including FASTUS system (Hobbs et al., 1997),

CIRCUS (Lehnert et al., 1991), and SCISOR (Jacobs and

Rau, 1990).

The type of semantic grammar shown in the above example is still somewhat narrow in coverage because the

productions generated by such rules are too specific to

certain syntactic realizations. For example, the previous

example can generate the first production listed below,

but not the next two, which are slight variations.

Can we get together tomorrow for a movie

*Can we get together tomorrow to catch a movie

*Can we get together sometime tomorrow and check

out a movie

We could arguably create additional rules to handle the

second and third productions, but that comes at the expense of a much larger grammar in which all syntactic

realizations must be mapped. We need a way to keep the

grammar small, the coverage of each rule broad, and at

the same time, the grammar we choose must be robust to

all the aforementioned problems that plague email texts

like omission of words, and sentence fragments. To meet

all of these goals, we add the idea of collocation to our

semantic grammars. Collocation is generally defined as

the proximity of two words within some fixed “window”

size. This technique has been used in variety of natural

language tasks including word-sense disambiguation

(Yarowsky, 1993), and information extraction (Lin,

1998). Applying the idea of collocations to our semantic

grammar, we eliminate all except the three or four most

salient features from each of our rules, which generally

happen to be the following atom types: subjectType,

verbType, and objectType. For example, we can rewrite

our example rule as the following: (for clarification, we

also show the expansions of some semantic types)

MeetingRequest ProposalType SecondPersonType

GatherVerbType DateType ActivityType

ProposalType can| could | may | might

SecondPersonType we | us

GatherVerbType get together | meet | …

In our new rules, it is implied that the right-hand side

contains a collocation of atoms. That is to say, between

each of the atoms in our new rule, there can be any string

of text. An additional constraint of collocations is that in

applying the rules, we constrain the rule to match the text

only within a specified window of words, for example,

ten words. Our rewritten rule has improved coverage,

now generating all the productions mentioned earlier,

plus many more. In addition, the rule becomes more robust to ill-formed grammar, omitted words, etc. Another observation that can be made is that our grammar size

is significantly reduced because each rule is capable of

more productions.

3.2 Negative Collocates

There are however, limitations associated with collocation-based semantic grammars – namely, the words not

specified, which fall between the atoms in our rules, can

have a unforeseen impact on the meaning of the text surrounding it. Two major concerns relating to meeting requests are the appearance of “not” to modify the main

verb, and the presence of a sentence break in the middle

of a matching rule. For example, our rule incorrectly

accepts the following productions:

*Could we please not get together tomorrow for that

movie? (presence of the word “not”)

*How can we meet tomorrow? I have to go to a movie with Fred. (presence of an inappropriate sentence

break)

To overcome these occurrences of false positives, we

introduce the novel notion of negative collocates into

our grammar, which we will denote as atoms surrounded

by # signs. A negative collocate between two regular

collocates means that the negative collocate may not fall

between the two regular collocates. We also introduce an

empty collocate into the grammar, represented by 0. An

empty collocate between two regular collocates means

that nothing except a space can fall between the two regular collocates. We can now modify our rule to restrict

the false positives it produces as follows:

MeetingRequest ProposalType 0 SecondPersonType

#SentenceBreak# #not# GatherVerbType #Sentence Break#

DateType #SentenceBreak# ActivityType

Using this latest rule, most plausible false positives are

restricted. Though it is still possible for such a rule to

generate false positives, pragmatics make further false

positives unlikely.

3.3 Implications of Pragmatics

Pragmatics is the study of how language is used in practice. A basic underlying principle of how language is

used is that it is relevant, and economical. According to

Grice’s maxim (Grice, 1975), language is used cooperatively and with relevance to communicate ideas. The

work of Sperber and Wilson (1986) adds that language is

used economically, without intention to confuse the listener/reader.

The implications of pragmatics on our grammar is that

although there exists words that could be added between

collocates to create false positives, the user will not do so

if it makes the language more expensive or less relevant.

This important implication is largely validated by the

fallout metric in performance evaluations which we will

present later in this paper.

So in the above example, an email whose DCF has the

request type “meeting declined” cannot be classified as

“meeting confirmed” at the present step, and a meeting

cannot be rescheduled if it has not first been requested.

3.4 Constraints on Email Classif ication

f rom Dialog Context

3.5 “Parsing” w ith a Co llocational

Semantic Grammar

One additional piece of context which can be leveraged

to improve the accuracy of the meeting request classifier

is constraint from the dialog context. In our system,

emails may be classified into the request types of: new

meeting request, cancellation, rescheduling, confirmation, or irrelevant. Using information gleaned from email

headers, we are able to track whether or not an email is

following up with a previous email which contained a

meeting request. This constitutes what we call a “dialog

context”. We briefly explain its implications to the classifier below.

Our collocational semantic grammar contains no more

than 100 rules for each of the request types. “Parsing”

the text occurs with the following constraint: the distance

from the first token in the fired rule to the last token in

the fired rule must fall within a fixed window size, which

is usually ten words. When the language of more than

one request type accepts the email text, disambiguation

techniques are applied, such as the aforementioned finite

state automaton of allowable request type transitions

(Figure 2).

Now that we have discussed how the collocational semantic grammar enables robust meeting request identification, we now discuss how linguistic preprocessing,

semantic recognition agents, and informational extraction

techniques are applied to fill in the rest of the meeting

request details.

3.4.1 Dialog Context Frames

The dialog management step examines the incoming

email header to determine if that email belongs to a

thread of emails in which a meeting is being scheduled.

To accomplish this, each email determined to contain a

meeting request is added to a repository of “dialog context frames” (DCF). A DCF serves to link the unique ID

of the email, which appears in the email header, to the

meeting request frame that contains the details of the

meeting. The slots of the DCF are nearly identical to the

meeting request frame, except that it also contains information about the email thread it belongs to. DCFs are

passed on to later processing steps, and provide the historical context of the current email, which can be useful

in disambiguation tasks. In addition, the most recent

request type, as specified in a DCF, helps to determine

the allowable next request type states. In other words, a

finite state automaton dictates the allowable transitions

between consecutive email requests. Figure 2 shows a

simplified version of the finite state automaton that does

this.

Figure 2. The nodes in this simple finite state automaton

represent request types, and the directed edges represent

allowable transitions between the states

4

Extracting Meeting Details

In our previous discussion of the collocational semantic

grammar, we took for granted that we could recognized

certain classes of named entities such as dates, activities,

times, and so forth. In this section, we give an account of

how linguistic preprocessing and semantic recognition

agents perform this recognition. We then explain how,

given an identified meeting request sentence within an

email, the rest of the meeting request frame can be filled

out. As we have thus far described processes out of order, we wish to refer the reader to Figure 3 for the overall

processing steps of the system architecture to regain

some context.

In cases where multiple qualifying tokens can fill a slot,

distance to the parts of the email responsible which were

accepted by the grammar is deemed inversely proportional to the relevance of that token. Therefore, we can disambiguate the attachment of frame slots tokens by word

distance. Sanity check rules and truth maintenance rules

verify that the chosen frame details are consistent with

the request type and the user’s calendar.

When the semantic frame is filled, it is sent to other

components of the system that execute the necessary actions associated with each request type.

Figure 3. Flowchart of the system’s processing steps.

4.1 Normalization

Because of the dirty nature of email text, it is necessary

to clean, or normalize, as much of the text as possible by

fixing spelling and abbreviations, and regulating spacing

and punctuation. We apply an unsupervised automatic

spelling correction routine to the text, recognize and tag

abbreviations, and tokenize paragraphs and sentences.

4.2 Semantic Recogn ition Agents

Each semantic recognition agent extracts a specific type

of information from the email text that becomes relevant

to the task of filling the meeting request frames. These

include semantic recognizers for dates, times, durations,

date and time intervals, holidays, activities, and action

verbs.

The resulting semantic types are also used by the parser to determine the meeting request type. For many of

the semantic types used by our system, matching against

a dictionary of concepts which belong to a semantic type

is sufficient to constitute a recognition agent. But other

semantic types such as temporal expressions require

more elaborate recognition agents, which may use a generative grammar to describe instances of a semantic type.

As with other heuristic and dictionary-based approaches, not all of the temporal and semantic expressions will

be recognized, but we demonstrate in the evaluation that

most are recognized. In the task of semantic recognition

in a practical and real domain such as email, our experience is that the 80-20 rule applies. 80% recognition performance can be obtained by handling only 20% of the

conceivable cases. Again, the implications of pragmatics

can be felt.

4.3 Filling in Meeting Details

After identifying the request type of the email, each request type has a semantic frame associated with it which

must be filled as much as possible from the text. Because each slot in the semantic frame has a semantic type

associated with it, slot fillers are guaranteed to be atoms.

Filling the frame is only a matter of finding the right t okens. Because we know the location of the salient meeting request sentence(s) in the email, we use a proximity

heuristic to extract the relevant meeting details.

5

Evaluation

The performance of the natural language component of

our system was evaluated against a real-world corpus of

5680 emails containing 670 meeting request emails. The

corpus was compiled from the email collections of several people who reported that they commonly schedule

meetings using emails. Emails were directed to the particular person in the “To:” line, and not to any mailing

list that the person belongs to, and does not include spam

mail. Emails were judged by two separate evaluators as

to whether or not they contained meeting requests.

Emails over which evaluators disagreed were discarded

from the corpus. The standard evaluation metrics of recall, precision, and fallout (false positives) were used.

An email is marked as a true positive if it meets ALL

of the following conditions.

1. the email contained a meeting request

2. the request type was correctly identified by our

system

3. the request frame was filled out correctly.

Likewise, false positives meet ANY of the following

conditions:

1. the email does not contain a meeting request but

was classified as containing a meeting request

2. the request frame was filled out incorrectly

In the test system, a collocation window size of 10 was

used by the collocational semantic grammar’s pattern

matcher. Of the 5680 emails in our test corpus, 670 contain email requests. Our system discovered 499 true positives and 21 false positives, missing 171 meeting requests. Table 1 summarizes the findings of the evaluation.

Table 1: evaluation summary

Metric

Recall

Score

74.5%

Precision

96.0%

Fallout

0.4%

Evaluation based on 5680 emails, 670 of which requested meetings

Upon examination of the results, we suggest two main

factors are to account for the false positives. First, our

use of synonym sets like “VerbType” as described in

section 3.1 led to several overgeneralizations. For example, the words belonging to the GatherVerbType may

include “get together,” “meet,” and “hook up.” Unintended meanings and syntactic usages of these phrases (e.g.

“a high school track meet”) do occur, and occasionally,

these word sense ambiguities cause false classifications,

though most are caught by fail-safes such as the dialog

context frame. However, these overgeneralizations were

expected, given that we did not employ word-sense disambiguation techniques such as part-of-speech tagging or

chunking, because these mechanisms themselves generate

error. Second, email layout was a source of errors. In

some emails, spacing and indentation was substituted for

proper end-of-sentence punctuation. This sometimes

caused consecutive sections to be concatenated together,

becoming a source of noise for the semantic grammar’s

pattern matcher.

Approximately 25% of the actual meeting request

emails were missed by the system. A variety of factors

contributed to this. The largest contributor was lack of

vocabulary of specific named entities. For example, our

semantic recognizers can recognize the sentence “Do you

want to see a movie with me tonight?” as a MeetingRequest because it recognizes seeing a movie as an Activity. However, it does not recognize the sentence, “Do you

want to see the Matrix with me tonight?” because it has

no vocabulary for the names of movies. Other meeting

requests were often missed because there was no appropriate rule in the grammar or because their recognition

required a larger window size. Upon playing with window sizes, however, we found that increasing the window

size by just 2 tokens substantially increased the occurrence of false positives. The tradeoff between varying

window sizes will be a future point of exploration. Other

less prominent contributors included the inability to recognize some date and activity phrases, the often subtle

and implicit nature of meeting requests (e.g. “Bill was

hoping to learn more about your work”), and the fact that

many meeting requests were temporally unspecified, i.e.,

there was no particular date or time proposed.

In spite of the lower-than-expected 74.5% accuracy

rate, we see the evaluation results as positive and encouraging. The most encouraging result is that fallout is minimal at 0.4%. The fact that our collocation-based semantic grammar did not create more false positives provides

some validation to the collocation approach, and to the

implications of pragmatics on the reliability of positive

classifications produced through such an approach.

The recall statistics can be improved by broadening the

coverage of our grammar, and by acquiring more specific

vocabulary, such as movie names. As of now, each request type has fewer than 100 grammar rules, and currently there are only seven semantic types. There are no

semantic types with a level of granularity that would

cover a movie name. We believe that investing more resources to expand the grammar and vocabulary can boost

our recall by 10%. Growing the grammar is fairly easy to

do because rules are simple to add, and each rule can

generate a large number of productions. Growing vocabulary is dependent on the availability of specific

knowledge bases. We plan to do more extensive evalua-

tions once we have compiled a corpus from our beta testing.

6 Conclusion

We have built a personal software agent that can automatically read the user’s emails, detect meeting requests, and

interact with the person making the request to schedule the

meeting. Unlike previous approaches taken to asynchronous email meeting scheduling, our system receives meeting

requests and generates meeting dialog email all in natural

language, which is arguably the most portable representation. This paper focused on two tasks in our system: identifying emails containing meeting requests, and extracting

details of the proposed meeting.

We examined how two prominent approaches for email

classification, statistical and full parsing, failed to address

the needs of our task model. Statistical classifiers generate

too many false positives which are an expensive proposition

because they annoy and distract users. Full parsing is too

brittle over the noisy email corpora we tested, and produce

too low a recall. By leveraging collocational semantic

grammars with two unique collocation operators, the negative collocate and empty collocate, we were able to more

flexibly identify meeting requests, even over ill-formed text.

This recognition of salient meeting request sentences

worked synergistically to help the extraction of remaining

meeting details. Our preliminary evaluation the first generation implementation, while small, shows that the approach

has promise in demonstrating high recall and very low fallout.

6.1 Limitations

There are several limitations associated with the approach

taken. One issue is scalability. Unlike systems that use

machine learning techniques to automatically learn rules

from a training corpus, our grammar must be manually extended. Luckily, the email meeting request domain is fairly

small and contained, and the ease of inputting new rules

makes this limitation bearable.

Another issue is portability. Our grammar and recognition agents were developed specifically for the email meeting request domain, so it is highly unlikely that they will be

reuseable or portable to other problem domains, though we

feel that the general methodology of using collocational

semantic grammars for low-fallout classification will find

uses in many other domains.

Despite these limitations, the availability of an agent to

facilitate email users in the identification and management

of meeting requests is arguably invaluable in the commercial domain, thus justifying the work needed to build a domain-specific grammar. Performance of the evaluated system suggests how the application can best be structured.

Meeting requests can be identified and meeting details extracted with 74.5% accuracy, with only a 0.4% occurrence

of false positives. In the Meeting Runner software agent,

meeting scheduling is currently semi-automatic. The system opportunistically identifies incoming emails that contain email requests. Because of the low fallout rate, costly

user attention is not squandered in incorrect classifications.

Thus, in measuring the benefit of the system to the user, we

make a fail-soft argument. The system helps the user identify and schedule the vast majority of meeting requests automatically, while in the remaining cases, the user does the

scheduling manually, which is what he/she would anyway

in the absence of any system.

6.2 Future Work

In the near future, we plan to extend the coverage of the

grammar to include an expanded notion of what a “meeting”

can be. For example many errands such as “pick up the

laundry” could constitute meeting requests.

We would also like to increase the power of the recognition agents by supplying them with more world semantic

resources, or information about the world. For example, our

current system will understand, “Do you want to see a movie tonight?” but it will not be able to understand, “Do you

want to see Lord of the Rings tonight?” We can envision

providing our recognition agents with abundant semantic

resources such as movie names, all of which can be mined

from databases on the Web. We have already begun to supply our recognition agents with world knowledge mined out

of the Open Mind Commonsense knowledge base (Singh,

2002), an open-source database of approximately 500,000

commonsense facts. By growing the dictionary of everyday

concepts our system understands, we can hope to improve

the recall of our system.

Acknowledgements

We thank our colleagues at the MIT Media Lab, MIT AI

Lab, and BCL Technologies. This project was funded by

the U.S. Dept. of Commerce ATP contract # 70NANB9H3025

References

Bobrow, D. G., Kaplan, R. M., Kay, M., Norman, D. A.,

Thompson, H., and Winograd, T. (1977). Gus, a frame driven dialog system. Artificial Intelligence, 8, 155-173.

Brown, J. S. and Burton, R. R. (1975). Multiple representations of knowledge for tutorial reasoning. In Bobrow, D. G.

and Collins, A. (Eds.), Representation and Understanding,

pp. 311-350. Academic Press, New York.

Evite.com Web Site. Available at http://www.evite.com

Fellbaum, C. (Ed.). (1998). WordNet: An Electronic Lexical

Database. MIT Press, Cambridge, MA.

Finn, A., Kushmerick, N. & Smyth, B. (2002) Genre classification and domain transfer for information filtering. In

Proc. European Colloquium on Information Retrieval Research (Glasgow).

Gaizauskas, R., Wakao, T., Humphreys, K., Cunningham,

H., and Wilks, Y. (1995). University of Sheffield: Description of the LaSIE system as used for MUC-6. In Proceedings of the Sixth Message Understanding Conference

(MUC-6), San Francisco, pp. 207-220. Morgan Kaufmann.

Grice, H. P. (1975). Logic and conversation. In Cole, P.,

and Morgan, J. L. (Eds.), Speech Acts: Syntax and Semantics Volume 3, pp. 41-58. Academic Press, New York.

Hobbs, J. R., Appelt, D., Bear, J., Israel, D., Kameyama,

M., Stickel, M.E., and Tyson, M. (1997). FASTUS: A cascaded finite-state transducer for extracting information from

natural-language text. In Roche, E., and Schabes, Y. (Eds.),

Finite-State Devices for Natural Language Processing, pp.

383-406. MIT Press, Cambridge, MA.

Jacobs, P. and Rau, L. (1990). SCISOR: A system for extracting information from online news. Communications of

the ACM, 33(11), 88-97.

Jurafsky, D. and Martin, J. (2000). Speech and Language

Processing: An Introduction to Natural Language Processing, Computational Linguistics and Speech Recognition,

pp. 501-661. Prentice Hall.

Lehnert, W. G., Cardie, C., Fisher, D., Riloff, E., and Williams, R. (1991). Description of the CIRCUS system as

used for MUC-3. In Sundheim, B. (Ed.), Proceedings of the

Third Message Understanding Conference, pp. 223-233.

Morgan Kaufmann.

Levin, B. (1993). English Verb Classes and Alternations.

University of Chicago Press, Chicago.

Lin, D. (1998). Using collocation statistics in information

extraction. In Proc. of the Seventh Message Understanding

Conference (MUC-7).

Lotus

Notes

Web

Site.

Available

http://www.lotus.com/home.nsf/welcome/notes

at

MeetingWizard.com

Web

http://www.meetingwizard.com

Available

at

Available

at

Site.

Microsoft

Outlook

Web

Site.

http://www.microsoft.com/outlook/

Singh, P. (2002). The public acquisition of commonsense

knowledge. In Proceedings of AAAI Spring Symposium:

Acquiring (and Using) Linguistic (and World) Knowledge

for Information Access. Palo Alto, CA, AAAI.

Sleator, D. and Temperley, D. Parsing English with a Link

Grammar, Third International Workshop on Parsing Technologies,

August

1993.

Available

at:

http://www.link.cs.cmu.edu/link/papers

Sperber, D., and D. Wilson. (1986). Relevance: Communication and Cognition. Oxford: Blackwell.

Woods, W. A. (1977). Lunar rocks in natural English: Explorations in natural language question answering. In Zampolli, A. (Ed.), Linguistic Structures Processing, pp. 521569. North Holland, Amsterdam.

Yarowsky, D. (1993). One sense per collocation. In Proceedings of the ARPA Workshop on Human Language

Technology, pages 266-271.