Ofer Dekel: Let`s get started this morning. So it`s our pleasure to host

advertisement

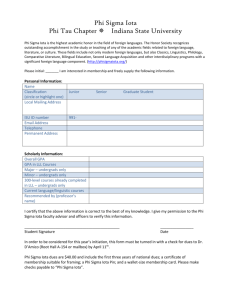

>> Ofer Dekel: Let's get started this morning. It's our pleasure to host Sebastien Bubeck. Sebastien finished his PhD in France, then did a postdoc in Barcelona with Gabor Lugosi, and now he is an assistant professor at Princeton. Today he's going to tell us about linear bandits. >> Sebastien Bubeck: Thank you. Thank you, Ofer, and thank you very much for the invitation. I'm really happy to be here to present this work. I have been working on linear bandits for five years. It is one of the topics where I have spent most of my time, and I am going to show you why I decided to spend five years on it and some of the results that I got. Let's start right away by defining the problem mathematically, and then I will show you some applications. First I want to set up the notation. The linear bandit problem is a sequential decision-making problem in which a player makes decisions sequentially over n rounds, where n is given. He has an action set A, which is a compact subset of R^d, and there is an adversary playing against him. The adversary also has an action set, which I denote Z, and Z is also a subset of R^d. The game goes on sequentially: at each round t = 1, ..., n we have three steps. First, the player chooses an action a_t in his action set A. Simultaneously, the adversary chooses an action z_t in his own action set Z. The loss of the player is the inner product between a_t and z_t, and I am going to assume that the only information the player gets about the action the adversary played is this loss, the inner product between a_t and z_t; it's the only thing that I know. What is my goal? My goal is to minimize my total loss over all time steps, that is, the sum for t = 1 to n of the inner product of a_t and z_t.
More formally, I will define what is called the regret, or the pseudo-regret in this case, but the distinction doesn't really matter for us. Here is my own loss: the sum for t = 1 to n of the inner product between a_t and z_t. Let's not worry about the expectation for the moment. I'm comparing myself to how well I could have done in hindsight if I had known the sequence of actions z_1, ..., z_n that the adversary was going to play. If I had to select one action to play against this whole sequence, I would have selected the action a which minimizes the sum for t = 1 to n of a times z_t. That's how well I could have done in hindsight; I compare myself to it, and the difference is what I call the regret. Why is there an expectation? We will see that we have to play randomly. We have to randomize our decisions, because otherwise, if our decision is a deterministic function of the past, the adversary can just select the worst possible z_t for the action we are about to play. So we don't want the adversary to know exactly what we're going to play. That is the quantity I am going to look at, and I am going to try to characterize how small the regret can be and which strategies work in this setting. Yes? >>: So a is constant in the comparison term. It seems that this quantity is going to be big no matter what, right? You're comparing with something that's not going to be very good. >> Sebastien Bubeck: Right. Here is how you should view it. Either my loss is small, in which case I'm happy, or it is not small; but if my regret is small, then I know my loss is large only because no fixed action did well. Either no action was good, or, if there is an action which is good, I will identify it. That's the intuition. >>: You are comparing to a constant action? >> Sebastien Bubeck: I am comparing to a constant action.
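As a small illustration of the regret just defined, here is a sketch in Python (NumPy) for a finite action set. The function name `realized_regret` and the toy numbers are hypothetical, not from the talk, and the function computes the regret of one realized play sequence; the pseudo-regret in the talk additionally takes the expectation over the player's randomization. It relies on linearity: the cumulative loss of a fixed action a is the inner product of a with the sum of the z_t, so one matrix-vector product scores every comparator at once.

```python
import numpy as np

def realized_regret(played, losses, action_set):
    """Regret of one realized play sequence against the best fixed action.

    played:     (n, d) array, row t is the action a_t the player chose
    losses:     (n, d) array, row t is the adversary's vector z_t
    action_set: (K, d) array, one row per action of a finite set A
    """
    played = np.asarray(played, dtype=float)
    losses = np.asarray(losses, dtype=float)
    action_set = np.asarray(action_set, dtype=float)
    player_loss = np.sum(played * losses)        # sum_t <a_t, z_t>
    total_z = losses.sum(axis=0)                 # sum_t z_t
    best_fixed = np.min(action_set @ total_z)    # min_a <a, sum_t z_t>
    return float(player_loss - best_fixed)

# Two actions (canonical basis of R^2), three rounds.
A = np.eye(2)
Z = [[1, 0], [1, 0], [0, 1]]
print(realized_regret([[1, 0], [1, 0], [0, 1]], Z, A))  # 2.0
```

Here the player suffers loss 3 while the fixed action (0, 1) would have suffered only 1, hence regret 2.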
You will see right now; I'm going to give you an example and you will see why it's meaningful. Maybe right now it's a little bit hard to see. Please interrupt me whenever you have a question; it's much easier for me if you ask questions. I'm going to give you one example, which will be a kind of canonical example: the problem of online routing. This is a map of Barcelona, where I did my postdoc. It's not that I haven't changed my slides since my postdoc. It's just that if I gave you a map of Princeton, there would be no real shortest path problem. There is only one road. [laughter] Anyway, this one is more interesting. Point A is where I lived when I was in Barcelona and point B is the Centre de Recerca, where I was working. Every morning I had to choose a path to bike from my place to the university, and I had many possible choices. My loss in this case was how long it took me to reach my destination. This time is the sum of the times on each street: on each street there is a delay which depends on many things, and my total travel time is the sum of the delays on the streets I take. This is going to be modeled as a linear bandit problem. Let's see a little more formally how this goes. We have a graph of the streets of Barcelona and I want to go from this point to the other one over there. As a player, what I choose each day, at each time step, is a path, like this one. Simultaneously, you should think that there is an adversary; I mean, nature is putting delays on the streets of Barcelona. These delays are real numbers z_1 to z_d, assuming that I have d edges in my graph. What is the delay of my path? Here it is z_2 plus z_7, et cetera, up to z_d; that is the total delay on this path. Every day I'm going to choose a path and I will get a loss like this. Now I need to tell you what my feedback is.
The main case I'm going to be interested in is the one where my feedback is this loss, the sum of the delays. But there are other kinds of feedback we could think of, and I'm also going to be somewhat interested in them. The strongest is full information feedback: at the end of the day, say, I listen to the radio and I record the delays on all of the streets of Barcelona. That is very, very strong, and it requires a big investment of my time, but in some cases you do have that information: I would observe exactly what the adversary played. This is what I call full information feedback. A weaker feedback, which makes more sense and, as you will see, is very interesting in many applications, is what I call semi-bandit feedback. With semi-bandit feedback I observe the delays only on the path that I chose. In this case I would have observed z_2, z_7, up to z_d, but I don't know the delays on the streets that I didn't take. The most difficult type of feedback is that I just record how long it took me. That's also the most natural one in some sense: I just record the total time. >>: Not in the biking case. In the biking case semi-bandit seems reasonable; if you are sending a packet through some network, you might only know the total. >> Sebastien Bubeck: Yes, I agree with that, but even in the biking case it could make sense that I only record the total time. It's the weakest thing that I could do. I mean, all three settings make sense in my opinion. Yes? >>: The adversary in this example doesn't seem adversarial. So is this more like a reinforcement learning or a planning problem? >> Sebastien Bubeck: It's sort of a planning problem, except that the key point is that you don't have to think of the sequence of actions as chosen by an adversary; they are just fixed.
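The three feedback models differ only in what the player gets to see after playing. Here is a minimal sketch, assuming a path is encoded as its 0/1 incidence vector over the d edges as in the combinatorial setting; the function name `observe` and the string labels are hypothetical.

```python
import numpy as np

def observe(a, z, feedback):
    """What the player sees after playing a in {0,1}^d against delays z in [0,1]^d."""
    a = np.asarray(a, dtype=float)
    z = np.asarray(z, dtype=float)
    if feedback == "full":
        return z.copy()                                           # every street's delay
    if feedback == "semi-bandit":
        return {int(i): float(z[i]) for i in np.flatnonzero(a)}   # delays on chosen edges only
    if feedback == "bandit":
        return float(a @ z)                                       # only the total travel time
    raise ValueError(f"unknown feedback model: {feedback}")

a = [1, 0, 1, 0]            # path uses edges 0 and 2
z = [0.25, 0.5, 0.5, 1.0]   # delays chosen by nature
print(observe(a, z, "semi-bandit"))  # {0: 0.25, 2: 0.5}
print(observe(a, z, "bandit"))       # 0.75
```

In the bandit case a single number comes back, which is why the algorithms later in the talk must estimate the full vector z_t from it.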
There is no i.i.d. assumption, for instance. There is no stochasticity; it's a worst-case model. It doesn't have to be an adversary choosing the actions z_t; what I'm going to say will be true for any sequence you want, z_1, z_2, up to z_n. >>: Yeah, but it seems like the results would be different if on the one hand you have a real adversary who is really trying to mess you up, and on the other hand there is simply nature out there, a natural process that is not intentionally trying to hurt your reward… >> Sebastien Bubeck: It turns out that the results won't be different. >>: Oh. >> Sebastien Bubeck: That's one of the nice things. >>: Especially if you live in New Jersey. [laughter] >> Sebastien Bubeck: I didn't think of that. >>: But just to be completely clear on what you are focusing on: outside your minimum over A there is a maximum over Z. >> Sebastien Bubeck: I'm going to be very clear on the next slide; yes, that's exactly what I'm getting at. Let me make this a little more formal, because of the semi-bandit framework, for instance: how does it relate to what I was saying before? What I just described was the combinatorial setting. In the combinatorial setting my action set is a subset of the hypercube {0,1}^d in dimension d. It can represent s-t paths in a graph, spanning trees in a graph; it can represent many combinatorial structures. So I have this action set, which is given to me. In my previous example, A is the set of incidence vectors of paths in my graph. Z is going to be the full hypercube [0,1]^d, so the adversary can put normalized delays on the edges: on each edge it can put a delay between 0 and 1. And when I choose a path, because of this notation, the loss I suffer is the inner product.
You see, my action a_t for this path is a vector which has a 1 on this edge, a 1 here, a 1 there, and 0 everywhere else, and z_t is a vector of weights on the graph, so the inner product is indeed the sum of the delays along the path. I'm going to look at the minimax combinatorial regret. I'm going to look for the best strategy such that, for a given set [indiscernible], we look at the worst adversary: the infimum over all strategies for the player of the supremum over all adversaries. The adversary may even know my strategy. And I want bounds, I mean I will do two things, but for now let's say I want bounds that do not depend on the combinatorial set. My strategy can depend on the combinatorial set, but I want a universal bound, which keeps things simple. What I want is to characterize this quantity R_n^C for the three types of feedback: full information, semi-bandit, and bandit. Is that clear? That's what I want to do. >>: [indiscernible] this guy [indiscernible] >> Sebastien Bubeck: Yes. It's played sequentially, but it's placed once. >>: [indiscernible] >> Sebastien Bubeck: But I can learn about the sequence [indiscernible]. To come back to your question: if there is nothing to learn, then there is nothing to learn and I will not do well. But maybe there is something to learn in the sequence. Maybe there is one direction which is really good, and then I will learn it. If I have small regret, I must learn it; this means that I will identify a good direction. >>: Question. [indiscernible] characterized [indiscernible] function class that would correspond to a malicious, intentional adversary versus a stochastic, generative-model-based adversary? Is that a reasonable prediction? >> Sebastien Bubeck: I could do a whole talk on this, but it would be completely different. Yes.
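The minimax quantity described above can be written as follows. This is one standard way to write it, with the expectation taken over the player's internal randomization; taking the supremum over all action sets A ⊂ {0,1}^d is our reading of the "universal bound" requirement, not a formula copied from the slide.

$$
R_n(\mathcal{A}) = \inf_{\text{strategies}} \ \sup_{z_1,\dots,z_n \in [0,1]^d} \left( \mathbb{E}\Big[\sum_{t=1}^n a_t^\top z_t\Big] - \min_{a \in \mathcal{A}} \sum_{t=1}^n a^\top z_t \right),
\qquad
R_n^{C} = \sup_{\mathcal{A} \subset \{0,1\}^d} R_n(\mathcal{A}).
$$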
There are many categories of adversaries. In this talk I'm going to assume the worst possible adversary, and it will work fine: even without any restriction on the adversary, I will be able to make strong statements. >>: [indiscernible] if the adversary were a natural process, then the [indiscernible] could be in a sense better. >> Sebastien Bubeck: Absolutely. Absolutely. And this is a very interesting direction. For instance, if the z_t are i.i.d., then you can prove better bounds. One interesting direction is to get those better bounds while also keeping the worst-case guarantee, the best of both worlds, but that's a different topic. So that's the combinatorial setting. The other one is the geometric setting, which goes like this. Let me recall the definition of a polar. If I have a subset X of R^d, the polar of X is the set of points in R^d whose inner product with every x in X is at most 1 in absolute value. So if X is the unit ball of some norm, then its polar is just the unit ball of the dual norm; that's exactly the definition of the dual norm. If X is the Euclidean ball, then its polar is the Euclidean ball as well. Why is this interesting? Because it gives a normalization. In the geometric setting I have some action set, with no assumption on it, and the adversary plays in the polar of the action set. That tells me the instantaneous loss is always between -1 and 1. Before, the normalization was that z_t lies in the hypercube [0,1]^d; here the normalization is that the adversary plays in the polar. This is not of particular interest for applications, but it gives a natural and simple normalization.
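Written out, the polar and the normalization it buys are as follows; the ℓ1/ℓ∞ pair is an extra standard example, not one given in the talk.

$$
X^{\circ} = \{\, z \in \mathbb{R}^d : |\langle x, z \rangle| \le 1 \ \text{ for all } x \in X \,\}, \qquad
(B_2^d)^{\circ} = B_2^d, \qquad (B_1^d)^{\circ} = B_\infty^d,
$$

$$
a_t \in \mathcal{A}, \ z_t \in \mathcal{A}^{\circ} \ \Longrightarrow\ |a_t^\top z_t| \le 1 .
$$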
In this case I'm interested in the minimax geometric regret R_n^G, which is the supremum over all action sets A, all subsets of R^d, with Z equal to the polar of A or a subset of it, of the same minimax regret. Now some applications. I think the linear bandit problem is really a fundamental problem, as fundamental as linear regression or linear optimization. Right now we only have a few applications, but I think in the next years there will be many more. Let me tell you about the applications we have right now. The first one is contextual bandits, and I'm using the model of Li, Chu, Langford and Schapire. Assume that you have N ads and you are running a website, and every time a user comes you have to show him one of the ads. When the user comes, he arrives with certain contextual information. This contextual information gives rise to a set of points in R^d, which are features for each ad. So a_{i,t} is a point in R^d, the feature vector associated with the i-th ad when the t-th user arrives. I have this feature vector, and I assume that my payoff for showing the i-th ad to the t-th user is a function of the inner product between the feature vector and some z_t, where z_t is unknown. And z_t also depends on the user. It depends on the context. It may depend on some randomness. It depends on many things. What I observe is just this inner product, or maybe a transformation of it. If I show him ad i, I observe the inner product of a_{i,t} and z_t, but I don't know what would have happened if I had shown him ad j. But because of the Euclidean structure I can infer something; how much can I infer? That's the bandit problem. Let me just say one thing here: this is a linear bandit problem where the action set changes over time. This is my action set.
It's changing over time, and some of the strategies that I will describe do not work in this case; some of them do. That's one application. Another one is maybe slightly more theoretical, but I think it could have plenty of applications. I don't want to spend too much time on it, but I think many people in the room know what an MDP is. An episodic loop-free MDP is just an MDP with no loops in the state-action space, so you always move forward, basically, you stop at some point, and then you start over. That's an episodic loop-free MDP. Add bandit information, meaning that you only observe what happens in the state you are in: you take an action and observe the reward, but you don't know what would have happened if you had taken another action. It's very natural; zillions of problems can be modeled like that, and really many problems can be modeled as MDPs. In this NIPS 2013 paper, they show that basically episodic loop-free MDPs are just combinatorial semi-bandits. It's a one-to-one mapping and it's computationally efficient, so solving combinatorial semi-bandits allows you to solve this very wide class of problems. There is also something similar in another paper, from 2012, with a reduction to linear bandits. The last application shows, I think, the generality of the problem, and to my knowledge it has not really been used in practice. Consider your favorite linear program, whatever it is, for instance one from a real application such as an inventory problem. You want to minimize c times x subject to some constraints. In linear programming you know c and you know A. Now what is the linear bandit problem whose action set corresponds to these constraints, that is, the set of x such that Ax is smaller than b?
You are solving a sequence of linear programs like this, but with a cost vector that changes over time, and you don't really know which cost vector you are optimizing at a given time. In my opinion that's very meaningful. Think of an inventory problem. You don't know exactly what tomorrow's cost vector will be. You may have some idea, because you have past information and you have learned about the processes, but you don't know exactly what's going to happen tomorrow. So this, in my opinion, is a better model of what happens in practice than solving one big LP once and for all. You are probably better off solving a sequence of LPs with some robustness to how the cost vector is going to change. Yes? >>: [indiscernible] do you assume that A and b are known? >> Sebastien Bubeck: Yes, absolutely. The constraints are known; that makes sense. You know what the constraints are, right? >>: If [indiscernible] change you can have some cost for violating the constraints, not so [indiscernible] >> Sebastien Bubeck: Yes. This is related to the first model; a changing action set is definitely something meaningful, I agree. Any questions on the applications? So the back edge [indiscernible] is also a [indiscernible] one. I'm not going to come back to applications. Let's go into the geometric setting, where we want to understand the order of magnitude of this quantity under bandit feedback and which strategies achieve it. Here is a little bit of the history of the geometric setting. The first case considered was in the seminal paper by Auer, Cesa-Bianchi, Freund and Schapire in 1998, where the action set is the simplex, a very simple action set, and the regret bound is of order square root of dn. Remember that d is the dimension and n is the time horizon. I also put my 2009 paper with Jean-Yves there, because they could only prove square root of dn log d, and we removed the log. Okay.
That's not very important; we will not care about logs in this talk. So that was 1998. Then, four years later, the first real paper introducing the linear problem was Auer's paper in 2002, and he considered only the case where z_1, ..., z_n is an i.i.d. sequence. If you have an i.i.d. sequence you are in known territory. You can do many, many things; in some sense it's not such a difficult problem. He proved a d^{5/2} square root n regret bound. Two years later Awerbuch and Kleinberg showed an n^{2/3} regret bound, only in the oblivious case, which goes back to your question: oblivious means the adversary does not react to the player; it's just a fixed sequence z_1, ..., z_n. In the same year McMahan and Blum showed an n^{3/4} regret bound assuming nothing about the adversary. This was the first general regret bound, with only n^{3/4}. When we don't get square root n, I don't write the dependency on d, because, as you can see, you can eventually get square root n, so n is the most important parameter: n is the number of times you are going to play. But d is also very important. Think of the contextual bandit application, where d is the size of the feature space. The difference between d^{5/2} square root n and d square root n, which we get at the end, can be a huge difference in your regret. You could move from an average regret of order, I don't know, 0.1 with d^{5/2}, to 0.001 with just d if d is of order 1000, so you can do much, much better when you improve the dependency on d. Anyway, two years later they finally proved n^{2/3} without the oblivious assumption. Again two years later, in 2008, Dani, Hayes and Kakade, in a seminal paper, got the first square root n regret bound without any assumption on the adversary: they got d^{3/2} square root n, and they showed that the best you can hope for is d square root n.
In the same year there was an important paper by Abernethy, Hazan and Rakhlin, where they showed d^{3/2} square root n with a computationally efficient algorithm. I will come back to that. Then four years later, so two years ago, we finally proved with Nicolo and Sham the optimal regret bound, d square root n; it is optimal because of the lower bound of Dani, Hayes and Kakade, and that's what I'm going to describe. It took 10 years to get there, but now we have the optimal bound. Sadly, it's not computationally efficient, and at the end of the talk I will show you a new idea for a computationally efficient strategy. Let's get into it. Yes? >>: What is special about the geometric setting? You are saying that all of this work considered the geometric setting as opposed to the combinatorial one. Why is that significant? >> Sebastien Bubeck: If you have an algorithm for the geometric setting, you immediately have an algorithm for the combinatorial setting. >>: So it's strictly more general. >> Sebastien Bubeck: It's more general. The catch is this: in the geometric setting you have the normalization that I play in my action set and you play in the polar. In the combinatorial setting, I play in the action set and the adversary does not play in the polar; it plays in the hypercube. So there is a fixed constraint on the adversary which has nothing to do with my constraint, and potentially you could exploit that. That's what I'm going to do: I'm going to show that in the combinatorial setting you can do better than viewing it as a geometric problem. That's going to be the key, and it will be the second part of the talk. This is the most important slide. We want to estimate the unseen. We only observe the inner product of a_t and z_t, but we would like to know what would have happened if we had selected another action. We observe only one real value and we want, basically, d real values. How can we do that?
There is a well-known technique: importance sampling. How does it work? You play a_t at random: you have a probability distribution p_t supported on A, you draw your action a_t from p_t, and using this you can build an unbiased estimate z̃_t of z_t with this formula. Σ_t is the second moment matrix of my distribution, the expectation of the outer product a a^T when a is drawn from p_t. I'm going to assume that it is invertible. If it's not, you can just take the pseudo-inverse and that works, but let's say it's invertible. So z̃_t = Σ_t^{-1} a_t a_t^T z_t. The first thing to verify is that I can indeed compute this. a_t^T z_t is the real number that I observe; that's the value I get. Σ_t^{-1} I can compute, since it depends only on p_t, and a_t I know, since it's what I played. So I can compute this quantity, even though I cannot compute z_t itself: I never observe z_t, only this inner product. Now let's verify that it is unbiased. I want to show you that the expectation of z̃_t, when a_t is drawn from p_t, is equal to z_t. With just one real number you get an unbiased estimate of the entire vector. Let's see. z̃_t is just this formula, Σ_t^{-1} a_t a_t^T z_t. What is random in this quantity when I draw a_t from p_t? Only a_t a_t^T, and the expectation of a_t a_t^T is Σ_t by definition. So we have Σ_t^{-1} Σ_t z_t, which is z_t. That's it; that's the most important slide. Yes? >>: But if p_t is independent of z_t it's not going to be very efficient. It's going to cost you a lot to make this [indiscernible] >> Sebastien Bubeck: In what sense? Are you thinking of controlling the variance?
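The unbiasedness argument can be checked numerically on a finite action set by computing the expectation exactly, as a weighted sum over actions. This is a sketch under assumptions: the random Gaussian action set, the uniform p_t, and the helper names `second_moment` and `loss_estimate` are hypothetical, and `np.linalg.solve` assumes Σ_t is invertible (`np.linalg.pinv` would be the pseudo-inverse variant mentioned in the talk).

```python
import numpy as np

def second_moment(actions, probs):
    """Sigma = E_{a ~ p}[a a^T] for a finite action set (one action per row)."""
    return np.einsum("k,ki,kj->ij", probs, actions, actions)

def loss_estimate(a, observed_loss, sigma):
    """z_tilde = Sigma^{-1} a * (a^T z); only the observed scalar a^T z is used."""
    return np.linalg.solve(sigma, a) * observed_loss

rng = np.random.default_rng(0)
actions = rng.normal(size=(6, 3))   # hypothetical finite action set in R^3
probs = np.full(6, 1 / 6)           # sampling distribution p_t
z = rng.normal(size=3)              # adversary's vector, never observed directly
sigma = second_moment(actions, probs)

# Exact expectation over a_t ~ p_t: sum_k p_k * z_tilde(a_k)
expected = sum(p * loss_estimate(a, a @ z, sigma) for a, p in zip(actions, probs))
print(np.allclose(expected, z))     # True
```

Each individual estimate can be far from z; only its average under p_t recovers z exactly, which is why the variance question comes next.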
>>: [indiscernible] exploring without feedback because [indiscernible] is independent of… >> Sebastien Bubeck: Yes, I see what you mean, but p_t depends on z_1, ..., z_{t-1}. Again it comes back to the idea that if there is something to learn, then I am learning it. >>: It's not written that p_t depends on z_1 to z_[indiscernible] >> Sebastien Bubeck: No, I didn't write it, and that's a key point. The way I choose my action depends on everything I observed in the past, all the inner products. In the next slide I will tell you how to choose p_t; right now I am just saying that there is a p_t. The other question, which anyone who has looked at importance sampling knows is the real issue: this is unbiased, very good, but what about the variance? If it's just unbiased, you have said nothing. We can control the variance, and these two inequalities give you everything. If Auer had known this in 2002, he would have proved almost everything that I'm going to tell you. Here is the variance control. I'm going to control the variance in the norm induced by my own sampling. It's not obvious why I would do that, but I'm telling you this is a good quantity to look at. I look at z̃_t in the quadratic form given by Σ_t, so this is a variance [indiscernible], and I want to control it in expectation. What is the expectation of z̃_t^T Σ_t z̃_t? Look at what z̃_t is: a real number times a vector. Because I'm in the geometric setting, that real number, a_t^T z_t, is at most one in absolute value; it appears squared, so I can upper bound it by one and remove it. Now what do I have? Σ_t is symmetric, so its inverse is symmetric, and when I transpose Σ_t^{-1} a_t I get a_t^T Σ_t^{-1}. So I have a_t^T Σ_t^{-1} Σ_t Σ_t^{-1} a_t.
One of the Σ_t^{-1} cancels and I get a_t^T Σ_t^{-1} a_t. I did almost nothing. Now I just note that this is the same thing as the trace of Σ_t^{-1} a_t a_t^T: it is a real number, a real number x equals the trace of x, and the trace of AB equals the trace of BA. The trace is linear, so the expectation can go inside, and I have the expectation of a_t a_t^T, which by definition is Σ_t. So I get the trace of Σ_t^{-1} Σ_t, the trace of the identity, which is d. And this is as tight as it gets, right? The variance is controlled by d, just the dimension, and this is what allows us to get a square root n regret bound instead of the n^{2/3} of Awerbuch and Kleinberg. I'm going to use this estimate z̃_t in the entire talk [indiscernible]. Now I'm going to tell you how to choose p_t, because right now I still don't have a strategy. Here is what we propose. Let's assume for now that A is finite. If A is finite, I define Exp2, a certain parameterized distribution p_t which depends on the past. It goes like this. Let's not care about gamma and mu for the moment. The probability of selecting action a is proportional to the exponential of minus some learning rate eta times the sum for s = 1 to t-1 of z̃_s times a. Here z̃_s times a is my estimate of the loss I would have suffered if I had played action a at time step s; I can reason like this now. Summed from time 1 to time t-1, that's my estimate of the cumulative loss of a. My probability of playing action a is smaller when this is bigger: if I estimate that I would have had a big loss, then I don't want to play this action.
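Both steps of the variance argument can be checked numerically: the trace identity E[a^T Σ^{-1} a] = d holds exactly, and dropping the factor (a^T z)^2 ≤ 1 gives E[z̃^T Σ z̃] ≤ d. A sketch under assumptions: the random action set and Dirichlet weights are hypothetical, and z is rescaled so that |a^T z| ≤ 1 on every action, which is the geometric-setting normalization (z in the polar).

```python
import numpy as np

def variance_term(actions, probs, z):
    """E_{a~p}[z_tilde^T Sigma z_tilde] with z_tilde = Sigma^{-1} a (a^T z)."""
    sigma = np.einsum("k,ki,kj->ij", probs, actions, actions)
    total = 0.0
    for a, p in zip(actions, probs):
        z_tilde = np.linalg.solve(sigma, a) * (a @ z)
        total += p * (z_tilde @ sigma @ z_tilde)
    return total

def trace_identity(actions, probs):
    """E_{a~p}[a^T Sigma^{-1} a], equal to tr(Sigma^{-1} Sigma) = d."""
    sigma = np.einsum("k,ki,kj->ij", probs, actions, actions)
    return sum(p * (a @ np.linalg.solve(sigma, a)) for a, p in zip(actions, probs))

rng = np.random.default_rng(1)
d = 4
actions = rng.normal(size=(10, d))     # hypothetical finite action set in R^4
probs = rng.dirichlet(np.ones(10))     # arbitrary sampling distribution p_t
z = rng.normal(size=d)
z /= np.max(np.abs(actions @ z))       # enforce |a^T z| <= 1 for every action
print(round(trace_identity(actions, probs), 6))      # 4.0
print(variance_term(actions, probs, z) <= d + 1e-9)  # True
```

Note that the bound does not depend on p_t at all, which is what lets the exploration distribution be chosen freely later.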
That makes total sense, so I play something proportional to this, and I will tilt it a little. People in the room who are used to bandits know that it's important to do a certain amount of exploration. So with probability 1 minus gamma I play according to these exponential weights, and with probability gamma I play according to an exploration distribution mu supported on the action set. Yes? >>: [indiscernible] related to Exp3? >> Sebastien Bubeck: Yes, of course. Exp3 is this when the action set is the simplex; sorry, I mean when A is the canonical basis. If A is the canonical basis, this is Exp3. I don't want to get into why I call it Exp2; there is a reason. [laughter] Let's leave it at that. Yes? >>: [indiscernible] amount of exploration because you assume [indiscernible] horizon? >> Sebastien Bubeck: Exactly, yes, absolutely. I assume that I know the horizon. Everything could be done without knowing the horizon: I would use a gamma_t and an eta_t, the proof would be more complicated, but everything would work. This is not critical. Now I need to tell you what mu is. This mu is going to be critical. I want to go quickly over this. Dani, Hayes and Kakade use a barycentric spanner for mu, which gives a regret bound of d square root of n log |A|, where |A| is the cardinality of A. In 2009 Cesa-Bianchi and Lugosi took mu uniform over the action set. You can imagine that uniform over the action set is going to be very bad in certain cases. Let me just draw this picture. Suppose my action set comes from a grid graph like this: I want to go from this point to this one, and what I can select are increasing paths like this. If I select a path uniformly at random to explore, then the probability that I visit this edge is exponentially small, of order 1 over 2^m for an n by m grid.
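The Exp2 sampling rule just described can be sketched as follows for a finite action set. The function name, the toy cumulative estimates, and the uniform mu are hypothetical placeholders; uniform mu is exactly the choice the talk warns can be bad, and it is used here only to show the mixing step. With A equal to the canonical basis, this reduces to Exp3-style weighting.

```python
import numpy as np

def exp2_distribution(actions, cum_loss_est, eta, gamma, mu):
    """Exp2 sampling distribution over a finite action set (one action per row).

    p(a) = (1 - gamma) * exp(-eta * <a, L>) / normalizer + gamma * mu(a),
    where L = sum_{s<t} z_tilde_s is the cumulative loss estimate.
    """
    scores = -eta * (np.asarray(actions, float) @ np.asarray(cum_loss_est, float))
    scores -= scores.max()                  # shift by the max for numerical stability
    weights = np.exp(scores)
    return (1 - gamma) * weights / weights.sum() + gamma * np.asarray(mu, float)

# Canonical basis of R^3, i.e. the Exp3 special case.
A = np.eye(3)
p = exp2_distribution(A, cum_loss_est=[0.0, 2.0, 5.0], eta=0.5, gamma=0.1,
                      mu=np.full(3, 1 / 3))
rng = np.random.default_rng(0)
k = rng.choice(len(p), p=p)                 # index of the action a_t to play
print(p, A[k])
```

Subtracting the maximum score does not change the normalized weights; it only avoids overflow when eta times the cumulative estimates becomes large.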
Uniform exploration in the combinatorial setting, or in general for an arbitrary action set, is a bad idea; you don't want uniform because of examples like this. But if there is enough symmetry in some sense, you can hope that it will work, and they show that for certain action sets you actually get square root of dn log |A|. What we propose is a distribution based on a result from convex geometry called John's theorem, and it gets you square root of dn log |A| for every action set, which is optimal. If you just do a discretization, you get d square root n for the geometric setting. Let me recall John's theorem. It is very well known in convex geometry; you open any book on the subject and it's on page 5 or something like that. You have a set of points in R^d and you look at the ellipsoid of minimum volume that contains it. Let's call it E, and let's say that E is the unit ball for some scalar product; we can always assume that. John proved that there are contact points between my set of points and the ellipsoid, in fact M contact points, with a distribution on them that gives an approximate orthogonal decomposition. I don't want to spend more time on this. The key point is that we have these contact points between the ellipsoid and the action set, we have a distribution on them, and we use it as our exploration distribution. The issue is that this is not computationally efficient. It's NP-hard to compute John's ellipsoid. You can compute a square root d approximation, but then you lose the optimal regret bound. Even sampling from exponential weights on a combinatorial structure is a very difficult problem: you have exponentially many actions and you want to sample from the exponential weights distribution. We don't know how to do that in general.
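For reference, here is John's theorem in the form it is usually stated, after an affine change of coordinates maps the minimum-volume ellipsoid enclosing a finite, full-dimensional A to the Euclidean unit ball B_2^d, followed by the one-line computation that turns it into an exploration distribution. This is a sketch of the standard statement, not a transcription of the slide; the normalization μ(u_i) = c_i / d is the natural one implied by the trace computation.

$$
\exists\, u_1,\dots,u_M \in \mathcal{A} \cap \partial B_2^d,\ \ c_1,\dots,c_M > 0:
\qquad \sum_{i=1}^M c_i\, u_i u_i^\top = I_d, \qquad \sum_{i=1}^M c_i\, u_i = 0 .
$$

$$
\operatorname{tr}\Big(\sum_{i} c_i u_i u_i^\top\Big) = \sum_i c_i \|u_i\|_2^2 = \sum_i c_i = d
\quad\Longrightarrow\quad
\mu(u_i) = \frac{c_i}{d}, \qquad \mathbb{E}_{u \sim \mu}\big[u u^\top\big] = \frac{1}{d} I_d .
$$

Mixing this μ with weight γ into p_t makes Σ_t dominate (γ/d) I_d, so every direction is explored in a controlled way.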
For certain action sets we can do it. For instance, for the Birkhoff polytope, that is, matchings in a bipartite graph, the permanent approximation algorithm of Jerrum, Sinclair and Vigoda lets us actually simulate the exponential weights. But this is a difficult result for a very specific action set; certainly we have nothing general. There are plenty of computational difficulties, and my personal impression is that it's impossible to overcome them: this is not the way to get something computationally efficient. What I and other people propose is to use ideas from convex optimization. Now I'm going to take 7 minutes to describe an idea in convex optimization that should be better known. Let's do this quickly. Even if you don't care about bandits, what I'm going to say is relevant. Let's say we want to optimize a convex function f on R^d. Let's say it's a closed function, which is just a technical assumption, and that the L2 norms of its gradients are bounded by L. We want to find the minimum of this function over a ball, and Cauchy, in the 19th century, said: okay, let's do this. You are at a given point, you look at the gradient of the function, and you move in the opposite direction. This makes total sense. We fix a learning rate eta, so the update is X_{t+1} = X_t minus eta times the gradient of f at X_t. We want to optimize over our ball, so if we get out of the ball, we project back onto the ball. That's projected gradient descent; everybody should know it. Now, if I ask you: you have done all of this and you are at some point X_t, what do you believe the minimizer to be? You shouldn't answer X_t if f is just a convex function; you should answer the average.
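A sketch of projected gradient descent with averaging on the Euclidean ball, assuming a starting point x_1 = 0, (sub)gradients bounded by L in L2 norm, and the fixed step eta = R / (L sqrt(T)) tuned to a known horizon T. Under these assumptions, f at the average iterate is within R L / sqrt(T) of the minimum over the ball; the function names are illustrative.

```python
import numpy as np

def project_ball(x, R):
    """Euclidean projection onto the ball of radius R centred at 0."""
    norm = np.linalg.norm(x)
    return x if norm <= R else x * (R / norm)

def projected_gd(grad, d, R, L, T):
    """Projected (sub)gradient descent with averaging.

    grad returns a (sub)gradient with L2 norm at most L. Uses the step
    eta = R / (L sqrt(T)) and returns the average of x_1, ..., x_T.
    """
    eta = R / (L * np.sqrt(T))
    x = np.zeros(d)
    avg = np.zeros(d)
    for t in range(T):
        avg += (x - avg) / (t + 1)        # running mean of the iterates
        x = project_ball(x - eta * grad(x), R)
    return avg
```

Nothing in the step size or the guarantee involves d, which is the dimension-free property discussed in the talk.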
One over t times the sum, for s from 1 to t, of the X_s. What you can prove is that the gap between f at this average and the true minimum of f over the ball is bounded by R, the radius of the ball, times L, the bound on the gradients, divided by the square root of t. So what I want you to take away from this is that as t goes to infinity, I am able to optimize this function with gradient descent. The key point, and the reason we care about this, is that d does not appear. I told you we were optimizing in R^d, but there is no d here. For all we know, we could be in an arbitrary Hilbert space H. This is going to be relevant for machine learning applications. There will be a Banach space on the next slide; I prefer to warn you, but it will be a real thing. So in an arbitrary Hilbert space we can optimize a convex function over a ball. Okay, that's what this is saying, and that's good. What about a Banach space? What if we want to optimize over a ball in a Banach space? Why would we do that? Imagine you want to optimize over an L1 ball. In many machine learning applications you don't want to optimize over the [indiscernible] ball; you want to optimize over the L1 ball. Then gradient descent does nothing for you. If you were in L1, in infinite dimension, you couldn't do gradient descent; you would not optimize the function, and the rate would depend on the dimension. Gradient descent gives no guarantees, and if you think about it, it does not even make sense. Nabla f of X, the gradient of f, is an element of the dual space B star, not an element of B, so when you write X_t minus eta times the gradient of f at X_t, it doesn't make sense: X_t is in B and the gradient is in B star. This was realized 30 years ago, but some people forgot. Nemirovski and Yudin said: we have this issue, so what we are going to do is map X_t to the dual space. How do we map to the dual space? We use the gradient of some function phi.
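The type mismatch described in this passage, written out (a sketch; the projection notation \Pi_B is ours):

```latex
% Hilbert space H: \nabla f(x_t) is identified with an element of H, so this is well typed
x_{t+1} = \Pi_{B}\bigl(x_t - \eta\,\nabla f(x_t)\bigr)
% Banach space B: x_t \in B but \nabla f(x_t) \in B^*, so the same line mixes two spaces.
% Nemirovski-Yudin: first move x_t to B^* with a mirror map \phi, then take the step there
\underbrace{\nabla\phi(x_t)}_{\in B^*} \;-\; \eta\,\underbrace{\nabla f(x_t)}_{\in B^*} \;\in\; B^*
```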
You map to the dual, you take a gradient step in the dual, and you come back to the primal. That's mirror descent, which I'm going to describe now a little bit more formally. Let's come back to R^d, because this was just intuition. Now we want to optimize the function f in R^d. We have a function phi, defined on a superset D of X, where X is the set over which I want to optimize my convex function. So phi is a real-valued function on D, a superset of X, and what I'm going to do is the following. I'm at X_t. What does gradient descent do? It takes a gradient step, and if it goes out of X, it projects in the Euclidean distance. We saw that this will have a dependency on the dimension if, for instance, we're optimizing over the L1 ball. What we would like is something dimension independent, as if we were optimizing over an L2 ball. So imagine that we are in infinite dimension. We take X_t and go to the dual space using the gradient of phi. Now we are at the gradient of phi of X_t, and there we take a gradient step, so we have the gradient of phi of X_t minus eta times the gradient of f at X_t. This new dual point is the gradient of phi at some primal point, which we call W_{t+1}, and we come back to the primal. How do you come back to the primal? You use the gradient of phi star, the Fenchel dual of phi; this is well known in convex optimization. Now you are at W_{t+1}. Maybe you are outside of your constraint set, so you need to project, but of course you don't want to project in the L2 metric; this would be meaningless. What you project in is, in some sense, the metric induced by your potential phi: you project using the Bregman divergence associated to phi, and that gets you the point X_{t+1}. You do this iteratively, and you can show that this is going to optimize your function f, and it can be dimension independent on L1, for instance. It can also give you fast rates of convergence for SDP: first-order methods for SDP.
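The three steps just described (go to the dual with the gradient of phi, take the gradient step there, come back with the gradient of phi star and project with the Bregman divergence) become very concrete for one standard choice, assumed here for illustration: the probability simplex with phi the negative entropy. Then the gradient of phi is log x + 1, the gradient of phi star is exp(y - 1), and the Bregman (KL) projection onto the simplex is a renormalization. With eta = sqrt(2 log d / T) / G, where G bounds the sup-norm of the gradients, the average iterate is within G sqrt(2 log d / T) of the minimum, so d enters only logarithmically.

```python
import numpy as np

def entropic_mirror_descent(grad, d, eta, T):
    """Mirror descent on the simplex with phi(x) = sum_i x_i log x_i.

    Returns the average of the iterates x_1, ..., x_T.
    """
    x = np.full(d, 1.0 / d)
    avg = np.zeros(d)
    for t in range(T):
        avg += (x - avg) / (t + 1)
        y = np.log(x) + 1.0 - eta * grad(x)   # gradient step in the dual
        w = np.exp(y - 1.0)                   # back to the primal: W_{t+1}
        x = w / w.sum()                       # Bregman (KL) projection onto the simplex
    return avg
```

Here the whole update collapses to the familiar multiplicative rule x_i proportional to x_i exp(-eta g_i), which is one way to see that exponential weights is a special case of mirror descent.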
This is something people have been looking for for years, but it was actually invented 30 years ago. What you would take for SDP is phi to be the [indiscernible], but I won't go into this. The key for us, and for machine learning, is that this algorithm is robust to noise in the gradients. That's also why first-order methods are getting popular: you can use interior point methods, but interior point methods are not robust to noise, whereas this is robust to noise. So if instead of the gradient I have something noisy but unbiased, I can do the same thing. Now I'm going to use this idea to build a bandit algorithm. Here it goes. Here is the algorithm, which we call OSMD, online stochastic mirror descent. We have our action set A and its convex hull, and what we want is to play a distribution over A: we take an action at random from this distribution. The parameters of my algorithm are a [indiscernible] function phi, defined on a superset D of the convex hull of A, and what I call a sampling scheme, which is a mapping from the convex hull of A to the space of distributions on A such that any point x in the convex hull is mapped to a distribution whose expectation is x. Such a sampling scheme always exists. What I'm going to do is the following. Assume that at a certain time step I have this probability distribution P_t, which is associated, through the sampling scheme, to a point X_t in the convex hull of A. Now I'm at X_t and I do mirror descent, which you can write compactly like this. You take a gradient step in the dual: you take the gradient of phi at X_t, and since you are optimizing a linear function, your gradient is just Z_t, so you use Z tilde t, your estimate of the gradient, with a certain learning rate eta, and you come back to the primal using the Fenchel dual, which gives you the next point.
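The simplest instantiation of this template, sketched under our own choices: the action set is the canonical basis, its convex hull is the simplex, the sampling scheme plays e_i with probability x_i (so its mean is x), and phi is the negative entropy. The estimate puts the observed loss divided by x_i on the played coordinate, which is unbiased. Without the extra exploration term this is essentially an Exp3-style update; the names are hypothetical.

```python
import numpy as np

def unbiased_estimate(x, i, observed):
    """z_tilde = (observed / x_i) e_i; its expectation over i ~ x is z."""
    z_tilde = np.zeros_like(x)
    z_tilde[i] = observed / x[i]
    return z_tilde

def osmd_simplex(loss_vectors, eta, rng):
    """OSMD with A = canonical basis, negative entropy, sampling scheme x -> x.

    Only the loss of the played coordinate is observed (bandit feedback).
    Returns the total incurred loss and the final point x.
    """
    n, d = loss_vectors.shape
    x = np.full(d, 1.0 / d)
    total_loss = 0.0
    for z in loss_vectors:
        i = rng.choice(d, p=x)                 # sample a_t = e_i from P_t
        observed = z[i]                        # the only feedback: <a_t, z_t>
        total_loss += observed
        z_tilde = unbiased_estimate(x, i, observed)
        w = x * np.exp(-eta * z_tilde)         # dual step, then back via phi*
        x = w / w.sum()                        # Bregman (KL) projection
    return total_loss, x
```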
As I told you, if you get out of the convex hull, you project back using the Bregman divergence associated to phi. Now you have a point in the convex hull, and you use your sampling scheme to define the probability distribution P_{t+1}. That's the general template for our algorithm, and you can instantiate it with different phi and different sampling schemes pi. That's the idea. Are there any questions on this? You just do mirror descent, where you are trying to optimize linear functions; you don't have the real linear function, which would be Z_t, but you have the unbiased estimate Z tilde t, and you have a sampling scheme which allows you to move from a point in the convex hull to a distribution over the action set. What we proved in 2011 is this regret bound. The regret of this algorithm depends on the size of the action set measured through the Bregman divergence, so that's the first term, the diameter of the set measured with the Bregman divergence, plus a variance term where, instead of the variance in the norm induced by Sigma_t, it's a variance in the norm induced by phi. Let me take a moment to look at this variance term. What we did before with exponential weights is that we had control of the variance when it was measured in the norm induced by Sigma_t. But here, instead of Sigma_t, we have the Hessian of phi at X_t, and its inverse. What we want, if you think about it, is an inequality like this: if the Hessian of phi at x is lower bounded by the inverse of the covariance matrix of the sampling scheme at x, then you can upper bound this term by the one with Sigma_t, and you can upper bound the entire thing by d. Let me say that again. What we want is to run online stochastic mirror descent with a mirror map phi and a sampling scheme which are dual to each other in some sense: the Hessian of phi should be related to the covariance matrix of the sampling scheme at x. Of course, you can always get that inequality, because you can just blow up phi.
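The argument of this passage in formulas. This is a schematic form with the Hessian taken at x_t, as on the slide; the exact statement and constants are in the paper, and the notation \Sigma(x) for the second-moment matrix of the sampling scheme \pi(x), as well as the assumption |a^\top z| \le 1, are ours.

```latex
% Schematic OSMD regret bound: Bregman diameter term + variance term
R_n \;\le\; \frac{\sup_{a \in \mathcal{A}} \phi(a) - \phi(x_1)}{\eta}
\;+\; \frac{\eta}{2} \sum_{t=1}^{n}
\mathbb{E}\Bigl[\tilde z_t^{\top} \bigl(\nabla^2 \phi(x_t)\bigr)^{-1} \tilde z_t\Bigr]

% Duality condition between the mirror map and the sampling scheme
\nabla^2 \phi(x) \succeq \Sigma(x)^{-1},
\qquad \Sigma(x) = \mathbb{E}_{a \sim \pi(x)}\bigl[a a^{\top}\bigr]

% With \tilde z_t = \Sigma_t^{-1} a_t a_t^{\top} z_t, each variance term is at most d
\mathbb{E}\bigl[\tilde z_t^{\top} \Sigma_t \tilde z_t\bigr]
= \mathbb{E}\bigl[(a_t^{\top} z_t)^2\, a_t^{\top} \Sigma_t^{-1} a_t\bigr]
\le \operatorname{tr}\bigl(\Sigma_t^{-1} \Sigma_t\bigr) = d
```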
You can push it up. The issue is that you also have the diameter of the action set measured with the Bregman divergence, and that should remain small. So you have a tension between the two terms: you want this inequality while keeping the diameter small. We can do it in some cases; let me show you two. In this 2012 paper, for linear optimization on the Euclidean ball with bandit feedback, we recommend taking this phi, and the sampling scheme is very simple. You have the ball, and let's say I'm at X_t, an element of my ball B_2^d. What I'm going to do is the following. With probability equal to the norm of X_t, I play X_t divided by its norm, so I go all the way to the boundary. That makes sense: remember we are in a linear setting, so the further away I am from 0, the more I reduce the variance, if you think in terms of i.i.d. processes. So you want to move away from 0, and with probability equal to the norm of X_t, which is at most 1, I go all the way to the boundary. But then I have some probability mass left to do exploration. In a sense, if my algorithm tells me to play very close to the boundary, it means that the algorithm is confident about what to play. If it's a little bit away from the boundary, there is some uncertainty, and I use exactly this amount of uncertainty to do exploration: with the rest of the mass, which is one minus the norm of X_t, I play uniformly at random on the canonical basis, with a random sign. So it is doing exploration, but it is adaptively choosing the rate at which it should explore, which I think is a good idea. >>: [indiscernible] conditional on X_t [indiscernible]? Could there be a benefit in exploring not uniformly, but conditionally on being away from X_t? >> Sebastien Bubeck: No. I don't think so.
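A sketch of the ball sampling scheme described above, paired with a loss estimate that uses only the exploration rounds and rescales by d / (1 - ||x||) so that it stays unbiased. The estimator's form is our reconstruction for illustration (the slide is not in the transcript), and the mixing with an extra exploration parameter used in the paper is omitted; function names are hypothetical.

```python
import numpy as np

def ball_sample(x, rng):
    """Sampling scheme on the Euclidean ball B_2^d; its mean is exactly x.

    With probability ||x|| play x / ||x|| (on the sphere). Otherwise explore:
    play eps * e_I with I uniform on the coordinates and eps a random sign.
    Returns the action and a flag telling whether this was an exploration round.
    """
    d = x.shape[0]
    r = np.linalg.norm(x)
    if rng.random() < r:
        return x / r, False
    a = np.zeros(d)
    a[rng.integers(d)] = rng.choice([-1.0, 1.0])
    return a, True

def ball_estimate(x, a, explored, loss):
    """Unbiased loss estimate built from exploration rounds only."""
    if not explored:
        return np.zeros_like(x)
    d = x.shape[0]
    return d / (1.0 - np.linalg.norm(x)) * loss * a
```

Sampling and estimation both cost O(d) per round, in line with the linear complexity mentioned in the talk.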
Because the reason is... oh, maybe you could. This is only one direction. >>: [indiscernible] your distribution seems like it should be proportional to the distance from the point you are playing. You want to be away from [indiscernible] >> Sebastien Bubeck: Yes, but the issue is, remember, there is an adversary who could try to trick you into doing that. You have to be careful with that. Basically, the idea is that when you decide to do exploration, you should really mean it: really do exploration, and don't try to still do some exploitation. >>: You can still take the [indiscernible] of X_t [indiscernible] >> Sebastien Bubeck: Exactly. That's what I wanted to say. You can do this, yes. Instead of doing it on the canonical basis, you could take the hyperplane here and do uniform on this hyperplane. The nice thing is that this has computational complexity linear in d, and it gets you the square root of dn, so even better than before. And this is optimal; getting this right was an open problem. Now, on the hypercube we propose this function phi. It's weird looking, but I will come back to it in five minutes. It's the sum of the integrals of the inverse of the hyperbolic tangent. People usually prefer this equivalent form, the Boolean entropy: if I am at a point x, it is the sum over i of ((1 + x_i) log(1 + x_i) + (1 - x_i) log(1 - x_i)) / 2. But actually the proof goes by looking at it the first way, and that is the more natural way to look at it for me. The exploration distribution makes it simple. I have my cube, I am at a certain point here, and what I'm going to do is explode my point: instead of playing this point, I play this vertex, this one, this one, or this one. The idea is to do a sampling that uses the geometry of the set, that really uses all of the space there is in my set.
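A sketch of why this pairing fits the duality condition, under our reading of "exploding" a point: sample each vertex coordinate independently with P(a_i = 1) = (1 + x_i) / 2, so the mean is x and the covariance is diagonal with entries 1 - x_i^2. The Hessian of phi (sum of integrals of artanh) is diagonal with entries 1 / (1 - x_i^2), which is exactly the inverse covariance. The product-form sampling is an assumption for illustration; names are hypothetical.

```python
import numpy as np

def cube_sample(x, rng):
    """Explode x in (-1, 1)^d onto {-1, 1}^d with independent coordinates.

    P(a_i = 1) = (1 + x_i) / 2, so E[a] = x. Works elementwise on any shape.
    """
    return np.where(rng.random(x.shape) < (1 + x) / 2, 1.0, -1.0)

def phi(x):
    """sum_i of the integral from 0 to x_i of artanh(s) ds (Boolean entropy form)."""
    return np.sum(((1 + x) * np.log1p(x) + (1 - x) * np.log1p(-x)) / 2)

def phi_hessian_diag(x):
    # The derivative of artanh(x) is 1 / (1 - x^2).
    return 1.0 / (1.0 - x**2)

def cube_covariance_diag(x):
    # Var(a_i) = E[a_i^2] - x_i^2 = 1 - x_i^2 for a_i in {-1, 1} with mean x_i.
    return 1.0 - x**2
```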
Let me just say something about the paper by Abernethy, Hazan and Rakhlin. They have a computationally efficient strategy: you have an action set like this, they look at an ellipsoid which is contained in the set, and they do exploration uniformly along the axes of this ellipsoid. You see, the issue is that when you do that, you are confining yourself to exploration in a very, very small set. You are adapting to the geometry, but this is not good enough. What you want is really to explode the point and explore as far as possible, to reduce the variance, and in as many directions as possible. So this algorithm has computational complexity [indiscernible] in d and [indiscernible]. I showed you two examples in the geometric setting, the Euclidean ball and the hypercube, and they are the only two that I know of where we can get the optimal regret bound with polynomial-time computational complexity. Yes? >>: I have a question about the situation where [indiscernible]. So you are saying that you want to go as far away as possible to minimize variance? I buy it completely, but if I want to look at the next problem, moving from linear functions to other functions, like convex [indiscernible], that would be the worst thing to do, correct? >> Sebastien Bubeck: Yes. You could do that. But linear is much easier than convex. >>: So local ones have a chance of really extending to convex, but exploding has no chance. >> Sebastien Bubeck: Exactly. I guess if you believe that your linear model makes sense, you should exploit it as much as possible. Your linear model allows you, instead of doing this, to go as far as possible and still be the same in expectation, so you should use that opportunity, which is given to you by the model. But I agree, with a convex function you could not do that. All right. Let me very quickly go over the combinatorial setting. So this is the history. Many, many people looked at it, with various results.
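For contrast, a sketch of ellipsoid-axis exploration in the style of Abernethy, Hazan and Rakhlin, as we understand it: given a positive definite matrix H (in their method, the Hessian of a self-concordant barrier at x), pick an eigen-axis uniformly and a random sign, and play the endpoint x + eps e_i / sqrt(lambda_i) of the ellipsoid {y : (y - x)^T H (y - x) <= 1}. The estimate d * loss * eps * sqrt(lambda_i) * e_i is unbiased. The barrier itself is left out, H is simply an input, and the function names are hypothetical.

```python
import numpy as np

def dikin_sample(x, H, rng):
    """Explore along the axes of the ellipsoid {y : (y - x)^T H (y - x) <= 1}.

    H is assumed symmetric positive definite. Returns the played point y,
    the axis index i and the sign eps.
    """
    lam, E = np.linalg.eigh(H)
    i = int(rng.integers(len(lam)))
    eps = rng.choice([-1.0, 1.0])
    y = x + eps * E[:, i] / np.sqrt(lam[i])
    return y, i, eps

def dikin_estimate(H, i, eps, loss):
    """Unbiased estimate d * loss * eps * sqrt(lam_i) * e_i of the loss vector."""
    lam, E = np.linalg.eigh(H)
    return len(lam) * loss * eps * np.sqrt(lam[i]) * E[:, i]
```

The played points stay on a small ellipsoid around x, whereas the ball and hypercube schemes above push all the way to the boundary.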
Most of them were suboptimal, and the first optimal one was in 2010 by Koolen, Warmuth and Kivinen. They obtained optimal bounds, and then one year later we did the semi-bandit feedback, and we also added some new results for full information. What Koolen, Warmuth and Kivinen did is look at mirror descent with the negative entropy as the mirror map. They proved a d square root n regret bound. The open problem was whether or not you can achieve this with exponential weights. You can't. What we showed is that there exists an action set, a complicated subset of the hypercube, such that exponential weights, no matter which learning rate eta you give it, has a regret lower bounded by d to the 3/2 square root n, whereas mirror descent gets you d square root n. This is with full information. Note that this is completely different from the geometric setting. I don't know who asked the question about combinatorial versus geometric, but in the geometric setting I just showed you that exponential weights with the right tricks gets you the optimal regret bound, and here we can provably show that in the combinatorial setting you cannot get the optimal bound. The two settings are really different. Even some experts didn't see the difference between the combinatorial and geometric settings. It's not easy to understand the difference, but this shows it to you: exponential weights will not work in the combinatorial setting. The reason is really that in the geometric setting you have a natural normalization which allows you to basically bypass the geometry of the problem, whereas in the combinatorial setting you really have to think about the geometry of the action set. You cannot forget it. With semi-bandit feedback, life is much easier. Everything works.
It's very easy because what you observe is the first coordinate of a_t times the first coordinate of Z_t: if the first coordinate of a_t is active, equal to 1, you observe Z_t on this coordinate, so you can do this type of estimate. It is unbiased, and you have control of the variance in terms of this. I just want to show you this slide. Now this slide, which is important, is from a 2013 paper. We suggest a general type of potential function phi, derived from INF: given a function psi from the real line to the real line, the mirror map phi is a sum of integrals of the inverse of psi. Why is this interesting? Because we can show this type of regret bound, where the variance is controlled by this lambda_t, which is one over the derivative of the inverse of psi at X_t, and you get this type of control. Maybe it's not clear yet why this is interesting, but here it comes. What we can show is that with psi equal to the exponential, phi is the negative entropy; you can just compute it from this formula. This gives you another point of view on what the entropy is: it is this phi with psi equal to the exponential. Then we can prove this type of regret bound, where the maximal L1 norm within your action set appears. This is suboptimal because of the log factor, and if you take psi to be something polynomial, like 1 over x squared, you get this nicer bound. The nice thing is that with this point of view you get a proof of the optimal regret for the adversarial [indiscernible] semi-bandit which is [indiscernible], whereas the first proof that we had was 10 pages, because this is really the right point of view.
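A sketch of the two ingredients in this passage, under illustrative assumptions. First, the semi-bandit estimate z_i a_i / x_i, where x_i is the probability that coordinate i is active; it is unbiased because coordinate i is observed exactly when a_i = 1. Second, a numerical check that the INF-type mirror map built from psi = exp, the sum of integrals from 0 to x_i of log s, equals sum_i (x_i log x_i - x_i), that is, the negative entropy up to a linear term. Function names are hypothetical.

```python
import numpy as np

def semibandit_estimate(x, a, z):
    """z_tilde_i = z_i * a_i / x_i, with x_i = P(a_i = 1) > 0.

    a is the played 0/1 action; only coordinates with a_i = 1 are observed.
    """
    z_obs = np.where(a == 1, z, 0.0)      # what semi-bandit feedback reveals
    return z_obs / x

def inf_potential(x, psi_inv, n=100_000):
    """phi(x) = sum_i integral from 0 to x_i of psi^{-1}(s) ds (midpoint rule)."""
    total = 0.0
    for xi in x:
        h = xi / n
        s = (np.arange(n) + 0.5) * h
        total += h * psi_inv(s).sum()
    return total
```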
Now, the open problem with bandit feedback: with John's exploration we get d square root n in the geometric setting, and this gives you d squared square root n in the combinatorial setting, because there is a factor d difference in terms of scaling. We proved the lower bound d to the 3/2 square root n, so there is a gap of square root d, and I conjecture that this gap can be removed, and that the bound can actually be attained efficiently for reasonable action sets. The best efficient procedure right now is by Abernethy, Hazan and Rakhlin, which gets you d to the 5/2 square root n, and I will spend 2 minutes on a new idea. This has not been explored yet. It's just pushing to the end this idea of using all the space that you have in your action set. For theta in R^d, define p_theta to be the following distribution: it has a density with respect to the Lebesgue measure on R^d, and p_theta of x is proportional to the exponential of the inner product between theta and x, times the indicator that x is in my action set. This is a sort of canonical exponential family. Now you can show that for any point a in the interior of your action set there always exists a parameter, a natural parameter theta(a), such that a is the mean of the distribution p_{theta(a)}. So there is a duality going on; it's an exponential family. You have your action set, and you have R^d, which is basically the dual of your action set. I call this mapping, from an action a to the distribution with natural parameter theta(a), the canonical sampling scheme. For any action it gives you a distribution of this exponential form whose expectation is a, and the idea, which really makes sense, is to use online stochastic mirror descent with the mirror map phi given by the entropy of the canonical sampling scheme.
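A small worked case of the canonical sampling scheme, chosen by us for illustration: on the cube [-1, 1]^d the density proportional to exp(<theta, x>) factorizes across coordinates, and each one-dimensional factor on [-1, 1] has mean coth(theta_i) - 1/theta_i (the Langevin function). Finding the natural parameter theta(a) for a target mean a therefore reduces to d independent monotone root-finding problems, solved here by bisection. General convex action sets need log-concave sampling instead; names are hypothetical.

```python
import numpy as np

def langevin(theta):
    """Mean of the density proportional to exp(theta * s) on [-1, 1]."""
    theta = np.asarray(theta, dtype=float)
    small = np.abs(theta) < 1e-4
    safe = np.where(small, 1.0, theta)      # avoid division by 0; masked below
    return np.where(small, theta / 3.0, 1.0 / np.tanh(safe) - 1.0 / safe)

def natural_parameter(a, lo=-1e6, hi=1e6, iters=200):
    """Solve langevin(theta_i) = a_i coordinatewise by bisection, a in (-1, 1)^d.

    On the cube the canonical exponential family factorises, so each
    coordinate of theta(a) is found separately.
    """
    a = np.asarray(a, dtype=float)
    left = np.full_like(a, lo)
    right = np.full_like(a, hi)
    for _ in range(iters):
        mid = (left + right) / 2
        too_small = langevin(mid) < a       # the mean is increasing in theta
        left = np.where(too_small, mid, left)
        right = np.where(too_small, right, mid)
    return (left + right) / 2
```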
This algorithm can be implemented efficiently as soon as you can sample from log-concave distributions, and you can do that with the techniques of Lovász and Vempala. And the key idea, coming back to having the sampling scheme related to the Hessian of phi, is that if phi is the entropy of the canonical sampling scheme, then, because of the duality with exponential families, the Fenchel dual of phi is the log-partition function of your exponential family. You can show that the inverse of the Hessian of phi, that is, the Hessian of the Fenchel dual, is approximately the same thing as the covariance matrix of your canonical sampling scheme, so you get, with an inequality, the thing that I wanted. I'm hopeful that this will work, but it is not known yet. And that's it, and some references. I will stop here. Thank you. [applause] >> Ofer Dekel: Any more questions? >>: So have you tested this on [indiscernible] problem [indiscernible] >> Sebastien Bubeck: No, I haven't, but I'm hopeful somebody will. Let me tell you what I think will work. What will work is the semi-bandit setting. I didn't spend enough time on it, but this is really what would work. And I think one big opportunity is to identify which problems could be reduced to combinatorial semi-bandits. There is one paper at NIPS 2013 where they reduce [indiscernible] loop-free MDPs to combinatorial semi-bandits. I don't remember if they have experiments in this paper, but I think the algorithm described here will work, will do something. Exponential weights with [indiscernible], on the other hand, would not do anything; it's just theoretically interesting. >>: As far as I remember, the [indiscernible] algorithm samples from [indiscernible] distributions. Also, this is the thing that [indiscernible] mentioned is prohibitive. Do you have any idea of something that, even if it doesn't have the full bulletproof guarantee, would nevertheless work in practice?
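A one-dimensional numerical check of the exponential-family facts this argument relies on, in a setting chosen by us: for the density proportional to exp(theta * s) on [-1, 1], the log-partition function is log(2 sinh(theta) / theta); its first derivative is the mean and its second derivative is the variance. Both moments are computed by direct numerical integration and compared against finite differences; names are hypothetical.

```python
import numpy as np

def log_partition(theta):
    """log Z(theta), with Z(theta) = integral over [-1, 1] of exp(theta * s) ds."""
    if abs(theta) < 1e-8:
        return np.log(2.0)
    return np.log(2.0 * np.sinh(theta) / theta)

def trapezoid(values, h):
    """Composite trapezoid rule on a uniform grid with spacing h."""
    return h * (values.sum() - 0.5 * (values[0] + values[-1]))

def moments(theta, n=200_001):
    """Mean and variance of p_theta on [-1, 1] by numerical integration."""
    s = np.linspace(-1.0, 1.0, n)
    h = s[1] - s[0]
    w = np.exp(theta * s)
    Z = trapezoid(w, h)
    mean = trapezoid(s * w, h) / Z
    var = trapezoid((s - mean) ** 2 * w, h) / Z
    return mean, var

def derivative(f, t, h=1e-5):
    return (f(t + h) - f(t - h)) / (2 * h)

def second_derivative(f, t, h=1e-3):
    return (f(t + h) - 2 * f(t) + f(t - h)) / h**2
```

In d dimensions the same identities say that the gradient of log Z is the mean and its Hessian is the covariance matrix, which is the link to the Hessian of phi used in the talk.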
>> Sebastien Bubeck: Do something? Yes. I think what would work best, if you really have linear bandit feedback, is to do something like linear UCB. It's UCB where, basically, you are in a linear setting, so you have an ellipsoid for the confidence region around your true parameter, and then you optimize the inner product over this ellipsoid. For that you have robust optimization techniques, related to mirror descent actually; there are books about this. You can use robust optimization techniques to efficiently approximate linear UCB, and that will do something in practice, but it won't have the nice theoretical guarantees. I think one interesting direction, coming back to an earlier question, is to be in between something that assumes stochastic losses and something that is adversarial. What you want is that if the world is stochastic, you do as well as if you had assumed it was i.i.d., but you still want some security if there is an adversary trying to screw with you. I think one potential direction is to run linear UCB with a robust optimization technique, so that you can actually do it in practice on the computer, and at the same time have a few security checks to verify that you are indeed in the i.i.d. setting; then, if you observe that something does not behave as you wish, you can either stop or switch to one of the algorithms that I described here. That's something that would do something in practice. >> Ofer Dekel: Any more questions? Let's thank the speaker. [applause]
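A minimal sketch of the optimistic linear approach mentioned in the answer, written for losses (so optimism means a lower confidence bound) and simplified to a finite action set, where the optimization over the confidence ellipsoid reduces to enumerating the actions. The fixed radius beta, the ridge parameter lam, and the function names are illustrative assumptions; the robust-optimization step needed for large or continuous action sets is not shown.

```python
import numpy as np

def lin_lcb(actions, loss_fn, T, beta=1.0, lam=1.0):
    """Optimistic linear bandit on a finite action set (loss version of linear UCB).

    Keeps a ridge estimate theta_hat = V^{-1} b and plays the action with the
    lowest optimistic loss a^T theta_hat - beta * ||a||_{V^{-1}}.
    Returns how many times each action was played.
    """
    K, d = actions.shape
    V = lam * np.eye(d)
    b = np.zeros(d)
    counts = np.zeros(K, dtype=int)
    for _ in range(T):
        V_inv = np.linalg.inv(V)
        theta_hat = V_inv @ b
        widths = np.sqrt(np.einsum("ij,jk,ik->i", actions, V_inv, actions))
        i = int(np.argmin(actions @ theta_hat - beta * widths))
        a = actions[i]
        loss = loss_fn(a)                  # observed <a, z>, possibly noisy
        V += np.outer(a, a)
        b += loss * a
        counts[i] += 1
    return counts
```

In theory the radius beta should grow slowly with the number of rounds; a constant is used here only to keep the sketch short.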