Evaluation of Machine Translation System

advertisement

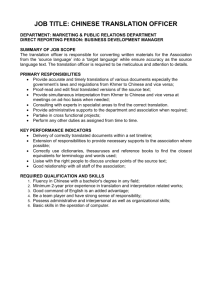

Automatic Evaluation of English-Indian Languages Machine Translation Manisha†, Madhavi Sinha++, Rekha Govil† †Apaji Institute, Banasthali University, Banasthali(Raj.)-304022 smanisha@jp.banasthali.ac.in, grekha@banasthali.ac.in ++ BIT(Extension Center of BISR, Ranchi) Jaipur(Raj.)-302017 madhavi_sinha@rediffmail.com Abstract : Machine Translation(MT) refers to the Amongst many challenges that Natural Language use of a machine for performing translation task which Processing(NLP) presents the biggest is the inherent converts text in one Natural Language(NL) into another ambiguity of natural language. In addition, the Natural Language. Evaluation of MT is a difficult task linguistic diversity between the source and target because there may exist many perfect translations of a language makes MT a bigger challenge. This is given source sentence. Human evaluation is holy grail particularly true of languages widely divergent in their for MT evaluation, but due to lack of time and money it sentence structure such as English and Indian is becoming impractical. In past years many automatic languages. The major structural difference between MT evaluation techniques have been developed, most English and Indian languages is that English follows of them are based on n-gram metric evaluation. In this structure as Subject-Verb-Object, whereas, Indian paper authors are discussing various problems and languages solutions for the automatic evaluation of English-Indian morphology, relatively free word order, and default Languages MT because all these techniques can not be sentence structure as Subject-Object-Verb[3,4]. are highly inflectional, with a rich applied as it is in evaluating English-Indian language As is recognized the world over, with the current state MT systems due to the structural differences in the of art in MT, it is not possible to have fully automatic, languages involved in the MT language pair. high quality, and general-purpose Machine Translation. 1. The major cause being need to handle ambiguity and INTRODUCTION the other complexities of NLP in practical systems. The word ‘Translation’ refers to transformation of one language into other. MT means automatic translation Evaluation of a MT system is as important as the MT of text by computer from one natural language into itself, answering the questions about the accuracy, another natural language. Work on Machine Translation fluency and acceptability of the translation and thus started in the 1950s after the second world war. The artifying the underlying MT algorithm. Evaluation has Georgetown experiment in 1954 involved fully long been a tough task in the development of MT automatic translation of more than sixty Russian systems because there may exist more than one correct sentences into English. The experiment was a great translations of the given sentence. The problem with success and ushered in an era of machine translation natural language is that language is not exact in the way research. Today there are many software available for that mathematical models and theories in science are. translating natural languages between themselves[1,2]. Natural language has some degree of vagueness which 1 makes it hard in MT to put objective numbers to it for Human evaluation is the holy grail for the evaluation of the evaluation. machine translation system, however it is time consuming, costly and subjective i.e. evaluation results 2. VARIOUS METHODS OF EVALUATION vary from person to person for the same sentence pair. Evaluation is needed to compare the performance of The simplest evaluation question to a human expert can different MT engines or to improve the performance of be “Is the translation good?” (Yes/No). This answer a specific MT engine. Although there is a general can agreement about the basic features of evaluation of MT, translation but not a detailed one. Given below is a there are no universally accepted and reliable methods suggestive list of criteria on which human can evaluate and measures for the same. MT evaluation typically the MT. The list has been typically drawn for English- includes features not present in evaluation of other NLP Hindi language pair. systems. These are typically the quality of the 1. Gender/Number is properly translated or not ? raw(unedited) translation - intelligibility, accuracy, 2. Tense in the translated sentence is proper or not ? fidelity, appropriateness and style/register and added 3. Voice of a sentence (i.e. active or passive) is features such as the usability for creating and updating give only the overall quality measure of the properly translated or not? dictionaries, for post editing texts, for controlling input 4. Use of proper noun in the translation language, for customization of the documents, the 5. adjective and adverb corresponding to the nouns extendibility to new language pairs and/or new subject and verbs domains, and cost benefit comparisons with human 6. The selection of words in the target language translation performance. 7. The order of words Three types of evaluation are recognized for MT[5,6] : 8. Use of punctuation symbols (i) adequacy evaluation to determine the fitness of 9. stress on the significant part MT systems within a specified operational 10. maintaining the semantic of the source sentence context 11. Overall quality of the translation, which may (ii) diagnostic evaluation to identify limitations, include localization issues eg format of date, use errors and deficiencies, which may be corrected of colors etc. or improved (by the research team or by the (iii) developers) The other important issue for performance evaluation to assess stages of making the decision on weights to be given to each of system development or the above criterian to compute the final different technical human evaluation is score? Basically these weights are dependent on the nature of implementation. Adequacy evaluation is typically performed by the source and target languages e.g In Sanskrit potential of Language criteria 7 can be given less weight as users and/or purchasers systems(individuals, companies or agencies); diagnostic opposite to criteria 6. evaluation is the concern mainly of researchers and The scale of evaluation can not be binary(T/F). To developers; and performance evaluation may be better judge the quality of translation one can deploy a undertaken by either researchers/developers or by three point or five point scale with each of the above potential users. criteria, e.g. 2 4 Ideal But deploying human evaluation for assessing a MT 3 Perfect system is very expensive in terms of time and cost 2 Acceptable involved in annotating the MT output. Moreover, for a 1 Partially Acceptable more statistically significant result and elimination of 0 Not Acceptable subjective evaluation, human evaluation of each MT Figure 1 shows a screen shot of a web application output needs to be done by more than one evaluator, developed for human evaluation of MT(English-Indian making the cost of human evaluation prohibitive. All Languages) displaying the evaluation screen for the these problems created interest in automatic evaluation human expert: methods. The most commonly used automatic evaluation methods, BLEU[7] and NIST[8] are based on the assumption ‘The closer a machine translation is to a professional human translation, the better it is’. Both are based on n-gram matching approach. Some other methods are F-Measure and Meteor. The basic approach of these methods is described below: 1. IBM’s Bleu[7] - The Bleu metric is probably known as the best known automatic evaluation for Machine Translation. To check how close a candidate translation is to a reference translation, a n-gram comparison Metric is done between is designed from matching of both. candidate translation and reference translations. Main idea of BLEU includes : Exact matches of words Match against a set of reference translations for greater variety of expressions Account for adequacy by looking at word precision Figure 1 : Screenshot of a web application for human evaluation of MT. Account for fluency by calculating n-gram precision for n=1,2,3,4 etc. Weighted average of all the criterion can be used to No recall Final score is weighted geometric average of the find the overall measure of human evaluation. One can n-gram scores add other measures also e.g. Extendibility, Operational capabilities of the system and Efficiency of use etc. Weakness: Sentences framed by switching words with Calculate aggregate score over a large test set completely different meaning also get high score. 3 There are some modifications in the original BLEU as Calculating BLEU given below : Final BLEU Score is calculated as: N BLEU = BP.exp (∑ n=1 2. NIST[8] - developed by National Institute of BLEU’s Modified n-gram Precision for multiple candidates Machine translation focuses on sentence level Standards and Technology, the NIST scoring system evaluation i.e. only one sentence is evaluated at a time. (Doddington, 2002), For a block of text, first the n-gram scores are precision but it employs the arithmetic average of n- calculated for all candidates up to a number N. Then gram counts rather than a geometric average, and the n- n-gram counts for all the candidates are added and the grams in this case are weighted according to their sum is divided by number of candidate n-grams in information contribution, as opposed to just counting corpus. It is denoted by Pn them as in BLEU. The score represents the average is again based on n-gram information per word, given by the n-grams in the translation that match an n-gram of a reference in the the number of n-grams in segment i, in the translation being evaluated, with a matching reference co occurrence in segment i ∑ i reference set. NIST's brevity penalty penalizes very short translations more heavily, and sentences close in Pn = length to the references less than the BLEU brevity ∑ the number of n-grams in segment i, in the translation being evaluated i penalty. Weakness: Being based on the BLEU metric it has the same points. Brevity Penalty (BP) 3. Brevity Penalty was introduced to penalize candidates ‘maximum matching’ from Graph theory, subset of shorter than references. Its main purpose is to prevent co-occurences in the candidate and reference text are very short candidates form receiving too high score. counted in such a way that a token is never counted And if a candidate receives high score then it must twice. On the matching value a Recall and Precision is match the reference in length, in word choice and in defined, where word order. The formula for calculating Brevity Penalty Recall (Candidate|Reference)= F-Measure[5] (New York University) - uses is as follows: MMS(Candidate, Reference)/ |Reference| Precision(Candidate|Reference)= 1 if C > R MMS(Candidate, Reference)/ |Candidate| BP = A reward for longer matches is introduced, this reward e (1-R/C) if C <= R is more for larger matches thus taking care of the fluent measure of the translation. Here, C is total length of translation corpus and R is Final measure total length of reference corpus. In case there are & Recall. multiple references, R is the length of that reference 4. which is closest to the length of the candidate. Wordnet to calculate the use of synonym from the - harmonic mean of Precision Meteor[9] – uses one gram overlaps and uses reference text. It has a separate module to address 4 ordering which explains why higher n-grams are not Example 2. used. A penalty for reordering is calculated on how many chunks in the produced text need to be moved ES: Daman and Diu offers you refreshing holiday. around to get the reference text. C : ताज़गीभरी दे ता अवकाश द्वीप R1 : दमन एवं द्वीप आपके अवकाश Case Study : Evaluation of English to Hindi MT System एवं दमन है आपको । ताज़गीभरे बना दे ता है । R2 : दमन एवं द्वीप तुम्हारे अवकाश ताज़गीभरे बना दे ता है । R3 : दमन एवं द्वीप तुम्हारे अवकाश ताज़गीभरे बनाता है । By far BLEU is the most used evaluation strategy for BP = 1.0 , BLEU = 1.0000, M-BLEU=1.0000, HES = 0.1020 MT. BLEU is mainly designed for the evaluation of translations between language pairs coming from the same family like Spanish-English, French-English, Example 3. German-English etc.[7] and it works well in such cases. But in English-Indian Languages often there is no oneto-one mapping between the source language text and ES: It was rainning when we left for Goa. C : जब हम गोआ के लिए ननकिे यह बाररश हो रही थी। target language text[10]. So this method creates some R1 : जब हम गोआ के लिए ननकिे बाररश हो रही थी। times inadequate results. Here we present few examples of BLEU applied to English-Hindi MT, highlighting BP = 1.0 , BLEU = 0.4647, M-BLEU=0.5358 HES = 0.6430 inadequacy of BLEU alone for MT evaluation of English-Indian Languages. The sentences have been drawn from Tourism domain. Example 4. The notation used is : ES : English Language Sentence(source) ES: There are some portraits of the rulers of Jodhpur also displayed at Jaswant Thada. C : Candidate Sentence(translated sentence) C : वहां जोधपरु के शासकों के कुछ चित्र भी जसवन्त ठाडा पर R1, R2, R3 : Reference Sentences प्रदलशित ककये गये हैं । BP :Brevity Penalty R1 : BLEU : BLEU Score जोधपरु के शासकों के कुछ चित्र भी जसवन्त ठाडा पर प्रदलशित ककये गये हैं । जोधपरु के नरे शो की कुछ तसवीरे भी जसवन्त ठाडा पर M-BLEU : Modified BLEU Score R2 : HES : Human Evaluation Score, प्रदलशित की गयी हैं । R3 : Example 1. जसवन्त ठाडा पर जोधपरु के नरे शो की कुछ तसवीरे भी प्रदलशित की गयी हैं । BP = 1.0 , BLEU = 0.4901, M-BLEU=0.6692, HES = 1.000 ES: Daman and Diu offers you refreshing holiday. C : दमन एवं द्वीप आपको ताज़गीभरी छुट्टियााँ दे ता है । 3. DISCUSSION R1 : दमन एवं द्वीप आपके अवकाश ताज़गीभरे बना दे ता है । R2 : दमन एवं द्वीप तुम्हारे अवकाश ताज़गीभरे बना दे ता है । BLEU is mainly designed for the evaluation of R3 : दमन एवं द्वीप तम् ु हारे अवकाश ताज़गीभरे बनाता है । translations between the language pairs, where there is BP = 1.0 , BLEU = 0.3097, M-BLEU=0.3578, HES = 1.000 one to one mapping, but in English-Hindi translation 5 always it may not be the case. BLEU when applied to correct word but same word is not used in any of English-Hindi MT presents the following problems: the reference then this dictionary will give the word. It considers synonyms as different words, thus if candidate and reference sentences are using 2. Order checking is not done by BLEU, if shallow synonyms BLEU will score the translation parsing is included with the automatic tool then the low(Example 1 छुट्टियााँ, अवकाश). This is a tool can also evaluate the candidate on the basis of weakness in BLEU, not typical of English-Hindi order of the words. MT. 5. REFERENCES: It does not take care of changes in the order of 1. James Allen: Natural Language Understanding, (Benjamin/Cummings Series in Computer Science) Menlo Park: Benjamin/Cummings Publishing Company, 1987. 2. S Nirenburg, J Carbonell, M Tomita, K Goodman :Machine Translation: A Knowledge-Based Approach, Morgan Kaufmann Publishers Inc., San Francisco, CA, USA 1994. 3. Durgesh Rao, Machine Translation in India: A Brief Survey, NCST(CDAC) Mumbai, available at http://www.elda.org/en/proj/scalla/SCALLA2001/ SCALLA2001Rao.pdf 4. Salil Badodekar, Translation Resources, Services and Tools for Indian Languages, Computer Science and Engineering Department, IIT Mumbai, available at http://www.cfilt.iitbac.in/ Translationsurvey/survey.pdf 5. Joseph P. Turian, Luke Shen, and I. Dan Mela, Evaluation of Machine Translation and its Evaluation Proceedings of MT Summit IX; New Orleans, USA, 23-28 September 2003 6. Yasuhiro Akibay;z, Eiichiro Sumitay, Hiromi Nakaiway, Seiichi Yamamotoy, and Hiroshi G. Okunoz Experimental Comparison of MT Evaluation Methods: RED vs. BLEU, available at http://www.amtaweb.org/summit /MTSummit/FinalPapers/55-Akiba-final.pdf, MT Summit X was in Phuket, Thailand, September 1216, 2005. 7. K. Papineni, S. Roukos, T. Ward, and W. Zhu (2002) “BLEU:a Method for Automatic Evaluation of Machine Translation”. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics (ACL):311–318. Philadelphia. 8. G. Doddington (2002) “Automatic Evaluation of MachineTranslation Quality Using N-gram CoOccurrence Statistics”. In Human Language Technology: Notebook Proceedings:128–132. San Diego. 9. Banerjee Satanjeev, Lavie Alon, “METEOR: An Automatic Metric for MT Evaluation with Improved Correlation with Human Judgments”, different words in the sentences, which is very crucial in case of English-Hindi translation(Example 2, The BLEU score is much higher than Human score). Some times translation of each word is not required in output sentence for eg if the sentence is ‘It is raining’, and translation is ‘यह बाररश हो रही है’ , then translation of ‘It’ is not required here(Example 3) and evaluation method should consider it. 4. CONCLUSION This case study shows that theoretical and practical evidence that Bleu may not correlate with human judgment to the degree that it is currently believed to do for English-Hindi pair. Bleu score is not sufficient to reflect a genuine improvement in translation quality. We have further shown that it is not necessary to receive a higher Bleu score in order to be judged to have better translation quality by human experts. Automatic evaluation tools are based on the references only. The quality and quantity of references influence the results. Perhaps use of lexical resources along with these automatic tools will provide better scores in terms of validity. Following are some suggestions on the use of some lexical resources: 1. To deal with the synonym words , resource dictionary can be used which stores the synonym of each word then if candidate translation is using a 6 http://www.cs.cmu.edu/~alavie/papers/ BanerjeeLavie2005-final.pdf 10. Ananthakrishnan R, Pushpak Bhattacharyya, M. Sasikumar, Ritesh Shah, Some Issues in Automatic Evaluation of English-Hindi MT: more blues for BLEU in proceeding of 5th International Conference on Natural Language Processing(ICON-07), Hyderabad, India. 7