C. Combined Complex-Valued Artificial Neural Network


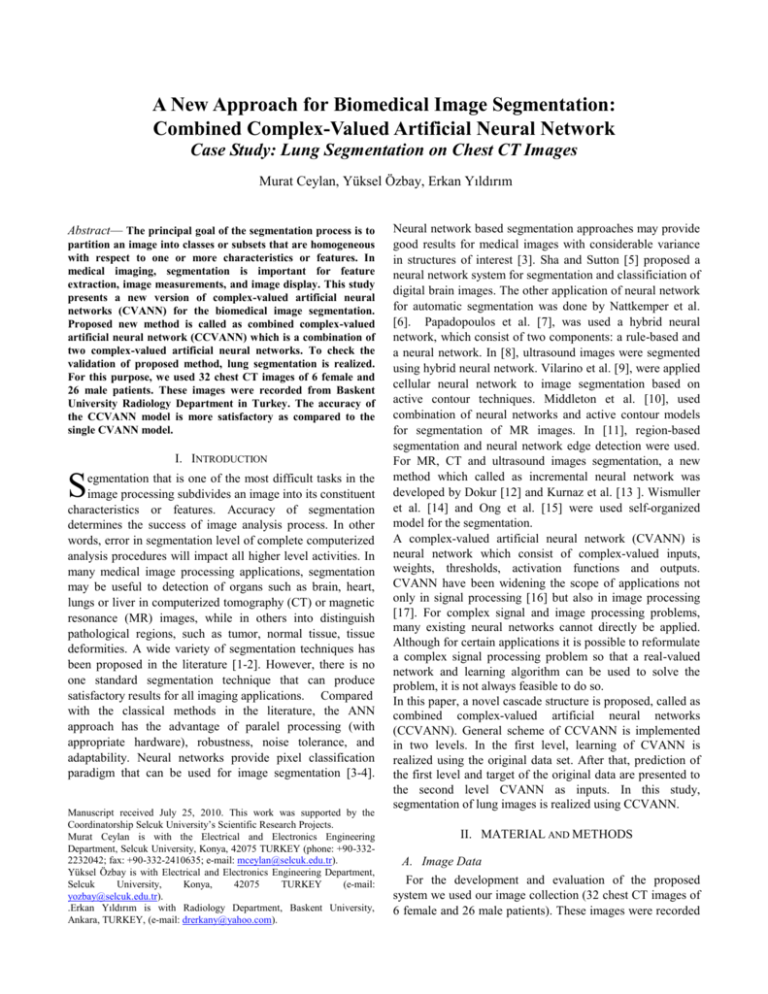

A New Approach for Biomedical Image Segmentation: Combined Complex-Valued Artificial Neural Network
Case Study: Lung Segmentation on Chest CT Images

Murat Ceylan, Yüksel Özbay, Erkan Yıldırım

Abstract: The principal goal of the segmentation process is to partition an image into classes or subsets that are homogeneous with respect to one or more characteristics or features. In medical imaging, segmentation is important for feature extraction, image measurements, and image display. This study presents a new version of the complex-valued artificial neural network (CVANN) for biomedical image segmentation. The proposed method, called the combined complex-valued artificial neural network (CCVANN), is a combination of two complex-valued artificial neural networks. To validate the proposed method, lung segmentation was performed. For this purpose, we used 32 chest CT images of 6 female and 26 male patients. These images were recorded at the Baskent University Radiology Department in Turkey. The CCVANN model achieves higher accuracy than the single CVANN model.

I. INTRODUCTION

Segmentation, one of the most difficult tasks in image processing, subdivides an image into its constituent characteristics or features. The accuracy of segmentation determines the success of the image analysis process. In other words, an error at the segmentation stage of a complete computerized analysis procedure affects all higher-level activities. In many medical image processing applications, segmentation is used to detect organs such as the brain, heart, lungs or liver in computerized tomography (CT) or magnetic resonance (MR) images, while in others it is used to distinguish pathological regions, such as tumors and tissue deformities, from normal tissue. A wide variety of segmentation techniques has been proposed in the literature [1-2]. However, no single standard segmentation technique produces satisfactory results for all imaging applications.
Compared with the classical methods in the literature, the artificial neural network (ANN) approach has the advantages of parallel processing (with appropriate hardware), robustness, noise tolerance, and adaptability. Neural networks provide a pixel classification paradigm that can be used for image segmentation [3-4].

Manuscript received July 25, 2010. This work was supported by the Coordinatorship of Selcuk University's Scientific Research Projects. Murat Ceylan is with the Electrical and Electronics Engineering Department, Selcuk University, Konya, 42075 TURKEY (phone: +90-3322232042; fax: +90-332-2410635; e-mail: mceylan@selcuk.edu.tr). Yüksel Özbay is with the Electrical and Electronics Engineering Department, Selcuk University, Konya, 42075 TURKEY (e-mail: yozbay@selcuk.edu.tr). Erkan Yıldırım is with the Radiology Department, Baskent University, Ankara, TURKEY (e-mail: drerkany@yahoo.com).

Neural network based segmentation approaches may provide good results for medical images with considerable variance in the structures of interest [3]. Sha and Sutton [5] proposed a neural network system for the segmentation and classification of digital brain images. Another application of neural networks to automatic segmentation was presented by Nattkemper et al. [6]. Papadopoulos et al. [7] used a hybrid neural network consisting of two components: a rule-based component and a neural network. In [8], ultrasound images were segmented using a hybrid neural network. Vilarino et al. [9] applied cellular neural networks to image segmentation based on active contour techniques. Middleton et al. [10] used a combination of neural networks and active contour models for the segmentation of MR images. In [11], region-based segmentation and neural network edge detection were used. For the segmentation of MR, CT and ultrasound images, a new method called the incremental neural network was developed by Dokur [12] and Kurnaz et al. [13]. Wismuller et al. [14] and Ong et al. [15] used self-organized models for segmentation.
A complex-valued artificial neural network (CVANN) is a neural network that consists of complex-valued inputs, weights, thresholds, activation functions and outputs. CVANNs have been widening their scope of application not only in signal processing [16] but also in image processing [17]. Many existing neural networks cannot be applied directly to complex signal and image processing problems. Although for certain applications it is possible to reformulate a complex signal processing problem so that a real-valued network and learning algorithm can be used to solve it, this is not always feasible. In this paper, a novel cascade structure is proposed, called the combined complex-valued artificial neural network (CCVANN). The general scheme of the CCVANN is implemented in two levels. In the first level, a CVANN is trained using the original data set. After that, the prediction of the first level and the target of the original data are presented to the second-level CVANN as inputs. In this study, segmentation of lung images is realized using the CCVANN.

II. MATERIAL AND METHODS

A. Image Data

For the development and evaluation of the proposed system, we used our own image collection (32 chest CT images of 6 female and 26 male patients). These images were recorded at the Baskent University Radiology Department in Turkey [17]. The collection includes 10 images with benign nodules and 22 images with malignant nodules. The average age of the patients is 64. Each CT slice used in this study is a grey-level image of 752 x 752 pixels.

B. Complex Wavelet Transform (CWT)

Wavelet techniques have been applied successfully to various problems in signal and image processing [18-19], and the wavelet transform is regarded as an important tool for the analysis and processing of signals and images. In spite of its efficient computational algorithm, the wavelet transform suffers from three main disadvantages.
These disadvantages are shift sensitivity, poor directionality and absence of phase information. The CWT overcomes these disadvantages [20]. Recent research on the development of CWTs can be broadly classified into two groups: redundant CWTs (RCWT) and non-redundant CWTs (NRCWT). The RCWT group comprises two closely related transforms, both denoted DT-DWT (dual-tree DWT-based CWT): Kingsbury's and Selesnick's versions [20]. In this paper, we used Kingsbury's CWT [20] for feature extraction from the image to be segmented.

C. Combined Complex-Valued Artificial Neural Network (CCVANN)

In this study, a complex back-propagation (CBP) algorithm has been used for pattern recognition. We first give the theory of the CBP algorithm as applied to a multilayer CVANN. Fig. 1 shows a CVANN model. The input signals, weights, thresholds, and output signals are all complex numbers. The activity $Y_n$ of neuron $n$ is defined as:

$$Y_n = \sum_{m} W_{nm} X_m + V_n \qquad (1)$$

where $W_{nm}$ is the complex-valued (CV) weight connecting neurons $n$ and $m$, $X_m$ is the CV input signal from neuron $m$, and $V_n$ is the CV threshold value of neuron $n$.

Fig. 1. CVANN model

To obtain the CV output signal, the activity value $Y_n$ is separated into its real and imaginary parts as follows:

$$Y_n = x + iy = z \qquad (2)$$

where $i$ denotes $\sqrt{-1}$. Although various output functions can be considered for each neuron, the output function used in this study is defined by the following equation:

$$f_C(z) = f_R(x) + i\, f_R(y) \qquad (3)$$

where $f_R(u) = 1/(1+\exp(-u))$ is the sigmoid function.

Summary of the CBP algorithm:

1. Initialization: Set all the weights and thresholds to small complex random values.
2. Presentation of input and desired (target) outputs: Present the input vectors X(1), X(2), ..., X(N) and the corresponding desired (target) responses T(1), T(2), ..., T(N), one pair at a time, where N is the total number of training patterns.
3. Calculation of actual outputs: Use the formula in (3) to calculate the output signals.
4. Adaptation of weights and thresholds: The weights and thresholds are adapted until the following condition is satisfied, at which point the algorithm is stopped and the weights and biases are frozen:

$$\sqrt{\sum_{p} \sum_{n=1}^{N} \left| T_n^{(p)} - O_n^{(p)} \right|^2} = 10^{-1} \qquad (4)$$

where $T_n^{(p)}$ and $O_n^{(p)}$ are complex numbers and denote the desired output value and the actual output value, respectively, of neuron $n$ for pattern $p$. The left side of (4) is thus the error between the desired output pattern and the actual output pattern. $N$ denotes the number of neurons in the output layer [21].

The general CCVANN model used in this study, which is a combination of two CVANNs, is illustrated in Fig. 2. The CVANNs were used at the first and second levels for complex-valued pattern recognition.

Fig. 2. CCVANN model

III. MEASUREMENT FOR PERFORMANCE EVALUATION

In this paper, we used an algorithm to evaluate the classification results of the CVANN outputs for training and test images, which include lung-region pixels and the pixels surrounding the lung region [17]. The number of correctly classified pixels in the segmented image (752x752) was calculated according to the following algorithm:

IF I_T - I_O = 0 THEN the number of correctly classified pixels (CCP) increases by 1
ELSE the number of incorrectly classified pixels (iCCP) increases by 1

where I_T and I_O are the target of the network and the actual output of the network, respectively. Finally, we measured the accuracy of the proposed methods using the following equation:

Accuracy (%) = (CCP / (CCP + iCCP)) x 100    (5)

IV. RESULTS AND DISCUSSION

In this paper, segmentation of the lung region was realized using the CWT-based CCVANN. The complex wavelet transform was used to reduce the size of the input matrix of the training and test images. In the proposed cascade structure, the feature vectors of the original CT images (752x752) were extracted using the CWT at the 2nd, 3rd and 4th levels. The sizes of the feature vectors were 188x188, 94x94 and 47x47 for the 2nd, 3rd and 4th levels, respectively.
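As a quick check of the arithmetic in this section, the following Python sketch computes the feature-map side length at each CWT level and the accuracy defined in (5). The function names (`feature_side`, `pixel_accuracy`, `accuracy_from_counts`) are illustrative and do not come from the original study. The sketch assumes that each CWT level halves the image side, which is consistent with the reported sizes, and that the target and the network output are already available as label images of equal shape; how the complex-valued outputs are mapped to pixel labels is not specified here.

```python
from typing import Tuple

import numpy as np


def feature_side(image_side: int, level: int) -> int:
    """Side length of the feature map, assuming each level halves the side."""
    return image_side // (2 ** level)


def accuracy_from_counts(ccp: int, iccp: int) -> float:
    """Accuracy (%) from the CCP and iCCP counts, as in Eq. (5)."""
    return 100.0 * ccp / (ccp + iccp)


def pixel_accuracy(target: np.ndarray, output: np.ndarray) -> Tuple[int, int, float]:
    """Count correctly (CCP) and incorrectly (iCCP) classified pixels.

    A pixel is counted as correct when target - output == 0, i.e. when the
    two label images agree at that position.
    """
    if target.shape != output.shape:
        raise ValueError("target and output must have the same shape")
    ccp = int(np.count_nonzero(target == output))
    iccp = int(target.size - ccp)
    return ccp, iccp, accuracy_from_counts(ccp, iccp)


if __name__ == "__main__":
    # Feature-map sides for a 752x752 slice at CWT levels 2, 3 and 4.
    print([feature_side(752, level) for level in (2, 3, 4)])

    # Toy 2x2 label images (hypothetical data, for illustration only).
    target = np.array([[1, 0], [1, 1]])
    output = np.array([[1, 0], [0, 1]])
    print(pixel_accuracy(target, output))
```

With the counts reported in Table II for image B10 at the 2nd level with the single CVANN (CCP = 564279, iCCP = 1225), `accuracy_from_counts` gives 99.7834 after rounding to four decimals, which agrees with the corresponding entry in Table III.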
The resulting complex-valued feature vectors were presented as inputs to the CCVANN. Fig. 3 shows a block representation of the proposed method. The complex-valued backpropagation algorithm was used to train the proposed networks.

Fig. 4. Lung images with benign nodules (a) (image no: B10) and malignant nodules (b) (image no: M16), and their results, (c) and (d), respectively.

To allow comparison with lung segmentation using a "single" CVANN, the learning rate, number of hidden nodes and maximum iteration number of the first CVANN were chosen as 0.1, 10 and 10, respectively, as in [17]. The corresponding parameters of the second CVANN were determined via experimentation. The network structures are given in Table I.

TABLE I
NETWORK STRUCTURES

| Network | Learning Rate | No. of Hidden Nodes | No. of Iterations |
|---|---|---|---|
| 1st CVANN [17] | 0.1 | 10 | 10 |
| 2nd CVANN | 1e-6 | 40 | 10 |

The results of the CCVANN and the CVANN [17] for the three CWT levels are presented in Table II and Table III. These results are given for the images with the best and worst accuracy rates of the CVANN. In Table II, B signifies an image with a benign nodule and M signifies an image with a malignant nodule. According to Table II and Table III, the CCVANN segments the lung region better than the CVANN at every CWT level.

Fig. 3. Block representation of the proposed method for lung segmentation.

The training of the CWT-CVANN was stopped when the error goal (4) was achieved. In the training phase, half of the 32 images were used. The performance of the network was then tested on the other 16 images. The average test accuracy was 99.80%. Fig. 4 shows the images segmented using the 4th-level CWT and the CCVANN with the best accuracy rate.

V. CONCLUSIONS

In this paper, a combined complex-valued artificial neural network model is proposed for biomedical image segmentation. The following conclusions may be drawn from the results presented:

1. The results of the CCVANN are found to be more satisfactory than the experimental results of the single CVANN.
The proposed CCVANN method yields fewer incorrectly classified pixels than a single CVANN model.
2. Although the performance of the developed CCVANN model is limited to the range of input data used in the training and testing process, the method can easily be applied to additional new data sets.

TABLE II
COMPARISON OF CVANN AND CCVANN USING NUMBER OF CCP AND iCCP

| CWT Level | Image No. | CCP (CVANN) | CCP (CCVANN) | iCCP (CVANN) | iCCP (CCVANN) |
|---|---|---|---|---|---|
| 2 | B10 (best) | 564279 | 564477 | 1225 | 1027 |
| 2 | B1 (worst) | 563483 | 563609 | 2021 | 1895 |
| 2 | M16 (best) | 564150 | 564255 | 1354 | 1249 |
| 2 | M19 (worst) | 563483 | 563732 | 2021 | 1772 |
| 3 | B10 (best) | 564322 | 564380 | 1182 | 1124 |
| 3 | B1 (worst) | 562808 | 562942 | 2696 | 2562 |
| 3 | M6 (best) | 564226 | 564460 | 1278 | 1044 |
| 3 | M10 (worst) | 563548 | 563907 | 1956 | 1597 |
| 4 | B10 (best) | 564169 | 564559 | 1335 | 945 |
| 4 | B1 (worst) | 561201 | 561480 | 4303 | 4024 |
| 4 | M16 (best) | 564079 | 564463 | 1425 | 1041 |
| 4 | M4 (worst) | 563349 | 563872 | 2155 | 1632 |

TABLE III
COMPARISON OF CVANN AND CCVANN USING ACCURACY RATE

| CWT Level | Image No. | Accuracy (%) CVANN | Accuracy (%) CCVANN |
|---|---|---|---|
| 2 | B10 (best) | 99.7834 | 99.8184 |
| 2 | B1 (worst) | 99.6426 | 99.6649 |
| 2 | M16 (best) | 99.7606 | 99.7791 |
| 2 | M19 (worst) | 99.6426 | 99.6867 |
| 3 | B10 (best) | 99.7910 | 99.8012 |
| 3 | B1 (worst) | 99.5233 | 99.5470 |
| 3 | M6 (best) | 99.7740 | 99.8154 |
| 3 | M10 (worst) | 99.6541 | 99.7176 |
| 4 | B10 (best) | 99.7639 | 99.8329 |
| 4 | B1 (worst) | 99.2391 | 99.2884 |
| 4 | M16 (best) | 99.7480 | 99.8159 |
| 4 | M4 (worst) | 99.6189 | 99.7114 |

ACKNOWLEDGMENT

This work is supported by the Coordinatorship of Selcuk University's Scientific Research Projects.

REFERENCES

[1] K. S. Fu, J. K. Mui, "A survey on image segmentation", Pattern Recognition, vol. 13-1, pp. 3-16, 1981.
[2] R. M. Haralick, L. G. Shapiro, "Survey: image segmentation techniques", Comp. Vision Graph Image Proc, vol. 29, pp. 100-132, 1985.
[3] A. P. Dhawan, Medical Image Analysis, Wiley-Int., USA, 2003.
[4] B. M. Ozkan, R. J. Dawant, "Neural-network based segmentation of multi-modal medical images: a comparative and prospective study", IEEE Trans. Med. Imaging, vol. 12, 1993.
[5] D. D. Sha, J. P. Sutton, "Towards automated enhancement, segmentation and classification of digital brain images using networks of networks", Information Sciences, vol. 138, pp. 45-77, 2001.
[6] T. W. Nattkemper, H. Wersing, W. Schubert, H. Ritter, "A neural network architecture for automatic segmentation of fluorescence micrographs", Neurocomputing, vol. 48, pp. 357-367, 2002.
[7] A. Papadopoulos, D. I. Fotiadis, A. Likas, "An automatic microcalcification detection system based on a hybrid neural network classifier", Artificial Int. in Medicine, vol. 25, pp. 149-167, 2002.
[8] Z. Dokur, T. Olmez, "Segmentation of ultrasound images by using a hybrid neural network", Pattern Recognition Letters, vol. 23, pp. 1825-1836, 2002.
[9] D. L. Vilarino, D. Cabello, X. M. Pardo, V. M. Brea, "Cellular neural networks and active contours: a tool for image segmentation", Image and Vision Computing, vol. 21, pp. 189-204, 2003.
[10] I. Middleton, R. I. Damper, "Segmentation of magnetic resonance images using a combination of neural networks and active contour models", Medical Engineering and Physics, vol. 26, pp. 71-86, 2004.
[11] M. I. Rajab, M. S. Woolfson, S. P. Morgan, "Application of region-based segmentation and neural network edge detection to skin lesions", Comp. Med. Imaging and Graphics, vol. 28, pp. 61-68, 2004.
[12] Z. Dokur, "A unified framework for image compression and segmentation by using an incremental neural network", Expert Systems with Applications, vol. 34, pp. 611-619, 2008.
[13] M. N. Kurnaz, Z. Dokur, T. Olmez, "An incremental neural network for tissue segmentation in ultrasound images", Computer Methods and Programs in Biomedicine, vol. 85, pp. 187-195, 2007.
[14] A. Wismuller, F. Vietze, J. Behrens, A. Meyer-Baese, M. Reiser, H. Ritter, "Fully automated biomedical image segmentation by self-organized model adaptation", Neural Networks, vol. 17, pp. 1327-1344, 2004.
[15] S. H. Ong, N. C. Yeo, K. H. Lee, Y. V. Venkatesh, D. M. Cao, "Segmentation of color images using a two-stage self-organizing network", Image and Vision Computing, vol. 20, pp. 279-289, 2002.
[16] Y. Özbay, M. Ceylan, "Effects of window types on classification of carotid artery Doppler signals in the early phase of atherosclerosis using complex-valued artificial neural network", Computers in Biology and Medicine, vol. 37, pp. 287-295, 2007.
[17] M. Ceylan, Y. Özbay, O. N. Uçan, E. Yıldırım, "A novel method for lung segmentation on chest CT images: Complex-valued artificial neural network with complex wavelet transform", Turk. J. Elec. and Comp. Sciences, vol. 18 (4), 2010.
[18] G. Beylkin, R. Coifman, V. Rokhlin, "Fast wavelet transforms and numerical algorithms", Communications on Pure and Applied Mathematics, pp. 141-183, 1991.
[19] M. Unser, "Texture classification and segmentation using wavelet frames", IEEE Trans. Image Processing, vol. 4, pp. 1549-1560, 1995.
[20] I. W. Selesnick, R. G. Baraniuk, N. G. Kingsbury, "The dual-tree complex wavelet transform", IEEE Signal Processing Magazine, vol. 22, pp. 123-151, 2005.
[21] T. Nitta (Ed.), Complex-Valued Neural Networks: Utilizing High-Dimensional Parameters, Inf. Science Reference, Penns., USA, 2009.