Educational Assessment Syllabus


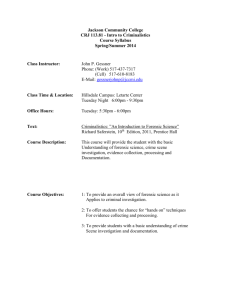

SPRING 2010 COURSE SYLLABUS
Educational Assessment (FOUN 3710 - 3 s.h. - CRN 22335)

Prerequisites: FOUN 1501. (Note: When grouped with other courses for the purposes of block instruction, students must be admitted to the complete instructional block to take this course.)

Class meetings: Spring Semester, 2010; TT 08:00 to 09:15; BCOE Room 4302

Text/Resources:
Hogan, T. Educational Assessment: A Practical Introduction (2007). John Wiley & Sons, Inc.
McEwing, Richard. Website - http://www.cc.ysu.edu/~ramcewin (Essential details related to this course are on the class web site)

Syllabus developed by Dr. Richard McEwing

Instructor: Dr. Richard A. McEwing, Professor
Department of Educational Foundations, Research, Technology & Leadership
Beeghly College of Education
Youngstown State University
Youngstown, OH 44555-0001
Office: Beeghly College of Education, Rm 4105
Office Hours: MWF 9:00 to 10:00; TT 9:15 to 11:00
Office Phone: (330) 941-1933
E-mail: ramcewin@cc.ysu.edu

Technology/Materials Fee: Students are required to have purchased individual TaskStream accounts. TaskStream is a web-based program used for a number of class requirements in this course and is the web-based program used throughout the teacher education program.

Catalog Description: Critical review of types, purposes, procedures, uses, and limitations of assessment strategies and techniques including authentic assessment, value-added assessment, and alternate assessment. Standardized testing and implications for current practice.

Critical Tasks: The FOUN 3710 Critical Tasks provide an assessment of the candidate’s capabilities in 1) reviewing an article from their own professional literature that deals with a testing issue and 2) constructing a classroom test appropriate for their teaching field. The classroom test must include assessment alternatives for two diverse students. These assignments must be submitted on TaskStream.
McEwing - Foun 3710 - Page 1 of 13 SPRING 2010

Knowledge Base Rationale: As the bell curve becomes obsolete as an acceptable standard of teachers' success with students, and as our democratizing and developing society demands more, and more equitably disbursed, education, teachers must be able to teach successfully groups of students whom they have not, in the past, succeeded in teaching. To attain this emerging professional standard, teachers will need to be stronger, in depth and breadth, in their three traditional areas of learning: content knowledge; pedagogy; and knowledge of the learners and the teaching-learning situation in their familial, local, societal, cultural, and political contexts. Educational Assessment addresses a key component of pedagogy: assessment.

This course, like its Foundations predecessor, Foun 1501, continues to foster commitment to the principle that children of all colors, backgrounds, creeds, abilities, and styles can learn. The focus of the course includes both assessments generated by teachers and standardized assessments teachers must interpret. The class meeting discussions, field work assignments, and course assessments flow from intertwined topics. The overriding approach used is to introduce the techniques of “how” along with the concerns of “to what purpose.”

The following are knowledge bases used in setting the course objectives:

1. If assessment tools and approaches are not of high quality or not appropriate for the purpose used, there is potential for harm when decisions affecting students' futures are being made based on the results. The most important factors in determining technical quality are the reliability, validity, and fairness of classroom assessments.
The Praxis II PLT Category II - Assessment topics will be a central guide; i.e., types of assessments, characteristics of assessments, scoring assessments, uses of assessments, understanding of measurement theory and assessment-related issues (Nuttall, 1989; ETS Booklet on the PLT - Tests at a Glance).

2. Professionals need to know certain statistical and measurement concepts to organize, use, interpret and evaluate data. Candidates will learn to utilize internet calculator web sites to explore basic concepts and develop skills in descriptive statistics (e.g., scales, distributions, measures of central tendency, measures of variability, measures of relationship), in understanding test scores (e.g., criterion-referenced interpretation, norm-referenced interpretation, percentiles, standard scores), and in the purposes/timing of testing (e.g., placement, diagnostic, formative, summative) (U.S. Congress, 1992, February; Blommers, 1977; Bloom, Hastings & Madaus, 1971; Coladarci & Coladarci, 1980; Hooke, 1983).

3. The primary aim of assessment is to foster learning of worthwhile academic content for all students (Wolf, Bixby, Glenn, & Gardner, 1991). Many educators believe that what gets assessed is what gets taught and that the format of assessment influences the format of instruction (O'Day & Smith, 1993).

4. Assessments of student achievement are ever changing. Candidates need to understand not only the basics of assessment technique, but they also need to learn to think critically, analyze, and make inferences. Student assessment is the centerpiece of educational improvement efforts. Changes in assessment may cause teachers to do things differently (Linn, 1987; Madaus, 1985).

5. Candidates need to be aware of the promise and the challenges inherent in using assessment practices for high-stakes decisions (such as student retention, promotion, graduation, and assignment to particular instructional groups).
In many schools, districts, and states, interpretations based on a single test score have been used to place students in low-track classes, to require students to repeat grades, and to deny high school graduation diplomas. The negative personal and societal effects for students are well-documented: exposure to a less challenging curriculum, significantly increased dropout rates, and lives of unemployment and welfare dependency (Oakes, 1986a; Oakes, 1986b; Shepard & Smith, 1986; Jaeger, 1991).

6. Authentic assessment involves candidates creating engaging problems or questions of importance, in which students must use knowledge to fashion performances effectively and creatively. The tasks are either replicas of, or analogous to, the kinds of problems faced by adult citizens and consumers or professionals in the field (Wiggins, 1993). Examples of tools or instruments used in authentic assessment include rubrics and portfolios.

7. Value-added assessment gives candidates a powerful diagnostic tool for measuring the effect of their own teaching on academic achievement. Student performance on assessments can be measured in two very different ways, both of which are important. Achievement describes the absolute levels attained by students in their end-of-year tests. Growth, in contrast, describes the progress in test scores made over the school year. Value-added assessment measures growth and answers the question: how much value did the school staff add to the students who live in its community? If teachers and schools are to be judged fairly, it is important to understand this significant difference (McCaffrey, Lockwood, Koretz, & Hamilton, 2004).

8. It is key that assessment results are reported effectively to students, parents and the wider community so that their needs for information are met and they have a clear understanding of the assessment.
When properly presented, assessment reports can help build support for schools and for initiatives that educators wish to carry out. But if assessment results are poorly reported, they can be disregarded or interpreted incorrectly, adversely affecting students, educators, and others in the school community. Determine the audience for the reporting activity. Reports should be geared toward the audience (Roeber, Donovan, and Cole, 1980).

9. An examination of historic and current controversies in educational assessment. The issues have less to do with technique than they do with deep-seated beliefs, working conditions, and social conditions. Issues will vary over time, but typical issues would include test anxiety, test-wiseness, coaching, the computer in assessment, environmental assessment, and the politics of assessment (Messick, 1989; Kochman, 1989).

Connections to the BCOE Conceptual Framework and Ohio’s Performance-Based Licensure Expectations: The BCOE Conceptual Framework “Reflection in Action” uses the mnemonic device “REFLECT” to specify its seven key components. These seven components are then specified as candidate learning outcomes in the “BCOE Institutional Standards & Outcome Statements.” These outcome statements are indexed to the Ohio Standards for the Teaching Profession in a matrix called the Alignment of Ohio Standards for the Teaching Profession and BCOE Conceptual Framework.

Course Objectives: (OS#_ indicates Ohio Standard connections; R#_ indicates BCOE Conceptual Framework connections.)

A. Cognitive Domain (Knowledge categories - Bloom's taxonomy, revised 2001)

1.1. Remember (was Knowledge)
1.1.1. Knows the definitions of common measurement terms, e.g., validity, reliability, mastery (OS#3.1; R#1B).
1.1.2. Knows where to find sources of information about standardized testing (OS#3.1; R#1B).
1.1.3. Knows basic testing concepts, e.g., norms, standard error of measurement, percentile rank (OS#3.1; R#1B).
1.1.4. Knows how to develop learning outcomes objectives using taxonomies, domains, categories, illustrative verbs (OS#3.1; R#1B).

1.2. Understand (was Comprehension)
1.2.1. Understands the difference between criterion-referenced and norm-referenced testing (OS#3.1; R#1B).
1.2.2. Understands advantages and disadvantages of various item types (OS#3.1; R#1B).
1.2.3. Understands how factors unrelated to the test itself may influence the test results (OS#3.1; R#1B).
1.2.4. Generalizes principles that undergird sound test administration procedures (OS#3.1; R#1B).

1.3. Apply (was Application)
1.3.1. Predicts appropriate situational use for the various categories of evaluation, e.g., placement, diagnostic, formative, summative (OS#3.2; R#1B).
1.3.2. Demonstrates correct usage of a variety of evaluation procedures, e.g., anecdotal records, rating scales, checklists, achievement and aptitude tests (OS#3.2; R#1B).
1.3.3. - 1.3.7 (See Psychomotor Domain)

1.4. Analyze (was Analysis)
1.4.1. Differentiates among the contributions and limitations of standardized tests and other high stakes assessments (OS#3.3; R#1C).
1.4.2. Identifies strengths and weaknesses of teacher-made classroom tests (OS#3.3; R#1C).
1.4.3. Interprets the results of standardized tests (OS#3.3; R#1C).

1.5. Evaluate (was Evaluation)
1.5.1. Judges the relative worth of a wide variety of educational data possibilities (OS#3.5; R#1C).
1.5.2. Evaluates data to make decisions (OS#3.5; R#1C).

1.6. Create (was Synthesis)
1.6.1. Constructs well-organized reviews on topics related to educational evaluation (TaskStream) (OS#3.1; R#1B).
1.6.2. Formulates a well-balanced exam (TaskStream) (OS#3.1; R#1B).

B. Psychomotor Domain (Skills)

2.1. Develops a table of specifications (OS#3.2; R#1B).
2.2. Constructs various item types (OS#3.2; R#1B).
2.3. Assembles items into a classroom test (OS#3.2; R#1B).
2.4. Computes simple descriptive statistics (Internet Calculators) (OS#3.2; R#1B).
2.5. Performs item analysis (OS#3.2; R#1B).

C. Affective Domain (Dispositions)

3.1. Accepts the importance of the individual in meaningful interpretation of test results (OS#3.5; R#1C).
3.2. Shows awareness of professional ethical standards relating to confidentiality of test scores (OS#3.4; R#3A).
3.3. Appreciates the role of reporting and communicating testing information to all legitimate parties (OS#3.4; R#3D).
3.4. Values testing as a means to obtain reliable data in order to make decisions about students (OS#3.5; R#1C).
3.5. Values multifaceted assessment as a means of creating fairness in a grading policy (OS#3.1; R#1B).
3.6. Displays sensitivity to social and political issues related to testing (OS#3.4; R#3D).

Course Outline - Class meeting topics and due dates for written submissions are scheduled to follow the sequence below. Should adjustments to this plan be necessary, they will be announced in class.
| Dates | Topic | Related Material and Readings |
|---|---|---|
| Jan 12 | Review of Syllabus & Course Expectations; Educational Assessment Pretest | Syllabus / web site |
| Jan 14 | The World of Educational Assessment | Hogan Chap 1 |
| Jan 18 | UNIVERSITY CLOSED - MARTIN LUTHER KING DAY | |
| Jan 19 | Statistics | Hogan Chap 2 |
| Jan 21 | More Statistics | Hogan Chap 2 |
| Jan 26 | Reliability | Hogan Chap 3 |
| **Jan 26 | Schedule Individual Statistics Task with Instructor | |
| Jan 28 | Validity | Hogan Chap 4 |
| **Jan 28 | “Article Review” via TaskStream is Due | |
| Feb 2 | Norms and Criteria | Hogan Chap 5 |
| Feb 4 | Planning for Assessment | Hogan Chap 6 |
| Feb 9 | Selected Response Items | Hogan Chap 7 |
| Feb 11 | Constructed Response Items; Group Work “Dwarfs” Testing Activity | Hogan Chap 8 |
| **Feb 16 | Mid-Term Exam - Chapters 1 through 8 | |
| Feb 18, 23 | Administering & Analyzing Tests | Hogan Chap 10 |
| Feb 23, 25 | Grading & Reporting | Hogan Chap 13 |
| Mar 2 | High Stakes Testing; Standardized Tests: Achievement | Hogan Chap 11 |
| Mar 4 | High Stakes Testing Continued: School Report Cards & Value Added Assessment | Class Web Site |
| **Mar 4 | “Test Construction Project” via TaskStream Due | |
| Mar 9, 11 | NO CLASSES ON CAMPUS - SPRING BREAK | |
| | Totally in the field | |
| Mar 16, 18 | In the Field | |
| Mar 23, 25 | In the Field | |
| Mar 30, Apr 1 | In the Field | |
| Apr 6, 8 | In the Field | |
| Apr 13, 15 | In the Field | |
| Apr 20, 22 | In the Field | |
| | Back to YSU Classroom | |
| Apr 27 | Evaluating Teaching: Applying Assessment To Yourself | Hogan Chap 15 |
| **Apr 27 | Schedule Extra Credit Individual Statistics Task if Desired | |
| Apr 27, 29 | What Lies Beneath - Attitude & Ability. Nontest Indicators: e.g., Creativity, Interests | Hogan Chap 9 |
| | Standardized Tests: e.g., Ability, Personality | Hogan Chap 12 |
| Apr 29 | Educational Assessment & the Law | Hogan Chap 14 |
| **Apr 30 | Last Date for Extra Credit Individual Statistics Task | |
| **May 4 | Final Exam 8:00 am - 10:00 am (Chapters 9 - 15, School Report Cards, Value Added) | |

Course Grading: The course Grade Determination Checklist below indicates the maximum point values assigned to each evaluation area:

| No. | Evaluation Area | Points Possible | Related Course Obj. |
|---|---|---|---|
| 1 | Educational Assessment Pretest | 10 | 1.3 |
| 2 | Statistics Performance Task | 20 | 2.4 |
| 3 | Critical Task - Article Review | 10 | 1.2, 1.4, 1.5, 3.6 |
| 4 | Class Participation | 20 | 3.1 through 3.6 |
| 5 | Critical Task - Test Construction Project | 40 | 1.6, 2.1 through 2.5 |
| 6 | Mid-Term Exam | 25 | 1.1, 1.2 |
| 7 | Final Exam | 25 | 1.1, 1.2 |
| | Total | 150 | |

Each student starts the class with 150 points. The checklist points earned above are added to those 150 points to determine the course grade as follows:

271 - 300: A
241 - 270: B
211 - 240: C
181 - 210: D
0 - 180: F

Educational Assessment Pretest Points Explained: All students will be given an assessment at the beginning of the course to determine their awareness of key concepts and terms related to assessment. Each student taking the pretest is awarded all 10 points. The instructor will use the scores on the pretest to guide subsequent instruction.

Educational Assessment Mid-Term and Final Points Explained: All students will be given two tests to determine their knowledge of key concepts and terms related to assessment. These are called the Mid-Term and the Final. Each test will consist of 50 multiple-choice or other selected-response items. Students taking these exams earn 0.5 point for each correct answer, so each test is worth 25 points. The Final is not cumulative.
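The point arithmetic described above can be sketched in a few lines of Python. This is only an illustration, not an official grade calculator: the function and variable names are hypothetical, the sample scores are invented, and treating a fractional total such as 270.5 as falling in the lower band is an assumption, since the syllabus lists whole-number ranges only.

```python
# Maximum points per evaluation area, from the Grade Determination Checklist.
MAX_POINTS = {
    "pretest": 10,
    "statistics_task": 20,
    "article_review": 10,
    "participation": 20,
    "test_construction": 40,
    "midterm": 25,
    "final": 25,
}

STARTING_POINTS = 150  # every student starts the class with 150 points

# Lowest total for each letter grade, highest band first.
GRADE_FLOORS = [(271, "A"), (241, "B"), (211, "C"), (181, "D"), (0, "F")]


def exam_points(correct_answers: int) -> float:
    """Mid-Term or Final: 50 items at 0.5 point per correct answer."""
    if not 0 <= correct_answers <= 50:
        raise ValueError("each exam has 50 items")
    return 0.5 * correct_answers


def course_total(earned: dict, extra_credit: float = 0.0) -> float:
    """Starting points plus checklist points earned (plus any extra credit)."""
    for area, points in earned.items():
        if not 0 <= points <= MAX_POINTS[area]:
            raise ValueError(f"{area}: points out of range")
    return STARTING_POINTS + sum(earned.get(a, 0) for a in MAX_POINTS) + extra_credit


def letter_grade(total: float) -> str:
    """Map a point total to a letter grade (assumption: bands are floors)."""
    for floor, grade in GRADE_FLOORS:
        if total >= floor:
            return grade
    raise ValueError("total must be non-negative")


# Invented example: 42 correct on the Mid-Term, 44 correct on the Final.
sample = {
    "pretest": 10,
    "statistics_task": 18,
    "article_review": 9,
    "participation": 16,
    "test_construction": 35,
    "midterm": exam_points(42),
    "final": exam_points(44),
}
total = course_total(sample)
print(total, letter_grade(total))  # 281.0 A
```

In this invented case the student earns 131 of the 150 checklist points, which together with the 150 starting points gives 281 and falls in the A range.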
Critical Task #1 - Article Review Points Explained: For the first Critical Task all students must review an article from their own professional literature that deals with an issue in testing. Each selected article will be evaluated using TaskStream according to a rubric created by the instructor. The article review itself is worth a potential 10 points toward the course grade (see rubric).

Critical Task #2 - The Test Construction Project Points Explained: The second Critical Task for the course is the construction of a classroom test appropriate for the candidate’s teaching field. This will be submitted through TaskStream and is worth a potential of 40 points (see rubric). Evaluation of the candidate’s project will be done through TaskStream.

Test Construction Project Components

- Introduction
  - a description of the unit or topic to be taught
  - the grade level in which the unit or topic could be taught
- A list of at least three and no more than four specific learning objectives or outcomes, each targeted at a different Bloom level (specify by name and briefly explain), that will be achieved during instruction, with a table of specifications. (Note: all objectives/outcomes listed must be evaluated by the test as demonstrated through the table of specifications.)
- A pretest assessment plan designed to be given prior to instruction.
- A formative evaluation plan.
- A summative whole class assessment using a quantitative approach. This may vary a bit but it is expected to include the following pieces:
  - clear directions and point values associated with each item or group of items
  - 20 selected-response items. This is normally achieved best using multiple choice (or interpretive) questions. Matching or true-false items, depending on your unit, may be appropriate. Your test must contain at least two selected-response item types. You may NOT use short answer or fill-in-the-blank as selected-response.
  - a separate scoring key listing all the correct answers for your selected-response items
  - 2 constructed-response essay questions
  - 1 constructed-response performance-based task
  - a separate sample full-credit scoring rubric for each of the 3 constructed-response questions
  - identification of the objective/outcome tested by that item or group of items
- An assessment alternative for two students, each with a different alternative assessment. Specify what it is about these students (i.e., justify alternative assessments for these two students) that leads you to create an alternative assessment for each. The difference might relate to learning mode, reading ability, language acquisition, activity level, and so on. You may use qualitative approaches here.

Statistics Performance Task Explained: Six statistics approaches using internet programs (bar graph, circle graph, histogram, mean-median-mode, Spearman Rank Correlation and Pearson Correlation) are explored in class. Each student will be required to demonstrate one-on-one his/her ability to use one of these programs. The student will use the data sets found on the class website, use the statistics program discussed in class to analyze the data, and answer questions the instructor raises during the process. Each student will schedule an individual time to meet with the instructor. The student will not know which of the six she/he must demonstrate to the instructor until the time of the meeting. The internet program selected will be determined by a roll of the die. Sub-tasks total to 20 points possible on the overall task (see website examples for scoring tables).

Extra Credit Explained: If a student chooses, he/she may do a second performance task for a potential extra credit of 15 points.
This time, however, the student will be required to create his/her own data, use a statistics program discussed in class to analyze the data, and answer questions the instructor raises during the process. Sub-tasks total to 15 points possible on the overall task (see website examples for scoring tables). The student may choose to do any of the six internet statistical programs. This extra credit performance task must be scheduled and completed prior to the start of final exam week.

Class Participation Points Explained: While the knowledge base related to this course can be acquired through reading the text, the examination of (and reflection on) our ideas with regard to this knowledge is attained only by being present at class activities and discussions. In recognition of this commitment, individuals who attend all classes earn 20 points. For every class absence, 4 points are deducted from the 20 points possible.

Other Course Definitions and Policies

Class Cancellation: Notice that this class is being cancelled for any one day because of instructor illness, or other reasons, will be sent to the student address <@student.ysu.edu> as soon as possible. University-wide closure or class cancellation is a decision made through the President's office, and announced via the YSU homepage and on WYSU-FM radio.

Academic Honesty - Departmental Policy: All candidates are expected to comply with generally accepted professional ethics of Academic Honesty in meeting their course requirements (refer to http://penguinconnection.ysu.edu/handbook/Policies/POLICIES.shtml). Candidates are expected to submit materials that are respectful of intellectual property rights, as well as complying with all Federal Copyright Laws (refer to http://www.copyright.gov/). Any breach of this code of ethics will be handled according to the YSU Student Handbook.
Any proven acts of cheating, plagiarizing, or engaging in any form of academic dishonesty could result in a severe disciplinary action, an “F” grade for the assignment or course, and possible referral to the Office of Student Affairs for disciplinary action.

Students with Disabilities: In accordance with University procedures, if you have a documented disability and require accommodations to obtain equal access in this course, please contact me privately to discuss your specific needs. You must be registered with CSP Disability Services, located at Wick House, and provide a letter of accommodation to verify your eligibility. You can reach CSP Disability Services at 330 941-1372.

Incomplete Grade Policy: An incomplete grade of an “I” may be given to a student who has been doing satisfactory work in a course but, for reasons beyond the control of the student and deemed justifiable by the instructor, had not completed all requirements for a course when grades were submitted. A written explanation of the reason for the “I” and a date (which must be within one year) by which all course requirements will be completed must be forwarded to the Registrar for inclusion in the student’s permanent record, with copies to the student and department chairperson. Upon the subsequent completion of the course requirements, the instructor will initiate a grade change. If no formal grade change occurs within one year, the “I” automatically converts to an “F.” If graduation occurs within the one-year time period, the “Incomplete” grade will be converted to an “F” prior to graduation.

Candidate Disposition Alert Process: The purpose of this alert process is to identify candidate performance or conduct that fails to satisfy professional expectations associated with professionalism, inclusivity and collaboration determined by the BCOE faculty as necessary standards to effectively serve all students or clients.
The Candidate Performance Alert form is completed when a concern is raised about a candidate’s performance during any class, sponsored activity by the Beeghly College of Education, or during a YSU required field or clinical experience. This form may be used when a candidate engages in conduct, irrespective of its time or location, which raises substantial questions about the candidate’s ability to perform his or her role as an educational professional. The Candidate Performance Alert Form can be used by university faculty, staff, supervisors, cooperating teachers, or other school personnel when they have a concern, other than one that can be effectively addressed through routine means of supervision.

Critical Tasks: Selected performance-based assignments reflect a candidate’s knowledge, skills and/or dispositions and are aligned with the standards for teacher preparation of the licensure area. These tasks assess a candidate’s ability to move through the teacher preparation program in an effective way, meeting and/or exceeding expectations in these professional standards. Therefore, candidates must effectively pass a critical task to pass the course. Failure to effectively pass the critical task(s) will result in remediation through repetition of the course to guarantee that all teacher candidates are prepared to be effective educators once they leave Youngstown State University.

Missed Exams: A make-up exam will be scheduled only when verification that an absence was justified is provided.

References

Baker, E. L., O'Neill, H. F., Jr., & Linn, R. L. (1993). Policy and validity prospects for performance-based assessments. American Psychologist, 48, 1210-1218.
Blommers, Paul J. (1977). Elementary statistical methods in psychology and education. Lanham, Md.: University Press of America.
Bloom, Benjamin S., J. Thomas Hastings, George F. Madaus. Handbook On Formative And Summative Evaluation Of Student Learning.
New York: McGraw-Hill, 1971.
Braun, Henry I. “Using Student Progress to Evaluate Teachers: A Primer on Value-Added Models,” Educational Testing Service - Policy Information Center. September 2005.
Coladarci, Arthur and Theodore Coladarci. (1980). Elementary Descriptive Statistics. San Francisco: Wadsworth, Inc.
Darling-Hammond, L. (1994, Spring). Performance assessment and educational equity. Harvard Educational Review, 64(1), 5-29.
Educational Testing Service (ETS) website (www.ets.org).
Hooke, Robert. (1983). How to tell the liars from the statisticians. New York: M. Dekker.
Jaeger, R.M. (1991). Legislative perspectives on statewide testing. Phi Delta Kappan, 73(3), 239-242.
Kochman, T. (1989). Black and white cultural styles in pluralistic perspective. In B. R. Gifford (Ed.), Test policy and test performance: Education, language and culture. Boston: Kluwer Academic Publishers.
Linn, R. (1987). Accountability: The comparison of educational systems and the quality of test results. Educational Policy, 1(2), 181-198.
Linn, R.L., Baker, E.L., & Dunbar, S.B. (1991, November). Complex, performance-based assessment: Expectations and validation criteria. Educational Researcher, 20(8), 15-21.
Madaus, G. (1985). Public policy and the testing profession - You've never had it so good? Educational Measurement: Issues and Practice, 4(1), 5-11.
McCaffrey, Daniel, Lockwood, J.R., Koretz, Daniel, and Hamilton, Laura. "Evaluating Value-Added Models for Teacher Accountability." An Education Report by the Rand Corporation (prepared for the Carnegie Corporation), 2004.
Messick, S. (1989). Meaning and values in test validation: The science and ethics of assessment. Educational Researcher, 18(2), 5-11.
Nuttall, D. L. (1989). The validity of assessments. In P. Murphy & B. Moon (Eds.), Developments in learning and assessment (pp. 265-276). London: Hodder & Stoughton.
Oakes, J. (1986a, Fall). Beyond tracking.
Educational Horizons, 65(1), 32-35.
Oakes, J. (1986b, November). Tracking, inequality, and the rhetoric of school reform: Why schools don't change. Journal of Education, 168(1), 61-80.
O'Day, J.A., & Smith, M. (1993). Systemic school reform and educational opportunity. In S. Fuhrman (Ed.), Designing coherent educational policy: Improving the system (pp. 250-311). San Francisco: Jossey-Bass.
Roeber, E. D., Donovan, D., & Cole, R. (1980, December). Telling the statewide testing story ... and living to tell it again. Phi Delta Kappan, 62(4), 273-274.
Sanders, William. "Beyond No Child Left Behind." 2003 Annual Meeting. American Educational Research Association. Chicago. 2003.
Shepard, L.A., & Smith, M.L. (1989). Flunking grades: Research and policies on retention. New York: Falmer Press.
Value-Added Assessment Special Issue, Journal of Educational and Behavioral Statistics, Volume 29 No 1, Spring 2004.
Wiggins, G. (1993). Assessing student performance: Exploring the purpose and limits of testing. San Francisco: Jossey-Bass.
Wolf, D., Bixby, J., Glenn, J., III, & Gardner, H. (1991). To use their minds well: Investigating new forms of student assessment. Review of Research in Education, 17, 31-74.
U.S. Congress, Office of Technology Assessment. (1992, February). Testing in American Schools: Asking the right questions. (OTA-SET-519). Washington, DC: U.S. Government Printing Office.