report - Faculty

Investigation into the usage of frequency spectrum analysis for speech recognition.

McCusker E, Rivard K, Valdivia A

Abstract.

This project investigated the use of frequency spectrum analysis as a component of simple speech recognition software. Using MATLAB, Fast Fourier Transforms, and filters, a sample recording of a spoken word was analyzed and compared to a standard set of vowel sounds. A word match was then made based on the number of syllables and the vowel sounds present in the word.

Though this method did not prove reliable in repeatability trials, the failures are likely due to the arbitrary constant values selected for several functions or to a flawed pool of samples for the standard vowel data set.

Background


Speech recognition is a device’s ability to recognize a spoken word or phrase and perform an action in response. Technology has advanced to the point where speech can be translated into text or visual images, and additional information can be requested of the user to ensure that the right task is completed.

A large number of voice and speech recognition applications are available to both consumers and professionals in everyday life.

There are phone menus with voice-activated selections, and most cell phones now have standard voice-activated dialing where the phone recognizes simple names or commands pre-recorded by the user. Advanced software available on selected phones behaves as a personal assistant, responding to questions and more complicated commands.

Transcription software that uses speech recognition to produce documents has been implemented to assist people with carpal tunnel syndrome and other long-term disabilities that prevent typing or other computer use. Newer programs can also provide translations or visual displays of spoken conversations for the fifteen percent of the US population that is hearing-impaired or deaf. Medical professionals have been using increasingly accurate and flexible transcription software to reduce paperwork and miscommunication in hospitals and doctors' offices. Similar programs have been implemented for secretaries, lawyers, and various other professions.

In this project, digital signal processing techniques such as frequency spectrum analysis and filtering were used to develop an algorithm for identifying a collection of three spoken words. After a cursory analysis of the frequency spectra of several vowel sounds, it was decided that an algorithm based on the vowels and syllables present would best identify the words. Because nearly all calculations are therefore done in the frequency domain, Fourier transforms were invaluable for translating the initial time-domain signal into frequency data that could then be analyzed.

Methods


The approach taken for this project was to establish standard data for each of the five vowels, which could later be compared to data obtained from a sound recording broken into syllables. Each syllable would be identified by the vowel it contains, and a word match could then be made. The three words chosen were “lock,” “key,” and “open,” which contain the distinct vowel sounds “ahhh,” “eeee,” “ohhh,” and “ehh”; “open” is the only one of the three with two syllables.

We began by using Fast Fourier Transforms to observe five pure vowel sounds and found that the resulting peaks tended to occur at roughly the same frequencies (the harmonics of the fundamental), but that the heights of the individual harmonics differed for each vowel. Based on this observation, the rank order of the peak heights was judged to be the key feature for distinguishing the frequency spectra of the different sounds, and a program was written to find the five largest peaks and rank them by relative height. A peak is defined as a point that is either higher than both neighboring peaks or far enough away from its neighboring peaks to stand on its own.
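The peak-picking and ranking step can be sketched as follows. This is a minimal Python/NumPy sketch, not the MATLAB code used in the project: the function name `rank_top_peaks` and the `min_separation` parameter (in FFT bins) are hypothetical, and the "far enough away to stand on its own" rule is approximated by a swapped-in greedy minimum-separation filter. It returns the five tallest peaks in order of increasing frequency, with rank 1 marking the tallest.

```python
import numpy as np

def rank_top_peaks(signal, n_peaks=5, min_separation=3):
    """Return (bin positions, height ranks) of the n_peaks tallest spectral
    peaks, ordered by increasing frequency; rank 1 is the tallest peak."""
    spectrum = np.abs(np.fft.rfft(signal))
    # Candidate peaks: interior bins higher than both immediate neighbours.
    mid = spectrum[1:-1]
    candidates = np.where((mid > spectrum[:-2]) & (mid > spectrum[2:]))[0] + 1
    # Assumed rule (hypothetical): accept candidates tallest-first, skipping any
    # that lie within min_separation bins of a peak already accepted.
    accepted = []
    for idx in candidates[np.argsort(spectrum[candidates])[::-1]]:
        if all(abs(idx - a) >= min_separation for a in accepted):
            accepted.append(int(idx))
        if len(accepted) == n_peaks:
            break
    positions = np.sort(np.array(accepted, dtype=int))
    heights = spectrum[positions]
    # argsort of argsort turns "order by height" into a rank for each peak.
    ranks = np.argsort(np.argsort(-heights)) + 1
    return positions, ranks
```

Ranking by relative height rather than absolute height makes the result independent of recording volume, which matches the observation that peak positions were similar across vowels while their relative heights were not.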

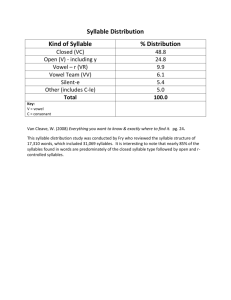

Next, it was necessary to develop a set of standard data for comparison with the incoming recordings. Between 40 and 50 samples of each vowel sound were recorded and their peaks ranked. The mean rank at each peak position was determined along with its standard deviation, and these five mean/standard-deviation pairs were stored for each vowel as its standard. Factoring in the standard deviation of each peak's ranking placed the emphasis on the peaks that most consistently held a given rank for each sound. This helped reduce a problem we observed: the first two or three tallest peaks were very distinct for each sound, whereas the last two or three were more variable (for instance, the 4th and 5th tallest peaks tended to switch rankings depending on the recording). The completed standard set used for this project is illustrated in Figure 1, which displays the average height rankings of the peaks for each vowel sound.
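Assuming each recorded sample has already been reduced to a vector of five ranks (one per peak position), a vowel's standard is the per-position mean and standard deviation. The helper name below is hypothetical, and the use of the sample (n - 1) standard deviation is an assumption; the example data mimics the 4th/5th peak swapping described above.

```python
import numpy as np

def build_vowel_standard(rank_vectors):
    """Per-position mean and standard deviation of peak ranks.

    rank_vectors: one row of five ranks (1 = tallest) per recorded sample."""
    ranks = np.asarray(rank_vectors, dtype=float)  # shape (n_samples, 5)
    # ddof=1 gives the sample standard deviation (an assumption).
    return ranks.mean(axis=0), ranks.std(axis=0, ddof=1)

# Example: the 4th and 5th peaks swap ranks between recordings, so their
# mean rank is 4.5 with a nonzero spread, while the first three have zero spread.
mean, std = build_vowel_standard([[1, 2, 3, 4, 5], [1, 2, 3, 5, 4],
                                  [1, 2, 3, 4, 5], [1, 2, 3, 5, 4]])
```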

When a user records the word to be analyzed, the signal is segmented into a given number of regions, each containing at most 100 samples of the original recording. An FFT is then performed on each region to obtain the frequency spectrum of that stretch of time data. The average magnitude of each segment's spectrum is then determined, giving the average power over time.
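A sketch of the segmentation step, assuming fixed-length segments of 100 samples (the last one may be shorter) rather than a chosen number of regions; `power_over_time` is a hypothetical name. Like the report, it treats the mean FFT magnitude of each segment as that segment's "power".

```python
import numpy as np

def power_over_time(recording, segment_len=100):
    """Split a recording into consecutive segments of at most segment_len
    samples, FFT each one, and return the mean spectral magnitude per segment."""
    recording = np.asarray(recording, dtype=float)
    starts = range(0, len(recording), segment_len)
    return np.array([np.abs(np.fft.fft(recording[s:s + segment_len])).mean()
                     for s in starts])
```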

Figure 1. The standard ranks of the top five peaks for pure vowels. For each of the top five peaks of the pure vowel sounds investigated in this project, the average ranking is given together with one standard deviation. These rankings are the basis for comparing the different vowel sounds and, ultimately, for the speech recognition.

To determine the syllables in each word, the mean magnitude and standard deviation of a low-power region are determined, allowing high-power regions to be defined as regions significantly taller than this mean height. Before the high-power regions are detected, the power-over-time data is filtered with a five-point average to reduce noise and smooth the peak boundaries.
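The five-point averaging filter is a centred moving average. One way to write it in NumPy is shown below; the function name is hypothetical, and because `mode="same"` pads with zeros, the first and last two outputs are pulled toward zero, an edge behaviour the report does not specify.

```python
import numpy as np

def five_point_average(x):
    """Smooth x with a centred five-point moving average (output has len(x))."""
    # Each output sample is the mean of itself and its two neighbours on each side;
    # samples beyond the ends are treated as zero.
    return np.convolve(np.asarray(x, dtype=float), np.ones(5) / 5, mode="same")
```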

The program defines the low-power region as the region of at least 80 segments of the filtered data with the lowest standard deviation. Based on the observations that the time regions in which a person is speaking correspond to regions of increased power, and that each syllable produces a distinct peak, it was concluded that syllables could be defined as peaks in the power-over-time data that exceed the mean low-power height by more than a predefined number of standard deviations. Figure 2 is a graph of the power-over-time data for a user sample, illustrating the results of this method.
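The low-power baseline and syllable threshold might look like the following sketch. It assumes the input is already smoothed, uses a window of exactly 80 segments for the quietest region, and takes `k=3.0` as a placeholder for the report's unstated "predefined number of standard deviations"; `find_syllables` is a hypothetical name. Each contiguous run of segments above the threshold is reported as one syllable.

```python
import numpy as np

def find_syllables(power, window=80, k=3.0):
    """Return (start, end) segment indices (end exclusive) of syllables.

    The quietest stretch is the run of `window` consecutive segments with the
    smallest standard deviation; a segment counts as speech when it exceeds
    that stretch's mean by more than k of its standard deviations."""
    power = np.asarray(power, dtype=float)
    if len(power) < window:
        raise ValueError("recording shorter than the low-power window")
    stds = [power[i:i + window].std() for i in range(len(power) - window + 1)]
    start = int(np.argmin(stds))
    quiet = power[start:start + window]
    threshold = quiet.mean() + k * quiet.std()
    above = (power > threshold).astype(int)
    # Rising (+1) and falling (-1) edges of the mask mark syllable boundaries.
    edges = np.diff(np.concatenate(([0], above, [0])))
    starts = np.where(edges == 1)[0]
    ends = np.where(edges == -1)[0]
    return list(zip(starts.tolist(), ends.tolist()))
```

Raising `k` makes the detector less likely to split one syllable into two, at the cost of missing quiet syllables, which is the trade-off the report describes for its multiple-syllable errors.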

Figure 2. The location of syllables from the frequency spectrum. The red crosses in this diagram mark the beginning and end of each syllable. As is evident, each of the two peaks has been identified as a syllable for this word. The smoothness of this graph results from the five-point averaging filter applied before the syllable locations were determined.

The time region corresponding to each syllable is then analyzed with the same peak-ranking frequency spectrum analysis used to create the standard data set. The resulting ranked order is compared to the standard for each vowel, and the program determines which word was said based on the number of syllables in the user recording and the vowel sounds located within those syllables.
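The matching step can be sketched as a nearest-standard search followed by a word vote. The distance measure (rank differences divided by each position's standard deviation with a hypothetical floor of 0.5, then squared and summed), the `WORDS` table, and the scoring rule (one point per matching vowel, minus the syllable-count mismatch) are all assumptions for illustration, not the project's documented method.

```python
import numpy as np

# Hypothetical vocabulary: expected vowel in each syllable of each word.
WORDS = {"lock": ["ahhh"], "key": ["eeee"], "open": ["ohhh", "ehh"]}

def match_vowel(ranks, standards, std_floor=0.5):
    """Return the vowel whose (mean, std) standard best fits a rank vector.

    Each rank difference is divided by that position's standard deviation
    (floored so a zero spread cannot divide by 0), squared, and summed."""
    ranks = np.asarray(ranks, dtype=float)

    def distance(vowel):
        mean, std = standards[vowel]
        return float(np.sum(((ranks - mean) / np.maximum(std, std_floor)) ** 2))

    return min(standards, key=distance)

def match_word(syllable_ranks, standards, words=WORDS):
    """Pick the word whose syllable count and vowels best agree with the recording."""
    vowels = [match_vowel(r, standards) for r in syllable_ranks]

    def score(word):
        expected = words[word]
        matches = sum(e == v for e, v in zip(expected, vowels))
        # One point per matching vowel, minus one per missing or extra syllable.
        return matches - abs(len(expected) - len(vowels))

    return max(words, key=score), vowels
```

Dividing by the standard deviation means that positions whose rank is stable for a vowel (typically the tallest peaks) dominate the match, while the often-swapped 4th and 5th peaks count for less.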

Results and Conclusion.

Ultimately, our investigation has identified several areas that matter when using frequency spectrum analysis as a tool for speech recognition. We were, however, unable to recognize the recorded word with reliable repeatability, even after improving the sample set used for comparison.

There are multiple points at which error may have been introduced into the algorithm behind our speech recognition software. To begin with, the standard data set initially used consisted of four samples of each vowel sound recorded by Katie Rivard. Though the samples were clean and recorded at approximately the same time and place, the total number of samples was small, and Katie’s voice varied arbitrarily.

To improve the set, Alex Valdivia recorded 50 samples of each vowel sound, and the average rankings of the peaks were determined. The variation observed between samples confirmed that multiple samples are necessary to obtain a representative average for the peak heights.

The sample set could be further improved in the future by including consonant sounds, which are sometimes more distinguishable than the vowel sounds, or the exact syllables expected in the data. For example, a standard set would include “ohhh” and “pehn” for comparison with the syllables found in “open.”

A larger source of error could be false peaks introduced by the user's recording. If the sample is misinterpreted and a false peak is included in the ranking, the program's ability to match it to the correct vowel sound suffers. This effect is best illustrated in Figure 3.

Occasionally, the power vs. time graphs appear to contain multiple high-power peaks that the program interprets as multiple syllables, as illustrated in Figure 4. This was initially reduced by introducing the five-point averager, as shown in Figure 2, but some distinct consonant sounds continue to affect the number of syllables returned. Additionally, the current inability to distinguish the consonant and vowel sounds within a given syllable has affected the rank orders of the peaks, and thereby the vowel selection and, ultimately, the word selection.

Figure 3. The effect of a false peak on the matching algorithm. This figure shows how a false peak created in a user sample affects the ability of the vowel-matching algorithm to identify the sound correctly. Peak 2 has been incorrectly identified; by shifting peaks 3-5 over, a much better match for the "ee" vowel is achieved. This problem could possibly be prevented by a standardized recording method.

In expanding this project, the sample set could be modified to include a wider range of sounds and words, expanding the vocabulary that the program can analyze and match. The program could also be modified to focus on voice recognition (user-specific) rather than simply speech recognition (word-specific).

Figure 4. The multiple syllable effect. This figure illustrates how the program occasionally located an incorrect number of syllables for the recorded word. This effect was diminished with the introduction of a filter, and also by increasing the number of standard deviations that the peak must be greater than in order to qualify as a syllable.