3. Multi-view Face Detection


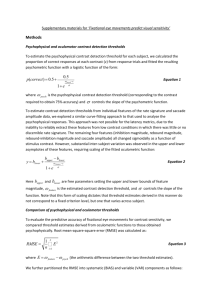

IMAGE THUMBNAILING VIA MULTI-VIEW FACE DETECTION

CHIH-CHAU MA¹, YI-HSUAN YANG², WINSTON HSU³

¹ Department of Computer Science and Information Engineering, National Taiwan University, Taipei, Taiwan
² Graduate Institute of Communication Engineering, National Taiwan University, Taipei, Taiwan
³ Graduate Institute of Networking and Multimedia, National Taiwan University, Taipei, Taiwan
E-mail: b91902082@ntu.edu.tw, affige@gmail.com, winston@csie.ntu.edu.tw

Abstract: The abstract is an essential part of the paper. Use short, direct, and complete sentences.

Keywords: Tracking; estimation; information fusion; resource management

1. Introduction

These are instructions for authors typesetting for the Asia-Pacific Workshop on Visual Information Processing (VIP07), to be held in Taiwan from 15 to 17 December 2007. This document has been prepared using the required format. The electronic copy of this document can be found at http://conf.ncku.edu.tw/vip2007/index.htm.

Figure 1. Figure’s caption.

2. Related Works

MS Word users: please use the paragraph styles contained in this document: Title, Author, Affiliation, Abstract, Keywords, Body Text, Equation, Reference, Figure, and Caption. Try not to change the styles manually.

2.1. Length

Papers should be limited to 6 pages. Do not include page numbers in the text.

2.2. Mathematical formulas

Mathematical formulas should be roughly centered and numbered, as in formula (1):

$$ y = f(x) \qquad (1) $$

[Figure 2 diagram: input (in) and output (out); blocks labelled Face Detection, Face Patch Classification, Skin Color Detection, Face Region Shift, Face Region Merging, SVM Face Classification, SVM View Classification, and View Classification.]

Figure 2. System diagram of the proposed multi-view face classification method.

3. Multi-view Face Detection

We decompose multi-view face detection into two sub-problems: face detection and multi-view face classification. For each input image, we first apply a face detector to select face candidates, and then perform multi-view face classification to decide whether each candidate is a face and, if so, which view it shows. This decomposition makes the two classifiers easier to optimize, since the useful features and parameters may differ greatly between the two sub-problems.

To reduce computational effort, we build our algorithm hierarchically, following the simple-to-complex strategy [1]. In the first and second stages, the face detectors are simple, yet sufficient to discard most of the non-faces. The remaining non-faces are removed by a classifier composed of several Support Vector Machines (SVMs [8]), which are known for their strong discriminating power. The relatively high computational cost of SVMs is largely alleviated because typically only a few dozen face candidates remain to be classified. For view classification, we train another classifier to discriminate among the five views of concern. The system diagram of the proposed multi-view face detection method is shown in Figure 2, and the details of each component are described in the following subsections.

3.1. Face Detection

Given an input image, we must detect the regions containing faces of any view. An efficient face detector is applied first, and its results are thresholded to remove some false alarms. The remaining results are enlarged and shifted so that they cover the head regions more precisely, and are then passed as face candidates to the face classifier in 3.2.

As the first stage of face detection, we consider only the luminance component of the image and adopt the real-time face patch classifier fdlib [6] for its speed and efficiency. We tune the parameter of fdlib so that most faces can be detected. The resulting high recall comes with more false alarms and therefore a low precision. This is acceptable, however, because the number of face candidates is already far smaller than in an exhaustive search, and the precision can be improved in the following stages. Figure 3 shows a sample result of fdlib.

Figure 3. Sample result of the face patch classifier fdlib. Red squares represent detected face candidates. Left: low threshold (high precision, low recall); right: high threshold (low precision, high recall).

Next comes the chromatic component. We compute a binary skin color map for each image with the skin color detection method proposed in [7], and discard face candidates whose proportion of skin-tone pixels does not exceed a predefined threshold δc. The threshold is set adaptively according to the proportion r of skin-tone pixels in the whole image:

$$ \delta_c = \max\bigl(0.25,\ \min(0.5,\ 2r)\bigr) \qquad (1) $$

Two sample results are shown in Figure 4: the skin color detector removes most of the false alarms in the detection results.

Figure 4. Sample results of the skin color detector. Red squares: face candidates of the first stage; green squares: face candidates retained by the skin color detector. The precision rate has been greatly improved.

Because a face candidate from the first two stages encompasses only the face region, i.e., the area from the eyes to the mouth, we shift and scale each candidate to include the whole head region, so that we can exploit information such as the distribution of hair, which can be a useful feature for both face and view classification. The face candidates produced by the previous stages are square regions of various scales. For a face candidate with center (x, y) and side length l, we enlarge the face region to cover (x − l : x + l, y − 2l/3 : y + 4l/3). The proportion is unequal along the y-axis because a face usually lies in the lower part of the head area (due to hair and forehead). After scaling, the side length of the face region becomes 2l.

Figure 5. Sample results of the face region shift algorithm. Blue squares represent the shifted face regions. Note that the blue squares cover almost the entire head areas.
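The candidate refinement of 3.1 can be sketched in Python as below: skin-ratio filtering with the adaptive threshold δc of formula (1), enlargement to the head region, and the re-centering on the skin-tone centroid explained in the next paragraph. This is a minimal sketch under our own assumptions, not the authors' implementation: the binary skin map (standing in for the output of the detector in [7]) is a list of 0/1 rows, each candidate is a hypothetical (x, y, l) tuple, boxes use pixel-index coordinates clipped to the image, the skin ratio is taken over the candidate square, and all function names are illustrative.

```python
from typing import List, Sequence, Tuple

SkinMap = Sequence[Sequence[int]]  # binary skin map: skin_map[row][col] in {0, 1}
Box = Tuple[float, float, float, float]  # (x0, x1, y0, y1) in pixel-index units


def skin_threshold(skin_map: SkinMap) -> float:
    """Formula (1): delta_c = max(0.25, min(0.5, 2r)), r = skin proportion of the image."""
    total = sum(len(row) for row in skin_map)
    r = sum(sum(row) for row in skin_map) / total
    return max(0.25, min(0.5, 2 * r))


def _pixels(skin_map: SkinMap, box: Box):
    """Row and column index ranges covered by box, clipped to the image."""
    x0, x1, y0, y1 = box
    rows = range(max(0, int(y0)), min(len(skin_map), int(y1)))
    cols = range(max(0, int(x0)), min(len(skin_map[0]), int(x1)))
    return rows, cols


def skin_ratio(skin_map: SkinMap, box: Box) -> float:
    """Fraction of skin pixels inside box (0.0 for an empty box)."""
    rows, cols = _pixels(skin_map, box)
    area = len(rows) * len(cols)
    return sum(skin_map[r][c] for r in rows for c in cols) / area if area else 0.0


def candidate_box(x: float, y: float, l: float) -> Box:
    """Square candidate with center (x, y) and side length l."""
    return (x - l / 2, x + l / 2, y - l / 2, y + l / 2)


def enlarge(x: float, y: float, l: float) -> Box:
    """Head region (x-l : x+l, y-2l/3 : y+4l/3) exactly as in the text; side becomes 2l."""
    return (x - l, x + l, y - 2 * l / 3, y + 4 * l / 3)


def shift_to_skin_centroid(skin_map: SkinMap, box: Box) -> Box:
    """Re-center box (same size) on the centroid of the skin pixels inside it."""
    rows, cols = _pixels(skin_map, box)
    pts = [(c, r) for r in rows for c in cols if skin_map[r][c]]
    if not pts:
        return box  # no skin pixels: leave the region unchanged (our assumption)
    cx = sum(c for c, _ in pts) / len(pts)
    cy = sum(r for _, r in pts) / len(pts)
    half_w, half_h = (box[1] - box[0]) / 2, (box[3] - box[2]) / 2
    return (cx - half_w, cx + half_w, cy - half_h, cy + half_h)


def refine_candidates(skin_map: SkinMap,
                      candidates: Sequence[Tuple[float, float, float]]) -> List[Box]:
    """Skin-ratio filter, then enlarge and shift each surviving (x, y, l) candidate."""
    delta_c = skin_threshold(skin_map)
    refined = []
    for x, y, l in candidates:
        if skin_ratio(skin_map, candidate_box(x, y, l)) <= delta_c:
            continue  # skin proportion does not exceed delta_c: discard
        refined.append(shift_to_skin_centroid(skin_map, enlarge(x, y, l)))
    return refined
```

Applying the filter before the enlargement keeps the skin test on the eyes-to-mouth square that fdlib actually reports, while the centroid shift only moves the enlarged region and never resizes it.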
Because the face candidate is not always located at the center of the corresponding head area due to detection error (especially for profile faces), we compute the centroid of the skin-tone pixels in the enlarged face region and then shift the face region so that it is centered at that centroid. Two sample results are shown in Figure 5; the shifted face regions now include almost the whole head area.

3.2. Multi-View Face Classification

Many face candidates are selected by the process described in 3.1, but some of them are false alarms. Hence, face classification is required to find the true faces. In our approach, the candidates are first classified as face or non-face by a face classifier. Then a view classifier is applied to the positive results to identify the view of each face. Both classifiers are composed of several SVMs, and LIBSVM [9] is used in the implementation.

The training face data are collected from the web via search engines. Keywords related to people (such as “wedding”, “movie star”, and “birthday”) are used to find photos in which people appear, and the face regions are cropped and resized to 100×100 pixels. To balance the face views, 110 faces are collected for each of the five views, for a total of 550 faces. These data are used directly as the training data of the view classifier. The training data of the face classifier, on the other hand, are collected by applying the face detector and the skin-area threshold of 3.1 to photos that contain people’s faces, and labeling the results as positive data (candidates that are actually faces) or negative data (non-faces that were erroneously detected). This setting lets the face classifier compensate for the weaknesses of the detection procedure.
Furthermore, since frontal faces outnumber profile faces in typical photos, some training data of the view classifier are added to the positive data of the face classifier, so that the face views remain balanced in the training data and the classifier works on multi-view faces. The training images and validation data are available on the web¹.

The collected training images contain the whole head, not only the facial features from the eyes to the mouth, because we want to use hair information as a feature. Since the training data are 100×100 images, the face candidates selected by the previous stages are resized to 100×100 pixels before being sent to the classifiers.

For feature extraction, several kinds of features were tested, and those with better cross-validation (CV) accuracy were selected. Our final approach uses three feature sets:

(a) Texture features: Gabor textures and phase congruency [10]. The implementation of this feature type is based on [11].
(b) Skin area: we use the skin detector described in 3.1 to obtain a 100×100 binary skin map, and then downsample it to 20×20 as a feature vector. Each cell of the downsampled map covers 5×5 pixels, and its feature value is the ratio of skin pixels in it. This is an important feature because a face image must contain skin, and different views of faces have different distributions of skin area.
(c) Block color histogram: the image is divided into 5×5 blocks, and an RGB color histogram with 4×4×4 bins is constructed for each block. This feature plays a role similar to the skin feature; moreover, it can capture hair information in addition to the skin distribution.

The dimension of each feature set is reduced by a feature selection algorithm.
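Feature sets (b) and (c) can be sketched as follows, assuming a 100×100 binary skin map and a 100×100 RGB image stored as nested lists of 8-bit values. The function names and the per-block normalization of the color histogram are our assumptions; the text fixes only the grid sizes and bin counts, which give 20 × 20 = 400 skin features and 5 × 5 × 64 = 1600 color features, matching the feature counts listed in Table 1.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (R, G, B)


def skin_area_feature(skin_map: Sequence[Sequence[int]], cell: int = 5) -> List[float]:
    """(b) 100x100 binary skin map -> 20x20 grid of skin ratios (400 values)."""
    size = len(skin_map)
    feats = []
    for r0 in range(0, size, cell):
        for c0 in range(0, size, cell):
            cell_px = [skin_map[r][c]
                       for r in range(r0, r0 + cell) for c in range(c0, c0 + cell)]
            feats.append(sum(cell_px) / len(cell_px))  # ratio of skin pixels in the cell
    return feats


def block_color_histogram(image: Sequence[Sequence[Pixel]],
                          blocks: int = 5, bins: int = 4) -> List[float]:
    """(c) 5x5 blocks, one 4x4x4-bin RGB histogram each -> 25 * 64 = 1600 values."""
    step = len(image) // blocks  # 20-pixel blocks for a 100x100 image
    bin_width = 256 // bins  # 64 intensity levels per bin
    feats: List[float] = []
    for br in range(blocks):
        for bc in range(blocks):
            hist = [0.0] * bins ** 3
            for r in range(br * step, (br + 1) * step):
                for c in range(bc * step, (bc + 1) * step):
                    red, green, blue = image[r][c]
                    idx = ((red // bin_width) * bins + green // bin_width) * bins \
                        + blue // bin_width
                    hist[idx] += 1
            # Normalizing each block histogram to sum to 1 is our assumption.
            feats.extend(h / (step * step) for h in hist)
    return feats
```

Keeping the block grid fixed means feature index i always refers to the same image location and color bin, which is what lets the per-feature ranking that follows compare values across training images.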
For feature selection, the quality of a feature f is measured by its signal-to-noise ratio [12] between classes, formula (2):

$$ \mathrm{s2n}(f) = \frac{\mu_1 - \mu_2}{\sigma_1 + \sigma_2} \qquad (2) $$

where μ1 and μ2 are the means of the feature values for the two classes, and σ1 and σ2 are their standard deviations. For the multi-class case of view classification, this measure is computed between all pairs of classes and then averaged. The N features with the highest signal-to-noise ratios are selected and used in training. For each feature set, different values of N are tried, and for each N a parameter search for an SVM with an RBF kernel [8] is performed according to 5-fold CV accuracy. More details are described in 5.2.

The SVM classifiers using different feature sets are combined into a stronger one. This is done by building probabilistic models with LIBSVM, so that each classifier outputs a probability distribution over the labels of a given instance as well as a predicted label. The distributions are averaged over the classifiers, and the label with the highest average probability is the final prediction. In this combination, the view classifier uses all three feature sets, whereas the face classifier uses only the texture and color histogram sets; this choice follows the performance of the individual feature sets.

Although the classifiers filter out most of the false positives, one problem remains. For one person, the face detector may find several face candidates at slightly different resolutions, and more than one of them may be classified as a face. Since they represent the same person, we merge them into a single result. If two nearby face regions output by the face classifier overlap, and the overlapping area is greater than 50% of the smaller region, they are considered to represent the same person, and only the larger face region is kept, together with its view. Figure 6 shows a sample result of this merging algorithm.

Figure 6. A sample result of the face region merging algorithm.
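The signal-to-noise feature ranking, the probability-averaging fusion, and the overlap-based merging described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' code: population standard deviations are used, a tiny epsilon guards against zero variance, pairwise scores are averaged in absolute value (a signed average would let class pairs cancel out), per-classifier probability dictionaries stand in for LIBSVM's probability outputs, and a face region is a hypothetical (x0, y0, side, view) tuple. The pairwise merging rule is applied greedily, largest region first.

```python
from itertools import combinations
from statistics import mean, pstdev
from typing import Dict, List, Sequence, Tuple

EPS = 1e-12  # avoids division by zero when both classes are constant (our assumption)


def s2n(a: Sequence[float], b: Sequence[float]) -> float:
    """Signal-to-noise ratio [12]: (mu1 - mu2) / (sigma1 + sigma2)."""
    return (mean(a) - mean(b)) / (pstdev(a) + pstdev(b) + EPS)


def feature_scores(X: Sequence[Sequence[float]], y: Sequence[int]) -> List[float]:
    """|s2n| per feature, averaged over all class pairs (one pair in the binary case)."""
    classes = sorted(set(y))
    scores = []
    for j in range(len(X[0])):
        col = {k: [row[j] for row, lab in zip(X, y) if lab == k] for k in classes}
        scores.append(mean(abs(s2n(col[p], col[q])) for p, q in combinations(classes, 2)))
    return scores


def select_top_n(scores: Sequence[float], n: int) -> List[int]:
    """Indices of the N features with the highest scores."""
    return sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)[:n]


def fuse(dists: Sequence[Dict[int, float]]) -> int:
    """Average per-classifier label probabilities and return the most probable label."""
    avg = {lab: mean(d[lab] for d in dists) for lab in dists[0]}
    return max(avg, key=avg.get)


Region = Tuple[float, float, float, int]  # (x0, y0, side, view) of a square face region


def merge_regions(regions: Sequence[Region]) -> List[Region]:
    """Greedy merge: drop a region overlapping a kept (larger) one by > 50% of the smaller."""
    kept: List[Region] = []
    for reg in sorted(regions, key=lambda r: r[2], reverse=True):  # largest first
        x0, y0, s, _ = reg
        for kx, ky, ks, _ in kept:
            w = min(x0 + s, kx + ks) - max(x0, kx)
            h = min(y0 + s, ky + ks) - max(y0, ky)
            if w > 0 and h > 0 and w * h > 0.5 * min(s, ks) ** 2:
                break  # same person: the larger kept region (and its view) wins
        else:
            kept.append(reg)
    return kept
```

For example, `merge_regions([(0, 0, 10, 2), (1, 1, 8, 4), (30, 30, 10, 1)])` keeps the two 10-pixel regions and drops the 8-pixel one, whose 64-pixel overlap exceeds half of its own area; the surviving region keeps its own view label.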
Figure 6 (continued). Left: before merging, red squares are detected faces with view categories, and blue squares are detected non-faces. Right: after merging, the green squares are merged into the largest red square, and the view is set according to that red square.

As described above, the multi-view face classification algorithm takes the face candidates produced in 3.1 as input, and finds the true faces among them as well as their views. The view information can be used in photo thumbnailing for aesthetic considerations, which will be described in Section 4.

4. Photo Thumbnailing

5. Experimental Result

5.1. Efficiency

xx

5.2. Accuracy

The best CV accuracy obtained by each type of feature is shown in Table 1, together with the corresponding value of N.

| Classifier | Texture (172) | Skin (400) | Color (1600) |
|---|---|---|---|
| Face | 97.09% (100) | 87.45% (150) | 96.73% (150) |
| View | 77.45% (100) | 77.27% (150) | 76.18% (150) |

Table 1. The best CV accuracy for the three types of features: texture (172 features), skin area (400 features), and color histogram (1600 features). The face classifier has two classes (face/non-face), and the view classifier has five classes for the five views. The number in parentheses is the value of N that yields that CV accuracy.

According to the results, the skin area feature is good for view classification but poor for face classification. The reason is that false positives of the face detectors usually have colors similar to skin, so they are hard to separate from true faces by skin area alone. The texture and color histogram features work well in both cases. Combining the classifiers as described in 3.2 does raise the performance: the resulting CV accuracies of the fusion models are 98.58% and 87.96% for face and view classification, respectively.

6. Conclusion

References

[1] Z.-Q. Zhang, L. Zhu, S.-Z. Li, and H.-J. Zhang, “Real-time multi-view face detection,” Proceedings of Int. Conf. Automatic Face and Gesture Recognition, pp. 142–147, 2002.
[2] Y. Li, S. Gong, J. Sherrah, and H. Liddell, “Support vector machine based multi-view face detection and recognition,” Image and Vision Computing, pp. 413–427, 2004.
[3] P. Wang and Q. Ji, “Multi-view face detection under complex scene based on combined SVMs,” Proceedings of Int. Conf. Pattern Recognition, 2004.
[4] K.-S. Huang and M. M. Trivedi, “Real-time multi-view face detection and pose estimation in Video Stream,” Proceedings of Int. Conf. Pattern Recognition, pp. 965–968, 2004.
[5] B. Ma, W. Zhang, S. Shan, X. Chen, and W. Gao, “Robust head pose estimation using LGBP,” Proceedings of Int. Conf. Pattern Recognition, pp. 512–515, 2006.
[6] W. Kienzle, G. Bakir, M. Franz, and B. Schölkopf, “Face detection - efficient and rank deficient,” Advances in Neural Information Processing Systems 17, pp. 673–680, 2005.
[7] R.-L. Hsu, M. Abdel-Mottaleb, and A. K. Jain, “Face detection in color images,” IEEE Trans. Pattern Analysis and Machine Intelligence, pp. 696–706, 2002.
[8] C.-W. Hsu, C.-C. Chang, and C.-J. Lin, “A practical guide to support vector classification,” Technical report, Department of Computer Science and Information Engineering, National Taiwan University, Taipei, 2003.
[9] C.-C. Chang and C.-J. Lin, “LIBSVM: a library for support vector machines,” 2001. Available at: http://www.csie.ntu.edu.tw/~cjlin/libsvm/.
[10] P. D. Kovesi, “Image features from phase congruency,” Videre: Journal of Computer Vision Research, Vol. 1, No. 3, pp. 1–26, 1999.
[11] P. D. Kovesi, “MATLAB and Octave Functions for Computer Vision and Image Processing,” School of Computer Science & Software Engineering, The University of Western Australia. Available at: http://www.csse.uwa.edu.au/~pk/research/matlabfns/.
[12] T. R. Golub et al., “Molecular classification of cancer: Class discovery and class prediction by gene expression monitoring,” Science, Vol. 286, pp. 531–537, 1999.

¹ http://www.csie.ntu.edu.tw/~r95007/facedata.htm