galaxy - South Green Bioinformatics platform

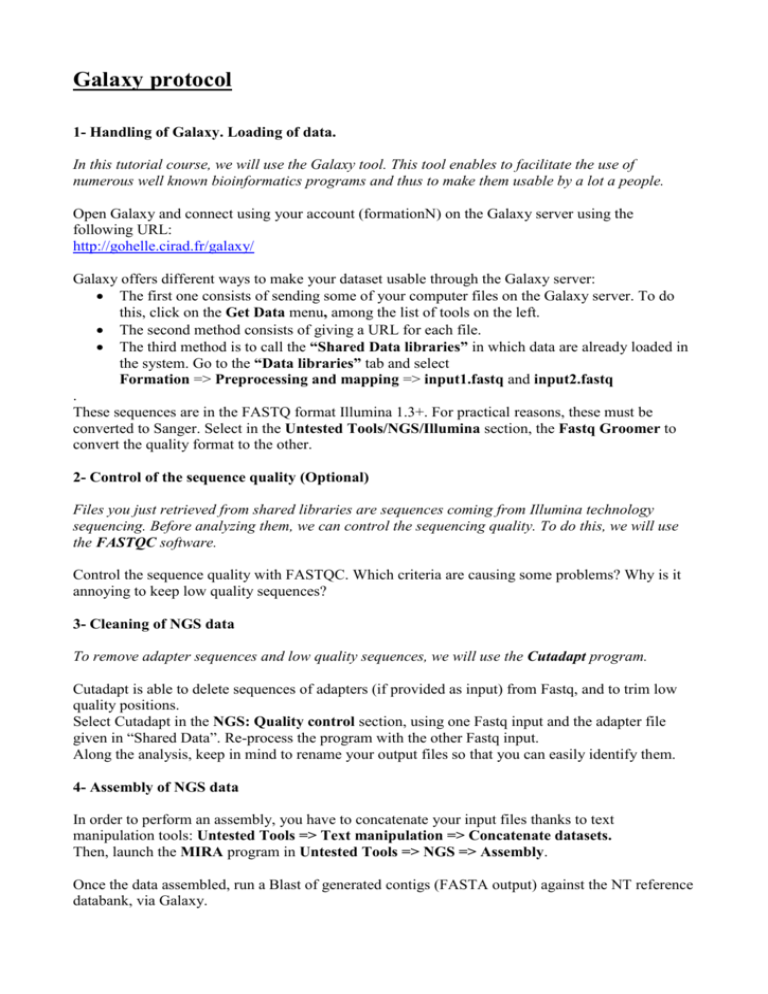

Galaxy protocol

1- Handling of Galaxy. Loading of data

In this tutorial, we will use Galaxy. This tool simplifies the use of many well-known bioinformatics programs and thus makes them accessible to many people.

Open Galaxy and log in with your account (formationN) on the Galaxy server at the following URL: http://gohelle.cirad.fr/galaxy/

Galaxy offers three ways to make your datasets available on the Galaxy server:

The first one consists of uploading files from your computer to the Galaxy server. To do this, click on the Get Data menu, in the list of tools on the left.

The second method consists of giving a URL for each file.

The third method is to use the “Shared Data libraries”, in which data are already loaded in the system. Go to the “Data libraries” tab and select Formation => Preprocessing and mapping => input1.fastq and input2.fastq.

These sequences are in the Illumina 1.3+ FASTQ format. For practical reasons, they must be converted to the Sanger format. In the Untested Tools/NGS/Illumina section, select the Fastq Groomer to convert the quality encoding from one format to the other.

2- Control of the sequence quality (Optional)

The files you just retrieved from the shared libraries are sequences produced by Illumina sequencing. Before analyzing them, we can check the sequencing quality. To do this, we will use the FASTQC software.

Check the sequence quality with FASTQC. Which criteria raise problems? Why is it a problem to keep low-quality sequences?

3- Cleaning of NGS data

To remove adapter sequences and low-quality sequences, we will use the Cutadapt program. Cutadapt can remove adapter sequences (if provided as input) from FASTQ reads and trim low-quality positions.

Select Cutadapt in the NGS: Quality control section, using one Fastq input and the adapter file given in “Shared Data”. Run the program again with the other Fastq input.
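
To get a feel for what the grooming and cleaning steps do to a single read, here is a minimal Python sketch. It is only an illustration under simplifying assumptions, not the way Fastq Groomer or Cutadapt are implemented: the adapter is matched exactly (no sequencing errors tolerated), low-quality bases are simply dropped from the 3' end, and the function names, the example read, the adapter `AGATCGG` and the threshold of 20 are all hypothetical. The only format facts used are that Illumina 1.3+ encodes quality as Phred+64 and Sanger as Phred+33.

```python
def illumina13_to_sanger(quality):
    """Re-encode a quality string from Phred+64 (Illumina 1.3+) to Phred+33 (Sanger)."""
    converted = []
    for char in quality:
        phred = ord(char) - 64
        if phred < 0:
            raise ValueError(f"{char!r} is not a valid Phred+64 quality character")
        converted.append(chr(phred + 33))
    return "".join(converted)


def remove_3prime_adapter(sequence, quality, adapter):
    """Cut the read at the first exact occurrence of the adapter (simplified rule)."""
    position = sequence.find(adapter)
    if position == -1:
        return sequence, quality
    return sequence[:position], quality[:position]


def trim_low_quality_tail(sequence, sanger_quality, min_phred=20):
    """Drop bases from the 3' end until one reaches min_phred (simplified rule)."""
    end = len(sequence)
    while end > 0 and ord(sanger_quality[end - 1]) - 33 < min_phred:
        end -= 1
    return sequence[:end], sanger_quality[:end]


# Hypothetical read: 4 good bases, 1 poor base, then the adapter.
sequence = "ACGTTAGATCGG"
quality = illumina13_to_sanger("hhhTBhhhhhhh")

sequence, quality = remove_3prime_adapter(sequence, quality, "AGATCGG")
sequence, quality = trim_low_quality_tail(sequence, quality, min_phred=20)
print(sequence, quality)  # ACGT III5
```

In Galaxy, the same three operations are carried out by Fastq Groomer and Cutadapt on whole files, and the real tools handle many more cases than this sketch.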
Throughout the analysis, remember to rename your output files so that you can easily identify them.

4- Assembly of NGS data

To perform an assembly, you first have to concatenate your input files with the text manipulation tools: Untested Tools => Text manipulation => Concatenate datasets.

Then, launch the MIRA program in Untested Tools => NGS => Assembly.

Once the data are assembled, run a Blast of the generated contigs (FASTA output) against the NT reference databank, via Galaxy.

5- Separate sequences of each individual using a regular expression

The sequences were sampled from different individuals, so we must separate the reads by individual (RC) in order to later identify the origin of variations.

We are going to use the tool Untested Tools => NGS => Generic Fastq manipulation => Manipulate FASTQ

Select your cleaned sequence file and click on “match reads”. Choose to match by “Name/Identifier”, with an identifier detected by a “Regular expression” that eliminates all sequences that do not belong to the individual of interest. In “Manipulate Reads”, add the “miscellaneous action” entitled “Remove reads”. The created file should contain only reads from the chosen individual. Repeat the process with the other file.

6- Mapping of sequences on the Rice transcriptome

At this point, the sequences are cleaned and separated by individual, and are thus ready to be analyzed. Since we have a reference for the Rice transcriptome, we are going to try to position each read on this reference. To do this mapping, we will use the BWA program.

The input files are the 2 cleaned and sample-separated files. Each group of 2 people can perform a mapping for a specified RC sample (Cultivated Rice).

The reference sequence can be imported from Data libraries => preprocessing and Mapping => reference.fasta

Run BWA: NGS: Mapping => BWA

The output file is a SAM file. Observe the different columns of the SAM format.

Sort this SAM file by coordinate using the SortSam utility of PicardTools.
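
The SAM columns and the idea of a coordinate sort can be illustrated with a short Python sketch. It names the 11 mandatory SAM columns and orders alignment lines by reference sequence (in the order of the `@SQ` header lines) and then by position. This is a simplified illustration, not what SortSam does internally: it ignores the `@HD` sort-order field, and the read names and the references `gene1` and `gene2` are made up for the example.

```python
# The 11 mandatory columns of a SAM alignment line, in order.
SAM_COLUMNS = ["QNAME", "FLAG", "RNAME", "POS", "MAPQ", "CIGAR",
               "RNEXT", "PNEXT", "TLEN", "SEQ", "QUAL"]
INTEGER_COLUMNS = ("FLAG", "POS", "MAPQ", "PNEXT", "TLEN")


def parse_alignment(line):
    """Split one tab-separated alignment line into named columns."""
    fields = line.rstrip("\n").split("\t")
    record = dict(zip(SAM_COLUMNS, fields[:11]))
    for name in INTEGER_COLUMNS:
        record[name] = int(record[name])
    record["TAGS"] = fields[11:]  # optional fields such as RG:Z:...
    return record


def sort_by_coordinate(lines):
    """Return header lines, then alignments ordered by (reference, position)."""
    header = [line for line in lines if line.startswith("@")]
    alignments = [line for line in lines if not line.startswith("@")]

    # Rank each reference by its order of appearance in the @SQ lines.
    reference_rank = {}
    for line in header:
        if line.startswith("@SQ"):
            for field in line.rstrip("\n").split("\t")[1:]:
                if field.startswith("SN:"):
                    reference_rank[field[3:]] = len(reference_rank)

    def coordinate(line):
        record = parse_alignment(line)
        # References missing from the header (e.g. "*" for unmapped reads) go last.
        rank = reference_rank.get(record["RNAME"], len(reference_rank))
        return (rank, record["POS"])

    return header + sorted(alignments, key=coordinate)


example = [
    "@SQ\tSN:gene1\tLN:1000",
    "@SQ\tSN:gene2\tLN:800",
    "readA\t0\tgene2\t15\t60\t5M\t*\t0\t0\tACGTA\tIIIII",
    "readB\t0\tgene1\t120\t60\t5M\t*\t0\t0\tACGTA\tIIIII",
    "readC\t16\tgene1\t7\t60\t5M\t*\t0\t0\tACGTA\tIIIII",
]
sorted_lines = sort_by_coordinate(example)
print([parse_alignment(line)["QNAME"] for line in sorted_lines[2:]])
# ['readC', 'readB', 'readA']
```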
SortSam is located under NGS: SAM/BAM manipulation => SortSam

Add the sample name (ReadGroup) to your mapping SAM file using the AddReadGroupIntoSAM utility of SNiPlay.

NGS: SNP Detection => SNiPlay => AddReadGroupIntoSAM

7- Creation of workflows

As you may have noticed, chaining all these steps by hand can be tedious. Galaxy can create workflows to automate this chaining: a workflow is an automated sequence of several programs.

Our workflow will be composed of three parts:
Data formatting
Data treatment
Data formatting

1- Go to the Workflow menu and create a new workflow
2- Add the FastqGroomer program
3- Add the Cutadapt program
4- Connect the FastqGroomer output to the input of Cutadapt
5- Add the Manipulate Fastq program
6- Connect the Cutadapt output to Manipulate Fastq
7- Repeat the process (i.e. all the previous steps of the workflow) for the second initial Fastq
8- Concatenate the outputs of these two workflows
9- Add the BWA program
10- Connect the output of the concatenation to BWA
11- Add the AddReadGroupIntoSam program and connect the BWA output to it
12- Add the SortSam utility and connect it to the previous program.

Your workflow is now functional.

Run your workflow with the files input1.fastq and input2.fastq, and set the options. Options can also be set directly in the workflow steps, so that you don’t have to fill them in each time you run the workflow.

8- Merging of SAM files

Finally, share your history so that all groups can get your mapping. Then, load the mapping files for each RC into the other histories.

To generate a global SAM file containing all samples, merge the different SAM files using the MergeSam utility of PicardTools.
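
The reason for adding a read group before merging can be seen in a small Python sketch: once several SAM files are combined, the `RG:Z:` tag is what still tells the samples apart. The sketch below is a simplified illustration and not the PicardTools implementation. It assumes all files were mapped against the same reference, keeps one copy of each distinct header line, and does not re-sort the merged alignments. The sample names `RC1` and `RC2`, the read names and the reference `gene1` are hypothetical.

```python
from collections import Counter


def merge_sam(sam_files):
    """Merge several SAM files (each given as a list of lines) into one list of lines."""
    header, alignments, seen = [], [], set()
    has_hd = False
    for lines in sam_files:
        for line in lines:
            line = line.rstrip("\n")
            if not line.startswith("@"):
                alignments.append(line)
            elif line in seen or (line.startswith("@HD") and has_hd):
                # Skip duplicated header lines and any additional @HD line.
                continue
            else:
                seen.add(line)
                header.append(line)
                has_hd = has_hd or line.startswith("@HD")
    return header + alignments


def reads_per_read_group(lines):
    """Count alignment lines per RG:Z: tag value."""
    counts = Counter()
    for line in lines:
        if line.startswith("@"):
            continue
        for tag in line.rstrip("\n").split("\t")[11:]:
            if tag.startswith("RG:Z:"):
                counts[tag[5:]] += 1
    return counts


rc1 = [
    "@SQ\tSN:gene1\tLN:1000",
    "@RG\tID:RC1\tSM:RC1",
    "r1\t0\tgene1\t7\t60\t5M\t*\t0\t0\tACGTA\tIIIII\tRG:Z:RC1",
    "r2\t0\tgene1\t42\t60\t5M\t*\t0\t0\tACGTA\tIIIII\tRG:Z:RC1",
]
rc2 = [
    "@SQ\tSN:gene1\tLN:1000",
    "@RG\tID:RC2\tSM:RC2",
    "r3\t0\tgene1\t19\t60\t5M\t*\t0\t0\tACGTA\tIIIII\tRG:Z:RC2",
]

merged = merge_sam([rc1, rc2])
print(dict(reads_per_read_group(merged)))  # {'RC1': 2, 'RC2': 1}
```

Because the merged alignments are simply concatenated here, a coordinate sort would still be needed afterwards if downstream tools expect sorted input.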