Analysis Team Write Up

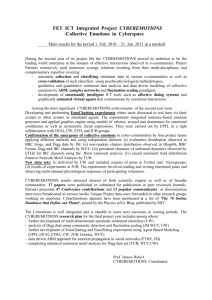


Analysis Team Report

Mengfei Zhang, Jie Zhao, Yiming Zhou (Tsinghua University Graduate School in Shenzhen, China)
Richard Gomer, Sarosh Khan, Terhi Nurmikko (University of Southampton, UK)

Introduction

The aim of the Analysis Team was to receive a corpus of unprocessed text from the Resources Team, carry out a two-fold analysis and present statistics and metrics to the Visualisation Team. Given the practical limitations of the internet connection, cloud storage services were not feasible. The corpora were subjected to two forms of analysis, sentiment analysis followed by a frequency count: the former to establish whether one state's perception of another was broadly positive or negative, and the latter to establish the words most commonly used about the state being discussed.

Objectives

The objectives were to identify the tools and processes needed to carry out sentiment analysis; to identify the tools and processes needed to carry out a frequency count; and to process the data provided by the Resources Team and provide the Visualisation Team with statistics.

Sentiment Analysis

Sentiment analysis was carried out to establish whether the opinions voiced by young people in the U.K. and China were generally positive, negative or neutral. Each message was assigned a probability of belonging to each of the three groups, which allowed a general view to be established. Three values for each country were passed on to the Visualisation Team, representing the number of positive, negative and neutral messages.

Fig. 1 Sentiment analysis workflow

The sentiment analysis was carried out using a web service at www.text-processing.com. This was chosen because time constraints meant that sentiment analysis tools could neither be integrated into the team's custom-built software nor trained; using the web service simplified the process.
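The step from per-message probabilities to the three per-country counts can be sketched in Java, the language of the team's own tooling. This is a minimal, hypothetical sketch rather than the team's code: it assumes each message's positive, negative and neutral probabilities have already been obtained (for example from the web service), and that a message is counted in whichever class has the highest probability. The class, record and method names are illustrative, and the web service may apply its own labelling rule.

```java
import java.util.EnumMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: turns per-message sentiment probabilities into the
// three per-country counts (positive, negative, neutral).
class SentimentTally {

    enum Label { POSITIVE, NEGATIVE, NEUTRAL }

    // Probabilities for one message (assumed already obtained, e.g. from a web service).
    record Scores(double pos, double neg, double neutral) {
        // Assumption: the message goes to its highest-probability class;
        // ties are resolved in the order positive, negative, neutral.
        Label label() {
            if (pos >= neg && pos >= neutral) return Label.POSITIVE;
            if (neg >= neutral) return Label.NEGATIVE;
            return Label.NEUTRAL;
        }
    }

    // Counts the messages assigned to each class; every class starts at zero.
    static Map<Label, Integer> tally(List<Scores> messages) {
        Map<Label, Integer> counts = new EnumMap<>(Label.class);
        for (Label label : Label.values()) counts.put(label, 0);
        for (Scores s : messages) counts.merge(s.label(), 1, Integer::sum);
        return counts;
    }

    public static void main(String[] args) {
        List<Scores> demo = List.of(
                new Scores(0.7, 0.2, 0.1),
                new Scores(0.1, 0.8, 0.1),
                new Scores(0.3, 0.3, 0.4));
        System.out.println(tally(demo)); // {POSITIVE=1, NEGATIVE=1, NEUTRAL=1}
    }
}
```

Running `tally` once per country's message list yields exactly the three numbers handed to the Visualisation Team; pre-filling every class with zero keeps a class present in the output even when no message falls into it.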
Since the web service was tailored to English, the Chinese text was first translated into English. The Bing API was used because it was freely available, unlike other options considered, such as the Google Translate API. Limited time meant that only cursory searches for suitable tools were possible, and the training and integration of existing and custom-made programs was limited. No suitable program for analysing the Chinese text directly was identified, so the most practical method was judged to be translating the Chinese data into English and then analysing its sentiment with the service at www.text-processing.com. However, the Bing API had problems translating the Chinese text: the Analysis Team found that only one term out of several was translated. This raised concerns about the resulting data set. It is recommended that accuracy be measured against human translation to determine the degree of error or, alternatively, that the analysis be carried out directly with a sentiment analysis tool trained on Chinese.

The Frequency Count

The frequency count was used to establish the ten most commonly used nouns and adjectives in the dataset provided by the Resources Team. Its value was that it allowed us to gauge which words young people in the U.K. and China were using about each other and about other states, and it provided data for the Visualisation Team. Frequency counting for all the content was carried out with a Java program [1] developed by the Chinese students, using a library developed by the Chinese Academy of Sciences. Initially, an attempt was made to analyse the entire sample of 7,000 words, but memory was exhausted and the program terminated.
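Because loading the whole sample at once exhausted memory, one way to bound the working set is to count words batch by batch and fold each batch into a running total. The Java sketch below is hypothetical and is not the team's program: it uses naive letter-based tokenisation in place of the Chinese Academy of Sciences library (so it does no part-of-speech tagging or Chinese word segmentation), and the names `count`, `topN`, the batch size and the stop list are illustrative parameters.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of a batched word-frequency count (not the team's program).
class BatchedFrequency {

    // Counts words batch by batch, merging each batch into a running total.
    static Map<String, Integer> count(List<String> items, int batchSize, Set<String> stopWords) {
        if (batchSize <= 0) throw new IllegalArgumentException("batchSize must be positive");
        Map<String, Integer> totals = new HashMap<>();
        for (int start = 0; start < items.size(); start += batchSize) {
            List<String> batch = items.subList(start, Math.min(start + batchSize, items.size()));
            Map<String, Integer> batchCounts = new HashMap<>();
            for (String item : batch) {
                // Naive tokenisation (assumption): lower-case, split on runs of non-letters.
                // Unsegmented Chinese text would need a word segmenter here instead.
                for (String token : item.toLowerCase(Locale.ROOT).split("[^\\p{L}]+")) {
                    if (!token.isEmpty() && !stopWords.contains(token)) {
                        batchCounts.merge(token, 1, Integer::sum);
                    }
                }
            }
            // Fold this batch into the totals; its working map is then discarded.
            batchCounts.forEach((word, n) -> totals.merge(word, n, Integer::sum));
        }
        return totals;
    }

    // Returns the n most frequent words; ties are broken alphabetically.
    static List<String> topN(Map<String, Integer> counts, int n) {
        List<Map.Entry<String, Integer>> entries = new ArrayList<>(counts.entrySet());
        entries.sort(Map.Entry.<String, Integer>comparingByValue().reversed()
                .thenComparing(Map.Entry.<String, Integer>comparingByKey()));
        List<String> top = new ArrayList<>();
        for (int i = 0; i < Math.min(n, entries.size()); i++) {
            top.add(entries.get(i).getKey());
        }
        return top;
    }

    public static void main(String[] args) {
        List<String> sample = List.of("the tea is good", "good food and good tea", "the weather");
        Map<String, Integer> counts = count(sample, 2, Set.of("the", "is", "and"));
        System.out.println(topN(counts, 2)); // [good, tea]
    }
}
```

Calling `count` with a batch size of 1,000 mirrors the sectioning the team adopted; because batches are merged by addition, the final totals do not depend on the batch size, only the peak size of the per-batch working map does.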
It was then decided to break the set of 7,000 into sections of 1,000, which were analysed in turn: once a section had been processed it was deleted, so that it would not be processed again, and the next 1,000 were processed. The part of speech of each word was identified, and the twenty most frequently used nouns were extracted. For the English content, automatic part-of-speech detection was not carried out.

Fig. 2 Frequency count workflow

A stop list removed many commonly occurring words and phrases with little semantic value, reducing the program's memory requirements and producing cleaner results. This automated analysis was followed by qualitative filtering to remove unhelpful words that remained in the results, including pronouns, conjunctions and words lacking contextual value. Furthermore, it was decided to remove from the results both the nation being discussed and the nation discussing it, along with terms for the nationalities of the two states: where young people from the U.K. were talking about China, terms such as 'U.K.', 'China', 'British' and 'Chinese', and all their synonyms, were excluded. The reasoning was that leaving such terms in would add no value in establishing what young people thought and would merely take up places in the list.

The Visualisation Team was then provided with .txt files containing the 20 most commonly used words for each country's view of another state (e.g. 'UKviewsonChina.txt').

[1] https://github.com/zhouym06/webvisionproject

Further Work

The work carried out by the Analysis Team highlighted many opportunities for future work. A longer timescale would allow local sentiment analysis libraries to be integrated with existing programs, and such tools to be trained for Chinese.
Future work should also examine further natural language processing (NLP) techniques, such as handling mixed-case words more effectively. It should also allow time to fix issues such as bugs in the practical implementation of the Java program used for the analysis. One of the challenges encountered was that the data sets for certain states were too small. Their size was limited by the time available, so the results presented, for example for the opinions of young people in the U.K. about the United States of America (USA), were not as comprehensive as they might otherwise have been. Future research would scrape more data for each country, including Australia, Singapore and Canada, with a view to providing more comprehensive results.

Future work could increase the number and type of words placed on the stop list. Exploring phrases rather than individual words should also be considered as a way of obtaining more informative results. The research found that particular phrases appeared in certain instances: for example, in the U.K.'s perception of Japan, the words 'Western' and 'Front' both appeared quite high up the frequency list, although 'Western' was not high enough to reach the top 20 passed on to the Visualisation Team. Counting the frequency of phrases, by analysing sets of (for example) three consecutive words in turn, would therefore allow particular phrases, and not merely individual words, to be spotted.
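Counting phrases rather than single words amounts to an n-gram count: slide a window of n consecutive words over each message and tally each window. The Java sketch below is hypothetical and not part of the team's program; it assumes whitespace-separated text, compares words case-insensitively, does not strip punctuation, and never lets a window span two messages. The names `PhraseCount` and `countNGrams` are illustrative.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

// Hypothetical sketch of phrase (n-gram) counting; not part of the team's program.
class PhraseCount {

    // Counts every run of n consecutive words; windows never span two messages.
    static Map<String, Integer> countNGrams(List<String> messages, int n) {
        if (n <= 0) throw new IllegalArgumentException("n must be positive");
        Map<String, Integer> counts = new HashMap<>();
        for (String message : messages) {
            if (message.isBlank()) continue; // nothing to count
            // Assumption: whitespace-separated text, compared case-insensitively.
            String[] words = message.toLowerCase(Locale.ROOT).trim().split("\\s+");
            for (int i = 0; i + n <= words.length; i++) {
                String phrase = String.join(" ", Arrays.copyOfRange(words, i, i + n));
                counts.merge(phrase, 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> messages = List.of(
                "the Western Front was long",
                "reading about the Western Front");
        System.out.println(countNGrams(messages, 3).get("the western front")); // 2
    }
}
```

With n = 3 a phrase such as "the western front" is counted as one unit, so it can rank on its own even when one of its words, like 'Western' above, falls outside the single-word top 20. The resulting map can be passed to the same stop-list filtering and top-N ranking used for single words.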