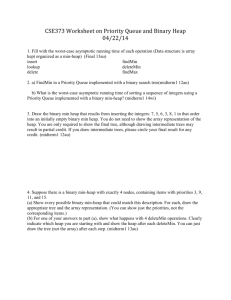

CS-404 Analysis & Design of Algorithm [ADA]

advertisement

![CS-404 Analysis & Design of Algorithm [ADA]](http://s3.studylib.net/store/data/007238023_1-56e43678b82e01f44f3ae3739f0a22ac-768x994.png)

1

GEC Group of College’s

Dept. of Computer Sc. &Engg. and IT

Teaching Notes

CS/IT – 404

ADA

Prepared by

Mr. Dharmveer Singh bhadauria

2

Analysis & Design of Algorithm(syllabus)

Unit I

Algorithms, Designing algorithms, analyzing algorithms, asymptotic notations, heap and heap sort.

Introduction to divide and conquer technique, analysis, design and comparison of various algorithms

based on this technique, example binary search, merge sort, quick sort, strassen’s matrix

multiplication.

Unit II

Study of Greedy strategy, examples of greedy method like optimal merge patterns, Huffman coding,

minimum spanning trees, knapsack problem, job sequencing with deadlines, single source

shortest path algorithm

Unit III

Concept of dynamic programming, problems based on this approach such as 0/1 knapsack,

multistage graph, reliability design, Floyd-Warshall algorithm

Unit IV

Backtracking concept and its examples like 8 queen’s problem, Hamiltonian cycle, Graph coloring

problem etc. Introduction to branch & bound method, examples of branch and bound method like

traveling salesman problem etc. Meaning of lower bound theory and its use in solving algebraic

problem, introduction to parallel algorithms.

Unit V

Binary search trees, height balanced trees, 2-3 trees, B-trees, basic search and traversal techniques

for trees and graphs (In order, preorder, postorder, DFS, BFS), NP-completeness

3

S.NO

PAGE NO.

NAME OF TOPIC

1

Algorithms, Designing algorithms, analyzing algorithms,

asymptotic notations

4-13

2

Heap and heap sort

14-19

3

Introduction to divide and conquer technique,

20

4

Binary search

21

5

Merge sort

22-29

6

Quick sort

30-35

7

Strassen’s matrix multiplication

36-37

4

Algorithm

• An algorithm is a set of instructions to be followed to solve a

problem.

– There can be more than one solution (more than one

algorithm) to solve a given problem.

– An algorithm can be implemented using different

programming languages on different platforms.

• An algorithm must be correct. It should correctly solve the

problem.

– e.g. For sorting, this means even if

– (1) the input is already sorted, or

– (2) it contains repeated elements.

Once we have a correct algorithm for a problem, we have to

determine the efficiency of that algorithm.

Algorithmic Performance

There are two aspects of algorithmic performance:

• Time

• Instructions take time.

• How fast does the algorithm perform?

• What affects its runtime?

• Space

• Data structures take space

5

• What kind of data structures can be used?

• How does choice of data structure affect the

runtime?

We will focus on time:

– How to estimate the time required for an algorithm.

– How to reduce the time required.

Analysis of Algorithms

• Analysis of Algorithms is the area of computer science that

provides tools to analyze the efficiency of different methods

of solutions.

• How do we compare the time efficiency of two algorithms

that solve the same problem?

Naïve Approach: implement these algorithms in a

programming language (C++), and run them to compare their time

requirements. Comparing the programs (instead of algorithms)

has difficulties.

– How are the algorithms coded?

• Comparing running times means comparing the

implementations.

• We should not compare implementations, because

they are sensitive to programming style that may

cloud the issue of which algorithm is inherently

more efficient.

– What computer should we use?

6

• We should compare the efficiency of the

algorithms independently of a particular computer.

– What data should the program use?

Any analysis must be independent of specific data.

• When we analyze algorithms, we should employ

mathematical

techniques

that

analyze

algorithms

independently of specific implementations, computers, or

data.

• To analyze algorithms:

– First, we start to count the number of significant

operations in a particular solution to assess its

efficiency.

– Then, we will express the efficiency of algorithms using

growth functions.

The Execution Time of Algorithms

• Each operation in an algorithm (or a program) has a cost.

Each operation takes a certain of time.

count = count + 1; take a certain amount of time,

but it is constant

A sequence of operations:

count = count + 1;

Cost: c1

sum = sum + count;

Cost: c2

7

Total Cost = c1 + c2

Example: Simple If-Statement

Cost

if (n < 0)

absval = -n

Times

c1

1

c2

1

c3

1

else

absval = n;

Total Cost <= c1 + max(c2,c3)

Example: Simple Loop

Cost

Times

i = 1;

c1

1

sum = 0;

c2

1

while (i <= n) {

c3

n+1

i = i + 1;

c4

n

sum = sum + i;

c5

n

}

Total Cost = c1 + c2 + (n+1)*c3 + n*c4 + n*c5

The time required for this algorithm is

proportional to n

Example: Nested Loop

8

Cost

Times

i=1;

c1

1

sum = 0;

c2

1

while (i <= n) {

c3

n+1

j=1;

c4

n

while (j <= n) {

c5

n*(n+1)

sum = sum + i;

c6

n*n

j = j + 1;

c7

n*n

}

i = i +1;

c8

}

Total Cost = c1 + c2 + (n+1)*c3 + n*c4 +

n*(n+1)*c5+n*n*c6+n*n*c7+n*c8

The time required for this algorithm is

proportional to n2

n

9

General Rules for Estimation

• Loops: The running time of a loop is at most the running

time of the statements inside of that loop times the number

of iterations.

•

Nested Loops: Running time of a nested loop containing a

statement in the inner most loop is the running time of

statement multiplied by the product of the sized of all loops.

• Consecutive Statements: Just add the running times of

those consecutive statements.

•

If/Else: Never more than the running time of the test plus the

larger of running times of S1 and S2.

Algorithm Growth Rates

• We measure an algorithm’s time requirement as a function

of the problem size.

– Problem size depends on the application: e.g. number

of elements in a list for a sorting algorithm, the number

disks for towers of hanoi.

• So, for instance, we say that (if the problem size is n)

– Algorithm A requires 5*n2 time units to solve a problem

of size n.

– Algorithm B requires 7*n time units to solve a problem

of size n.

10

• The most important thing to learn is how quickly the

algorithm’s time requirement grows as a function of the

problem size.

– Algorithm A requires time proportional to n2.

– Algorithm B requires time proportional to n.

• An algorithm’s proportional time requirement is known as

growth rate.

• We can compare the efficiency of two algorithms by

comparing their growth rates.

Time requirements as a function of the problem size n

11

Common Growth Rates

Function

Growth

Name

c

Constant

log N

Logarithmic

log2N

Log-squared

N

Linear

N log N

N2

Quadratic

N3

Cubic

2N

Exponential

Rate

12

Figure 1.1

Running times for small inputs

Order-of-Magnitude Analysis and Big O Notation

• If Algorithm A requires time proportional to f(n), Algorithm A

is said to be order f(n), and it is denoted as O(f(n)).

• The function f(n) is called the algorithm’s growth-rate

function.

• Since the capital O is used in the notation, this notation is

called the Big O notation.

• If Algorithm A requires time proportional to n2, it is O(n2).

• If Algorithm A requires time proportional to n, it is O(n).

13

Definition of the Order of an Algorithm

Definition:

Algorithm A is order f(n) – denoted as O(f(n)) –

if constants k and n0 exist such that A requires

no more than k*f(n) time units to solve a problem

of size n n0.

• The requirement of n n0 in the definition of O(f(n))

formalizes the notion of sufficiently large problems.

– In general, many values of k and n can satisfy this

definition.

– If an algorithm requires n2–3*n+10 seconds to solve a

problem size n. If constants k and n0 exist such that

–

–

–

–

–

k*n2 > n2–3*n+10 for all n n0 .

the algorithm is order n2 (In fact, k is 3 and n0 is 2)

3*n2 > n2–3*n+10 for all n 2 .

Thus, the algorithm requires no more than k*n2 time

units for n n0 ,

So it is O(n2)

14

What to Analyze

• An algorithm can require different times to solve different

problems of the same size.

– Eg. Searching an item in a list of n elements using

sequential search. Cost: 1,2,...,n

• Worst-Case Analysis –The maximum amount of time that

an algorithm require to solve a problem of size n.

– This gives an upper bound for the time complexity of an

algorithm.

– Normally, we try to find worst-case behavior of an

algorithm.

• Best-Case Analysis –The minimum amount of time that an

algorithm require to solve a problem of size n.

– The best case behavior of an algorithm is NOT so

useful.

• Average-Case Analysis –The average amount of time that

an algorithm require to solve a problem of size n.

– Sometimes, it is difficult to find the average-case

behavior of an algorithm.

– We have to look at all possible data organizations of a

given size n, and their distribution probabilities of these

organizations.

Worst-case analysis is more common than averagecase analysis.

15

What is a “heap

Definitions of heap:

1. A large area of memory from which the programmer

can allocate blocks as needed, and deallocate them (or

allow them to be garbage collected) when no longer

needed.

2. A balanced, left-justified binary tree in which no node

has a value greater than the value in its parent.

These two definitions have little in common.

Heapsort uses the second definition.

The heap property

A node has the heap property if the value in the node is

as large as or larger than the values in its children

All leaf nodes automatically have the heap property

A binary tree is a heap if all nodes in it have the heap

property

16

siftUp

Given a node that does not have the heap property, you

can give it the heap property by exchanging its value

with the value of the larger child.

All leaf nodes automatically have the heap property.

A binary tree is a heap if all nodes in it have the heap

property.

Sorting

What do heaps have to do with sorting an array?

Here’s the neat part:

Because the binary tree is balanced and left justified, it can

be represented as an array

Danger Will Robinson: This representation works well only

with balanced, left-justified binary trees

All our operations on binary trees can be represented as

operations on arrays

To sort:

heapify the array;

while the array isn’t empty {

remove and replace the root;

reheap

the

new

root

node;

}

17

Mapping into an array

18

Removing and replacing the root

19

Reheap and repeat

...And again, remove and replace the root

node

20

Analysis

21

Divide and Conquer

Divide–and-Conquer

is a very common and very powerful algorithm design technique. The

general idea:

1. Divide the complete instance of problem into two (sometimes more)

sub problems that are smaller instances of the original.

2. Solve the sub problems (recursively).

3. Combine the sub problem solutions into a solution to the complete

(original) instance.

While the most common case is that the problem of size n

is divided into 2 sub problems of size n/2 . But in general, we can divide

the problem into b sub problems of size n/b , where a of those sub

problems need to be solved.

A sequential search algorithm

n

i

( n 2 n) / 2

n

n

i 1

22

Binary Search

Binary Search – Analysis

23

Merge Sort Approach

24

Example – n Power of 2

Divide

1

2

3

4

5

6

7

8

5

2

4

7

1

3

2

6

q=4

1

2

3

4

5

6

7

8

5

2

4

7

1

3

2

6

1

2

3

4

5

6

7

8

5

2

4

7

1

3

2

6

1

2

3

4

5

6

7

8

5

2

4

7

1

3

2

6

7

Example – n Power of 2

Conquer

and

Merge

1

2

3

4

5

6

7

8

1

2

2

3

4

5

6

7

1

2

3

4

5

6

7

8

2

4

5

7

1

2

3

6

1

2

3

4

5

6

7

8

2

5

4

7

1

3

2

6

1

2

3

4

5

6

7

8

5

2

4

7

1

3

2

6

8

25

Example – n Not a Power of 2

Conquer

and

Merge

1

2

3

4

5

6

7

8

9

1

2

2

3

4

4

5

6

6

10

1

2

3

4

5

6

7

8

9

1

2

4

4

6

7

2

3

5

1

2

3

4

5

6

7

8

9

2

4

7

1

4

6

3

5

7

1

2

3

4

5

6

7

8

9

4

7

2

1

6

4

3

7

5

1

2

4

5

7

8

4

7

6

1

7

3

11

7

7

10

11

6

7

10

11

2

6

10

11

2

6

10

Merging

p

r

q

1

2

3

4

5

6

7

8

2

4

5

7

1

2

3

6

• Input: Array A and indices p, q, r such that

p ≤q < r

– Subarrays A[p . . q] and A[q + 1 . . r] are sorted

• Output: One single sorted subarray A[p . . r]

11

26

Merging

• Idea for merging:

– Two piles of sorted cards

p

r

q

1

2

3

4

5

6

7

8

2

4

5

7

1

2

3

6

• Choose the smaller of the two top cards

• Remove it and place it in the output pile

– Repeat the process until one pile is empty

– Take the remaining input pile and place it face-down

onto the output pile

A1 A[p, q]

A[p, r]

A2 A[q+1, r]

12

Example: MERGE(A, 9, 12, 16)

14

27

Example (cont.)

15

Example (cont.)

16

28

Example (cont.)

Done!

17

Merge - Pseudocode

Alg.: MERGE(A, p, q, r)

p

1

2

r

q

2

3

4

5

6

7

8

4

5

7

1

2

3

6

1. Compute n1 and n2

2. Copy the first n1 elements into

n1

L[1 . . n1 + 1] and the next n2 elements into R[1 . . n2

p

3. L[n1 + 1] ← ;

R[n2 + 1] ←

4. i ← 1;

j ← 1

L

2

4

5

q+ 1

5. for k ← p to r

R

1

2

3

6.

do if L[ i ] ≤ R[ j ]

7.

then A[k] ← L[ i ]

8.

i ←i + 1

9.

else A[k] ← R[ j ]

10.

j ← j + 1

n2

+ 1]

q

7

r

6

18

29

Running Time of Merge

(assume last for loop)

• Initialization (copying into temporary arrays):

– (n1 + n2) = (n)

• Adding the elements to the final array:

- n iterations, each taking constant time (n)

• Total time for Merge:

– (n)

19

MERGE-SORT Running Time

• Divide:

– compute q as the average of p and r: D(n) = (1)

• Conquer:

– recursively solve 2 subproblems, each of size n/2

2T (n/2)

• Combine:

– MERGE on an n-element subarray takes (n) time

C(n) = (n)

T(n) =

(1)

if n =1

2T(n/2) + (n) if n > 1

21

30

Solve the Recurrence

T(n) =

c

2T(n/2) + cn

if n = 1

if n > 1

Use Master’s Theorem:

Compare n with f(n) = cn

Case 2: T(n) = Θ(nlgn)

22

Merge Sort - Discussion

• Running time insensitive of the input

• Advantages:

– Guaranteed to run in (nlgn)

• Disadvantage

– Requires extra space N

23

31

Quicksort

• Sort an array A[p…r]

A[p…q]

≤ A[q+1…r]

• Divide

– Partition the array A into 2 subarrays A[p..q] and A[q+1..r], such

that each element of A[p..q] is smaller than or equal to each

element in A[q+1..r]

– Need to find index q to partition the array

30

Quicksort

A[p…q]

≤ A[q+1…r]

• Conquer

– Recursively sort A[p..q] and A[q+1..r] using Quicksort

• Combine

– Trivial: the arrays are sorted in place

– No additional work is required to combine them

– The entire array is now sorted

31

32

QUICKSORT

Alg.: QUICKSORT(A, p, r)

Initially: p=1, r=n

if p < r

then q PARTITION(A, p, r)

QUICKSORT (A, p, q)

QUICKSORT (A, q+1, r)

Recurrence:

T(n) = T(q) + T(n – q) + f(n)

PARTITION())

32

Partitioning the Array

• Ch o o s i n g PARTIT ION()

– Ther e ar e dif f er ent ways

– Each has it s own

t o do t his

advant ages/ disadvant a ges

• Ho a re p a rtit i on (see pro b. 7-1 , p ag e 15 9 )

– Select a pivot

elem ent

– G r ows t wo r egions

A[ p…i ] x

x ar ound

which

t o par t it ion

A[ p…i ] x

x A[ j…r]

x A[ j …r]

i

j

33

33

Example

A[p…r]

5

3

2

6

4

pivot x=5

1

3

7

i

5

j

3

3

2

6

4

1

i

5

7

3

2

6

4

1

i

3

3

2

j

3

2

1

i

4

6

5

6

4

1

i

7

3

7

j

3

j

2

5

7

j

A[p…q]

3

3

A[q+1…r]

1

4

j

6

5

7

i

34

Example

35

34

Partitioning the Array

Alg. PARTITION (A, p, r)

1.

2.

3.

4.

5.

6.

7.

8.

9.

10.

11.

r

p

x A[p]

5

3

2

6

4

1

3

7

A:

i p – 1

j r + 1

i

j

A[p…q]

≤ A[q+1…r]

while TRUE

ap

ar

do repeat j j – 1

A:

until A[j] ≤ x

j=q i

do repeat i i + 1

until A[i] ≥ x

Each element is

if i < j

visited once!

Running time: (n)

then exchange A[i] A[j]

n = r – p + 1

else return j

36

Recurrence

Alg.: QUICKSORT(A, p, r)

Initially: p=1, r=n

if p < r

then q PARTITION(A, p, r)

QUICKSORT (A, p, q)

QUICKSORT (A, q+1, r)

Recurrence:

T(n) = T(q) + T(n – q) + n

37

35

Worst Case Partitioning

•

Worst-case partitioning

– One region has one element and the other has n – 1 elements

– Maximally unbalanced

•

T(n) = T(1) + T(n – 1) + n,

T(1) = (1)

n

1

Recurrence: q=1

1

n

n- 2

1

n- 2

n- 3

1

T(n) = T(n – 1) + n

=

n

n

n- 1

n- 1

3

2

1

n

n k 1 ( n ) ( n 2 ) ( n 2 )

k 1

1

2

(n2)

When does the worst case happen?

38

Best Case Partitioning

•

Best-case partitioning

– Partitioning produces two regions of size n/2

•

Recurrence: q=n/2

T(n) = 2T(n/2) + (n)

T(n) = (nlgn) (Master theorem)

39

36

Performance of Quicksort

•

Average case

– All permutations of the input numbers are equally likely

– On a random input array, we will have a mix of well balanced

and unbalanced splits

– Good and bad splits are randomly distributed across throughout

the tree

n

combined partitioning cost:

n

2n-1 = (n)

1

n- 1

(n – 1)/2 + 1

(n – 1)/2

(n – 1)/2

Alternate of a good

and a bad split

•

partitioning cost:

n = (n)

(n – 1)/2

Nearly well

balanced split

Running time of Quicksort when levels alternate

between good and bad splits is O(nlgn)

43

37

Strassen's Matrix Multiplication Algorithm

Write a threaded code to multiply two random matrices using

Strassen's Algorithm. The application will generate two matrices

A(M,P) and B(P,N), multiply them together using

(1) a sequential method and then

(2) via Strassen's Algorithm resulting in C(M,N). The application

should then compare the results of the two multiplications to

ensure that the Strassen's results match the sequential

computations.

The input to the application comes from the command line. The

input will be 3 integers describing the sizes of the matrices to be

used: M, N, and P. Code restrictions: A very simple, serial version

of the application, written in C, will be available here. This source

file should be used as a starting point. Your entry should keep the

body of the main function, the matrix generation function, the

sequential multiplication code, and the function to compare the

two matrix product results. Changes needed for implementation in

a different language are permitted. You are also allowed to

change the memory allocation and other code to thread the

Strassen's computations. (It would be a good idea to document

the changes and the reason for such changes in your solution

write-up.) After the needed changes, your submitted solution must

use some form of Strassen's Algorithm to compute the second

matrix multiplication result.

Timing: The time for execution of the Strassen's Algorithm will be

used for scoring. Each

submission should include timing code to measure and print this

time to stdout. If not, the total execution time will be used.

38

Matrix multiplication

Given two matrices AM*P and BP*N, the product of the two is a matrix CM*N which is

computed as

follows:

void seqMatMult(int m, int n, int p, double** A, double** B,

double** C) {

for (int i = 0; i < m; i++)

for (int j = 0; j < n; j++) {

C[i][j] = 0.0;

for (int k = 0; k < p; k++)

C[i][j] += A[i][k] * B[k][j];

}

}

To calculate the matrix product C = AB, Strassen's algorithm partitions the data to

reduce the

number of multiplications performed. This algorithm requires M, N and P to be powers

of 2.

The algorithm is described below.

1. Partition A, B and and C into 4 equal parts:

A=

A11 A12

A21 A22

B=

B11 B12

B21 B22

C=

C11 C12

C21 C22

2. Evaluate the intermediate matrices:

M1 = (A11 + A22) (B11 + B22)

M2 = (A21 + A22) B11

M3 = A11 (B12 – B22)

M4 = A22 (B21 – B11)

M5 = (A11 + A12) B22

M6 = (A21 – A11) (B11 + B12)

M7 = (A12 – A22) (B21 + B22)

3. Construct C using the intermediate matrices:

C11 = M1 + M4 – M5 + M7

C12 = M3 + M5

C21 = M2 + M4

C22 = M1 – M2 + M3