14 Evaluation Process and Timeline

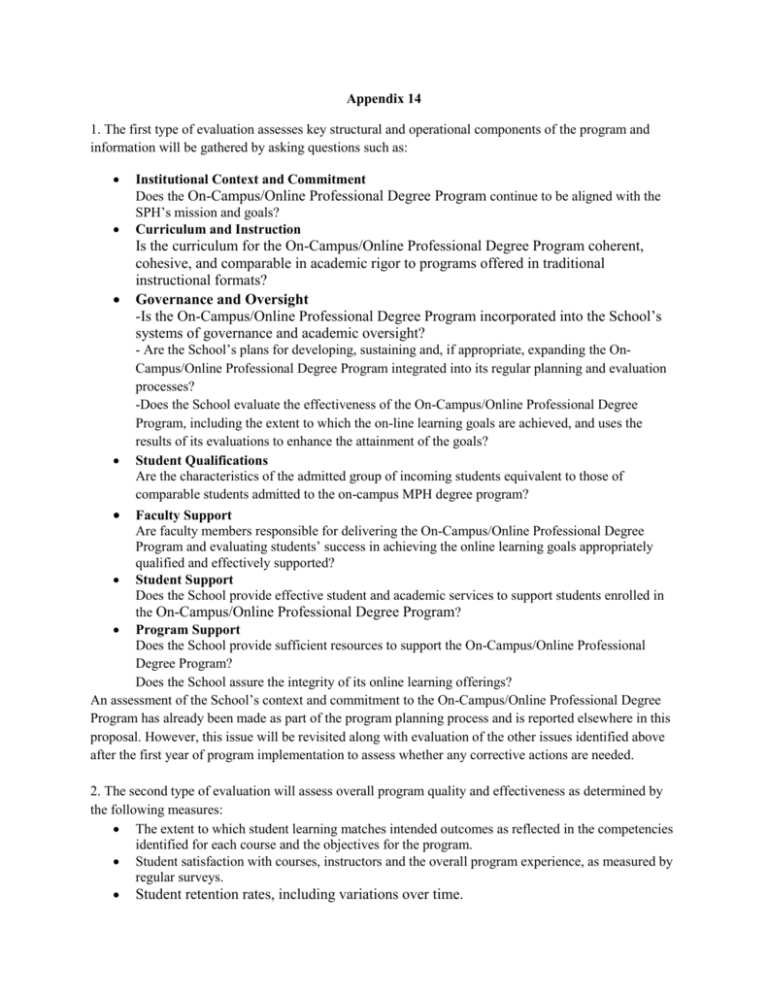

Appendix 14

1. The first type of evaluation assesses key structural and operational components of the program. Information will be gathered by asking questions such as the following:

Institutional Context and Commitment
- Does the On-Campus/Online Professional Degree Program continue to be aligned with the SPH's mission and goals?

Curriculum and Instruction
- Is the curriculum for the On-Campus/Online Professional Degree Program coherent, cohesive, and comparable in academic rigor to programs offered in traditional instructional formats?

Governance and Oversight
- Is the On-Campus/Online Professional Degree Program incorporated into the School's systems of governance and academic oversight?
- Are the School's plans for developing, sustaining, and, if appropriate, expanding the On-Campus/Online Professional Degree Program integrated into its regular planning and evaluation processes?
- Does the School evaluate the effectiveness of the On-Campus/Online Professional Degree Program, including the extent to which the online learning goals are achieved, and use the results of its evaluations to enhance the attainment of those goals?

Student Qualifications
- Are the characteristics of the admitted group of incoming students equivalent to those of comparable students admitted to the on-campus MPH degree program?

Faculty Support
- Are the faculty members responsible for delivering the On-Campus/Online Professional Degree Program and for evaluating students' success in achieving the online learning goals appropriately qualified and effectively supported?

Student Support
- Does the School provide effective student and academic services to support students enrolled in the On-Campus/Online Professional Degree Program?

Program Support
- Does the School provide sufficient resources to support the On-Campus/Online Professional Degree Program?
- Does the School assure the integrity of its online learning offerings?
An assessment of the School's context and commitment to the On-Campus/Online Professional Degree Program has already been made as part of the program planning process and is reported elsewhere in this proposal. However, this issue will be revisited, along with the other issues identified above, after the first year of program implementation to determine whether any corrective actions are needed.

2. The second type of evaluation will assess overall program quality and effectiveness using the following measures:
- The extent to which student learning matches intended outcomes, as reflected in the competencies identified for each course and the objectives for the program.
- Student satisfaction with courses, instructors, and the overall program experience, as measured by regular surveys.
- Student retention rates, including variations over time.
- Measures of the extent to which the program's students make appropriate use of library and learning resources.
- Measures of student competence in fundamental skills such as communication, comprehension, and analysis.
- The extent to which student expectations for job skills are met.
- Assessment and tracking of job placement and career advancement relative to the expectations of candidates and program administration.
- The extent to which the applicant pool reflects the intended target audience of working public health professionals.
- Assessment by employers of the preparation of candidates for needed roles and job functions.
- Faculty satisfaction with program support for instructional excellence, as measured by regular surveys and by formal and informal peer-review processes.
- Faculty engagement in continuously improving the program, as measured by the actual number of course refreshments and revisions completed each year compared with the expected number. Student and staff surveys will also probe for measurable elements of faculty engagement.
- The extent to which the content and scope of the program meet or exceed the quality standards set for the on-campus MPH instructional program.
- Assessment of whether the delivery of, and the student and faculty services in, this program meet external CEPH accreditation standards.
- The extent to which the interaction and learning accomplished in this program are equivalent to or higher than those of the comparable on-campus MPH program.
- How the quality of academic preparation of applicants to the OOP-MPH compares with that of the residential MPH applicant pool.

The evaluation will be conducted by an independent vendor, and the planned evaluation reports will be produced and made widely available at five points in the process of serving the first two cohorts of this program:
1. Prior to initial delivery: evaluation of program content and instructional design; technical infrastructure and services to support course development and delivery; projected start-up budget compared with actual start-up costs.
2. At the time of admission of students: comparability of student characteristics with the on-campus cohort; actual enrollment versus target for each semester.
3. At the end of one year: qualitative and quantitative assessments of students' progress and learning outcomes; interim assessment of program quality; review of budgeted versus actual costs and resulting adjustments (variance analysis).
4. End of year three (graduation of first cohort): characteristics of graduating students; persistence rates; quality of learning outcomes; enrollment and budget variances.
5. One year after graduation: qualitative and quantitative (survey) assessments of the relevance of student learning to practice and professional lives; impact of the MPH on promotions and new job placements; any gaps in skill acquisition that students have identified.

Timeline summary (the numbers in the table correspond to the numbered reporting points above):
| Date | Evaluation report(s) |
|------------|------|
| Aug 2011 | 1* |
| April 2012 | 2 |
| Oct 2012 | 3 |
| April 2013 | 2 |
| Jan 2014 | 4 |
| April 2014 | 2 |
| Feb 2015 | 4 & 5 |

The findings will be reported to the Deans of SPH and UNEX and to the SPH Faculty Council for review. Recommendations from the Faculty Council will be shared with the Deans and the executive leadership of the program (the Faculty Director and UNEX counterparts). The executive leadership will propose specific plans to act on the recommendations and will develop a monitoring protocol to track progress on implemented changes. The Faculty Council will require a progress report from the Dean and the Faculty Director within 6 months to ensure that appropriate actions have been taken.